Imagine you are working on a spam detection system for emails. Your goal is to classify incoming emails as either "spam" or "not spam." For this, you use logistic regression because it is well-suited for binary classification problems. Let’s break down the process step by step:

1. Defining Features: First, you identify the features that may influence whether an email is spam. These features could include:

- The presence of specific words (e.g., "free," "offer").

- The frequency of exclamation marks or dollar signs.

- The sender's email address domain.

- Whether the email contains links or attachments.

2. Assigning Labels: You create a dataset where each email is labeled as:

- 1 (spam) for emails marked as spam.

- 0 (not spam) for emails not marked as spam.

3. Model Training: The logistic regression model learns a linear relationship between the features and the log-odds of the email being spam. It calculates coefficients for each feature to determine their influence on the outcome. For instance:

- A higher frequency of the word "free" might increase the probability of an email being spam.

- Emails from a known trusted domain might decrease the probability.

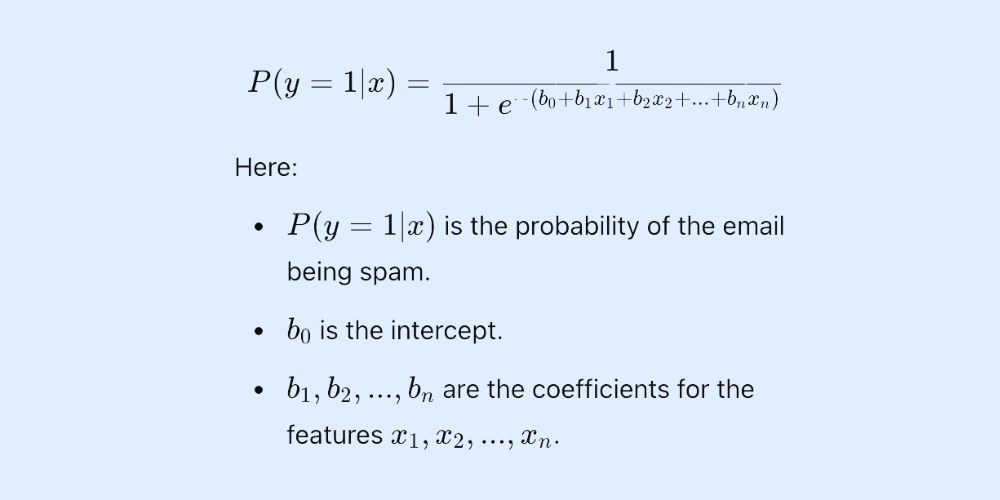

4. Calculating Probabilities: For each email, the model calculates the probability of it being spam using the logistic (sigmoid) function. This function ensures the output is a value between 0 and 1:

5. Making Predictions: After calculating the probability for a new email, the model applies a threshold (commonly 0.5).

- If P(y=1∣x) > 0.5P(y=1|x) > 0.5P(y=1∣x) > 0.5, the email is classified as spam.

- Otherwise, it is classified as not spam.

6. Interpreting Results: The coefficients can be analyzed to understand the impact of each feature. For example:

- A positive coefficient for "contains the word 'offer'" suggests that this feature increases the likelihood of an email being spam.

- A negative coefficient for "sent from a known domain" implies this feature decreases the likelihood.

This process highlights the simplicity and interpretability of logistic regression. It not only classifies emails but also provides insights into which features most strongly influence the classification.

Assumptions of Logistic Regression

To ensure the effectiveness of logistic regression, several key assumptions should be met:

- Binary or Categorical Dependent Variable: The outcome variable should be binary or categorical for standard logistic regression.

- Linearity of Predictors: The log-odds of the outcome should have a linear relationship with the independent variables.

- Independence of Observations: Observations in the dataset should be independent of each other.

- No Multicollinearity: Independent variables should not be highly correlated with each other.

- Large Sample Size: Logistic regression performs better with larger datasets to ensure stable estimates.

Types of Logistic Regression

- Binary Logistic Regression: Used when the dependent variable has two possible outcomes, such as yes/no or true/false.

- Multinomial Logistic Regression: Applied when the dependent variable has three or more unordered categories.

- Ordinal Logistic Regression: Used when the dependent variable has three or more ordered categories, such as low, medium, and high.

Comments

Add comment