- What Is A Compiler?

- What Are The Phases Of A Compiler?

- Lexical Analysis – 1st Phase Of Compiler

- Syntax Analysis – 2nd Phase Of Compiler

- Semantic Analysis – 3rd Phase Of Compiler

- Intermediate Code Generation – 4th Phase Of Compiler

- Code Optimization – 5th Phase Of Compiler

- Code Generation – 6th Phase Of Compiler

- Error Handling In Compiler Phases

- Symbol Table & Symbol Table Management

- Phases Of Compiler Design (Step-by-Step Code Progression)

- Applications Of A Compiler

- Conclusion

- Frequently Asked Questions

6 Phases Of Compiler Explained With Examples & Visual Flowchart

Ever wondered how a simple piece of code turns into a fully functional program? It’s not magic! It’s the compiler working behind the scenes, translating human-readable instructions into machine code. But this translation isn’t a one-step process. It goes through multiple structured phases, each refining the code to ensure correctness and efficiency.

In this article, we will break down the phases of a compiler, exploring how your code progresses from raw text to executable instructions. Let’s get going!

What Is A Compiler?

A compiler is a software program that converts high-level source code into low-level machine code or an intermediate representation.

- It enables computers to understand and execute instructions written in programming languages like C, C++, and Java.

- The compilation process involves multiple steps, collectively known as the phases of a compiler.

- Unlike an interpreter, which executes code line by line, a compiler processes the entire program in one go.

- It performs error checking, optimizations, and translation before generating an executable file.

- This approach generally results in faster execution times compared to interpreted languages.

Each phase of a compiler has a specific role, from analyzing the structure of the code to optimizing and generating the final machine instructions. They ensure that the code is syntactically and semantically correct before execution. We will explore these compiler phases in detail in the following sections.


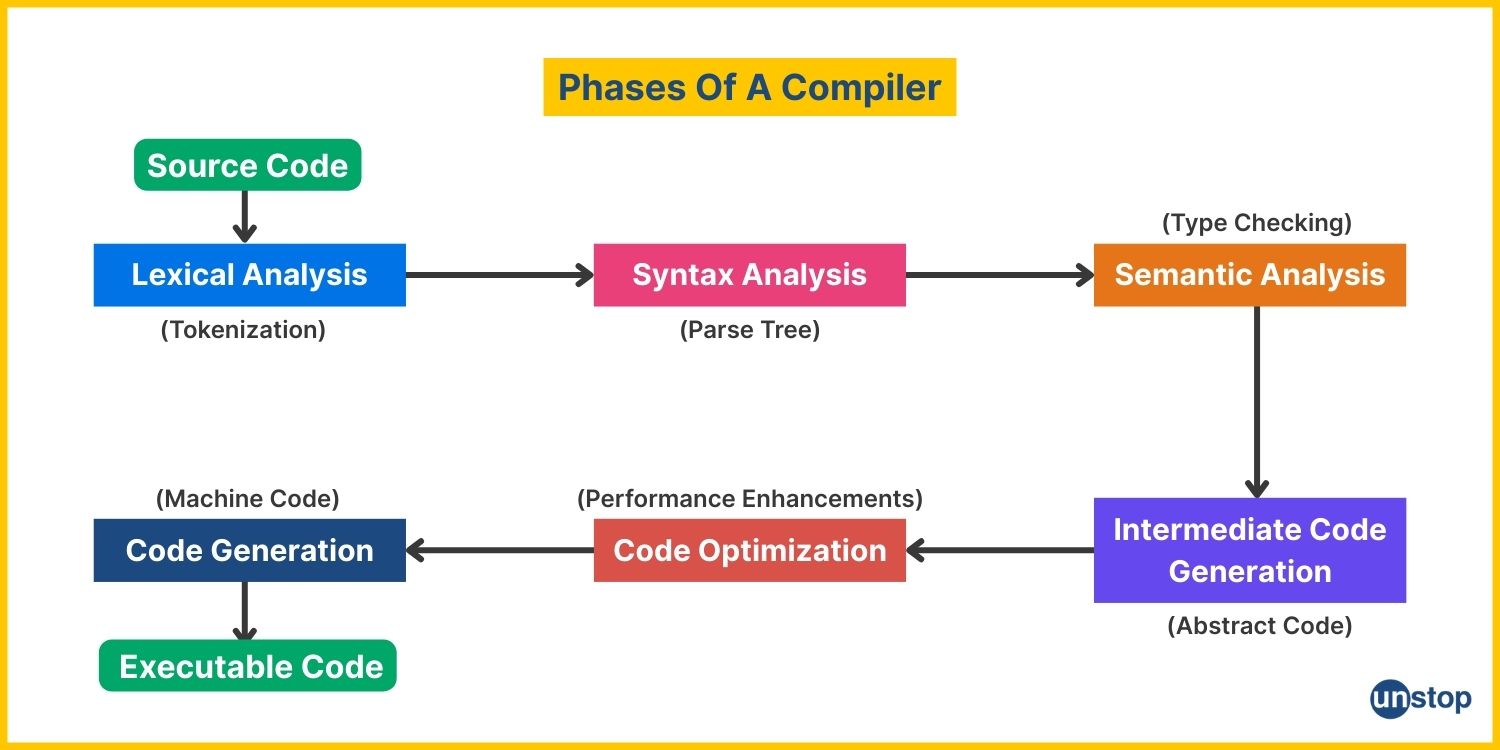

What Are The Phases Of A Compiler?

A compiler works in multiple stages, each performing a specific task to transform source code into machine code. Here are the six main phases of a compiler:

- Lexical Analysis– Breaks the source code into meaningful tokens.

- Syntax Analysis (Parsing)– Checks the structure of the code according to grammar rules.

- Semantic Analysis– Ensures logical correctness and detects type errors.

- Intermediate Code Generation– Converts code into an intermediate representation (IR).

- Code Optimization– Improves efficiency by refining the IR.

- Code Generation– Translates the optimized IR into final machine code.

The following diagram illustrates how these phases work together:


Lexical Analysis – 1st Phase Of Compiler

The Lexical Analysis phase is the first step in compilation. In this phase, the compiler scans the source code and breaks it into smaller, meaningful units called tokens. This process is performed by a lexical analyzer (lexer or scanner), which removes unnecessary spaces and comments in the code and recognizes keywords, operators, identifiers, and literals.

How Lexical Analysis Works

- Reads the source code character by character.

- Groups characters into lexemes (meaningful sequences).

- Converts lexemes into tokens (e.g., keywords, identifiers, operators).

- Discards whitespace and comments, which carry no meaning for the later phases.

- Passes the tokens to the Syntax Analysis phase.
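The steps above can be sketched as a small hand-written scanner. This is only an illustration under stated assumptions: the names `TokenType`, `Token`, `isKeyword`, and `tokenize` are invented for this example, only a handful of keywords and single-character symbols are recognized, and comment skipping is left out to keep it short.

```cpp
#include <cassert>
#include <cctype>
#include <string>
#include <vector>

// Token categories matching the ones used in this article (names are illustrative).
enum class TokenType { Keyword, Identifier, Operator, Literal, Separator, Unknown };

struct Token {
    TokenType type;
    std::string lexeme;
};

// Hypothetical helper: this sketch knows only a few C++ keywords.
inline bool isKeyword(const std::string& word) {
    return word == "int" || word == "float" || word == "void" || word == "return";
}

// Reads the source character by character and groups characters into tokens.
inline std::vector<Token> tokenize(const std::string& source) {
    std::vector<Token> tokens;
    std::size_t i = 0;
    while (i < source.size()) {
        unsigned char ch = static_cast<unsigned char>(source[i]);
        if (std::isspace(ch)) { ++i; continue; }  // whitespace is discarded
        std::size_t start = i;
        if (std::isalpha(ch) || ch == '_') {
            // Keyword or identifier: letters, digits, underscores.
            while (i < source.size() &&
                   (std::isalnum(static_cast<unsigned char>(source[i])) || source[i] == '_')) {
                ++i;
            }
            std::string word = source.substr(start, i - start);
            tokens.push_back({isKeyword(word) ? TokenType::Keyword : TokenType::Identifier, word});
        } else if (std::isdigit(ch)) {
            // Integer literal: a run of digits.
            while (i < source.size() && std::isdigit(static_cast<unsigned char>(source[i]))) {
                ++i;
            }
            tokens.push_back({TokenType::Literal, source.substr(start, i - start)});
        } else {
            // Single-character symbol; anything unrecognized becomes Unknown.
            ++i;
            std::string symbol(1, static_cast<char>(ch));
            TokenType type = TokenType::Unknown;
            if (std::string("=+-*/<>").find(symbol) != std::string::npos) {
                type = TokenType::Operator;
            } else if (std::string(";,(){}").find(symbol) != std::string::npos) {
                type = TokenType::Separator;
            }
            tokens.push_back({type, symbol});
        }
    }
    return tokens;
}
```

Calling `tokenize("int x = 10;")` yields five tokens in source order. A character such as `@` comes back as `Unknown`, which is where a real lexer would report a lexical error.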

Example Of Lexical Analysis

Let’s take a simple C++ statement:

int x = 10;

After lexical analysis, the following tokens are generated:

| Lexeme | Token Type |
| --- | --- |
| int | Keyword |
| x | Identifier |
| = | Operator |
| 10 | Literal |
| ; | Separator |

The lexical analyzer discards whitespace and comments, producing a stream of tokens that the parser (syntax analysis phase) will process next. Let’s move on to Syntax Analysis, where the compiler checks whether these tokens form a valid structure.

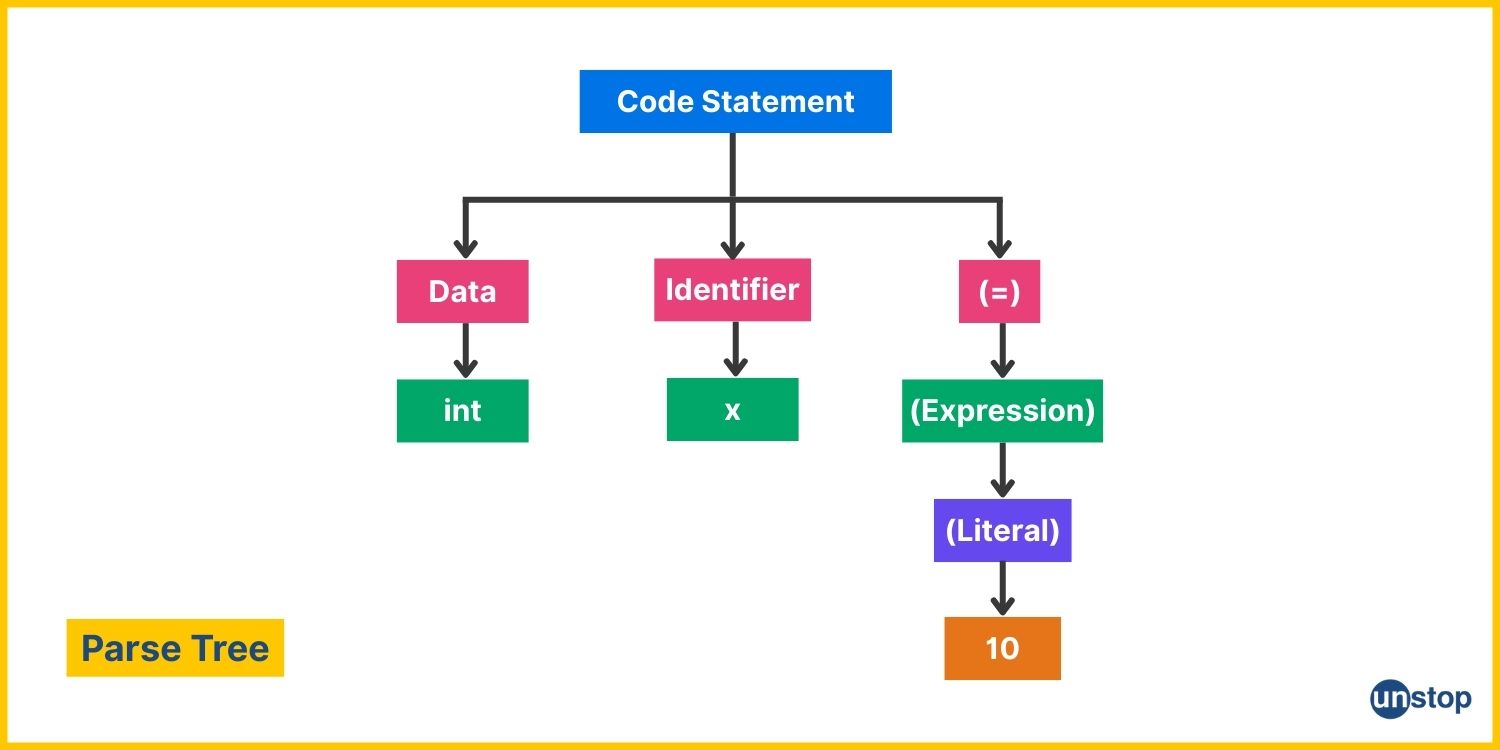

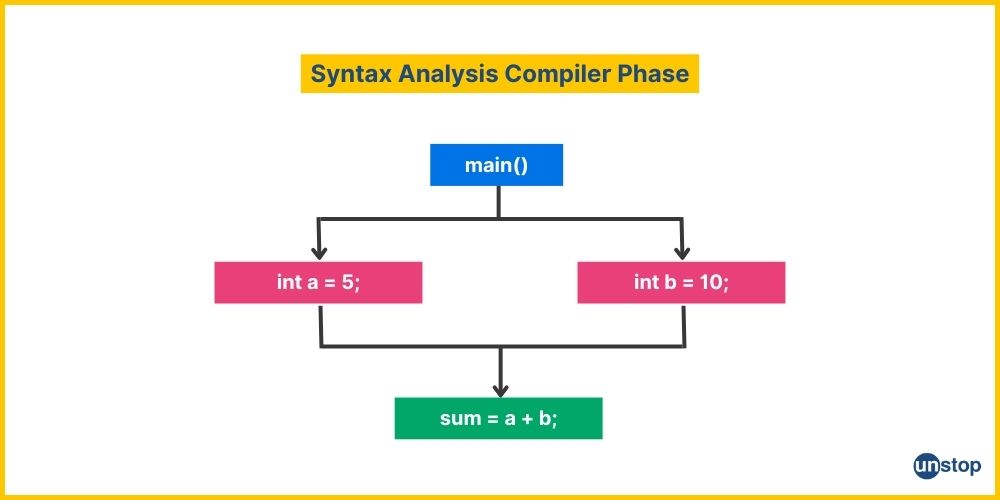

Syntax Analysis – 2nd Phase Of Compiler

Once the Lexical Analysis phase converts source code into tokens, the Syntax Analysis phase (also called Parsing) ensures that these tokens follow the correct grammatical structure of the programming language.

How Syntax Analysis Works

- Takes tokens from the Lexical Analyzer.

- Checks if they form a syntactically correct structure using grammar rules.

- Builds a parse tree to represent the structure.

- Reports syntax errors, such as missing semicolons or incorrect keyword usage.

- Passes the structured output to the Semantic Analysis phase.

Example Of Syntax Analysis

Consider the following statement in C++ language:

int x = 10;

From Lexical Analysis, we get these tokens:

| Token | Type |
| --- | --- |
| int | Keyword |
| x | Identifier |
| = | Operator |
| 10 | Literal |
| ; | Separator |

Now, Syntax Analysis checks if these tokens form a valid sentence based on a simplified grammar rule (the real C++ grammar for declarations is far more general):

<declaration> → <datatype> <identifier> = <literal> ;

Since the given statement follows this rule, it is syntactically correct.
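As a rough sketch, the simplified rule above can be checked by consuming the tokens one at a time and stopping at the first one that does not fit. The names `Kind`, `Tok`, and `parseDeclaration` are made up for this example, and the error wording is an assumption, not the output of any real compiler.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum class Kind { Keyword, Identifier, Operator, Literal, Separator };

struct Tok {
    Kind kind;
    std::string text;
};

// Checks the simplified rule:
//   <declaration> -> <datatype> <identifier> = <literal> ;
// Returns true on success; on failure, `error` describes the first problem found.
inline bool parseDeclaration(const std::vector<Tok>& toks, std::string& error) {
    std::size_t pos = 0;

    // Consumes one token if it has the expected kind (and text, when given).
    auto expect = [&](Kind kind, const std::string& text, const std::string& what) {
        if (pos >= toks.size()) {
            error = "Missing " + what;
            return false;
        }
        const Tok& t = toks[pos];
        if (t.kind != kind || (!text.empty() && t.text != text)) {
            error = "Unexpected token '" + t.text + "', expected " + what;
            return false;
        }
        ++pos;
        return true;
    };

    if (!expect(Kind::Keyword, "", "datatype")) return false;
    if (!expect(Kind::Identifier, "", "identifier")) return false;
    if (!expect(Kind::Operator, "=", "'='")) return false;
    if (!expect(Kind::Literal, "", "literal")) return false;
    if (!expect(Kind::Separator, ";", "';'")) return false;
    if (pos != toks.size()) {
        error = "Unexpected token '" + toks[pos].text + "' after ';'";
        return false;
    }
    assert(pos == toks.size());  // every token was consumed by the rule
    error.clear();
    return true;
}
```

For the tokens of `int x = 10;` the function returns true. For the tokens of `int = x 10` it stops at `=` because an identifier was expected there. This sketch reports only the first error, whereas production parsers usually try to recover and keep going.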

Syntax Errors Example

If the statement were this instead: int = x 10

The parser will flag errors like:

- Unexpected token = (because an identifier must come after int).

- Missing semicolon.

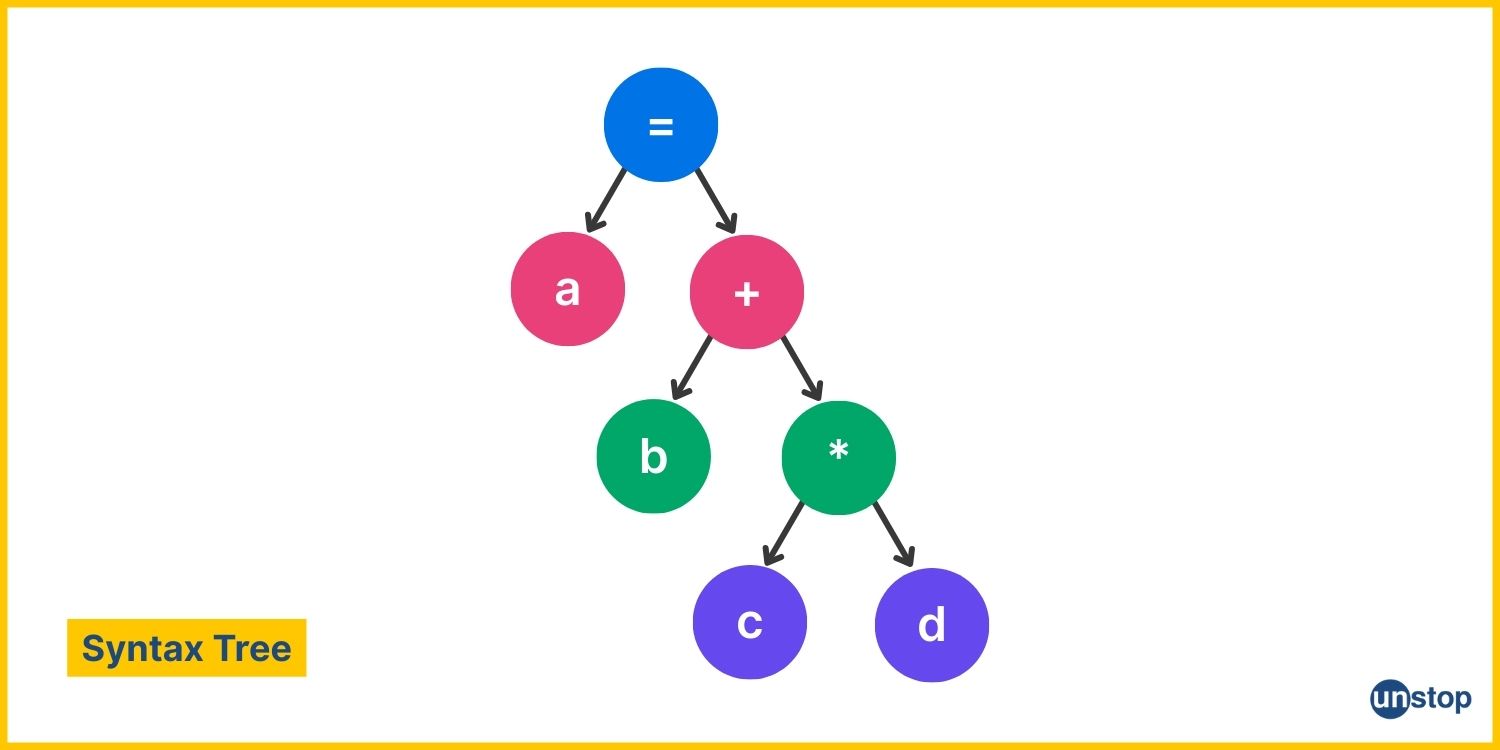

Visual Representation

A Parse Tree or Syntax Tree is created to represent the structure:

- The root node represents the entire statement.

- Child nodes represent components (data type, identifier, operator, etc.).

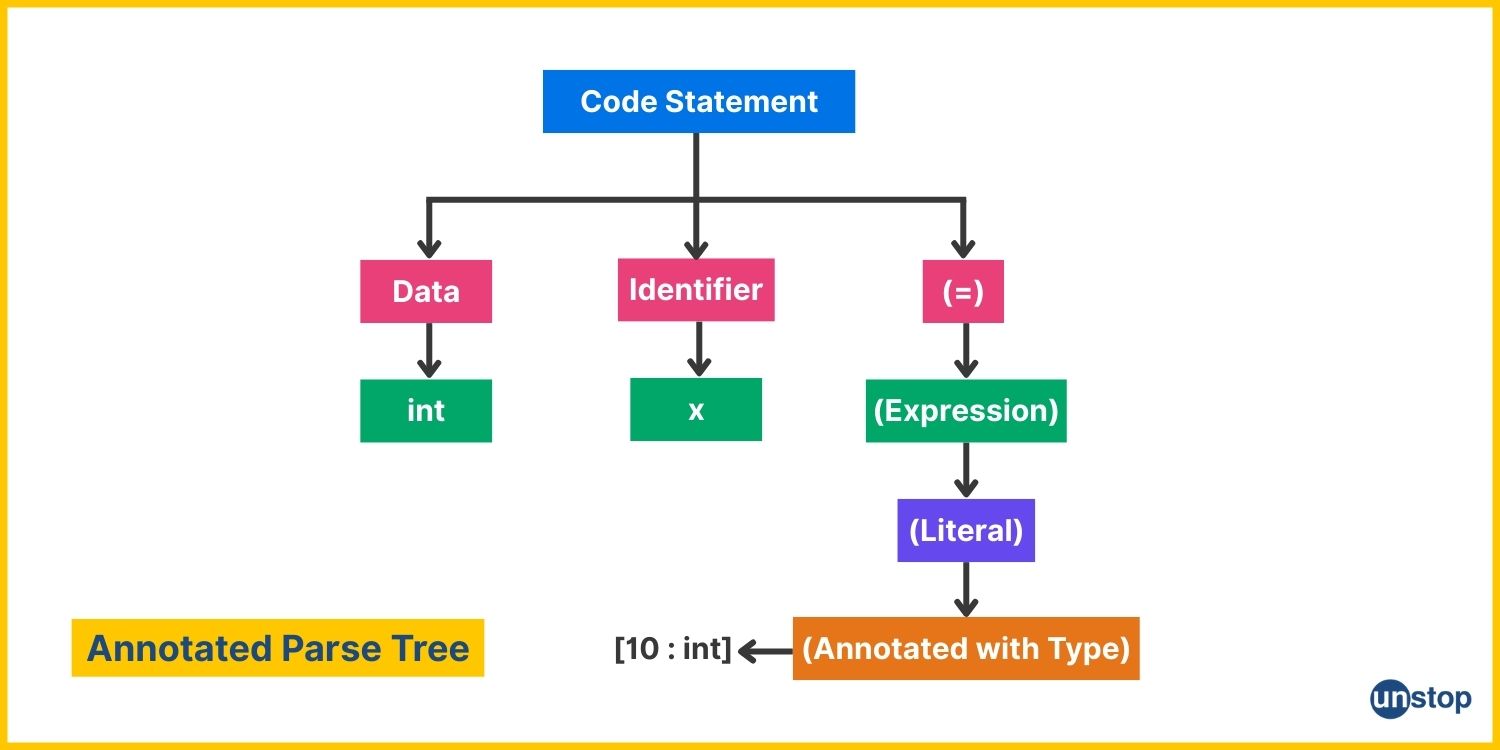

Semantic Analysis – 3rd Phase Of Compiler

Once Syntax Analysis verifies that the code follows grammatical rules, the Semantic Analysis phase ensures that the meaning of the statements is correct. This phase of compilation is important since even syntactically correct code can be meaningless if it violates semantic rules.

How Semantic Analysis Works

- Type Checking– Ensures variables and operations are used correctly (e.g., no adding integers to strings).

- Scope Resolution– Ensures variables are declared before use and accessed within valid scopes.

- Function & Operator Checks– Ensures function calls match their definitions and operators are used properly.

- Semantic Error Detection– Flags errors like type mismatches, undeclared variables, or invalid operations.
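To make the type-checking step concrete, here is a minimal sketch that compares a declared type with the type of a literal initializer. The helper names (`literalType`, `typeName`, `checkInitializer`) and the diagnostic text are assumptions for illustration. It handles only `int` and `string` and ignores implicit conversions, which a real C++ compiler must consider.

```cpp
#include <cassert>
#include <cctype>
#include <string>

enum class Type { Int, String, Unknown };

// Infers the type of a literal from its spelling (hypothetical helper).
inline Type literalType(const std::string& literal) {
    if (literal.size() >= 2 && literal.front() == '"' && literal.back() == '"') {
        return Type::String;
    }
    if (literal.empty()) return Type::Unknown;
    for (char c : literal) {
        if (!std::isdigit(static_cast<unsigned char>(c))) return Type::Unknown;
    }
    return Type::Int;
}

inline std::string typeName(Type t) {
    switch (t) {
        case Type::Int: return "int";
        case Type::String: return "string";
        default: return "unknown";
    }
}

// Returns an empty string when the initializer matches the declared type,
// otherwise a diagnostic message.
inline std::string checkInitializer(const std::string& declaredType,
                                    const std::string& name,
                                    const std::string& literal) {
    assert(!name.empty());
    Type expected = Type::Unknown;
    if (declaredType == "int") expected = Type::Int;
    else if (declaredType == "string") expected = Type::String;

    Type actual = literalType(literal);
    if (expected == Type::Unknown || actual == Type::Unknown) {
        return "Unsupported type or literal in declaration of '" + name + "'";
    }
    if (expected != actual) {
        return "Type mismatch: cannot initialize " + declaredType + " '" + name +
               "' with a " + typeName(actual) + " literal";
    }
    return "";
}
```

With this sketch, `checkInitializer("int", "x", "10")` returns an empty string, while passing the literal `"hello"` (including its quotes) for an `int` produces a type mismatch message.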

Example Of Semantic Analysis

| ✅ Valid Statement | ❌ Semantic Error Example |
| --- | --- |
| int x = 10; | int x = "hello"; //Type mismatch: Cannot assign a string to an int |
| Here, the type (int) matches the assigned value (10), so no semantic errors occur. | Even though this is syntactically correct, it is semantically incorrect because "hello" is a string, not an integer. |

Visual Representation

The Semantic Analyzer builds an annotated syntax tree: an Abstract Syntax Tree (AST) decorated with semantic information such as data types, scope details, and references to the symbol table. It resembles a Parse Tree but omits purely grammatical detail. Representation of an annotated syntax tree for the valid statement int x = 10; is given below.

Key Differences from Syntax Analysis:

- The Literal node (10) is now annotated with its data type (int).

- Semantic errors would be caught here, ensuring no type mismatches or scope violations.


Intermediate Code Generation – 4th Phase Of Compiler

Once the Semantic Analysis phase ensures the code is meaningful and free of detectable errors, the compiler translates it into an Intermediate Representation (IR). This IR is not machine code yet, but a lower-level representation that is easier to optimize and to translate for multiple machine architectures.

How Intermediate Code Generation Works

- Converts high-level code into an abstract intermediate form.

- Simplifies complex expressions while keeping program logic intact.

- Enables platform independence, i.e., the same IR can be converted into machine code for different processors.

- Acts as a bridge between analysis phases (front end) and code generation (back end).

Common Forms Of Intermediate Code

- Three-Address Code (TAC)– Uses simple statements like x = y op z.

- Abstract Syntax Tree (AST)– A tree representation with operators and operands.

- Control Flow Graph (CFG)– Represents the program flow as nodes and edges.

Example Of Intermediate Code Generation

Say we begin with the C++ statement:

a = b + c * d;

Generated Three-Address Code (TAC):

t1 = c * d

t2 = b + t1

a = t2

What happens here is:

- The multiplication (*) is computed first and stored in t1.

- The addition (+) is performed and stored in t2.

- Finally, t2 is assigned to a.

- This makes it easier to optimize and convert to assembly/machine code.
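The walk-through above corresponds to a post-order traversal of the expression tree: code for the operands is emitted first, then one instruction for the operator. The sketch below assumes a hand-built tree; `Expr`, `leaf`, `binary`, and `TacGenerator` are illustrative names, not part of any compiler's API.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Expression tree node: a leaf holds a variable name, an inner node an operator.
struct Expr {
    std::string value;                  // variable name or operator symbol
    std::unique_ptr<Expr> left, right;  // both null for leaves
};

inline std::unique_ptr<Expr> leaf(const std::string& name) {
    auto e = std::make_unique<Expr>();
    e->value = name;
    return e;
}

inline std::unique_ptr<Expr> binary(const std::string& op,
                                    std::unique_ptr<Expr> l,
                                    std::unique_ptr<Expr> r) {
    auto e = std::make_unique<Expr>();
    e->value = op;
    e->left = std::move(l);
    e->right = std::move(r);
    return e;
}

struct TacGenerator {
    std::vector<std::string> code;  // emitted three-address instructions
    int nextTemp = 1;               // counter for fresh temporaries t1, t2, ...

    // Post-order walk: returns the name that holds the value of `e`.
    std::string emit(const Expr& e) {
        if (!e.left && !e.right) return e.value;  // leaf: just a variable
        assert(e.left && e.right);                // only binary operators here
        std::string l = emit(*e.left);
        std::string r = emit(*e.right);
        std::string temp = "t" + std::to_string(nextTemp++);
        code.push_back(temp + " = " + l + " " + e.value + " " + r);
        return temp;
    }

    // Emits code for `target = e`.
    void assign(const std::string& target, const Expr& e) {
        std::string result = emit(e);
        code.push_back(target + " = " + result);
    }
};
```

Building the tree for `b + c * d` as `binary("+", leaf("b"), binary("*", leaf("c"), leaf("d")))` and calling `assign("a", *tree)` produces the same three instructions listed above. Operator precedence is already encoded in the tree shape, so the generator itself needs no precedence rules.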

Visual Representation

The Intermediate Representation (IR) Tree for a = b + c * d; (Abstract Syntax Tree Format) is as shown below.

Here’s how it works:

- The multiplication operation (*) happens first (c * d).

- The addition arithmetic operation (+) happens next (b + (c * d)).

- The assignment operation (=) stores the result in a.

The intermediate code helps the compiler rearrange and optimize computations efficiently before generating actual machine code.

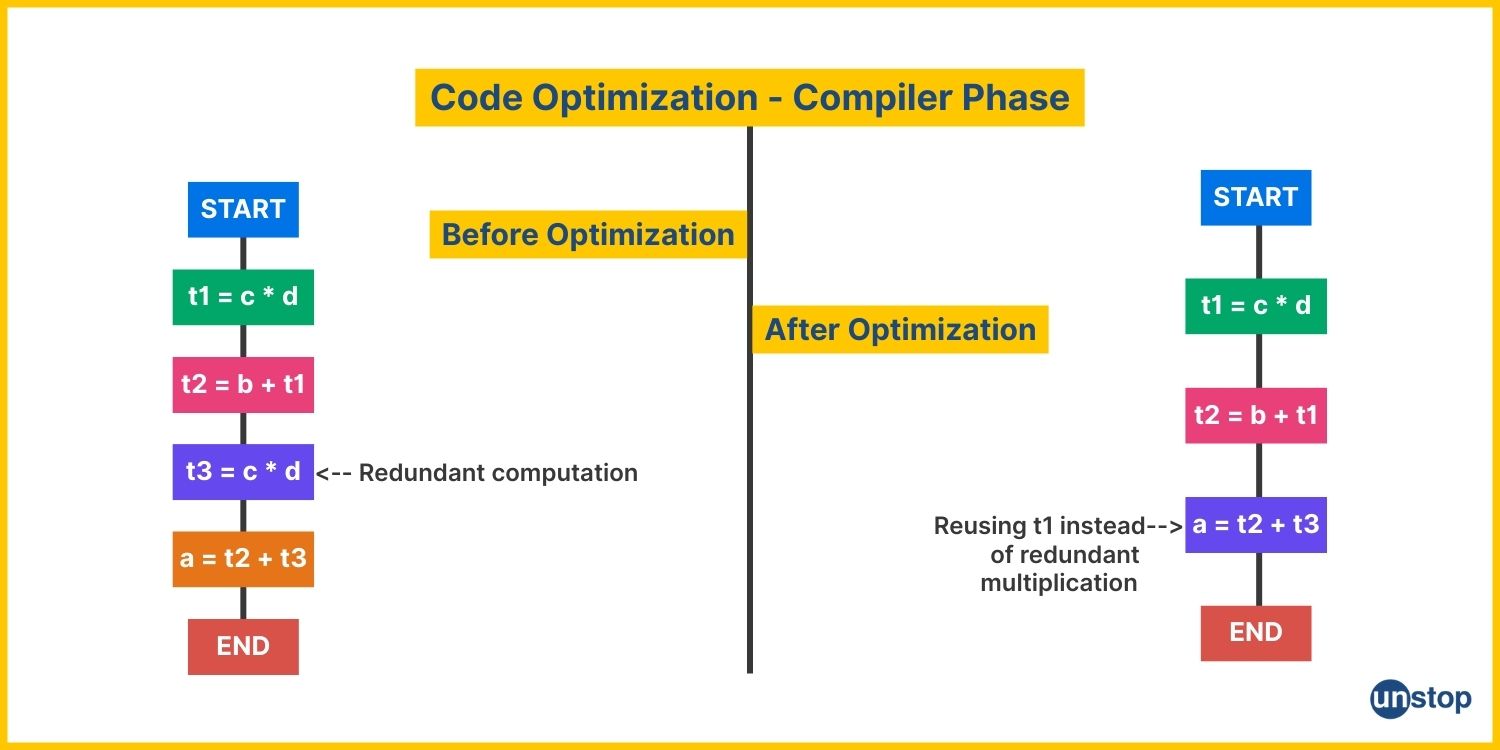

Code Optimization – 5th Phase Of Compiler

Now that we have Intermediate Code, the next step is Code Optimization. This means making the code faster, smaller, and more efficient without changing its meaning.

How Code Optimization Works

- Eliminates unnecessary computations (e.g., redundant calculations).

- Reduces memory usage by reusing variables or removing dead code.

- Improves execution speed by reordering instructions for efficiency.

- Preserves correctness, i.e., optimized code must behave exactly like the original.

Example Of Code Optimization

Code Before Optimization (Intermediate Code - TAC Format):

t1 = c * d

t2 = b + t1

t3 = c * d

a = t2 + t3

Issue: The expression c * d is computed twice.

After Optimization (Common Subexpression Elimination):

t1 = c * d

t2 = b + t1

a = t2 + t1 // Reusing t1 instead of recomputing c * d

Result: One multiplication is removed, making execution faster.
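A simplified version of this pass can be sketched for straight-line code. The sketch assumes that no operand is reassigned within the block and that temporaries are named `t` followed by digits. `Instr`, `isTemp`, and `eliminateCommonSubexpressions` are hypothetical names. It does not treat `c * d` and `d * c` as the same expression, which a real optimizer typically would.

```cpp
#include <cassert>
#include <cctype>
#include <map>
#include <string>
#include <tuple>
#include <vector>

// One three-address instruction: dest = lhs op rhs (op and rhs empty for a copy).
struct Instr {
    std::string dest, lhs, op, rhs;
};

// Assumption of this sketch: temporaries are named "t" followed by digits.
inline bool isTemp(const std::string& name) {
    if (name.size() < 2 || name[0] != 't') return false;
    for (std::size_t i = 1; i < name.size(); ++i) {
        if (!std::isdigit(static_cast<unsigned char>(name[i]))) return false;
    }
    return true;
}

// Local common subexpression elimination for a single basic block.
inline std::vector<Instr> eliminateCommonSubexpressions(const std::vector<Instr>& in) {
    // Maps (lhs, op, rhs) to the name that already holds that value.
    std::map<std::tuple<std::string, std::string, std::string>, std::string> seen;
    // Maps a removed temporary to the name that replaces it.
    std::map<std::string, std::string> alias;
    std::vector<Instr> out;

    auto resolve = [&](const std::string& name) {
        auto it = alias.find(name);
        return it == alias.end() ? name : it->second;
    };

    for (const Instr& ins : in) {
        assert(!ins.dest.empty());
        Instr cur{ins.dest, resolve(ins.lhs), ins.op, resolve(ins.rhs)};
        auto key = std::make_tuple(cur.lhs, cur.op, cur.rhs);
        auto found = seen.find(key);
        if (found != seen.end()) {
            if (isTemp(cur.dest)) {
                alias[cur.dest] = found->second;  // drop the redundant temporary
            } else {
                out.push_back({cur.dest, found->second, "", ""});  // keep user variable as a copy
            }
            continue;
        }
        seen[key] = cur.dest;
        out.push_back(cur);
    }
    return out;
}
```

Applied to the four instructions in the "before" listing, the pass drops `t3 = c * d` and rewrites the last instruction to use `t1`, matching the optimized listing.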

Visual Representation

The Optimization Phase can be represented as a Control Flow Graph (CFG), showing redundant operations being removed or merged. The diagram below represents the code flow by comparing the stages before and after the optimization of the code.

This optimization reduces CPU cycles and memory usage, making the program more efficient without affecting correctness.


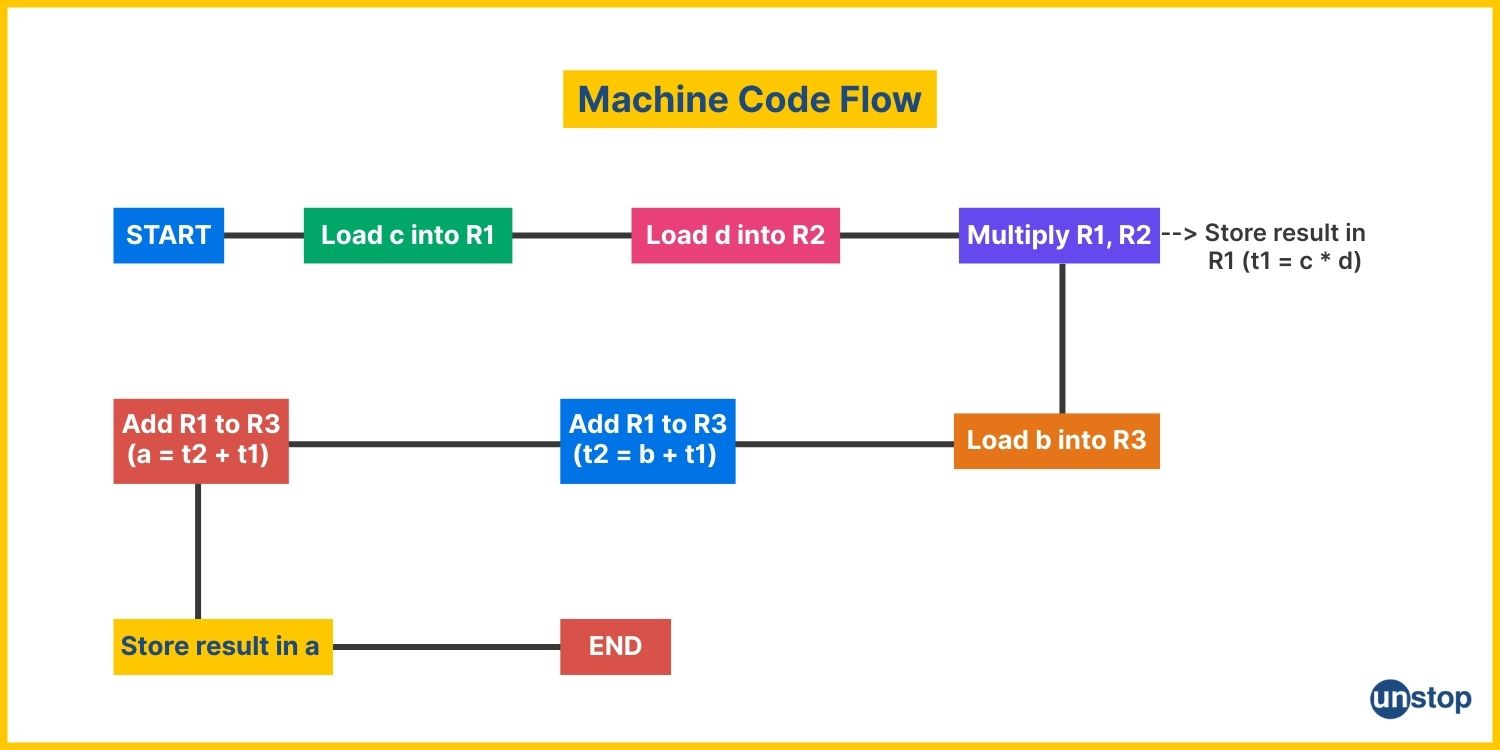

Code Generation – 6th Phase Of Compiler

Now that the code is optimized, the compiler translates the Intermediate Representation (IR) into Machine Code (binary instructions that the CPU can execute). This is the final output of the compilation process.

How Code Generation Works

- Instruction Selection– Converts IR into target-specific assembly instructions.

- Register Allocation– Assigns variables to CPU registers for faster access.

- Instruction Scheduling– Orders instructions for efficient execution.

- Memory Management– Manages stack and heap usage for variables.

Example Of Code Generation

Optimized Intermediate Code (TAC Format):

t1 = c * d

t2 = b + t1

a = t2 + t1

Generated Assembly-Like Code (simplified pseudo-assembly with generic registers R1 to R3, not actual x86 syntax):

MOV R1, c

MOV R2, d

MUL R1, R2 ; t1 = c * d

MOV R3, b

ADD R3, R1 ; t2 = b + t1

ADD R3, R1 ; a = t2 + t1

MOV a, R3

Here,

- MOV moves values into registers.

- MUL performs multiplication.

- ADD adds values.

- The result is stored in a, ready for execution.
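For comparison, the simplest possible code generator handles each three-address instruction in isolation: load the first operand into a register, apply the operation, and store the result. The sketch below does exactly that with a single register, so its output is longer than the hand-allocated listing above, which keeps values in R1 to R3. The names `Tac`, `mnemonic`, and `generate` and the pseudo-assembly syntax are assumptions for illustration.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// One three-address instruction: dest = lhs op rhs.
struct Tac {
    std::string dest;
    std::string lhs;
    char op;
    std::string rhs;
};

// Instruction selection: maps an operator to a pseudo-assembly mnemonic.
inline std::string mnemonic(char op) {
    switch (op) {
        case '+': return "ADD";
        case '-': return "SUB";
        case '*': return "MUL";
        case '/': return "DIV";
        default: throw std::invalid_argument("unsupported operator");
    }
}

// Naive code generation: every instruction uses R1 and writes its result back
// to memory, so no register allocation is needed.
inline std::vector<std::string> generate(const std::vector<Tac>& program) {
    std::vector<std::string> out;
    for (const Tac& ins : program) {
        assert(!ins.dest.empty());
        out.push_back("MOV R1, " + ins.lhs);                  // load first operand
        out.push_back(mnemonic(ins.op) + " R1, " + ins.rhs);  // apply the operation
        out.push_back("MOV " + ins.dest + ", R1");            // store the result
    }
    return out;
}
```

The extra loads and stores this produces are what register allocation and instruction scheduling aim to remove.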

Visual Representation Of Machine Code Flow

The Code Generation phase of the compiler converts an optimized IR into machine instructions in a linear sequence. The diagram below illustrates the flow of code in this phase of the compiler.

This final machine code is now executable by the CPU, completing the compilation process!


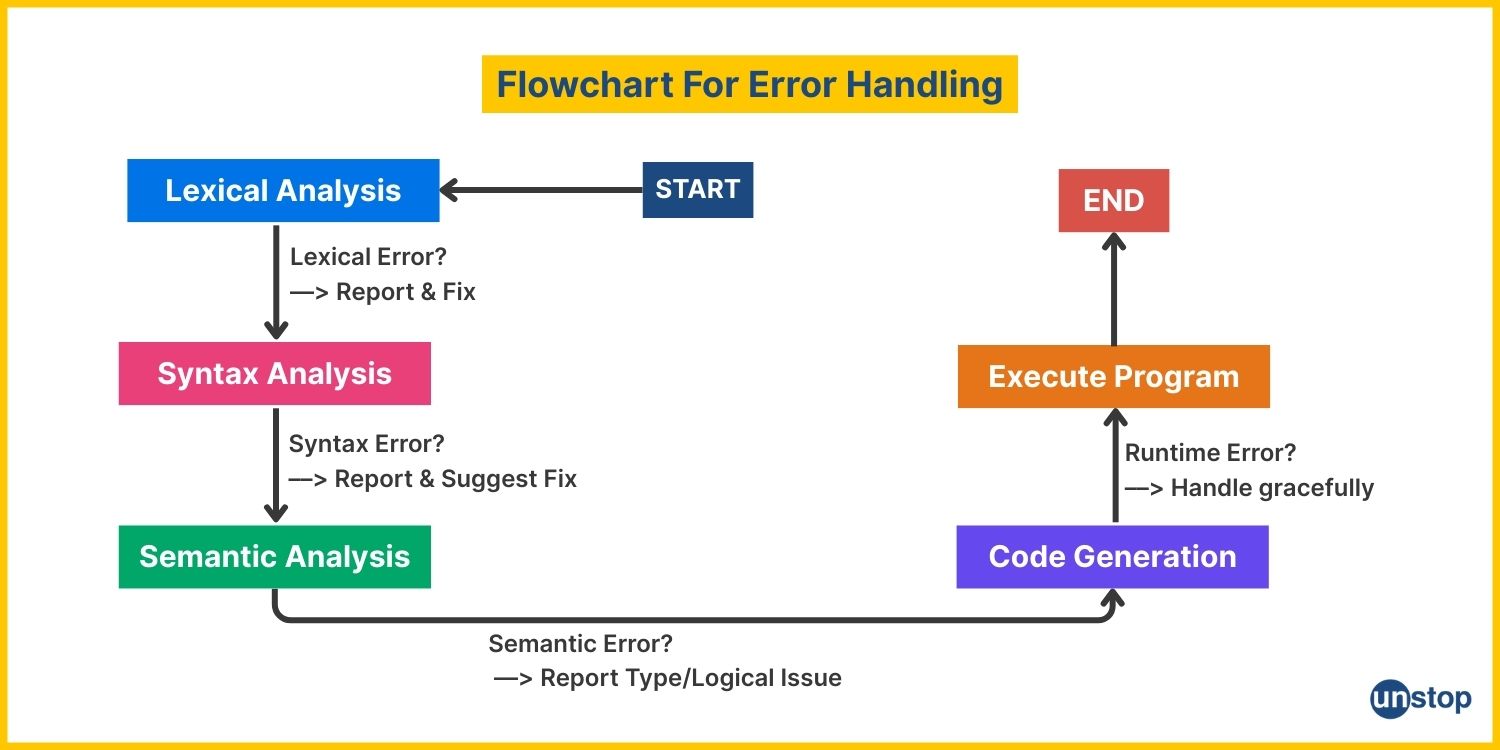

Error Handling In Compiler Phases

Errors can occur at any stage of the compilation process. A good compiler must detect, report, and sometimes recover from these errors to ensure smooth execution.

Types Of Errors In Compilation

- Lexical Errors– Mistakes in token formation (e.g., invalid characters, misspelled keywords).

- Syntax Errors– Violations of grammar rules (e.g., missing semicolons, mismatched parentheses).

- Semantic Errors– Meaning-related issues (e.g., type mismatches, undefined variables).

- Runtime Errors– Errors that occur during program execution (e.g., division by zero).

- Logical Errors– The program runs but produces incorrect results.

Error Handling Mechanisms In Compiler Phases

1. Lexical Analysis Phase (Detects invalid tokens)

int num@ = 10; // Invalid character '@'

Error: @ is not allowed in variable names.

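Handling: The compiler reports the invalid character. As a recovery strategy, a lexer may skip it and continue scanning so that further errors can be reported in the same run.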

2. Syntax Analysis Phase (Detects syntax mistakes)

if (x > 10 { cout << "Hello"; } // Missing closing parenthesis

Error: Syntax is incorrect due to a missing ).

Handling: The compiler reports where the error was detected and, in many cases, what token it expected (here, a closing parenthesis).

3. Semantic Analysis Phase (Detects meaning-related errors)

int x = "Hello"; // Type mismatch: assigning string to int

Error: x expects an integer but got a string.

Handling: The compiler throws a type mismatch error.

4. Runtime Error Handling

int a = 5 / 0; // Division by zero

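Error: Integer division by zero is undefined behavior in C++. On many platforms the program is terminated with an arithmetic exception at runtime.

Handling: Compilers often warn when the divisor is a constant zero, as it is here. In general, the program itself must check the divisor before dividing.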

Visual Representation Of Error Handling In Compiler Phases

The compiler detects errors as early as possible, reducing the chances of faulty code execution. Some compilers even implement error recovery strategies to proceed with compilation despite minor issues.

Symbol Table & Symbol Table Management

The symbol table is a crucial data structure in a compiler that stores information about variables, functions, objects, and other identifiers used in the program. It helps various phases of the compiler by keeping track of scope, type, memory location, and access permissions of identifiers.

What Is A Symbol Table?

Think of it as a dictionary for the compiler, mapping variable names to useful information.

Example of Symbol Table Entries:

| Identifier | Type | Scope | Memory Location | Additional Info |
| --- | --- | --- | --- | --- |
| x | int | Global | 0x1001 | Initialized |
| y | float | Local | 0x1005 | - |
| add() | func | Global | 0x2002 | Returns int |

Role Of Symbol Table In Compiler Phases

- Lexical Analysis– Stores identifiers as tokens are generated.

- Syntax Analysis– Adds or updates entries as declarations are recognized in the parse.

- Semantic Analysis– Ensures variables and functions are correctly used.

- Intermediate Code Generation– Supplies the type and storage information needed to emit IR and to create temporaries.

- Code Optimization– Helps track redundant computations.

- Code Generation– Provides memory addresses for identifiers.

Operations On Symbol Table

- Insertion– Add new identifiers when declared.

- Lookup– Retrieve identifier information when referenced.

- Modification– Update attributes like scope or type.

- Deletion– Remove variables that go out of scope.
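One common way to support these operations is a stack of hash maps, one map per scope. The sketch below is an assumption-laden illustration, not how any particular compiler stores its symbols: `Symbol` and `SymbolTable` are invented names, only the type and scope are recorded, and `std::optional` requires C++17.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <unordered_map>
#include <vector>

struct Symbol {
    std::string type;   // e.g. "int", "float", "func"
    std::string scope;  // "Global" or "Local"
};

class SymbolTable {
public:
    SymbolTable() { scopes_.emplace_back(); }  // start with the global scope

    void enterScope() { scopes_.emplace_back(); }

    // Deletion: leaving a scope removes all identifiers declared in it.
    void exitScope() {
        assert(scopes_.size() > 1);  // the global scope is never popped
        scopes_.pop_back();
    }

    // Insertion: returns false if the name already exists in the current scope.
    bool insert(const std::string& name, const std::string& type) {
        const std::string scope = scopes_.size() == 1 ? "Global" : "Local";
        return scopes_.back().emplace(name, Symbol{type, scope}).second;
    }

    // Lookup: searches from the innermost scope outwards.
    std::optional<Symbol> lookup(const std::string& name) const {
        for (auto it = scopes_.rbegin(); it != scopes_.rend(); ++it) {
            auto found = it->find(name);
            if (found != it->end()) return found->second;
        }
        return std::nullopt;
    }

private:
    std::vector<std::unordered_map<std::string, Symbol>> scopes_;
};
```

Searching from the innermost scope outwards lets a local declaration shadow a global one with the same name. Once `exitScope()` runs, a later lookup of that local name no longer finds it, which is how "variable not declared in this scope" errors can be detected.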

Example Of Symbol Table Management

Say we have the following C++ code:

int a = 10;

float b = 5.5;

void fun() {

int c = 20;

}

Symbol Table After Compilation

| Name | Type | Scope | Memory Address | Additional Info |
| --- | --- | --- | --- | --- |
| a | int | Global | 0x1001 | Initialized |
| b | float | Global | 0x1005 | Initialized |
| fun | func | Global | 0x2002 | Returns void |
| c | int | Local | 0x3001 | Inside fun() |

Symbol tables streamline the compilation process by providing a structured way to track and manage identifiers.

Phases Of Compiler Design (Step-by-Step Code Progression)

This section visually represents how a simple C++ program moves through various phases of a compiler, illustrating the transformations that occur at each stage.

Example Code:

int main() {

int a = 5, b = 10;

int sum = a + b;

return sum;

}

Step-by-Step Compilation Process

| Compiler Phase | Description | Transformation Example |
| --- | --- | --- |
| 1. Lexical Analysis | Converts source code into tokens. | int → keyword, main → identifier, = → operator |
| 2. Syntax Analysis | Checks grammar and generates a parse tree. | Constructs a tree with main() as the root, = and + as child nodes. |
| 3. Semantic Analysis | Ensures type correctness. | Confirms sum = a + b; is valid for integers. |
| 4. Intermediate Code Generation | Generates an abstract machine code representation. | T1 = 5, T2 = 10, T3 = T1 + T2, return T3 |
| 5. Code Optimization | Improves efficiency by reducing redundancy. | Eliminates unnecessary T1 and T2 assignments. |
| 6. Code Generation | Produces machine code for execution. | MOV R1, 5, MOV R2, 10, ADD R3, R1, R2, RET R3 |

ASCII Representation of Code Progression Through Compiler Phases

1. Parse Tree Representation (Syntax Analysis)

2. Intermediate Representation (Three-Address Code)

T1 = 5

T2 = 10

T3 = T1 + T2

return T3

3. Final Assembly-Like Code (Code Generation)

MOV R1, 5

MOV R2, 10

ADD R3, R1, R2

RET R3

Key Takeaways

- Each phase transforms the program incrementally, ensuring correctness and optimization.

- Lexical, syntax, and semantic analysis ensure proper structure and meaning.

- Intermediate representation allows easier optimizations.

- The final machine code reflects the optimizations applied in the earlier phases.

Applications Of A Compiler

Compilers are essential in software development and system programming. Here are some key applications:

- Programming Language Translation: Converts high-level code into machine code (e.g., C, C++) or into bytecode for a virtual machine (e.g., Java, Python).

- Performance Optimization: Enhances execution speed by optimizing memory usage and reducing redundant computations.

- Error Detection and Debugging: Identifies syntax and semantic errors, helping developers write correct code.

- Cross-Platform Development: Enables code to run on multiple architectures using platform-independent intermediate code.

- Embedded Systems and OS Development: Used in creating operating systems, device drivers, and microcontroller firmware.

Compilers are the backbone of modern software, making programming efficient and scalable.

Conclusion

The compilation process is a multi-stage transformation that converts high-level source code into efficient machine code. Each phase of the compiler, namely, Lexical Analysis, Syntax Analysis, Semantic Analysis, Intermediate Code Generation, Code Optimization, and Code Generation, is crucial in ensuring correctness and efficiency.

- Lexical Analysis breaks code into tokens.

- Syntax Analysis ensures grammatical correctness.

- Semantic Analysis verifies meaning and data types.

- Intermediate Code Generation bridges high-level and machine code.

- Code Optimization enhances performance.

- Code Generation produces final executable instructions.

- Symbol Table Management helps track variables, functions, and scope.

- Error Handling ensures robust compilation with meaningful error messages.

Understanding these phases of a compiler is crucial for anyone diving into compilation, programming language development, or software engineering. Whether optimizing code, debugging compilation errors, or designing a new language, knowing how compilers work gives you a solid foundation.

Frequently Asked Questions

Q1. What are the six phases of a compiler?

The six main phases of a compiler are:

- Lexical Analysis – Converts source code into tokens.

- Syntax Analysis – Constructs a parse tree to check grammar.

- Semantic Analysis – Ensures logical correctness and type consistency.

- Intermediate Code Generation – Produces an abstract representation of the code.

- Code Optimization – Improves efficiency by eliminating redundancies.

- Code Generation – Converts optimized intermediate code into machine code.

Q2. Why is lexical analysis necessary in a compiler?

Lexical analysis is the first phase of compilation. It breaks the source code down into meaningful tokens, so the parser can work on structured input instead of raw characters. This lays the foundation for the later phases of the compiler.

Q3. What is the difference between syntax analysis and semantic analysis?

Many get confused between the two seemingly similar phases of a compiler. Here is the key difference between the two:

- Syntax analysis checks grammatical correctness using a parse tree (e.g., ensuring correct placement of brackets and operators).

- Semantic analysis ensures logical correctness, such as verifying that operations are performed on compatible data types (e.g., avoiding adding an integer to a string).

Q4. What is the purpose of intermediate code generation?

Intermediate code acts as a bridge between high-level source code and machine code. It simplifies optimizations and enables portability across different platforms by standardizing code representation.

Q5. How does a compiler optimize code?

Code optimization is an important phase of compilation. The compilers use multiple techniques for this, such as:

- Constant folding (e.g., replacing 2 + 3 with 5)

- Dead code elimination (removing unused variables/functions)

- Loop unrolling (repeating the loop body several times per iteration to cut loop-control overhead)

- Common subexpression elimination (storing repeated expressions)
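As a small illustration of the first technique, constant folding can be sketched as a function that evaluates an operation at compile time when both operands are integer literals. The names `isIntLiteral` and `foldConstant` are hypothetical. The sketch uses `std::optional` (C++17), handles only non-negative literals that fit in a `long`, and ignores overflow.

```cpp
#include <cassert>
#include <cctype>
#include <optional>
#include <string>

// True if the operand is written as a non-negative integer literal.
inline bool isIntLiteral(const std::string& s) {
    if (s.empty()) return false;
    for (char c : s) {
        if (!std::isdigit(static_cast<unsigned char>(c))) return false;
    }
    return true;
}

// Returns the folded value when both operands are literals and the operator is
// supported; returns nullopt when the expression must be left for runtime.
inline std::optional<long> foldConstant(const std::string& lhs, char op,
                                        const std::string& rhs) {
    if (!isIntLiteral(lhs) || !isIntLiteral(rhs)) return std::nullopt;
    long a = std::stol(lhs);
    long b = std::stol(rhs);
    switch (op) {
        case '+': return a + b;
        case '-': return a - b;
        case '*': return a * b;
        case '/':
            if (b == 0) return std::nullopt;  // never fold a division by zero
            return a / b;
        default: return std::nullopt;
    }
}
```

For example, `foldConstant("2", '+', "3")` yields 5, while an expression involving a variable such as `x` is left untouched.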

Q6. What is the role of a symbol table in compilation?

A symbol table stores information about variables, functions, objects, and scope during compilation. It helps track identifiers and resolve references efficiently.

