Understanding the domain of Data Science

Being a Data Scientist has become an uber cool proposition in 2018, with almost every other brand, be it in banking or the FMCG sector, on a hiring spree. Experts in big data, machine learning and artificial intelligence are among the most sought after, thanks to the internet boom. With new markets opening up in developing nations, where technology is still catching up, data scientists are set to remain at the centre of recruitment in the years ahead as well.

Recent news on the recruitment strategy of e-commerce giant Amazon, which had been the top employer in 2017, shows that it is looking for talent adept in big data, machine learning, decision sciences and artificial intelligence to smooth its internal and external processes.

Before delving further into the wide horizons of Data Science, a definition will help us understand the basics.

Data science is an interdisciplinary field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from data in various forms, both structured and unstructured, similar to data mining.

Data science is a "concept to unify statistics, data analysis, machine learning and their related methods" in order to "understand and analyze actual phenomena" with data. It employs techniques and theories drawn from many fields within the context of mathematics, statistics, information science, and computer science. (Wikipedia)

Uses of Data Science

Data science can be used to help minimize costs, discover new markets and make better decisions. It is difficult to pinpoint a single, specific example that explains data science, since it usually works in combination with many other variables.

There are many algorithms and methods that data experts apply to help managers and directors improve their company's bottom line and strategic positioning. Data science techniques are very good at spotting anomalies, solving constrained optimization problems, predicting and targeting.

For example, data scientists can analyse data on the food habits of people in a certain area to help identify the location and type of food joint that would be successful. They can also predict how much a particular food joint will earn in sales there.

Various Processes in Data Science

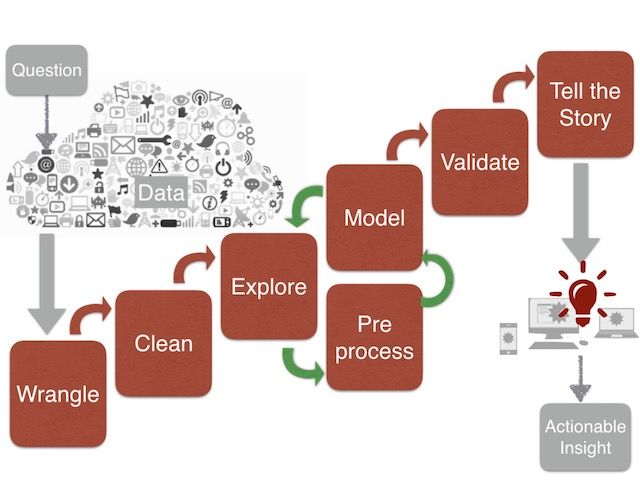

Data Science Pipeline

Data science pipelines are sequences of obtaining, cleaning, visualizing, modelling and interpreting data for a particular purpose. They're useful in production projects, and they can also be beneficial if one expects to come across the same kind of business question in the future, since reusing a pipeline saves design time and coding.

- Obtaining data

- Cleaning or Scrubbing data

- Visualizing and Exploring data will allow us to find patterns and trends

- Modelling data will give us our predictive power as a data magician

- Interpreting data
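The five stages above can be sketched as plain functions chained together. This is a toy under stated assumptions: the records (footfall vs. daily sales for hypothetical food joints) and every function name are invented for illustration, the "explore" stage computes a numeric summary instead of drawing charts, and the "model" stage is an ordinary least-squares line.

```python
from statistics import mean

def obtain():
    # Hypothetical raw records: (footfall, daily_sales); None marks a missing value.
    return [(50, 500.0), (80, 790.0), (None, 640.0), (65, 660.0), (80, 790.0), (100, 1010.0)]

def clean(records):
    # Drop rows with missing values, then remove exact duplicates, keeping order.
    seen, cleaned = set(), []
    for row in records:
        if None in row or row in seen:
            continue
        seen.add(row)
        cleaned.append(row)
    return cleaned

def explore(records):
    # A quick numeric summary stands in for visual exploration here.
    sales = [s for _, s in records]
    return {"n": len(records), "mean_sales": mean(sales)}

def model(records):
    # Ordinary least-squares fit of: sales = slope * footfall + intercept.
    xs = [x for x, _ in records]
    ys = [y for _, y in records]
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in records)
             / sum((x - x_bar) ** 2 for x in xs))
    intercept = y_bar - slope * x_bar
    return slope, intercept

def interpret(summary, fit):
    # Turn the fitted numbers into a sentence a non-specialist can read.
    slope, _ = fit
    return (f"Across {summary['n']} outlets, each extra visitor is associated "
            f"with about {slope:.1f} more in daily sales.")

def run_pipeline():
    records = clean(obtain())
    return interpret(explore(records), model(records))

print(run_pipeline())
```

Keeping each stage as a separate function is what makes the pipeline reusable: a new business question can swap in a different `obtain` or `model` while the rest stays unchanged.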

Obtaining Data

Before carrying out any research, we need to collect or obtain our data. First we need to be clear about why we are collecting the data and, if we do, what our sources will be. Sources can be internal or external: there may be an existing database that has to be re-analysed, we may need to create a new database, or we may use both. This is where the database management system comes into play.

A database management system (DBMS) is system software for creating and managing databases. The DBMS gives users and programmers a systematic way to create, retrieve, update and control data.

There are two broad kinds of DBMS: SQL and NoSQL databases. SQL databases, also known as relational databases (RDBMS), represent information as tables made up of rows of records.

On the other hand, NoSQL databases, also called non-relational or distributed databases, store data in other forms: key-value pairs, documents, graphs or wide-column stores.

SQL databases have a predefined schema, whereas NoSQL databases have a dynamic schema suited to unstructured data.

SQL databases are the more conventional choice; however, NoSQL databases have become more popular in recent years.
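The schema difference can be seen in a few lines of Python. In this sketch an in-memory SQLite database (from the standard library) plays the relational side, while a plain list of dictionaries stands in for a document store; a real NoSQL database such as MongoDB has its own client API, which is not shown here. The customer records are hypothetical.

```python
import json
import sqlite3

# Relational (SQL): the schema is declared up front and every row must fit it.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, city TEXT)")
conn.executemany(
    "INSERT INTO customers (id, name, city) VALUES (?, ?, ?)",
    [(1, "Asha", "Chennai"), (2, "Ravi", "Pune")],
)
rows = conn.execute("SELECT name, city FROM customers ORDER BY id").fetchall()
print(rows)  # [('Asha', 'Chennai'), ('Ravi', 'Pune')]

# A row that breaks the declared schema (missing the required name) is rejected.
try:
    conn.execute("INSERT INTO customers (id, city) VALUES (3, 'Delhi')")
except sqlite3.IntegrityError as exc:
    print("rejected:", exc)

# Document style (NoSQL): each record is self-describing, and documents in the
# same collection may carry different fields without any schema change.
collection = [
    {"_id": 1, "name": "Asha", "city": "Chennai"},
    {"_id": 2, "name": "Ravi", "interests": ["cricket", "films"]},
]
print(json.dumps(collection[1]))
```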

Process

- Going through both SQL and NoSQL databases and identifying the one you need to follow

- Querying these databases

- Retrieving unstructured data in the form of videos, audios, texts, documents, etc.

- Storing the information
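The querying and storing steps above might look like this for a relational source. It is a minimal sketch assuming a hypothetical `orders` table in SQLite; the helper `query_and_store` is an illustrative name, and the result is written to a CSV file in a temporary directory.

```python
import csv
import os
import sqlite3
import tempfile

def query_and_store(conn, min_total, out_path):
    """Query per-customer totals above a threshold and store them as CSV."""
    cursor = conn.execute(
        "SELECT customer, SUM(amount) AS total FROM orders "
        "GROUP BY customer HAVING SUM(amount) >= ? ORDER BY total DESC",
        (min_total,),  # parameter binding instead of string formatting
    )
    header = [col[0] for col in cursor.description]  # column names from the query
    rows = cursor.fetchall()
    with open(out_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)
    return rows

# Usage with a small in-memory table of hypothetical orders.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)",
                 [("A", 120.0), ("B", 40.0), ("A", 80.0), ("C", 300.0)])
out_path = os.path.join(tempfile.mkdtemp(), "orders_summary.csv")
result = query_and_store(conn, 100, out_path)
print(result)  # [('C', 300.0), ('A', 200.0)]
```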

Cleaning Data

Data cleaning, or data scrubbing, is the technique of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table or database. It involves identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying or deleting the dirty or coarse data. Data cleaning can be performed interactively with data wrangling tools, or as batch processing through scripting.

Cleaning removes inconsistencies and anomalies in the data and fills in empty or missing values, making records consistent and fit for further processing.

To perform this step, one needs a thorough knowledge of scripting and data wrangling, typically in languages such as Python and R.
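Here is a minimal sketch of common cleaning steps using pandas. The sales table and the choice to fill missing amounts with the median are assumptions for illustration; the right fill strategy depends on the data and the question being asked.

```python
import pandas as pd

def clean_sales(df: pd.DataFrame) -> pd.DataFrame:
    """Apply a few common cleaning steps to a hypothetical sales table."""
    out = df.copy()
    # Normalise text: trim stray whitespace and unify capitalisation.
    out["city"] = out["city"].str.strip().str.title()
    # Coerce amounts to numbers; unparseable entries such as "n/a" become NaN.
    out["amount"] = pd.to_numeric(out["amount"], errors="coerce")
    # Remove exact duplicate records (after normalising, so near-duplicates match).
    out = out.drop_duplicates()
    # Fill missing amounts with the median of the observed values.
    out["amount"] = out["amount"].fillna(out["amount"].median())
    return out.reset_index(drop=True)

raw = pd.DataFrame({
    "city": [" chennai", "Pune ", "pune", "Delhi", "Delhi"],
    "amount": ["120", "n/a", "95", "200", "200"],
})
print(clean_sales(raw))
```

The order of the steps matters: normalising text before removing duplicates lets rows that differ only in spacing or capitalisation be recognised as the same record.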

Data Visualization

Once your data is preprocessed, you have material to work with. Although the data is clean, it is still raw, and it will be hard to convey the meaning hidden behind those numbers and statistics to the end client. Data visualization is a visual interpretation of the raw data using statistical graphics, plots, information graphics and other tools.

According to Vitaly Friedman (2008), the "main goal of data visualization is to communicate information clearly and effectively through graphical means. It doesn't mean that data visualization needs to look boring to be functional or extremely sophisticated to look beautiful. To convey ideas effectively, both aesthetic form and functionality need to go hand in hand, providing insights into a rather sparse and complex data set by communicating its key-aspects in a more intuitive way. Yet designers often fail to achieve a balance between form and function, creating gorgeous data visualizations which fail to serve their main purpose — to communicate information"

Data Visualization is extremely important because it is an easy and comprehensive way to convey the central message to end users.

To execute this process well, one needs some prerequisite knowledge:

- Python libraries: NumPy, Matplotlib, pandas, SciPy

- R libraries: ggplot2, dplyr

- Inferential statistics

- Data Visualisation

- Experimental design
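As a small example of the form-and-function balance described above, the sketch below draws a plain line chart with Matplotlib, giving it a title and labelled axes so the message is readable without extra explanation. The monthly figures and the `plot_monthly_sales` helper are hypothetical, and the chart is saved to a file using the off-screen Agg backend.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # render off-screen so the script runs without a display
import matplotlib.pyplot as plt

def plot_monthly_sales(months, sales, path):
    """Draw a labelled line chart of sales per month and save it to path."""
    fig, ax = plt.subplots(figsize=(6, 3.5))
    ax.plot(months, sales, marker="o")
    ax.set_title("Monthly sales")
    ax.set_xlabel("Month")
    ax.set_ylabel("Sales (units)")
    ax.grid(True, alpha=0.3)  # light grid aids reading values without dominating
    fig.tight_layout()
    fig.savefig(path, dpi=100)
    return fig, ax

months = ["Jan", "Feb", "Mar", "Apr", "May", "Jun"]
sales = [120, 135, 128, 160, 172, 168]  # hypothetical figures
out_path = os.path.join(tempfile.mkdtemp(), "monthly_sales.png")
fig, ax = plot_monthly_sales(months, sales, out_path)
```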


Data Modelling

Data modelling is used to define and analyze data requirements; it is the framework that describes the relationships within the database. A data model can be thought of as a flowchart that illustrates the relationships between data.

Models are simply general rules in a statistical sense. There are several different approaches to data modelling, including:

Conceptual Data Modeling - identifies the highest-level relationships between different entities.

Enterprise Data Modeling - similar to conceptual data modelling but addresses the unique requirements of a specific business.

Logical Data Modeling - illustrates the specific entities, attributes and relationships involved in a business function. Serves as the basis for the creation of the physical data model.

Physical Data Modeling - represents an application- and database-specific implementation of a logical data model.
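To make the step from a logical to a physical model concrete, here is a sketch of how a small logical model (customers make purchases) might be implemented physically in SQLite: entities become tables, attributes become typed columns, and the relationship becomes a foreign key. The entity and column names are hypothetical.

```python
import sqlite3

# Physical model: tables, column types and constraints for one specific DBMS.
SCHEMA = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE purchase (
    purchase_id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    amount      REAL NOT NULL CHECK (amount >= 0)
);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(SCHEMA)
conn.execute("INSERT INTO customer VALUES (1, 'Asha')")
conn.execute("INSERT INTO purchase VALUES (10, 1, 250.0)")

try:
    # Customer 99 does not exist, so the relationship constraint rejects this row.
    conn.execute("INSERT INTO purchase VALUES (11, 99, 80.0)")
except sqlite3.IntegrityError as exc:
    print("rejected:", exc)
```

The same logical model could be implemented differently in another DBMS (other column types, or a different way of declaring constraints), which is exactly the distinction between the logical and physical levels.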

To go about it, one ought to have the following skill set:

- Machine Learning: Supervised/Unsupervised/Reinforcement learning algorithms

- Evaluation methods

- Machine Learning Libraries: Python (scikit-learn) / R (caret)

- Linear algebra & Multivariate Calculus
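The skills listed above come together even in a very small supervised-learning task. The sketch below fits a linear regression with scikit-learn and evaluates it on held-out data; the synthetic footfall and sales figures are an assumption for illustration, generated with a fixed seed so the run is repeatable.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error, r2_score
from sklearn.model_selection import train_test_split

# Synthetic data (assumed for illustration): sales driven by footfall plus noise.
rng = np.random.default_rng(seed=0)
footfall = rng.uniform(20, 200, size=200)
sales = 12.0 * footfall + 150.0 + rng.normal(0, 40, size=200)
X = footfall.reshape(-1, 1)  # scikit-learn expects a 2-D feature matrix

# Hold out 25% of the rows so the model is scored on data it has not seen.
X_train, X_test, y_train, y_test = train_test_split(
    X, sales, test_size=0.25, random_state=0
)

model = LinearRegression().fit(X_train, y_train)
predictions = model.predict(X_test)

print(f"slope={model.coef_[0]:.2f}, intercept={model.intercept_:.2f}")
print(f"MAE={mean_absolute_error(y_test, predictions):.2f}, "
      f"R^2={r2_score(y_test, predictions):.3f}")
```

Scoring on a held-out test set, rather than on the training rows, is the basic evaluation method that guards against a model that merely memorises its training data.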

Data Interpretation

All the steps above stay within your own workspace; data interpretation is where your work goes public. It is arguably the toughest of all the steps and also the culmination of the process.

The entire interpretation should be lucid enough for the user to grasp its meaning. A data scientist understands the data in its entirety, having worked on it, but a company's management, which has not dealt with the database in depth, will not be able to follow it if the interpretation is not up to the mark.

Interpretation is more like weaving a compelling story, with facts, flowcharts, pie charts, graphs, etc. as the base on which the entire blueprint is built. To interpret data well, one needs:

- Thorough knowledge of your business domain

- Data Visualisation tools: Tableau, D3.js, Matplotlib, ggplot2, Seaborn, etc.

- Communication: Presentation skills – both verbal and written.

How to be a Data Scientist?

There are three general ways of becoming a data scientist:

- Earn an undergraduate degree in IT (Bachelor of Science in Information Technology / Data Management), computer science, math, physics, or another related field;

- Earn a postgraduate degree in data or related field (MS in Data Analytics)

- Gain experience in the field you intend to work in (e.g. healthcare, physics, business)
