- AI Project Ideas For Beginners

- Advanced Level AI Project Ideas

- Conclusion

Top 10+ Artificial Intelligence Project Ideas [Beginner+Advanced]

![Top 10+ Artificial Intelligence Project Ideas [Beginner+Advanced]](https://d8it4huxumps7.cloudfront.net/bites/wp-content/banners/2023/10/6540d66df2997_ai_project_ideas_fi.png?d=1200x800)

Artificial Intelligence (AI) has been the talk of the tech world in recent times because of the efficiency it brings and the problems it solves. The surge of interest in Data Science, Machine Learning, and Artificial Intelligence has produced an influx of learning resources. Still, studying the theoretical aspects of Artificial Intelligence is not enough; that knowledge also needs to be tested in practice. In this article, we look at artificial intelligence project ideas you can build in 2023.

AI employs a variety of theories, techniques, and strategies. Its subfields include machine learning, neural networks, deep learning, cognitive computing, machine vision, and Natural Language Processing (NLP). Supporting technologies include graphics processing units (GPUs), IoT, advanced algorithms, and APIs.

By developing an AI-based project, students can evaluate themselves, gain hands-on experience, and build a portfolio that shows they are industry-ready. Let's look at some artificial intelligence projects for beginner and advanced levels.


AI Project Ideas For Beginners:

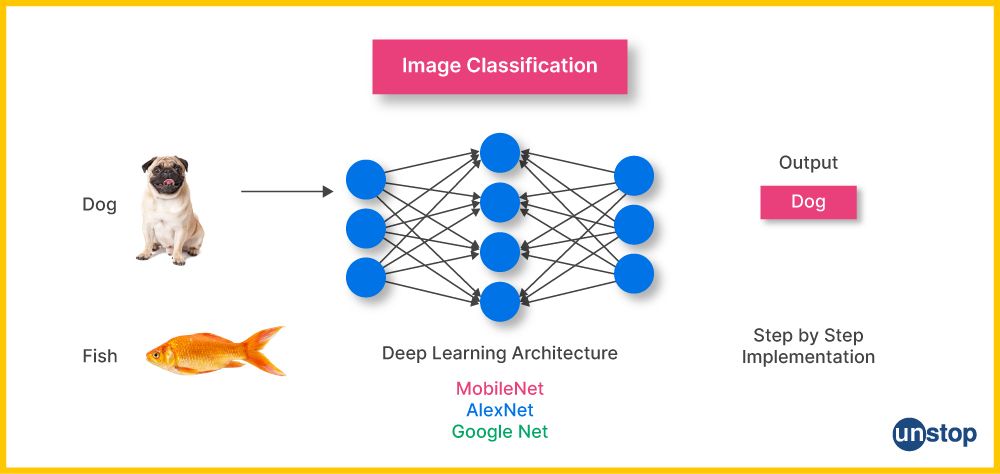

1. Image Classification with Deep Learning

Aim

The aim of this project is to create a deep-learning model that can classify images into predefined categories. Image classification involves training a model to recognize patterns and features in images and then assigning a label or category to each image based on what it has learned.

Prerequisites

- Python Programming: You need a good understanding of Python programming as it's the primary language used for building deep learning models.

- Basic Machine Learning and Neural Networks: A basic understanding of machine learning concepts and neural networks is essential to grasp the fundamentals of image classification with deep learning.

- Deep Learning Framework: You will need a deep learning framework like TensorFlow, Keras, or PyTorch to build and train your neural network.

Working

- Data Collection: You start by collecting a labeled dataset of images. This dataset should contain images along with corresponding labels or categories. For example, a dataset of cats and dogs would have images of cats labeled as "cat" and images of dogs labeled as "dog."

- Data Preprocessing: Images in the dataset need to be preprocessed. This includes resizing, normalizing pixel values, and augmenting the data to create more diverse examples for training.

- Model Architecture: You design a deep neural network architecture suitable for image classification. Convolutional Neural Networks (CNNs) are commonly used for this purpose because they are well-suited for image data.

- Training: The model is trained on the preprocessed dataset. During training, the model learns to recognize features and patterns in the images that are associated with each category. The process involves feeding the training images through the network, making predictions, and adjusting the model's parameters to minimize prediction errors.

- Validation: The model is checked against a separate validation dataset, during or after training, to ensure it's not overfitting (i.e., memorizing the training data but failing to generalize to new data).

- Testing: The model's performance is evaluated on a testing dataset. This dataset is unseen by the model during training and validation. The model's accuracy and other metrics are assessed to determine how well it classifies images.

- Deployment: Once the model has been trained and validated to satisfaction, it can be deployed for use. This may involve integrating it into an application or system that requires image classification.
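
The validation and testing steps above depend on keeping three disjoint subsets of the labeled data. The following is a minimal sketch of that split plus pixel normalization, using only the Python standard library. The function names, the 70/15/15 ratio, and the toy file names are assumptions made for illustration; a real project would more likely rely on the utilities of its chosen deep learning framework.

```python
import random

def split_dataset(samples, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Shuffle labeled samples and split them into train/validation/test lists."""
    if not 0 <= val_fraction + test_fraction < 1:
        raise ValueError("validation and test fractions must sum to less than 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def normalize_pixels(image):
    """Scale 8-bit pixel values (0-255) to floats in the range [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]

# Hypothetical toy dataset: (file name, label) pairs.
dataset = [(f"img_{i}.png", "cat" if i % 2 == 0 else "dog") for i in range(100)]
train, val, test = split_dataset(dataset)
print(len(train), len(val), len(test))
```

The test subset is set aside first and never touched during training, which is what makes the final accuracy figure meaningful.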

Application

Image classification has a wide range of applications, including object recognition, medical image analysis, autonomous vehicles, content moderation, and more. For example, it can be used to identify objects in images taken by autonomous cars, diagnose diseases from medical images, or sort images in a photo library.

Conclusion

Building an image classification model with deep learning is a fundamental AI project that introduces you to the core concepts of deep neural networks and computer vision.


2. AI-Based Chatbots

Aim

The aim of this project is to design an AI chatbot that can engage in natural language conversations with users, answer questions, provide information, and offer assistance across various domains.

Prerequisites

- Natural Language Processing (NLP): Understanding NLP concepts, including language understanding, generation, and text analysis.

- Programming: Proficiency in programming languages such as Python for developing chatbot algorithms.

- NLP Libraries and Frameworks: Familiarity with NLP libraries and frameworks, including NLTK, spaCy, and Transformers (for advanced NLP tasks).

- Machine Learning: Knowledge of machine learning algorithms and techniques, particularly for NLP-related tasks like text classification and named entity recognition.

- Conversational Design: Understanding conversational design principles for creating engaging and user-friendly interactions.

- Ethical Considerations: Awareness of ethical concerns related to chatbot interactions, privacy, and bias.

Working

- Data Collection: Gather a dataset of textual conversations or dialogues that serve as training data for the chatbot. This data should include various conversation styles and topics.

- Data Preprocessing: Preprocess the text data, which may involve tokenization, stop-word removal, and text normalization. This step helps to make the data suitable for analysis.

- Intent Recognition: Implement intent recognition to understand user queries and determine the user's intention. This can be done using techniques like rule-based matching or machine learning models.

- Response Generation: Develop algorithms for generating responses based on user queries and identified intents. Responses can be pre-defined or generated dynamically based on the context.

- NLP Models: Utilize NLP models, such as transformers or recurrent neural networks (RNNs), for more advanced chatbot capabilities, including generating human-like responses.

- User Interface: Design a user-friendly chatbot interface that allows users to input queries and receive responses in a conversational manner.

- Conversational Flow: Create conversation flows that guide users through interactions, providing context-appropriate responses and maintaining coherent dialogues.

- Real-time Processing: Ensure that the chatbot can process user inputs and generate responses in real-time, providing an interactive experience.

- Testing and Evaluation: Evaluate the chatbot's performance by conducting user testing and assessing user satisfaction. Metrics like accuracy, response time, and user engagement can be important indicators.

- Deployment: Deploy the chatbot in various platforms, including websites, messaging apps, and customer support channels, based on the intended use.

- Ethical Considerations: Address ethical considerations, privacy, and responsible AI use in chatbot interactions. Ensure that the chatbot respects user privacy and handles sensitive information securely.
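
The intent recognition and response generation steps can be illustrated with the rule-based matching mentioned above. This is only a sketch: the intents, keywords, and canned responses are hypothetical, and a production chatbot would typically replace the keyword lookup with a trained classifier.

```python
import re

# Hypothetical intents: each maps trigger keywords to a pre-defined response.
INTENTS = {
    "greeting": ({"hello", "hi", "hey"}, "Hello! How can I help you today?"),
    "hours": ({"hours", "open", "close"}, "We are open from 9 am to 5 pm, Monday to Friday."),
    "goodbye": ({"bye", "goodbye"}, "Goodbye! Have a great day."),
}
FALLBACK = "Sorry, I did not understand that. Could you rephrase?"

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def detect_intent(text):
    """Return the intent with the most keyword matches, or None if nothing matches."""
    tokens = set(tokenize(text))
    best_intent, best_hits = None, 0
    for intent, (keywords, _response) in INTENTS.items():
        hits = len(tokens & keywords)
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    return best_intent

def respond(text):
    """Look up the pre-defined response for the detected intent."""
    intent = detect_intent(text)
    return INTENTS[intent][1] if intent else FALLBACK

print(respond("Hi there!"))
print(respond("When do you open?"))
```

Matching on whole tokens rather than substrings avoids false hits such as finding "hi" inside "this".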

Applications

AI chatbots have diverse applications, including customer support, virtual assistants, e-commerce, healthcare, and education.

Conclusion

Developing an AI chatbot is an exciting project that involves a blend of NLP, machine learning, and conversational design. It offers an interactive and automated solution for engaging with users, answering queries, and assisting in various domains.

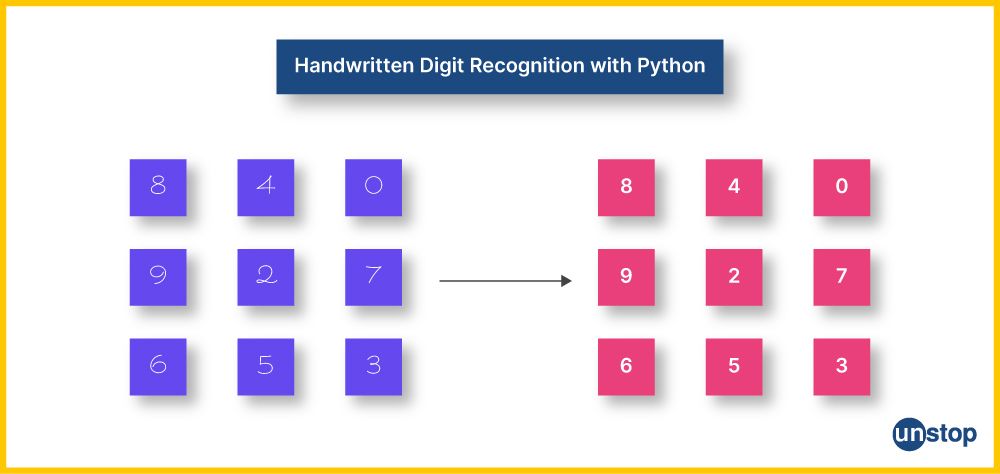

3. Handwritten Digit Recognition

Aim

This project aims to develop a model that can recognize and classify handwritten digits (0-9). Handwritten digit recognition is a fundamental task in computer vision and is often used as a stepping stone to more complex image recognition tasks.

Prerequisites

- Python Programming: Proficiency in Python is essential for developing the image recognition model.

- Understanding of Neural Networks: A basic understanding of neural networks and deep learning concepts is important as you'll be working with neural networks.

- Deep Learning Framework: You'll need a deep learning framework like TensorFlow, Keras, or PyTorch for building and training the neural network.

Working

- Data Collection: Gather a labeled dataset of handwritten digits. The dataset should consist of images of digits (0-9) and their corresponding labels.

- Data Preprocessing: Preprocess the images to make them suitable for the neural network. Common preprocessing steps include resizing the images to a consistent size, normalizing pixel values, and augmenting the data to create more diverse examples for training.

- Model Architecture: Design a neural network architecture suitable for image classification. Convolutional Neural Networks (CNNs) are commonly used for this task because they are well-suited for image data.

- Training: Train the neural network on the preprocessed dataset. During training, the model learns to recognize features and patterns in the images that are associated with each digit (0-9). Training involves forward and backward passes, weight adjustments, and optimization of the model's parameters.

- Validation: After training, the model is validated using a separate dataset to ensure it generalizes well and does not overfit.

- Testing: The model's performance is evaluated on a testing dataset, which is unseen during training and validation. The model's accuracy and other metrics are assessed to determine how well it classifies handwritten digits.

- Inference: Once the model has been trained and validated, it can be used for inference. Given a new handwritten digit image, the model can predict the digit it represents.
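
To make the role of a CNN layer more concrete, here is a small sketch of the sliding-window operation such a layer applies to an image (strictly a cross-correlation, which is what deep learning frameworks compute under the name "convolution"). The toy image and the edge-detecting kernel are assumptions chosen for illustration; in a trained network the kernel values are learned.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation of a grayscale image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            # Multiply the kernel with the image patch under it and sum the result.
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Toy 4x4 image: dark left half (0) and bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = convolve2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the column where the brightness changes, which is the kind of stroke-level feature a digit classifier builds on.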

Application

Handwritten digit recognition is used in form processing, check processing, postal mail sorting, and numerical input in other applications.

Conclusion

This project provides insights into image classification and pattern recognition, serving as a building block for more advanced image recognition tasks such as object detection and character recognition.


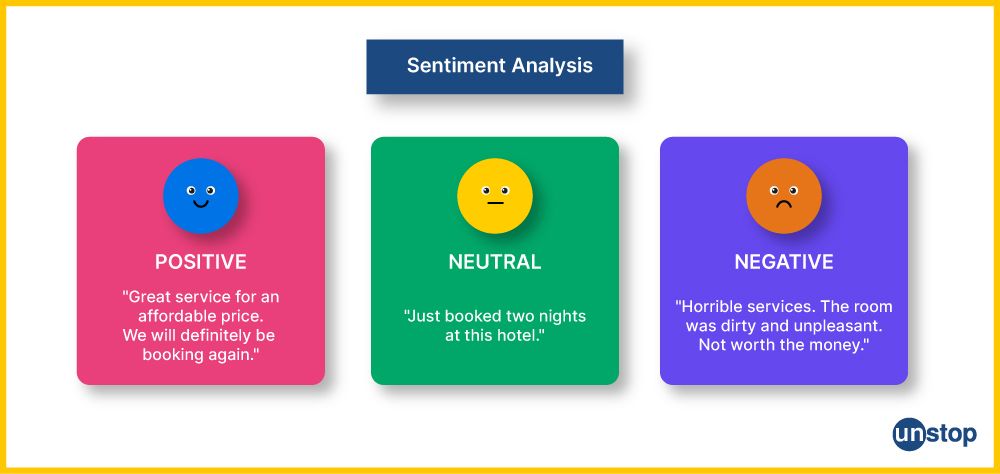

4. Sentiment Analysis for Social Media Posts

Aim

The aim of this project is to develop a model that can analyze and classify the sentiment of social media posts into categories like positive, negative, or neutral. Sentiment analysis, also known as opinion mining, is a valuable application of natural language processing (NLP).

Prerequisites

- Python Programming: Proficiency in Python is essential for NLP and machine learning tasks.

- Basic NLP Knowledge: Understanding the basics of Natural Language Processing (NLP) is important as you'll be working with text data and language understanding.

- NLP Libraries: You'll need NLP libraries like NLTK, spaCy, or TextBlob for text preprocessing and sentiment analysis.

Working

- Data Collection: Gather a dataset of social media posts along with their corresponding labels that indicate the sentiment (positive, negative, or neutral). You can use pre-existing datasets or create your own.

- Text Preprocessing: Preprocess the text data. This typically involves tokenization, removing stop words, stemming or lemmatization, and handling special characters and emoticons.

- Feature Extraction: Convert the preprocessed text data into numerical features that can be used by machine learning models. Common techniques include TF-IDF (Term Frequency-Inverse Document Frequency) or word embeddings (e.g., Word2Vec or GloVe).

- Model Selection: Choose a machine learning or deep learning model for sentiment analysis. Common choices include logistic regression, support vector machines, recurrent neural networks (RNNs), or transformers.

- Training: Train the selected model on the preprocessed data. During training, the model learns to predict the sentiment of social media posts based on the features extracted from the text.

- Validation: Validate the model's performance using a separate validation dataset to ensure it generalizes well and doesn't overfit.

- Testing: Evaluate the model's performance on a testing dataset, which should be unseen during training and validation. Assess the model's accuracy and other sentiment analysis metrics.

- Inference: Once the model is trained and validated, it can be used for sentiment analysis on new social media posts. Given a post, the model can predict its sentiment (positive, negative, or neutral).
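
The text preprocessing and feature extraction steps can be sketched with a plain TF-IDF implementation. This uses the textbook formula (term frequency times the log of inverse document frequency); libraries such as scikit-learn apply a smoothed variant, so their numbers will differ. The stop-word list and the example posts are made up for illustration.

```python
import math
import re
from collections import Counter

STOP_WORDS = {"the", "a", "is", "i", "this", "it"}  # tiny illustrative list

def preprocess(post):
    """Lowercase, tokenize, and drop stop words."""
    tokens = re.findall(r"[a-z]+", post.lower())
    return [t for t in tokens if t not in STOP_WORDS]

def tfidf(posts):
    """Return one {term: weight} dictionary per post."""
    docs = [preprocess(p) for p in posts]
    n_docs = len(docs)
    # Document frequency: in how many posts each term appears.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors

posts = ["I love this phone", "I hate this phone", "The camera is great"]
for vector in tfidf(posts):
    print(vector)
```

In the first post, "love" receives a higher weight than "phone" because "phone" also appears in another post and is therefore less distinctive. These weights are the numerical features a classifier such as logistic regression would be trained on.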

Application

Sentiment analysis for social media posts has various applications, including brand reputation management, customer feedback analysis, market research, and social media monitoring. It helps organizations gauge public opinion and sentiment about their products or services.

Conclusion

Developing a sentiment analysis model for social media posts is a practical NLP project that introduces you to the domain of text analysis and sentiment classification. It's useful for extracting insights from unstructured text data and automating sentiment assessment in social media content.

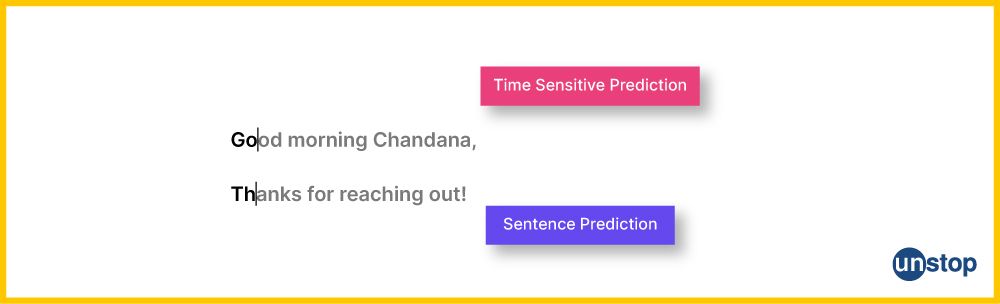

5. Predictive Text Generator

Aim

The aim of this project is to build a predictive text generator that can generate coherent and contextually relevant text while taking into account the sentiment expressed in the text.

Prerequisites

- Python Programming: Proficiency in Python is necessary as it's the primary language for NLP tasks and machine learning.

- NLP Knowledge: A strong understanding of NLP concepts, including text preprocessing, sentiment analysis, and language modeling, is essential.

- NLP Libraries and Models: You'll need NLP libraries like spaCy, NLTK, and Transformers (for sentiment analysis), plus a text generation model such as GPT-3, GPT-4, or a custom language model.

- Language: Python (with libraries like Keras).

Working

- Data Collection: Gather a dataset of text data with sentiment labels. This dataset should include text samples with associated sentiments (e.g., positive, negative, neutral). You can use pre-existing sentiment-labeled datasets or create your own.

- Sentiment Analysis: Train a sentiment analysis model on the labeled dataset. This model should classify text samples into different sentiment categories based on the content.

- Text Preprocessing: Preprocess the text data for both sentiment analysis and text generation. This includes tokenization, removal of stop words, stemming/lemmatization, and cleaning the text.

- Language Modeling: Choose or fine-tune a language model capable of generating text. Transformer-based models such as GPT-3 and GPT-4, or custom models, are often used for this purpose.

- Model Integration: Develop an integration between the sentiment analysis model and the text generation model. This integration allows the text generator to take into account the sentiment of the input text.

- User Input: When a user provides an input text, the sentiment analysis model analyzes the sentiment of the input. This information is then passed to the text generator.

- Text Generation: The text generator generates coherent and contextually relevant text, considering the sentiment indicated by the user's input. For example, if the input text expresses a negative sentiment, the text generator should produce text that aligns with that sentiment.

- Output: The generated text, which matches the sentiment conveyed in the input, is provided as the output to the user.
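
As a starting point for the language modeling step, the sketch below builds a bigram model that suggests the next word from counts in a training corpus. It is a deliberately simple stand-in for the transformer models named above and does not take sentiment into account; the corpus and function names are assumptions for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for every word, which words follow it and how often."""
    model = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            model[current][following] += 1
    return model

def predict_next(model, word, k=1):
    """Return up to k most frequent followers of the given word."""
    followers = model.get(word.lower())
    if not followers:
        return []  # word never seen with a follower in the corpus
    return [candidate for candidate, _count in followers.most_common(k)]

# Hypothetical training sentences.
corpus = [
    "the weather is nice today",
    "the weather is bad today",
    "the weather is nice again",
]
model = train_bigram_model(corpus)
print(predict_next(model, "is"))       # ['nice']
print(predict_next(model, "weather"))  # ['is']
```

One way to extend this toward the sentiment-aware behavior described above would be to train a separate model per sentiment label and pick the model that matches the classifier's output for the user's input.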

Application

This project has applications in various areas, including content generation for marketing, personalized chatbots, and auto-completion systems. It allows the generation of text that not only makes sense contextually but also aligns with the intended sentiment.

Conclusion

A sentiment-aware predictive text generator is a challenging and creative project that combines two critical NLP tasks. It enables the development of text generation systems that produce contextually relevant and sentiment-aware content.


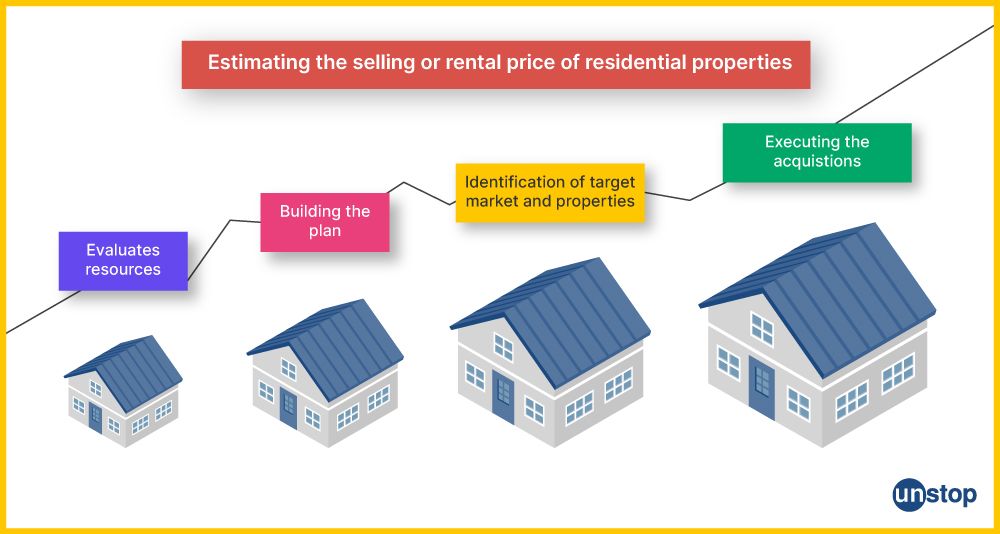

6. Predict Housing Price

Aim

This project aims to develop a predictive model that can estimate the selling or rental price of residential properties based on various features and characteristics.

Prerequisites

- Data Collection: Access to a dataset of historical property sales, including property features (e.g., square footage, number of bedrooms, location, etc.) and their corresponding prices.

- Data Preprocessing: Skills in data preprocessing, including cleaning, handling missing data, and feature engineering.

- Machine Learning: Proficiency in machine learning techniques and libraries for regression tasks (predicting continuous values).

- Statistical Analysis: Knowledge of statistical techniques to identify relationships and correlations in the data.

- Geospatial Data Analysis (if location is a key factor): Familiarity with geospatial data to account for the impact of location on housing prices.

- Data Visualization: Ability to create visualizations for data exploration and model evaluation.

- Ethical Considerations: Awareness of ethical considerations related to housing data, fairness, and bias.

Working

- Data Collection: Gather a comprehensive dataset of historical property sales records, including various features and the corresponding prices. This dataset should be representative of the housing market you intend to predict.

- Data Preprocessing: Clean and preprocess the data, handling missing values, outliers, and transforming features. Feature engineering can involve creating new variables or encoding categorical data.

- Feature Selection: Identify the most relevant features that have the greatest impact on housing prices. Feature selection techniques can help improve model efficiency.

- Machine Learning Model: Choose a regression model suitable for the task. Common choices include linear regression, decision trees, random forests, or more complex models like gradient boosting and neural networks.

- Model Training: Train the selected machine learning model on the preprocessed dataset, with property features as input and prices as the target variable.

- Model Evaluation: Evaluate the model's performance using metrics such as Mean Absolute Error (MAE), Mean Squared Error (MSE), and R-squared to assess its accuracy in predicting housing prices.

- Visualization: Create visualizations, including scatter plots, residual plots, and geographical heatmaps (if location data is a factor), to understand model predictions and identify areas of improvement.

- Prediction: Once the model is trained and validated, it can be used to predict housing prices for new properties based on their features.

- Deployment: Deploy the model in an application or website to provide users with a housing price estimation tool.

- Ethical Considerations: Address ethical considerations, such as fairness, transparency, and privacy, in housing price predictions and model deployment.
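
The model training and evaluation steps can be sketched with the simplest regression model: a least-squares line fitted to a single feature, scored with MAE, MSE, and R-squared. The numbers below are made up for illustration and the function names are assumptions; a real project would use many features and a library such as scikit-learn.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def evaluate(y_true, y_pred):
    """Compute MAE, MSE, and R-squared for a set of predictions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1 - (mse * n) / ss_tot  # 1 minus residual sum of squares over total
    return {"mae": mae, "mse": mse, "r2": r2}

# Made-up data: floor area in square feet and sale price in thousands.
area_sqft = [800, 1000, 1200, 1500, 2000]
price_k = [160, 210, 235, 310, 395]

slope, intercept = fit_line(area_sqft, price_k)
predictions = [slope * x + intercept for x in area_sqft]
print(evaluate(price_k, predictions))
```

Note that this example scores the model on the same data it was fitted to, which is only acceptable for a demonstration; the metrics that matter are computed on held-out properties.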

Applications

Predicting housing prices has applications in the real estate industry for pricing properties, aiding buyers and sellers in negotiations, and assisting investors in making informed decisions.

Conclusion

Creating a housing price prediction model is a practical and valuable project that serves the real estate market and empowers individuals and professionals with data-driven insights for property transactions.


Advanced Level AI Project Ideas:

1. Online Plagiarism Analyzers

Aim

This project aims to create an online plagiarism analyzer, an AI-driven tool that checks the originality of written content by comparing it to existing text from various sources to identify potential instances of plagiarism.

Prerequisites

- Web Development: Proficiency in web development technologies and frameworks (e.g., HTML, CSS, JavaScript) to build the online interface.

- NLP Knowledge: Understanding of Natural Language Processing (NLP) techniques is essential for text analysis and comparison.

- Machine Learning: Familiarity with machine learning algorithms, particularly for text similarity and plagiarism detection.

Working

- Data Collection: Collect a repository of text documents or articles to serve as the potential sources for plagiarism checks. This can include academic papers, web content, books, and other textual resources.

- Web Interface: Develop a user-friendly web interface that allows users to input their text for plagiarism analysis. This interface should also display the results of the analysis.

- Text Preprocessing: Preprocess the input text and the reference documents by removing punctuation, converting text to lowercase, and tokenizing the text into words or phrases.

- Text Comparison: Implement text comparison algorithms to measure the similarity between the user's input and the reference documents. Common techniques include cosine similarity, Jaccard similarity, and embeddings like Word2Vec or BERT.

- Machine Learning Models: Train machine learning models (e.g., decision trees, random forests, or neural networks) to identify potential instances of plagiarism. These models learn to detect similarities between the input text and reference sources.

- Plagiarism Detection: When a user submits text for analysis, the system compares it to the reference documents and calculates a similarity score. If the similarity exceeds a predefined threshold, the system flags the text as potentially plagiarized.

- Report Generation: Generate a plagiarism report for the user, indicating the potential sources of plagiarism and the similarity percentages. The report can help users understand where their content may have been copied or closely paraphrased.

- Database Management: Maintain a database of reference documents and keep it updated regularly. Additionally, ensure that the system can efficiently query and compare large volumes of text.
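
The text comparison and plagiarism detection steps can be sketched with two of the similarity measures named above, Jaccard and cosine similarity, computed over word tokens. The 0.8 threshold, the function names, and the sample texts are assumptions for illustration; embedding-based comparison with Word2Vec or BERT would be needed to catch paraphrasing.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text, drop punctuation, and split into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def jaccard_similarity(a, b):
    """Shared unique words divided by all unique words."""
    set_a, set_b = set(tokenize(a)), set(tokenize(b))
    if not set_a and not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)

def cosine_similarity(a, b):
    """Cosine of the angle between the two word-count vectors."""
    counts_a, counts_b = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(counts_a[t] * counts_b[t] for t in counts_a)
    norm_a = math.sqrt(sum(v * v for v in counts_a.values()))
    norm_b = math.sqrt(sum(v * v for v in counts_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def flag_sources(submission, references, threshold=0.8):
    """Return (source name, score) pairs at or above the threshold, best first."""
    scored = [(name, cosine_similarity(submission, text))
              for name, text in references.items()]
    flagged = [(name, score) for name, score in scored if score >= threshold]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)

# Hypothetical reference documents.
references = {
    "source_1": "The quick brown fox jumps over the lazy dog.",
    "source_2": "Neural networks learn features from labeled data.",
}
print(flag_sources("the quick brown fox jumps over the lazy dog", references))
```

The returned scores can be shown directly as the similarity percentages in the plagiarism report.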

Application

Online plagiarism analyzers are useful for educational institutions, content creators, and businesses to ensure the integrity of written content. They can be used to detect plagiarism in academic papers, articles, blog posts, and more.

Conclusion

Building an online plagiarism analyzer involves a combination of web development, NLP, and machine learning techniques to help users identify and prevent plagiarism in their written content. This project serves as a practical tool for promoting originality and academic honesty.


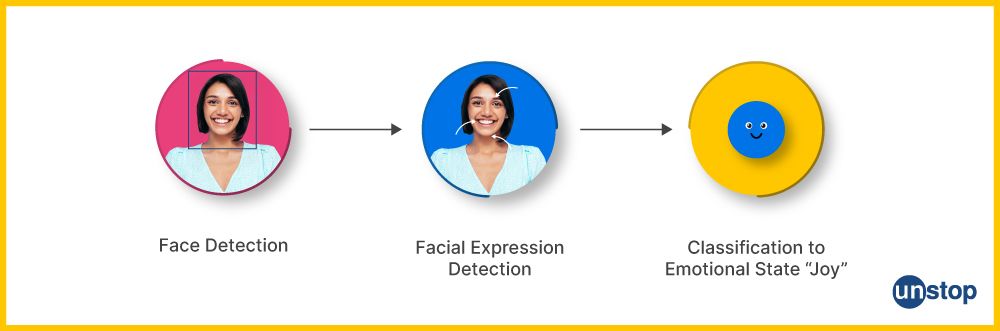

2. Facial Emotion Recognition and Detection

Aim

This project aims to develop a system that can recognize and detect human emotions from facial expressions in images or videos. This project is a part of the broader field of computer vision and can be applied in various domains, including human-computer interaction and mental health monitoring.

Prerequisites

- Python Programming: Proficiency in Python is essential for implementing machine learning and computer vision algorithms.

- Computer Vision Knowledge: Understanding of computer vision concepts and libraries such as OpenCV is crucial for image and video processing.

- Deep Learning: Familiarity with deep learning frameworks like TensorFlow or PyTorch for training emotion recognition models.

- Machine Learning: Understanding of machine learning algorithms and concepts related to image classification and neural networks.

Working

- Data Collection: Gather a dataset of facial images or videos labeled with the corresponding emotional states (e.g., happy, sad, angry, etc.). These images serve as the training data for the model.

- Data Preprocessing: Preprocess the facial data, which includes face detection, alignment, and resizing. Preprocessing techniques may also involve data augmentation to create more diverse training examples.

- Model Architecture: Design a deep neural network architecture suitable for facial emotion recognition. Convolutional Neural Networks (CNNs) are commonly used for this task due to their effectiveness in image analysis.

- Training: Train the neural network on the preprocessed dataset. During training, the model learns to recognize facial features and expressions associated with different emotions. It optimizes its parameters through forward and backward passes, aiming to minimize prediction errors.

- Validation: Validate the model's performance using a separate dataset to ensure it generalizes well and does not overfit the training data.

- Testing: Evaluate the model's accuracy and performance on a testing dataset, which contains unseen data. Assess its ability to correctly detect and classify emotions from facial expressions.

- Real-time Detection: Implement real-time facial emotion detection by applying the trained model to live video streams or images. The system can identify and display the detected emotion in real-time.
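
For the detection step, a classifier's raw output scores are usually converted to probabilities with a softmax before the most likely emotion is displayed. The sketch below shows that final step only; the label order and the example scores are hypothetical, and in practice the scores would come from the trained CNN for each detected face.

```python
import math

EMOTIONS = ["angry", "happy", "neutral", "sad"]  # hypothetical label order

def softmax(scores):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_emotion(scores, labels=EMOTIONS):
    """Return the most probable label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Made-up scores standing in for a model's output on one face crop.
label, confidence = predict_emotion([0.1, 2.3, 0.5, -1.0])
print(f"{label} ({confidence:.0%})")
```

Showing the probability alongside the label lets an interface suppress low-confidence predictions instead of displaying a guess.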

Application

The facial emotion recognition system can be applied to various contexts, including human-computer interaction, emotion-aware user interfaces, mental health monitoring, and market research to analyze emotional responses to products or content.

Conclusion

Building a facial emotion recognition and detection system is an exciting project that involves computer vision, deep learning, and real-time processing. It can potentially improve human-computer interaction and find applications in fields like healthcare, entertainment, and customer experience analysis.


3. Self-Driving Automobile

Aim

The aim of this project is to design and build an autonomous vehicle, e.g., a self-driving car, that is capable of navigating without human intervention. A self-driving automobile, often referred to as an autonomous vehicle, uses artificial intelligence, sensors, and control systems to operate safely on roads.

Prerequisites

- Robotics and Control Systems: Understanding the principles of robotics, control systems, and sensor integration is crucial.

- Computer Vision: Proficiency in computer vision techniques for object detection, lane detection, and scene understanding.

- Deep Learning: Knowledge of deep learning and neural networks for tasks such as image recognition and decision making.

- Sensor Integration: Familiarity with sensor technologies like LiDAR, radar, cameras, and GPS.

- Machine Learning and AI: Skills in machine learning algorithms for vehicle behavior prediction and path planning.

- Safety Regulations: Knowledge of safety and regulatory standards related to autonomous vehicles.

- Hardware Knowledge: Understanding the hardware components required for vehicle control and communication.

Working

- Sensor Integration: Equip the vehicle with an array of sensors, such as LiDAR, radar, cameras, and ultrasonic sensors, to provide real-time data about the vehicle's surroundings.

- Data Collection: Collect and preprocess the sensor data, including capturing and labeling data for training machine learning models.

- Perception and Localization: Implement computer vision and sensor fusion techniques to perceive and localize the vehicle in its environment. This includes detecting lane boundaries, other vehicles, pedestrians, and traffic signals.

- Path Planning: Develop algorithms that determine the optimal path for the vehicle to follow. Path planning includes considerations for lane changes, merging onto highways, and handling complex urban environments.

- Machine Learning Models: Train machine learning models to predict the behavior of other road users, such as predicting where pedestrians will cross or how other vehicles will maneuver.

- Control Systems: Implement control systems to steer, accelerate, and brake the vehicle based on the path planning and perception data.

- Real-time Processing: Ensure the system can process data and make decisions in real-time, as even minor delays can have safety implications.

- Safety Mechanisms: Incorporate safety mechanisms like emergency braking and collision avoidance to ensure the safety of passengers and other road users.

- Testing and Validation: Rigorously test and validate the autonomous vehicle under various road and weather conditions. This includes testing in simulated environments and controlled test tracks.

- Regulatory Compliance: Ensure that the self-driving vehicle complies with local and international regulations governing autonomous vehicles.

- Deployment: Once the vehicle has passed extensive testing and validation, it can be deployed for autonomous driving on public roads or specific domains such as warehouses or closed environments.
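
The path planning step can be illustrated in a heavily simplified form: finding the shortest route across a grid map where some cells are blocked. The sketch uses breadth-first search on a made-up occupancy grid; real planners work with continuous space, vehicle dynamics, and moving obstacles, so treat this purely as a toy model of the idea.

```python
from collections import deque

def plan_path(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = blocked).

    Returns a list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to reconstruct the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

# Hypothetical map: 1 marks an obstacle.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
print(plan_path(grid, (0, 0), (3, 3)))
```

Because breadth-first search explores cells in order of distance from the start, the first time it reaches the goal it has found a path with the fewest moves.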

Applications

Self-driving automobiles have the potential to revolutionize transportation, including ride-sharing and delivery services, and to reduce traffic accidents. They can also improve mobility for the elderly and disabled.

Conclusion

Building a self-driving automobile is a complex and exciting project that combines robotics, artificial intelligence, and real-world engineering challenges. It represents the cutting edge of technology and has the potential to reshape transportation and improve safety on our roads.

4. AI-Powered Healthcare Diagnosis

Aim

The aim of this project is to create an AI system capable of diagnosing medical conditions and providing healthcare recommendations. It leverages artificial intelligence, machine learning, and medical data to assist healthcare professionals and improve patient care by providing effective medical advice.

Prerequisites

- Machine Learning and AI: Proficiency in machine learning, deep learning, and artificial intelligence, especially in the context of healthcare applications.

- Medical Knowledge: A strong understanding of medical terminology, anatomy, physiology, and disease pathology.

- Data Handling: Skills in managing, preprocessing, and analyzing medical data, including electronic health records, medical images, and clinical notes.

- Ethics and Regulations: Awareness of medical ethics, patient privacy regulations (e.g., HIPAA), and medical liability.

- Collaboration: Ability to collaborate with healthcare professionals, radiologists, and domain experts to ensure accurate diagnosis and recommendations.

- Integration: Knowledge of healthcare IT systems for seamless integration with existing healthcare workflows.

Working

- Data Collection: Collect medical data from various sources, including electronic health records (EHRs), medical images (X-rays, MRIs, CT scans), clinical notes, and patient history. The dataset should be labeled with diagnoses and outcomes.

- Data Preprocessing: Preprocess the medical data, which may include cleaning, anonymization, normalization, and feature extraction for machine learning models.

- Machine Learning Models: Build machine learning models that can diagnose medical conditions, predict disease progression, or recommend treatment options. These models can be based on various algorithms, including deep neural networks, decision trees, or support vector machines.

- Image Analysis: For medical imaging, develop computer vision models to detect and analyze anomalies or diseases in images. This may include tumor detection in radiology images or skin lesion classification in dermatology.

- Natural Language Processing (NLP): Utilize NLP techniques to analyze clinical notes, patient history, and medical literature for diagnosis support and patient-specific recommendations.

- Clinical Decision Support: Integrate the AI system into clinical workflows to provide healthcare professionals with decision support. This may include real-time recommendations for treatment options, drug interactions, or risk assessment.

- Patient-Facing Applications: Create patient-facing applications or portals that provide health-related information, reminders, and telemedicine services.

- Validation and Testing: Rigorously validate the AI system's performance through cross-validation, external validation, and clinical trials. Verify the accuracy, sensitivity, specificity, and overall reliability of the system.

- Ethical Considerations: Ensure that the AI system respects patient privacy, adheres to ethical guidelines, and complies with healthcare regulations.

- Deployment: Deploy the AI-Powered Healthcare Diagnosis system in healthcare facilities, telemedicine platforms, and mobile health applications. Continuously monitor and update the system to improve its performance.
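The validation step above names accuracy, sensitivity, and specificity. As a minimal sketch, assuming binary labels where 1 means the condition is present and 0 means it is absent, these three metrics can be computed from the confusion-matrix counts. The function name `diagnostic_metrics` and the sample labels are illustrative assumptions, not part of any specific project.

```python
def diagnostic_metrics(y_true, y_pred):
    """Return accuracy, sensitivity, and specificity for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        # Sensitivity: share of actual positives the model caught.
        "sensitivity": tp / (tp + fn) if (tp + fn) else 0.0,
        # Specificity: share of actual negatives the model cleared.
        "specificity": tn / (tn + fp) if (tn + fp) else 0.0,
    }


# Hypothetical labels for eight patients (illustrative only).
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
metrics = diagnostic_metrics(y_true, y_pred)
print(metrics)
```

In a diagnostic setting, sensitivity and specificity are usually reported together, because a model can score well on accuracy alone while still missing most positive cases in an imbalanced dataset.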

Applications

AI-Powered Healthcare Diagnosis has extensive applications, including disease diagnosis, treatment recommendations, personalized medicine, early disease detection, and telemedicine.

Conclusion

Building an AI-Powered Healthcare Diagnosis system is a complex and impactful project that holds the potential to revolutionize healthcare. It can enable faster and more accurate diagnoses, improve patient care, and alleviate the burden on healthcare professionals. However, it must be developed and deployed with utmost attention to data security, ethics, and patient privacy.


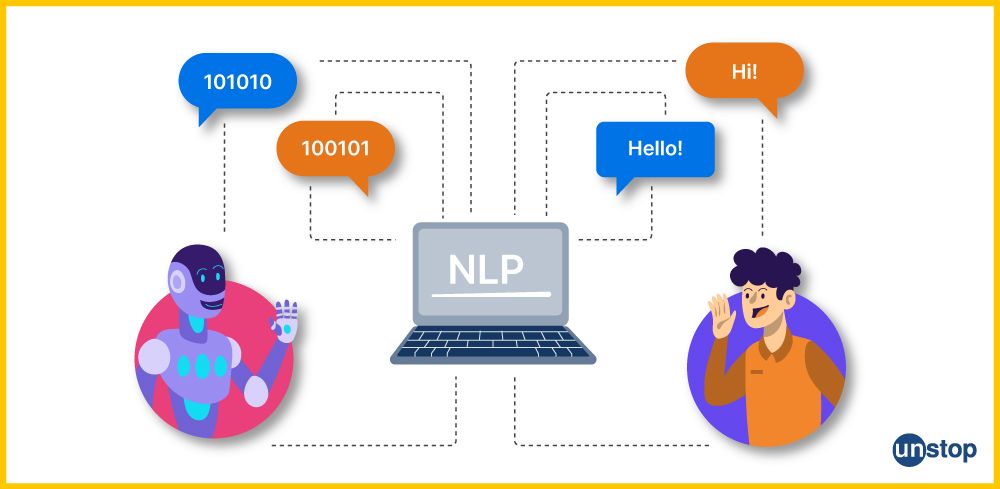

5. Natural Language Processing (NLP)

Aim

The aim of NLP projects is to develop systems that can process and understand human language, enabling various applications such as chatbots, sentiment analysis, translation, and information extraction.

Prerequisites

- Python Programming: Proficiency in Python is crucial as it's the dominant language for NLP due to its rich libraries.

- Linguistic Knowledge: A foundational understanding of linguistics and language structure can be beneficial.

- NLP Libraries: Familiarity with NLP libraries like NLTK, spaCy, Gensim, and Hugging Face Transformers (for advanced tasks) is essential.

- Machine Learning and Deep Learning: Knowledge of machine learning algorithms, neural networks, and their application in NLP tasks.

- Text Preprocessing: Skills in text preprocessing, which includes tokenization, stemming, and stop-word removal.

- Data Annotation: If working with supervised learning, understanding how to label and annotate text data for training.

- Ethical Considerations: Awareness of ethical concerns, privacy, and bias in NLP applications.

Working

1. Data Collection: Gather a dataset of text documents, which can be unstructured or structured text, depending on the NLP task. For example, if building a chatbot, collect dialogues; for sentiment analysis, gather text reviews.

2. Text Preprocessing: Clean and preprocess the text data to prepare it for analysis. This step involves removing special characters and stop words, then applying stemming or lemmatization to normalize the text.

3. Feature Extraction: Convert text into numerical features using techniques such as TF-IDF, Word Embeddings (Word2Vec, GloVe), or BERT embeddings for advanced NLP tasks.

4. NLP Algorithms and Models: Choose appropriate NLP algorithms or models depending on the project. Common approaches include:

- Text Classification: For sentiment analysis, topic classification, spam detection, etc.

- Named Entity Recognition (NER): Identifying entities (e.g., names of people, places, organizations) in text.

- Part-of-Speech (POS) Tagging: Labeling words in a sentence with their respective parts of speech.

- Sequence-to-Sequence Models: For machine translation, text summarization, and chatbots.

- Transformer Models: For advanced tasks like language translation, question-answering, and document summarization.

5. Model Training: Train the selected NLP model on the preprocessed dataset. This involves feeding the data through the model, comparing its predictions with the labels, and updating the model parameters (for deep learning models, using gradients computed by backpropagation).

6. Validation: Assess the model's performance using validation datasets to ensure it generalizes well and doesn't overfit.

7. Testing: Evaluate the model's performance on unseen data to validate its real-world applicability.

8. Deployment: Deploy the NLP system in an application or platform, whether it's a chatbot for customer support, a language translation service, or a text analytics tool.
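To make the preprocessing and feature extraction steps above concrete, here is a minimal sketch of stop-word removal followed by TF-IDF weighting, written in plain Python. It assumes the basic formula tf × log(N / df); the tiny stop-word list, the function names, and the sample reviews are illustrative assumptions. Library implementations commonly use smoothed variants, so their numbers will differ.

```python
import math
import re
from collections import Counter

# A tiny illustrative stop-word list; real projects use a fuller one.
STOP_WORDS = {"the", "a", "an", "is", "was", "and", "of", "to", "it", "this"}


def preprocess(text):
    """Lowercase the text, keep alphabetic tokens, and drop stop words."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def tf_idf(docs):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    tokenized = [preprocess(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1
        vectors.append(
            {term: (c / total) * math.log(n_docs / df[term]) for term, c in counts.items()}
        )
    return vectors


# Hypothetical review snippets (illustrative only).
reviews = [
    "The movie was great and the acting was great",
    "The movie was boring",
    "This is a great soundtrack",
]
vectors = tf_idf(reviews)
print(vectors[1])
```

In the second review, "boring" receives a higher weight than "movie" because it appears in only one document, which is the behavior that makes TF-IDF useful for tasks like sentiment analysis.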

Applications

NLP is widely applied in diverse areas, including chatbots, virtual assistants, sentiment analysis, information retrieval, machine translation, content recommendation, and much more.

Conclusion

NLP projects are instrumental in enabling machines to understand and work with human language, opening up numerous possibilities for automation and enhanced user experiences. NLP is a dynamic field that continues to evolve with the advent of transformer models and ongoing research in language understanding.

6. Detecting Violence in Videos

Aim

The aim of this project is to develop a system that can automatically detect instances of violence or violent behavior in videos, helping in content moderation, security, and public safety.

Prerequisites

- Computer Vision: Proficiency in computer vision techniques for video analysis and object detection.

- Machine Learning: Knowledge of machine learning algorithms, particularly for classification tasks.

- Deep Learning: Familiarity with deep learning frameworks like TensorFlow or PyTorch for training violence detection models.

- Video Preprocessing: Skills in video preprocessing, such as frame extraction, resizing, and frame normalization.

- Data Annotation: The ability to annotate training data, labeling frames or segments of videos as violent or non-violent.

- Ethical Considerations: Awareness of ethical concerns related to content moderation and privacy.

Working

- Data Collection: Gather a dataset of videos containing both violent and non-violent content, ensuring that it is representative of real-world scenarios.

- Data Preprocessing: Preprocess video data, extracting individual frames or segments and converting them into a suitable format for analysis. Normalize frames and adjust the resolution as needed.

- Feature Extraction: Extract features from video frames or segments that are informative for violence detection. This can include color histograms, optical flow, or deep features from pre-trained neural networks.

- Violence Detection Model: Choose and train a machine learning or deep learning model for violence detection. Common approaches include convolutional neural networks (CNNs) or 3D CNNs for spatiotemporal analysis.

- Video Analysis: Apply the trained model to video data for real-time or offline analysis. This involves classifying frames or segments as violent or non-violent.

- Thresholding: Set a suitable threshold for violence detection to determine what constitutes a violent event. This can vary based on the application, such as security or content moderation.

- Alerts and Actions: If the system detects violence, it can trigger alerts, notify authorities, or take predefined actions such as pausing or reporting the video.

- Real-time Processing: Ensure that the system can analyze video streams in real-time or process recorded videos efficiently.

- Testing and Validation: Rigorously test and validate the violence detection system using a wide range of videos, including those with different lighting conditions, camera angles, and violence scenarios.

- Ethical Considerations: Address privacy concerns and ethical considerations in content moderation, ensuring that the system respects privacy and is used responsibly.
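The thresholding and alerting steps above can be sketched as follows. This example assumes a trained model has already produced one violence probability per frame, and it flags only runs of consecutive frames that stay at or above the threshold, which reduces false alarms from single noisy frames. The function name, the default values, and the sample scores are illustrative assumptions.

```python
def flag_violent_segments(frame_scores, threshold=0.5, min_frames=3):
    """Return (start, end) frame index pairs where scores stay >= threshold
    for at least min_frames consecutive frames (end is inclusive)."""
    segments = []
    start = None  # index where the current above-threshold run began
    for i, score in enumerate(frame_scores):
        if score >= threshold:
            if start is None:
                start = i
        else:
            # The run (if any) ended at frame i - 1; keep it only if long enough.
            if start is not None and i - start >= min_frames:
                segments.append((start, i - 1))
            start = None
    # Handle a run that continues to the last frame.
    if start is not None and len(frame_scores) - start >= min_frames:
        segments.append((start, len(frame_scores) - 1))
    return segments


# Hypothetical per-frame probabilities from a classifier (illustrative only).
frame_scores = [0.1, 0.2, 0.9, 0.8, 0.7, 0.3, 0.6, 0.2, 0.9, 0.9, 0.95]
segments = flag_violent_segments(frame_scores, threshold=0.5, min_frames=3)
print(segments)
```

A security application might lower the threshold to catch more events, while a content moderation pipeline might raise `min_frames` to avoid flagging brief, ambiguous moments.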

Applications

Violence detection in videos has applications in security, content moderation for online platforms, and public safety. It can assist in identifying violent content, abuse, or criminal activities.

Conclusion

Developing a violence detection system for videos is a crucial project that contributes to content moderation and public safety. It helps in identifying and addressing violent incidents and harmful content in videos, making online and real-world spaces safer.


7. Earthquake Prediction Model

Aim

The aim of this project is to develop a predictive model that can forecast seismic events, specifically earthquakes, to provide early warnings and aid in disaster preparedness and response.

Prerequisites

- Seismology Knowledge: Understanding of seismology, geological processes, and the causes of earthquakes is crucial.

- Data Collection: Access to a comprehensive dataset of seismic activity, including historical earthquake data, ground motion sensors, and geological information.

- Machine Learning and Data Analysis: Proficiency in machine learning and data analysis tools for processing and analyzing seismic data.

- Geographical Information Systems (GIS): Knowledge of GIS software to analyze spatial and geographical aspects of seismic events.

- Statistical Techniques: Familiarity with statistical methods and time series analysis for identifying patterns in earthquake data.

Working

- Data Collection: Gather a vast dataset of historical earthquake records, including information on location, magnitude, depth, and time of occurrence. Additionally, collect data on geological features, fault lines, and ground motion measurements.

- Data Preprocessing: Preprocess the collected data to clean and normalize it. This includes removing outliers, filling missing values, and transforming data for analysis.

- Feature Engineering: Extract relevant features from the data, such as seismic activity trends, geological attributes, and temporal patterns.

- Machine Learning Models: Develop machine learning models to analyze the data and estimate the likelihood of earthquakes. Common approaches include regression models, time series models, and deep learning.

- Spatial Analysis: Utilize geographical information systems (GIS) to perform spatial analysis and identify high-risk areas based on geological and seismic data.

- Real-time Data: Incorporate real-time seismic data from ground motion sensors to enable the model to make near real-time predictions.

- Early Warning System: Design an early warning system that uses the predictive model to issue alerts or warnings to authorities and the public when seismic activity is detected.

- Model Validation: Rigorously validate the model's performance by comparing its predictions with historical earthquake occurrences. Assess the model's accuracy and effectiveness in predicting seismic events.

- Communication and Public Awareness: Develop a communication system to disseminate warnings and educate the public about earthquake preparedness and safety measures.

- Ethical Considerations: Address ethical considerations, public safety concerns, and privacy issues related to earthquake prediction and early warning systems.
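As an illustration of the feature engineering step above, the sketch below groups a catalog of past events into fixed-length time windows and summarizes each window by event count, maximum magnitude, and mean magnitude. It only prepares inputs for a model and does not predict anything by itself. The function name, the 30-day window, and the sample catalog are illustrative assumptions.

```python
from collections import defaultdict
from datetime import datetime


def window_features(events, window_days=30):
    """Group (timestamp, magnitude) events into fixed-length windows
    and return one summary dict per non-empty window, in time order."""
    events = sorted(events)
    if not events:
        return []
    origin = events[0][0]  # the earliest event defines the start of window 0
    buckets = defaultdict(list)
    for when, magnitude in events:
        index = (when - origin).days // window_days
        buckets[index].append(magnitude)
    return [
        {
            "window": index,
            "count": len(mags),
            "max_magnitude": max(mags),
            "mean_magnitude": sum(mags) / len(mags),
        }
        for index, mags in sorted(buckets.items())
    ]


# Hypothetical catalog entries (illustrative only, not real events).
catalog = [
    (datetime(2023, 1, 1), 3.1),
    (datetime(2023, 1, 10), 4.5),
    (datetime(2023, 1, 25), 2.8),
    (datetime(2023, 2, 5), 5.2),
    (datetime(2023, 3, 20), 3.9),
]
features = window_features(catalog, window_days=30)
for row in features:
    print(row)
```

Windowed summaries like these can be combined with geological attributes per region before being passed to a regression or time series model.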

Applications

An earthquake prediction model has the potential to save lives and reduce the impact of seismic events by providing early warnings to the public, emergency responders, and infrastructure operators.

Conclusion

Building an earthquake prediction model is a significant and challenging project with the potential to improve disaster preparedness and public safety. It involves the integration of seismology, data analysis, and machine learning to predict and mitigate the impact of seismic events.


8. Weather Forecasting Model

Aim

The aim of this project is to build a predictive system that can forecast weather conditions, including temperature, precipitation, wind, and atmospheric pressure, over a specific geographic area for a given time frame.

Prerequisites

- Meteorology Knowledge: Understanding of meteorological concepts, including atmospheric physics, thermodynamics, and climate patterns.

- Data Sources: Access to real-time and historical weather data, which may include temperature, humidity, wind speed, pressure, and satellite imagery.

- Numerical Weather Prediction (NWP): Familiarity with NWP models and tools used in meteorology for simulating weather conditions.

- Machine Learning: Proficiency in machine learning and data analysis to develop prediction models.

- Geospatial Data Analysis: Knowledge of geospatial data analysis for understanding local variations in weather.

- Statistical Analysis: Skills in statistical techniques for analyzing and modeling weather data.

Working

- Data Collection: Gather a vast dataset of historical weather records, including data from ground-based weather stations, satellites, and remote sensing equipment. Real-time data sources, like weather radars and weather balloons, are also essential for current conditions.

- Data Preprocessing: Preprocess the collected data by cleaning it, handling missing values, and transforming it into a suitable format for analysis. Feature engineering may involve aggregating data over time periods and geographical areas.

- Numerical Weather Prediction (NWP): Utilize NWP models to generate numerical simulations of atmospheric conditions over time. NWP models are based on mathematical equations that describe atmospheric processes.

- Data Assimilation: Combine real-world observations with NWP model output using data assimilation techniques to improve the accuracy of predictions.

- Machine Learning Models: Develop machine learning models for short-term and long-term weather forecasting. Common approaches include regression models for temperature prediction, time series models for precipitation, and deep learning for complex weather phenomena.

- Model Training: Train the chosen machine learning models on the preprocessed data, using historical data to develop accurate weather prediction models.

- Model Evaluation: Evaluate the model's performance using metrics like Mean Absolute Error (MAE), Mean Squared Error (MSE), and Root Mean Squared Error (RMSE) for each weather variable.

- Visualization: Create weather maps, time series graphs, and weather radar imagery to communicate forecasted conditions.

- Real-time Updates: Incorporate real-time data updates to keep the forecast current and responsive to changing weather conditions.

- Alerts and Notifications: Develop a system for issuing weather alerts and notifications to the public and authorities in the event of severe weather events.

- Ethical Considerations: Address ethical considerations related to the communication of weather forecasts and the implications for public safety and preparedness.
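The evaluation metrics named above (MAE, MSE, and RMSE) are simple to compute by hand. The sketch below does so for a short series of temperatures. The function name and the sample values are illustrative assumptions.

```python
import math


def forecast_errors(observed, predicted):
    """Return MAE, MSE, and RMSE for paired observed and predicted values."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("observed and predicted must be non-empty and equal in length")
    errors = [p - o for o, p in zip(observed, predicted)]
    mae = sum(abs(e) for e in errors) / len(errors)   # average absolute miss
    mse = sum(e * e for e in errors) / len(errors)    # penalizes large misses more
    return {"mae": mae, "mse": mse, "rmse": math.sqrt(mse)}


# Hypothetical daily temperatures in degrees Celsius (illustrative only).
observed_temps = [20.0, 22.0, 19.0, 25.0]
predicted_temps = [21.0, 20.0, 19.0, 28.0]
errors = forecast_errors(observed_temps, predicted_temps)
print(errors)
```

MAE and RMSE are in the same unit as the forecast variable, which makes them easier to communicate than MSE. RMSE is never smaller than MAE, and a large gap between the two suggests a few large misses.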

Applications

Weather forecasting is used in agriculture, aviation, disaster management, and everyday life for planning outdoor activities and making informed decisions.

Conclusion

Building a weather forecasting system is a complex project that involves integrating meteorological knowledge, data analysis, and numerical weather prediction. It has profound implications for public safety, agriculture, and various industries that rely on accurate weather information.


9. Music Recommendation System

Aim

The aim of this project is to develop a music recommendation app that analyzes user preferences and behavior to offer personalized music suggestions, enhancing the user's listening experience.

Prerequisites

- Programming: Proficiency in programming languages like Python, Java, or Swift, depending on the platform for app development.

- Data Collection: Access to music data, including metadata, user listening history, and music features (e.g., genre, tempo, mood).

- Machine Learning: Knowledge of machine learning algorithms, recommendation systems, and collaborative filtering techniques.

- User Interface Design: Skills in designing user-friendly interfaces and user experiences.

- App Development: Familiarity with app development frameworks, such as Android Studio or iOS development tools.

- Database Management: Understanding of databases for storing and querying user data and music metadata.

- Ethical Considerations: Awareness of privacy and data security concerns related to user data.

Working

- Data Collection: Gather a dataset of music metadata, user listening history, user preferences, and music features. This data is crucial for training recommendation models.

- Data Preprocessing: Preprocess the collected data, including data cleaning, feature engineering, and user behavior analysis. Prepare data for model training and analysis.

- Music Feature Extraction: Extract relevant features from music tracks, such as genre, tempo, mood, and artist information. These features help the recommendation system understand music characteristics.

- Machine Learning Models: Develop recommendation models based on user preferences and music features. Common approaches include collaborative filtering, content-based filtering, and hybrid recommendation systems.

- User Profiling: Create user profiles by analyzing listening history, favorite genres, and music preferences. This information is used to personalize recommendations.

- Recommendation Engine: Implement a recommendation engine that combines user profiles and music features to suggest songs, albums, or playlists tailored to each user.

- User Interface: Design a user-friendly interface for the app, allowing users to input their preferences, browse recommended music, and create playlists.

- Real-time Updates: Ensure that the app continuously updates recommendations based on users' listening habits and feedback.

- Testing and Evaluation: Evaluate the recommendation system's performance by collecting user feedback, assessing click-through rates, and measuring user satisfaction.

- Deployment: Deploy the music recommendation app on the chosen platform, whether it's a mobile app for Android or iOS, a web app, or a desktop application.

- Ethical Considerations: Address ethical considerations, privacy, and data security in user data collection and recommendations, ensuring that user data is handled responsibly.
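To show how collaborative filtering from the steps above can work, here is a minimal user-based sketch. It compares users by the cosine similarity of their play counts and ranks tracks the target user has not heard, weighted by how similar each other listener is. The user names, track names, play counts, and function names are illustrative assumptions, and a production system would need far more data and a more robust model.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two sparse {item: value} dicts."""
    shared = set(a) & set(b)
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(target, plays, top_n=2):
    """Rank tracks the target user has not played, weighted by similar users' play counts."""
    scores = {}
    for other, history in plays.items():
        if other == target:
            continue
        sim = cosine_similarity(plays[target], history)
        if sim <= 0:
            continue  # users with no overlap contribute nothing
        for track, count in history.items():
            if track not in plays[target]:
                scores[track] = scores.get(track, 0.0) + sim * count
    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [track for track, _ in ranked[:top_n]]


# Hypothetical play counts per user (illustrative only).
plays = {
    "alice": {"song_a": 5, "song_b": 3},
    "bob": {"song_a": 4, "song_b": 2, "song_c": 5},
    "carol": {"song_b": 1, "song_d": 4},
}
suggestions = recommend("alice", plays)
print(suggestions)
```

Here `song_c` ranks first for `alice` because `bob`, whose listening history overlaps most with hers, has played it often. A hybrid system would combine this score with content features such as genre or tempo.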

Applications

Music recommendation apps find applications in music streaming services, online radio, and personalized playlists, enhancing the music listening experience for users.

Conclusion

Creating a music recommendation app is an engaging project that combines user preferences, data analysis, and machine learning to deliver a personalized music listening experience. It enhances user engagement and satisfaction with music services.


10. Lane Line Detection

Aim

The aim of this project is to create a computer vision system that can detect and track lane lines on road images or videos to aid in lane-keeping and autonomous driving tasks.

Prerequisites

- Computer Vision: Understanding of computer vision concepts, including image processing, edge detection, and image segmentation.

- Programming: Proficiency in languages like Python and the use of libraries like OpenCV for image processing.

- Machine Learning: Knowledge of lane line detection techniques, from classical methods such as the Hough Transform to machine learning models such as Convolutional Neural Networks (CNNs).

- Sensor Data: Access to data from cameras or sensors mounted on vehicles to capture road images or videos.

- Geospatial Data Analysis (for advanced lane detection): Familiarity with geospatial data analysis to consider the road's geometry and real-world coordinates.

- Ethical Considerations: Awareness of ethical concerns related to the use of lane detection in autonomous vehicles and driver-assist systems.

Working

1. Data Collection: Gather a dataset of road images or videos, which can be recorded using cameras mounted on vehicles. These data should include examples of different road and weather conditions.

2. Image Preprocessing: Preprocess the images or video frames by applying techniques like resizing, color conversion, and noise reduction.

3. Lane Detection Techniques:

- Edge Detection: Use edge detection techniques like the Canny edge detector to identify the edges of lane lines.

- Hough Transform: Apply the Hough Transform to identify lines in the image, including the lane lines.

- Deep Learning (Optional): Implement deep learning models, such as Convolutional Neural Networks (CNNs), for lane line detection. This is particularly effective for complex road conditions and geospatial considerations.

4. Lane Tracking: Implement tracking algorithms to maintain consistency in lane detection across consecutive frames in videos.

5. Lane Drawing: Overlay detected lane lines onto the original image or video frames to visually represent the lane lines.

6. Real-time Processing: Ensure that the system can process images or video frames in real-time for applications like autonomous driving.

7. Testing and Validation: Rigorously test the system's performance on various road conditions and validate its accuracy in detecting and tracking lane lines.

8. Deployment: Deploy the lane line detection system in autonomous vehicles or driver-assist systems, where it can be used for lane-keeping and lane departure warning.

9. Ethical Considerations: Address ethical considerations related to the use of lane line detection, particularly in autonomous driving, to ensure safety and responsible use.
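To illustrate the idea behind the Hough Transform step above, here is a minimal pure-Python sketch of the voting procedure. Each edge pixel votes for every line that could pass through it, using the normal form rho = x·cos(theta) + y·sin(theta), and the most-voted (rho, theta) pair is taken as the detected line. The function name and the synthetic edge pixels are illustrative assumptions. In practice, a project would typically run OpenCV's `cv2.Canny` for edge detection and `cv2.HoughLinesP` for line detection instead of writing this by hand.

```python
import math
from collections import Counter


def hough_lines(edge_points, theta_steps=180, top_n=1):
    """Vote in (rho, theta) space and return the top_n most-voted lines.

    edge_points: iterable of (x, y) pixel coordinates marked as edges.
    Each result is (rho, theta_degrees, votes), where
    rho = x*cos(theta) + y*sin(theta), rounded to the nearest pixel.
    """
    votes = Counter()
    for x, y in edge_points:
        for step in range(theta_steps):
            theta = math.pi * step / theta_steps
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(rho, step)] += 1
    return [
        (rho, step * 180 / theta_steps, count)
        for (rho, step), count in votes.most_common(top_n)
    ]


# Synthetic edge pixels: a vertical line at x = 5 plus two noise pixels.
edges = [(5, y) for y in range(100)] + [(1, 3), (12, 7)]
best_rho, best_theta, best_votes = hough_lines(edges)[0]
print(best_rho, best_theta, best_votes)
```

The two noise pixels barely affect the result because they do not line up with many other points, which is why the Hough Transform copes well with the stray edges that Canny detection tends to produce on real road images.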

Applications

Lane line detection has applications in autonomous vehicles, driver-assist systems, and lane-keeping features, improving road safety and driver comfort.

Conclusion

Lane line detection is a practical computer vision project for autonomous driving that contributes to the safety and efficiency of vehicles on the road. It combines image processing, detection algorithms, and real-time implementation.


Conclusion

AI projects offer a fantastic way to learn, innovate, and contribute to the ever-expanding world of artificial intelligence. Beginners can start with foundational projects, gaining experience with the basics, while advanced enthusiasts can explore cutting-edge applications. Regardless of your level, embarking on an AI project allows you to stay at the forefront of technological advancement and make a tangible impact in this exciting field. So, choose a project that suits your expertise and interests, and let your AI journey begin.


As a biotechnologist-turned-writer, I love turning complex ideas into meaningful stories that inform and inspire. Outside of writing, I enjoy cooking, reading, and travelling, each giving me fresh perspectives and inspiration for my work.
