- What Is Cache Memory In Computer?

- Characteristics Of Cache Memory In Computer

- Types Of Cache Memory In Computer

- How Does Cache Memory Work?

- Cache Vs RAM

- Cache Vs Virtual Memory

- Levels Of Cache Memory In Computer Systems

- Cache Mapping

- Conclusion

- Frequently Asked Questions

What Is Cache Memory In Computer? Levels, Characteristics And More

In the world of computing, efficiency is everything. Modern processors are incredibly fast, but they are often held back by the speed of memory access. This is where cache memory steps in: a small, high-speed memory located close to the CPU that significantly boosts a computer's performance. Acting as a buffer between the CPU and the slower main memory (RAM), cache memory stores frequently accessed data and instructions so that they are readily available when the processor needs them.

By reducing the time the CPU spends waiting for data, cache memory plays a crucial role in enhancing the overall speed and efficiency of computer systems. In this article, we’ll explore what cache memory is, how it works, and why it’s an essential part of modern computing.

What Is Cache Memory In Computer?

Cache memory is a very high-speed memory that helps the CPU keep pace with its data accesses and speeds up processing. Per byte, cache is costlier than main memory and disk storage but more economical than CPU registers. Its main function is to act as a buffer between RAM and the CPU: it holds frequently requested data and instructions so that they are immediately available to the CPU when needed. The word 'cache' itself refers to a hidden store of items, or the place where they are hidden.

Cache memory is temporary memory, commonly called 'CPU cache memory' or simply 'CPU cache'. In modern computers, it is built into the processor (CPU) chip itself for faster access to data.

Did you know? The term 'cache' in cache memory comes from the French word 'cacher,' which means 'to hide' or 'to conceal.' In computing, cache memory is named so because it works invisibly to programs, hiding the slowness of main memory (RAM) by keeping frequently accessed data close to the processor.

Characteristics Of Cache Memory In Computer

Some of the important characteristics that make cache memory an essential component in modern computing are as follows:

- High Speed: Cache memory operates at a much higher speed than RAM, allowing the CPU to access data faster. It reduces latency by providing quick access to frequently used instructions and data.

- Small Size: Cache memory is much smaller than main memory (RAM). Typical sizes range from tens of KBs (L1) to several MBs (L3). Its size is limited by its high cost and by the need for extremely fast access.

- Proximity to CPU: Cache memory is located either directly on the CPU chip or very close to it. This proximity reduces the time the CPU takes to fetch data, making cache memory much faster than RAM.

- Levels of Cache: Modern CPUs have multiple levels of cache, namely L1, L2, and L3, each with different speeds and sizes. L1 is the smallest and fastest, while L3 is larger but slower.

- Volatility: Cache memory is volatile, meaning that its contents are lost when the computer is powered off. It only stores temporary data that the CPU accesses frequently during operation.

- Expensive: Cache memory is more expensive than RAM and secondary storage (e.g., SSDs or HDDs), which is why it is used in limited amounts. The higher cost comes from the technology used: cache is built from SRAM (static RAM), which needs several transistors per bit, whereas main memory uses denser, cheaper DRAM.

- Automatic Management: Cache memory is managed automatically by the CPU hardware, without intervention from programs. The CPU checks the cache first and goes to slower main memory (RAM) only when the data is not there; data fetched from RAM is then copied into the cache.

- Temporary Storage: Cache is a temporary buffer that holds copies of frequently used data or instructions. It doesn't store data permanently; instead, it dynamically updates its contents based on what the CPU requires.

- Data Locality: Cache memory takes advantage of the principle of locality. Recently accessed data is likely to be used again soon (temporal locality), and data near a recently accessed address is likely to be used next (spatial locality). Caches therefore keep recently used data and load memory in whole blocks (cache lines) rather than single bytes.

- Direct Mapped, Fully Associative, and Set-Associative Mapping: Cache memory can be organized using different mapping techniques (direct mapping, fully associative mapping, or set-associative mapping), which determine where a block of data may be stored in the cache and how it is found again.
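The effect of locality can be seen in a tiny simulation. The Python sketch below is a toy model, not how real hardware is built: it assumes 64-byte cache lines and a hypothetical 8-line cache with least-recently-used (LRU) replacement, and counts how many accesses in a list of byte addresses are hits.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line (assumed; a common size on current CPUs)
NUM_LINES = 8   # hypothetical tiny cache: 8 lines x 64 bytes = 512 bytes

def count_hits(addresses, line_size=LINE_SIZE, num_lines=NUM_LINES):
    """Replay byte addresses through a toy fully associative LRU cache; return the hit count."""
    cache = OrderedDict()  # line number -> None, least recently used first
    hits = 0
    for addr in addresses:
        line = addr // line_size           # all bytes in the same line share one entry
        if line in cache:
            hits += 1
            cache.move_to_end(line)        # mark as most recently used
        else:
            if len(cache) == num_lines:
                cache.popitem(last=False)  # evict the least recently used line
            cache[line] = None             # miss: the whole line is brought in
    return hits

sequential = [i * 4 for i in range(256)]   # 256 consecutive 4-byte integers (spatial locality)
strided = [i * 64 for i in range(256)]     # one integer per 64-byte line, never revisited
repeated = [0, 64, 128] * 100              # the same three lines reused (temporal locality)

print(count_hits(sequential), count_hits(strided), count_hits(repeated))  # 240 0 297
```

Sequential access misses only once per 64-byte line (16 misses for 256 integers), strided access gets no reuse at all, and the repeated pattern misses only on the first touch of each line.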

Types Of Cache Memory In Computer

Cache memory is typically divided into the following categories:

- Primary Cache (L1 Cache): This is the smallest and fastest cache, located directly on the processor chip. Its proximity to the CPU ensures extremely quick access, with speeds comparable to those of processor registers.

- Secondary Cache (L2 Cache): Positioned between the primary cache and the system's main memory, the L2 cache provides an additional layer of storage for frequently accessed data. While it is often integrated into the processor chip, in some cases it may reside on a separate chip. L2 cache is larger than L1 but slightly slower. The different levels of cache (L1, L2, and L3) are discussed in more detail later in this article.

How Does Cache Memory Work?

When the CPU needs data, it first checks the cache memory. Because system RAM is slower and located farther from the CPU, serving frequently used data from the small, high-speed cache reduces the latency of memory accesses. If the requested data is found in the cache, this is known as a cache hit.

The formula for the hit ratio is:

$$\text{Hit ratio} = \frac{\text{hits}}{\text{hits} + \text{misses}} = \frac{\text{number of hits}}{\text{total number of accesses}}$$

A higher hit ratio means the CPU retrieves data more quickly, improving overall system efficiency.

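However, cache memory is much smaller than system RAM and holds data only temporarily, so it will not always contain the required data. When the cache does not contain the needed data, this is called a cache miss: the CPU must fetch the data from the next cache level or from RAM, which is slower, and usually keeps a copy in the cache for later accesses. If the data is not in RAM either, the operating system has to load it from storage such as the hard drive, which is slower still.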
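The hit/miss cycle and the hit ratio formula can be illustrated with a short Python sketch. It is a simplified model under stated assumptions: the cache holds a fixed number of whole blocks, and when it is full it evicts the oldest block (first-in, first-out), a policy chosen only for brevity. The trace of block addresses is made up for illustration.

```python
from collections import deque

def run_trace(trace, capacity):
    """Replay block addresses through a small cache; return (hits, misses).

    Eviction is first-in, first-out (FIFO), an assumption chosen for brevity.
    """
    cache = set()      # block addresses currently held in the cache
    order = deque()    # insertion order, used to pick the block to evict
    hits = misses = 0
    for block in trace:
        if block in cache:              # cache hit: served directly from the cache
            hits += 1
        else:                           # cache miss: fetch from RAM, then keep a copy
            misses += 1
            if len(cache) == capacity:
                cache.discard(order.popleft())
            cache.add(block)
            order.append(block)
    return hits, misses

def hit_ratio(hits, misses):
    """Hit ratio = hits / (hits + misses)."""
    total = hits + misses
    if total == 0:
        raise ValueError("no memory accesses recorded")
    return hits / total

hits, misses = run_trace([1, 2, 3, 1, 2, 4, 1, 5, 1, 2], capacity=3)
print(hits, misses, hit_ratio(hits, misses))  # 3 7 0.3
```

With only three slots, block 1 is evicted just before it is needed again, which is why this trace reaches a hit ratio of only 0.3; a larger cache or a smarter replacement policy would raise it.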

Cache Vs RAM

Cache and RAM (Random Access Memory) are both types of volatile memory that play crucial roles in a computer's performance. RAM stores data and instructions that are actively being used by the CPU, while cache is a smaller, faster type of memory that sits closer to the CPU. Cache speeds up access to frequently used data by acting as a buffer between the CPU and RAM. Although they serve related purposes, they differ in size, speed, and function, and these differences are essential for optimizing system performance.

| Aspect | Cache | RAM |
|---|---|---|
| Speed | Extremely fast (closer to CPU speeds) | Slower than cache, but faster than storage |
| Location | Located inside or very close to the CPU | Separate from the CPU (on the motherboard) |
| Size | Small (typically in KBs to a few MBs) | Larger (in GBs) |
| Cost | More expensive per unit of storage | Less expensive compared to cache |
| Purpose | Temporarily stores frequently accessed data for quick retrieval | Stores active programs and data for CPU processing |
| Access Time | Very low latency | Higher latency compared to cache |
| Volatility | Volatile (data lost when power is off) | Volatile (data lost when power is off) |
| Management | Managed by hardware automatically (CPU) | Managed by the operating system |

Cache Vs Virtual Memory

Virtual memory is not a physical memory unit but a memory-management technique. It gives programs a logical address space that can be larger than the main memory installed in the system, so programs bigger than main memory can still run, with the parts that do not fit kept on secondary storage. Virtual memory is therefore much larger than cache memory. Unlike cache, it is not controlled purely by hardware; it is managed by the operating system (OS), with help from the CPU. It requires a mapping structure (a page table) to translate each virtual address into a physical address.

| Criteria | Cache Memory | Virtual Memory |
|---|---|---|
| Definition | A small, high-speed memory located on the CPU that stores frequently accessed data and instructions for quick access. | A memory-management technique that extends the computer's physical memory by using a portion of the hard drive as overflow storage for data that cannot fit into RAM. |
| Size | Smaller than main memory (RAM). | Larger than both cache memory and main memory (RAM). |
| Speed | Extremely fast access time, as it is located on the CPU. | Much slower than cache memory for data that has been moved out to the hard drive. |
| Purpose | To reduce the average time taken to access data and instructions by storing frequently used data closer to the CPU. | To provide additional memory space when the physical RAM is insufficient to hold all the running programs and data. |
| Volatility | Volatile memory, meaning its contents are lost when the power is turned off. | Not a memory device in itself: it combines volatile RAM with space on the hard drive, and its contents are not preserved across a restart. |
| Cost | Expensive, because it is built from fast SRAM. | Relatively cheap compared to cache memory, as it uses the hard drive for storage. |
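The mapping structure that virtual memory relies on can be sketched as a lookup from virtual page numbers to physical frame numbers. The Python example below is a simplified model: the 4 KB page size is a common choice assumed here, and the `page_table` contents and the `PageFault` exception are hypothetical. Real systems use multi-level page tables walked by the CPU's memory management unit.

```python
PAGE_SIZE = 4096  # 4 KB pages (a common size, assumed here)

# Hypothetical page table: virtual page number -> physical frame number.
# Pages missing from the table are not in RAM (e.g. moved out to disk).
page_table = {0: 5, 1: 9, 2: 1}

class PageFault(Exception):
    """Raised when the requested virtual page is not resident in physical memory."""

def translate(virtual_address: int) -> int:
    """Translate a virtual address into a physical address using the page table."""
    vpn, offset = divmod(virtual_address, PAGE_SIZE)  # page number and offset within the page
    if vpn not in page_table:
        # A real OS would now load the page from disk and update the table.
        raise PageFault(f"virtual page {vpn} is not in RAM")
    frame = page_table[vpn]
    return frame * PAGE_SIZE + offset                  # same offset inside the physical frame

print(hex(translate(0x1234)))  # page 1, offset 0x234 -> frame 9 -> 0x9234
```

The offset within the page is copied unchanged; only the page number is translated, which is why pages can be scattered anywhere in physical memory.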

Levels Of Cache Memory In Computer Systems

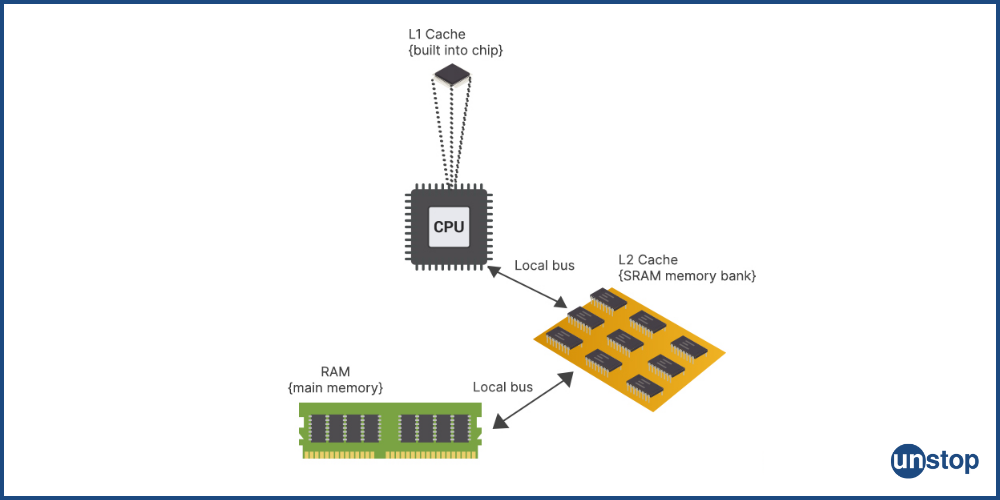

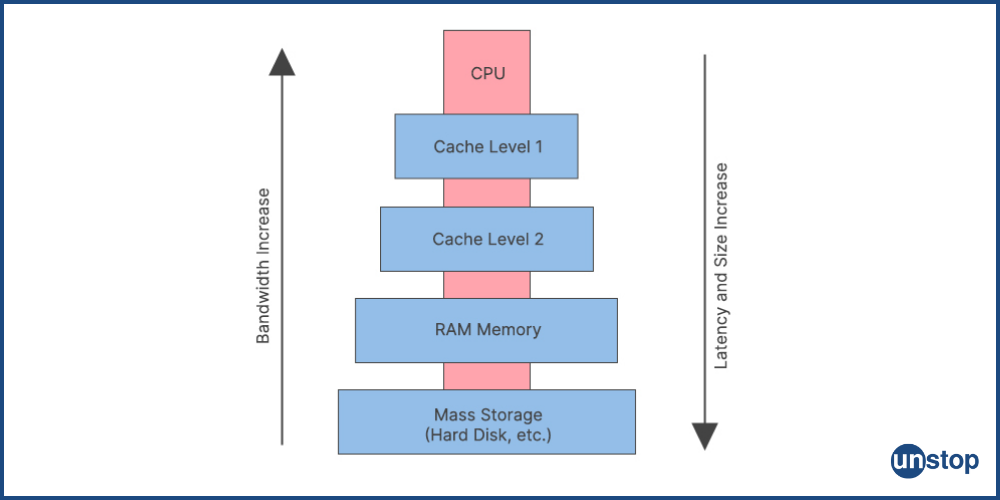

Modern computer systems often have multiple levels of cache memory, each varying in size, proximity to the processor cores, and processing speed. These levels of cache memory are typically referred to as L1, L2, and L3 cache, and each plays a crucial role in enhancing system performance.

- Level 1 (L1): L1 cache is built directly into each CPU core and is the fastest type of cache memory. It is usually split into two sections: the data cache (for storing data) and the instruction cache (for storing instructions). Because it runs at the same clock speed as the CPU core and can be read in just a few cycles, L1 cache is the first place the processor checks when looking for data. In modern CPUs, each of the two L1 caches is typically a few tens of KB (for example, 32 KB) per core.

- Level 2 (L2): L2 cache is larger but slightly slower than L1. In modern processors it sits on the CPU chip, usually private to each core, although some older architectures placed it on a separate chip. L2 cache acts as a secondary buffer between L1 and the rest of the memory hierarchy (L3 and main memory). Its size usually ranges from 256 KB to about 2 MB per core in modern systems.

- Level 3 (L3): L3 cache is much larger and slower than L1 and L2. It differs significantly in that it is usually shared among all the cores of a multi-core processor, whereas L1 and L2 are typically private to each core. This shared design lets cores exchange data through the L3 instead of going out to main memory. L3 cache sizes are typically 2 MB to several MBs per core.
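The order in which the levels are consulted can be sketched as follows. This Python example is a toy single-core model under stated assumptions: each level is a fully associative LRU store, the capacities (2, 4, and 8 blocks) are hypothetical and far smaller than real caches, and a block fetched from RAM is copied into every level. Real processors differ in details such as inclusion policy and how the shared L3 serves multiple cores.

```python
from collections import OrderedDict

class CacheLevel:
    """One cache level, modeled as a fully associative LRU store of block addresses."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.blocks = OrderedDict()  # block -> None, least recently used first

    def lookup(self, block):
        if block in self.blocks:
            self.blocks.move_to_end(block)  # hit: mark as most recently used
            return True
        return False

    def fill(self, block):
        self.blocks[block] = None
        self.blocks.move_to_end(block)
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block

def access(levels, block):
    """Check L1, then L2, then L3; return the name of the level that served the block."""
    for i, level in enumerate(levels):
        if level.lookup(block):
            for upper in levels[:i]:  # copy into the faster levels that missed
                upper.fill(block)
            return level.name
    for level in levels:              # missed everywhere: fetched from RAM
        level.fill(block)
    return "RAM"

levels = [CacheLevel("L1", 2), CacheLevel("L2", 4), CacheLevel("L3", 8)]
print([access(levels, b) for b in [1, 2, 3, 1, 4, 5, 1, 2, 2]])
# ['RAM', 'RAM', 'RAM', 'L2', 'RAM', 'RAM', 'L2', 'L3', 'L1']
```

Blocks pushed out of the tiny L1 are still found in the larger L2 or L3, which is far cheaper than another trip to RAM.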

Cache Mapping

As stated earlier, cache memory is incredibly fast to read from. However, there is a bottleneck: data must first be located before it can be read from the cache. The CPU knows the RAM address of the data or instruction it wants to read. It must check whether the cache holds a copy of that RAM location (each cache block stores a tag identifying which RAM block it holds) and, if so, read the data or instruction stored with it.

Data or instructions from RAM can be mapped into the cache in a variety of ways, and the choice directly affects how quickly they can be found. There is a trade-off: schemes that reduce the time spent searching also reduce the likelihood of a cache hit, while schemes that maximize the chance of a cache hit increase the search time.

The common cache mapping methods are:

1. Direct Mapping

With a direct-mapped cache, a given block of RAM data can be stored in only one location in cache memory. This means the CPU needs to check just one cache location to determine whether the data or instruction it seeks is present, so lookups are fast. The disadvantage is that many RAM blocks map to the same cache location, so blocks that are in use at the same time can repeatedly evict one another (conflict misses), which lowers the hit rate.
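A direct-mapped lookup splits each address into a tag, an index, and an offset. The Python sketch below models this with a hypothetical cache of 4 lines of 16 bytes each (sizes chosen only to keep the numbers small) and shows two addresses that fall in the same line evicting each other.

```python
BLOCK_SIZE = 16  # bytes per cache block (hypothetical)
NUM_LINES = 4    # number of lines in the cache (hypothetical)

def split_address(addr):
    """Split a byte address into (tag, index, offset) for a direct-mapped cache."""
    offset = addr % BLOCK_SIZE          # byte position inside the block
    block_number = addr // BLOCK_SIZE
    index = block_number % NUM_LINES    # the ONE line this block may occupy
    tag = block_number // NUM_LINES     # identifies which block currently sits in that line
    return tag, index, offset

def simulate_direct_mapped(addresses):
    """Return the number of hits for a sequence of byte addresses."""
    lines = [None] * NUM_LINES          # each entry holds the tag stored in that line
    hits = 0
    for addr in addresses:
        tag, index, _ = split_address(addr)
        if lines[index] == tag:         # only one place to check
            hits += 1
        else:
            lines[index] = tag          # miss: replace whatever was there
    return hits

# Addresses 0 and 64 are different blocks but share index 0, so they keep evicting each other.
trace = [0, 64, 0, 64, 0, 64]
print(split_address(64), simulate_direct_mapped(trace))  # (1, 0, 0) 0
```

Even though the cache has three other empty lines, every access in this trace misses, which is exactly the conflict problem described above.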

2. Associative Mapping

This is the polar opposite of direct mapping and is also known as fully associative mapping. With associative mapping, any block of data or instructions from RAM can be placed in any cache block. That means the CPU must check every block in the cache to see whether it contains the information it seeks (hardware compares all the tags in parallel, which is fast but costly in circuitry and power), but the chances of finding it are significantly higher.
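A fully associative cache can be modeled as a set of blocks with a replacement policy. In the Python sketch below, the cache size (4 lines of 16 bytes) is hypothetical and least-recently-used (LRU) eviction is an assumption; real caches may use other policies. The trace alternates between addresses 0 and 64, which a 4-line direct-mapped cache of the same block size would place in the same line.

```python
from collections import OrderedDict

BLOCK_SIZE = 16  # bytes per cache block (hypothetical)
NUM_LINES = 4    # number of lines in the cache (hypothetical)

def simulate_fully_associative(addresses):
    """Any block may go in any line; evict the least recently used block when full."""
    lines = OrderedDict()  # block number -> None, least recently used first
    hits = 0
    for addr in addresses:
        block = addr // BLOCK_SIZE      # the whole block number serves as the tag
        if block in lines:              # hardware would compare against every line's tag
            hits += 1
            lines.move_to_end(block)
        else:
            if len(lines) == NUM_LINES:
                lines.popitem(last=False)
            lines[block] = None
    return hits

trace = [0, 64, 0, 64, 0, 64]
print(simulate_fully_associative(trace))  # 4: only the first access to each block misses
```

Note that the Python dictionary finds a block by hashing; the sketch captures where blocks may go, not the cost of the parallel tag comparison in hardware.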

3. Set-Associative Mapping

Set-associative mapping is a compromise between the two methods. The cache is divided into sets, and each block of RAM maps to exactly one set but can be placed in any of the blocks (ways) within that set. With a 2-way set-associative scheme, a RAM block can be placed in one of two locations in cache memory; with an 8-way scheme, it can be placed in any of eight locations. Because the CPU has to check two places instead of just one, a 2-way cache performs twice as many tag comparisons as a direct-mapped cache (hardware usually does them in parallel), but there is a significantly higher likelihood of a cache hit.
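Set-associative mapping can be expressed as one parameterized model: with one way per set it behaves like direct mapping, and with a single set containing every line it behaves like fully associative mapping. The Python sketch below assumes a hypothetical 4-line cache with 16-byte blocks and LRU replacement within each set, and runs the same made-up trace of three conflicting addresses through 1-, 2-, and 4-way configurations.

```python
from collections import OrderedDict

BLOCK_SIZE = 16  # bytes per cache block (hypothetical)
NUM_LINES = 4    # total lines in the cache (hypothetical)

def simulate_set_associative(addresses, ways):
    """N-way set-associative cache with LRU replacement inside each set.

    ways=1 behaves like direct mapping; ways=NUM_LINES is fully associative.
    """
    num_sets = NUM_LINES // ways
    sets = [OrderedDict() for _ in range(num_sets)]  # each set holds up to `ways` tags
    hits = 0
    for addr in addresses:
        block = addr // BLOCK_SIZE
        index, tag = block % num_sets, block // num_sets  # the index fixes the set
        s = sets[index]
        if tag in s:                    # check only the `ways` lines of this one set
            hits += 1
            s.move_to_end(tag)
        else:
            if len(s) == ways:
                s.popitem(last=False)   # evict the least recently used line in the set
            s[tag] = None
    return hits

trace = [0, 64, 0, 64, 128, 0, 64, 128]
for ways in (1, 2, 4):
    print(ways, simulate_set_associative(trace, ways))
# 1 0
# 2 2
# 4 5
```

All three addresses compete for the same set here, so giving each set more ways steadily raises the number of hits, at the price of more tag comparisons per lookup.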


Conclusion

Cache memory significantly enhances a computer's speed and performance by allowing much faster access to frequently used data than RAM or local disk storage. A larger cache (for example, 8 MB rather than 6 MB) can improve performance, especially for tasks that repeatedly access large amounts of data. However, more cache does not translate into proportionally better performance; the benefit depends on the system architecture and the workload.

After the CPU registers, cache is the fastest memory in a computer, but the efficiency and speed of the CPU also depend on other factors, such as how effectively programs use the cache. Lastly, increasing cache size does not change the CPU clock speed, which is a separate measure of how fast the processor executes instructions.

Frequently Asked Questions

1. What is cache memory and why is it important?

Cache memory is a small, high-speed memory located on the processor chip or between the processor and main memory. It stores frequently accessed data and instructions, allowing the processor to quickly retrieve them when needed. Cache memory plays a crucial role in improving the overall performance and speed of a computer system.

2. How does cache memory work?

Cache memory relies on the principle of locality: programs tend to reuse the same data and instructions within a short time (temporal locality) and to access data stored near recently used data (spatial locality). When the processor needs to fetch data, it first checks the cache. If the required data is found in the cache, it is called a cache hit, and the processor can access it quickly. If the data is not present in the cache, it is called a cache miss, and the processor has to retrieve it from the slower main memory.

3. What are the different levels of cache memory?

Cache memory is organized into multiple levels, commonly referred to as L1, L2, and L3 caches. L1 cache is the smallest but fastest cache located closest to the processor. L2 cache is larger and slightly slower than L1 cache, while L3 cache is even larger but slower than both L1 and L2 caches. The hierarchy allows for a trade-off between speed and capacity, ensuring that frequently accessed data is stored in faster caches.

4. How does cache memory impact system performance?

Cache memory significantly impacts system performance by reducing the time it takes for the CPU to access data. With data stored in cache, the CPU can fetch it quickly without waiting for slower main memory access. This leads to faster execution of instructions and overall improved system responsiveness. A larger cache size and higher cache hit rate generally result in better performance.

5. Can cache memory be upgraded or expanded?

In modern computers, generally no. CPU cache is built into the processor chip, so the only way to get more cache is to replace the processor with a model that has more. Some older systems, such as many 1990s motherboards, placed cache on separate chips or modules that could be upgraded, but this is no longer the case. Check the specifications of your processor to see how much cache it has.


As a biotechnologist-turned-writer, I love turning complex ideas into meaningful stories that inform and inspire. Outside of writing, I enjoy cooking, reading, and travelling, each giving me fresh perspectives and inspiration for my work.
