- Advantages Of Manual Testing

- Manual Testing: Best Practices

- Manual Testing Interview Questions

Frequently Asked 50+ Manual Testing Interview Questions 2023

Manual testing is an age-old technique used in software development to ensure the quality and functionality of a product. It involves writing test cases, running them manually against the system under test, and analyzing results. Manual testing requires great attention to detail, patience, and careful observation skills as testers need to compare expected outcomes with actual behaviour. It remains an effective way to find faults, and to check whether an application is fit for its intended use, before the final product is released to the market.

Manual testing is a process that consists of developing tests by consulting documents such as requirement specifications, design documents, etc., executing those tests on different platforms (browser/device combinations), and reporting any defects found via bug tracking tools like Jira or Bugzilla during this process.

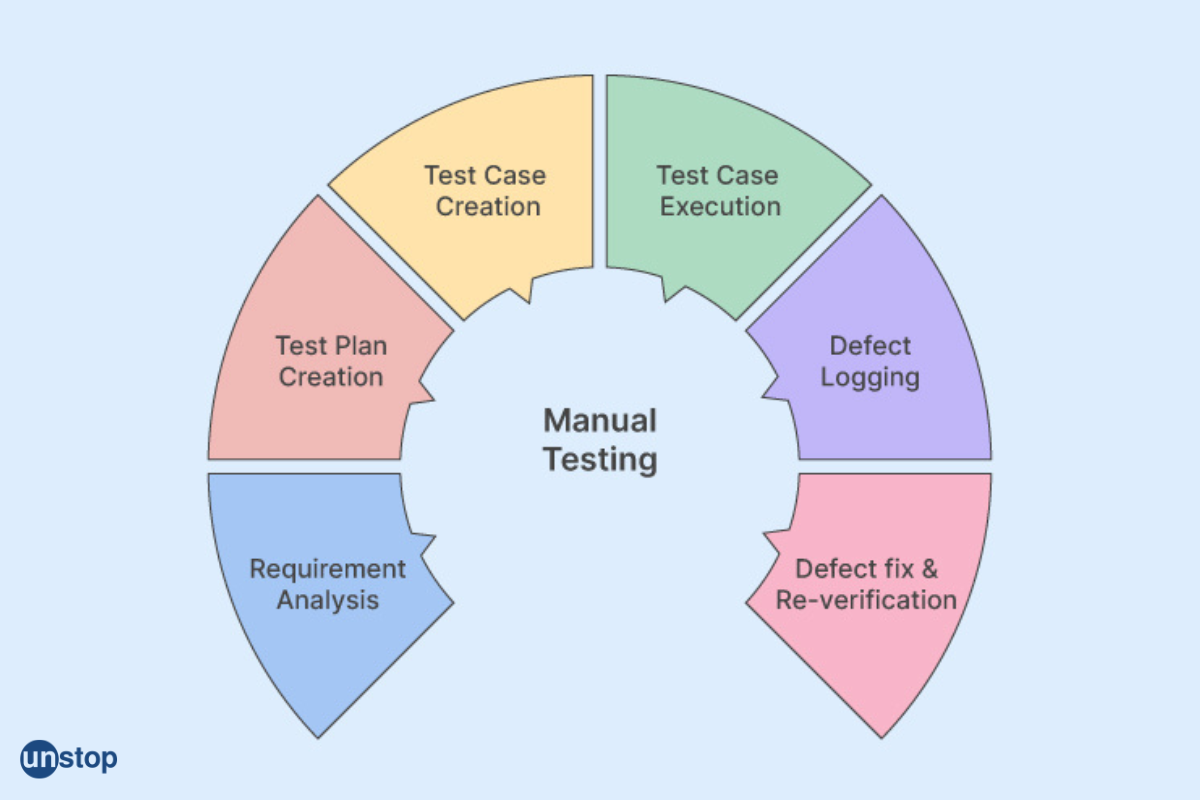

The following activities are usually required while performing manual testing:

Execution & Verification– Tests can only be executed if there is sufficient knowledge about how specific features should behave;

Data Validation– Sometimes it's necessary to validate data from databases or third-party APIs;

Compatibility– Testers must also verify compatibility across multiple operating systems, browser versions, and device types where applicable (for example, IE8 vs. Edge 16);

Stress Testing– How does your application perform when it is subjected to an immense amount of load, such as thousands or millions of API calls? Manual testers must consider these things during the testing process as well.

Advantages Of Manual Testing

Manual testing has a number of advantages and is well-suited for situations where there's no automation tool available. This approach allows human input to catch edge cases that automated tests would miss; after all, software should work as intended and in the ways users might expect, such as using the Tab key to move between specific fields rather than clicking on them.

Manual testing can help identify usability issues with your UI/UX, which sometimes might be hard to gauge unless experienced by real people (not just machines). Testers are humans, so they may find issues that could have been overlooked if you relied solely upon automation tools – this can save lots of time & money!

Another advantage that manual testing enjoys over automation testing is reusability. Test scripts written manually can easily be reused across different versions and environments without major modifications. In contrast, scripts used for automation suffer from brittleness, i.e. they often need changes whenever there's a slight change in the system codebase (software).

However, despite its advantages over automating every test case, manual testing does require significant effort to be effective. Testers often need in-depth knowledge and an intimate understanding of the system under test, as well as a sound familiarity with the business objectives it is designed to fulfill – knowing how your application should work versus actual behavior can significantly reduce time spent on troubleshooting unnecessary issues, while also helping you prioritize and focus on which bugs or performance problems are most important for users' experience.

Manual Testing: Best Practices

It's worth mentioning some best practices that manual testing teams might consider, such as the following:

Conduct Test Reviews– during each iteration, testers & developers alike should review all tests performed thus far (not just defects found) to ensure their accuracy and effectiveness;

Use Exploratory Testing– this methodology helps find unexpected behaviours because it does not follow a pre-defined script. When executing, look not only at what was specified but also use intuition about where possible weaknesses may lie (go beyond requirements);

Continuous Tracking and Reporting– once an issue has been identified, record the detailed steps taken and the exact location and situation in which the bug occurred. Then report it clearly via a bug tracking tool so that everyone involved knows what's going on and can add to the report if they see the same outcome.

Rely On Collaboration– Collaboration between stakeholders, such as development team members and business domain experts, can provide invaluable insight to improve product quality.

Manual testing requires special attention to detail, patience and careful observation on the part of a tester, but when done properly, it can be invaluable in delivering bug-free products for users' satisfaction. Furthermore, following best practices like test reviews and exploratory testing along with continuous tracking and reporting helps ensure high-quality results while boosting teams' collaboration. All of this results in improved efficiency in general.

Testing is important for any software product's success in today's market. Manual testing plays an essential role in software development and is best used when you may not be able to rely on automated tests. Therefore, many organizations still require manual testers with specialized skill sets. Being well-prepared before your interview for a position in manual testing can make all the difference.

Manual Testing Interview Questions

Here's a look at some frequently asked manual testing interview questions that can help you prepare.

1. What is software testing?

Software testing is a procedure used to assess the functionality, efficiency, security, and dependability of software programs. It involves running tests on different aspects of the application to identify any issues before it is released into production. Depending on the complexity of the system, testing can be done manually or automatically. Tests are designed to cover every element, from the user interface to design elements such as display widgets to backend services like database-level operations or API calls between two systems. Depending on how thorough testing needs to be for an application release, testers have options from manual exploratory methods to automation techniques powered by Extreme Programming (XP) principles.

2. Is it necessary to conduct software testing? Please explain.

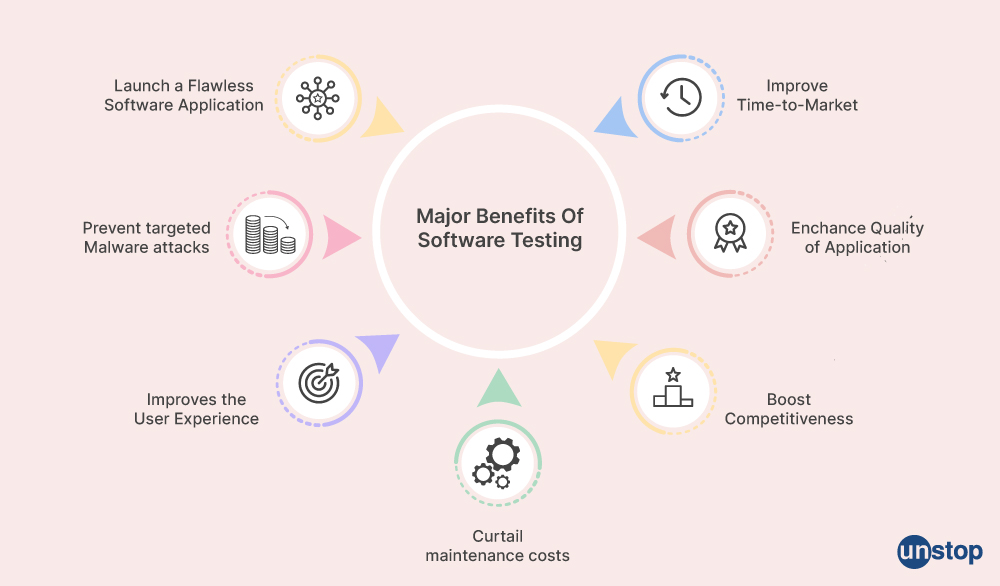

Yes, it's necessary to conduct software testing before releasing the software into production. Here's why:

1. To ensure the system meets its requirements and works as expected.

2. To identify any errors, gaps, or missing requirements in the software before release to production or end users.

3. To improve the quality of the product by reducing bugs and defects before delivery.

4. To verify if all components are appropriately integrated and function correctly with one another without any conflicts when used together in a single environment (integration testing).

5. To reduce costs by avoiding costly corrections after the deployment phase.

3. What are the uses of software testing?

Here are some major uses of software testing:

1. Identifying errors during development such as coding mistakes, logic flaws, etc. This prevents the error from being carried over into released products/systems, resulting in fewer problems for customers who use it after the launch/deployment date.

2. Providing customers assurance that their desired results will be achieved once they begin using your application.

3. Enabling developers to make sure applications perform consistently regardless of whether they are run on different hardware/software platforms.

4. Gaining user trust by ensuring stability and reliability.

5. Assuring compliance with industry regulations.

4. What various forms of software testing are there?

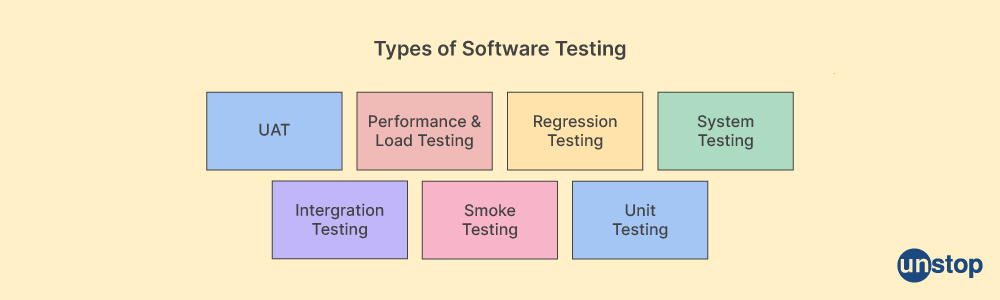

The different types of software testing include:

- Functional Testing: This type of software testing focuses on verifying the functionality and behaviour of an application as per requirements. It is carried out at several levels, such as unit, system integration, and user acceptance testing.

- Performance Testing: As its name suggests, this test checks how fast or slow a system performs under certain conditions. For example, response time, throughput, etc. It also covers the stability aspect, that is testing if the application can perform without failure over a long period.

- UX/UI Testing: A UI/UX test aims to check whether the user interface provided by your product meets customer expectations. It includes evaluating setup, navigation, usability, presentation look and feel, colour combinations, etc. One should always make sure the usability factor has been taken care of while developing a solution.

- Regression Testing: This is used to verify if changes made in one part do not break another section. It also tests if the code is functioning with existing features and continues working correctly even after a change.

- Security Testing: Here, testers attempt to hack into the system using different tools and methods so they can suggest security measures that need to be implemented to safeguard against possible attacks from outside.

- Unit Testing: This method tests isolated components at the smallest unit level, checking each module's correctness with test design techniques such as boundary value analysis and equivalence partitioning.

- Black-Box Testing and White-Box Testing: These are two major techniques in software testing. Black-box testing does not require knowledge of the code. White-box testing, by contrast, involves deep code inspection and is best suited to situations where a detailed understanding of the application's internal structure is essential.

- Integration Testing and System Testing: Integration testing ensures that individual units work correctly once combined, while system testing validates the complete, integrated application against its requirements.

- Smoke Testing and Sanity Testing: This approach enables the tester to quickly identify defects in the system by performing a few tests so testers can decide whether or not the whole system needs further investigation.

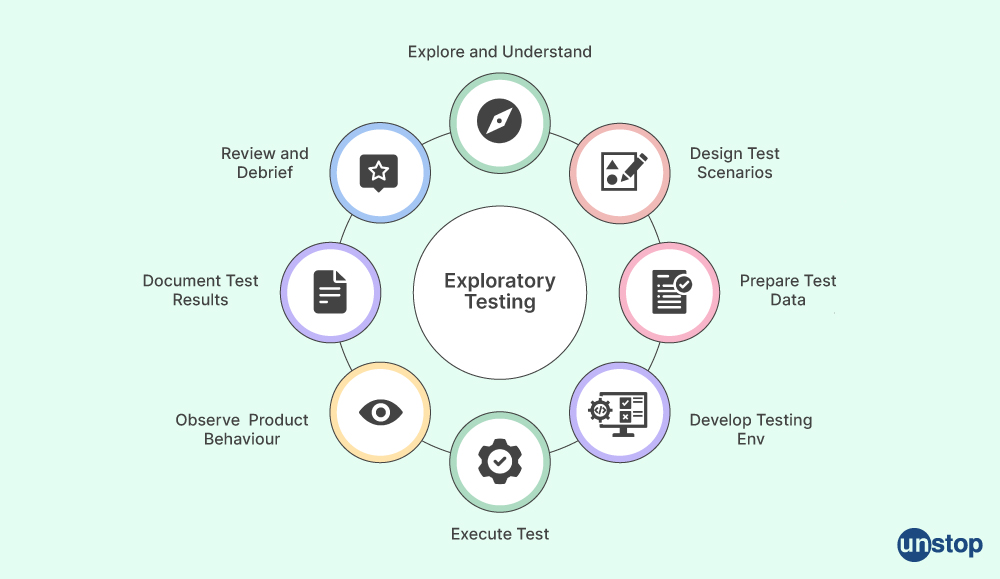

5. How does exploratory testing help identify software application risks and issues?

Exploratory testing helps identify risks and issues in the software application by allowing testers to draw on their experience and combine different test approaches, including ad-hoc and specification-based techniques, in the same session. This gives deeper coverage of test scenarios that typical scripted testing may miss. Exploratory testing also enables testers to find flaws or other issues that might not be obvious at first glance by applying their analytical and problem-solving abilities.

6. What steps are involved in performing effective beta testing of a product before its release?

Effective beta testing involves recruiting a group of users who will use the product as it would be used in real-life scenarios such as setting up an account, entering data, creating reports, etc. Beta testing includes identifying bugs and reporting them back, analyzing user feedback on usability, executing repeatable regression tests between builds, and running performance benchmark changes over time. All of this can help ensure that the release is successful.

To sum it up, beta testing includes:

- Creating a test plan.

- Designing tests.

- Scheduling feedback sessions throughout.

- Recording all bug submissions for tracking purposes.

- Releasing regular updates based on feedback.

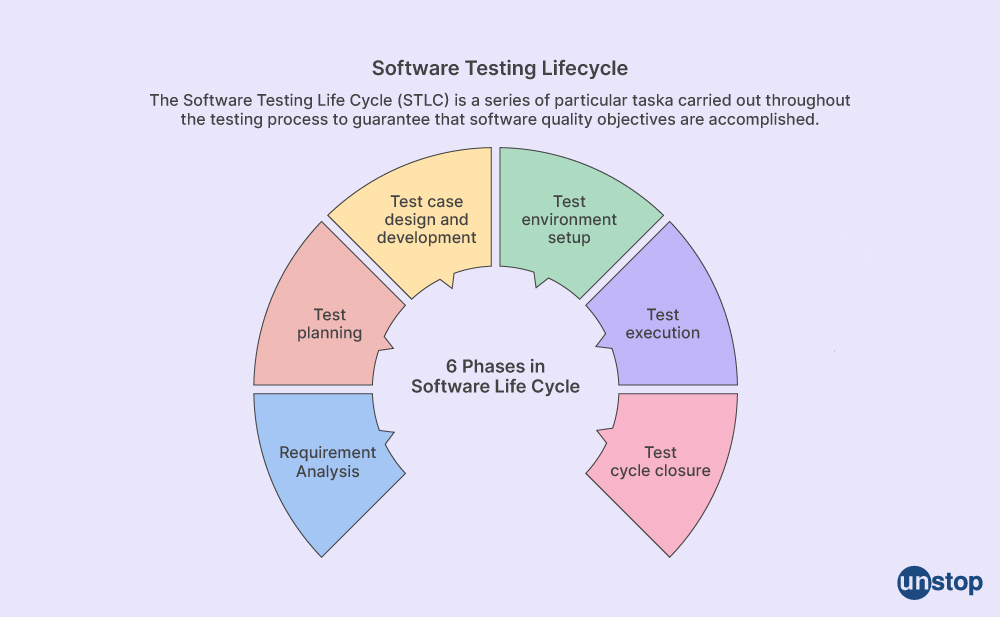

7. What techniques should be used during the different testing phases of the software testing life cycle (STLC)?

Each phase of the STLC (Software Testing Life Cycle) calls for its own techniques. Functional and non-functional requirements are gathered and analysed during the specification review stage. A detailed test plan is then created before moving on to unit, integration, system, and performance testing, with acceptance and regression testing at the user acceptance level. This helps in verifying that the scope delivered meets expectations.

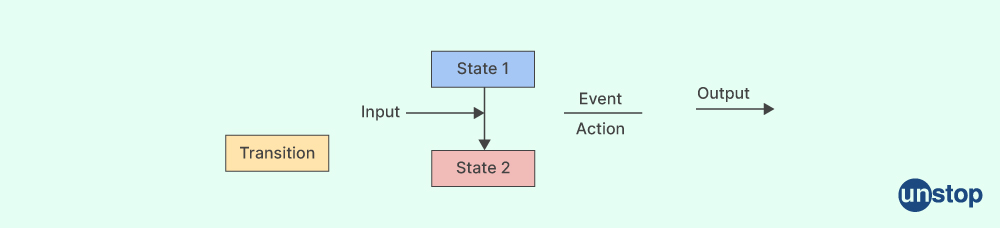

During the unit and Integration testing phase, techniques such as Boundary value analysis, equivalence partitioning, and state transition should be used to ensure the validation of functionalities at the component/module level in isolation.
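To make boundary value analysis and equivalence partitioning more concrete, here is a minimal Python sketch. The age rule (18 to 65 inclusive) and all function names are hypothetical, chosen only for illustration.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Return the classic boundary test inputs for an inclusive integer range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def equivalence_classes(low: int, high: int) -> dict[str, int]:
    """Pick one representative value from each partition: below, inside, above."""
    return {
        "below_range": low - 10,
        "in_range": (low + high) // 2,
        "above_range": high + 10,
    }


def is_valid_age(age: int) -> bool:
    """Hypothetical rule under test: ages 18 to 65 inclusive are accepted."""
    return 18 <= age <= 65


# Exercise the rule at and around both edges of the valid range.
for value in boundary_values(18, 65):
    print(value, is_valid_age(value))
```

The boundary values target the edges where off-by-one mistakes tend to hide, while one representative per partition keeps the number of test cases small.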

The performance acceptance testing phase should use stress testing methods like load testing/volume testing i.e. simulating production environment traffic to analyze system performance under heavy user load scenarios with respect to response time and tracking resource utilization appropriately over a period of time.

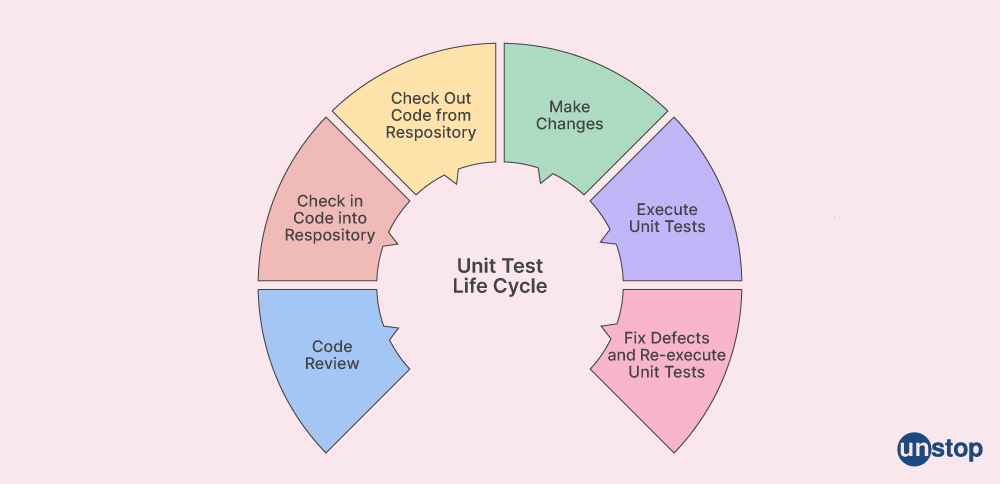

8. What's the unit testing process and what are some possible frameworks for carrying it out?

The unit testing process starts by breaking large chunks of code down into individual components, split across separate classes or modules, so that each can run independently. Unit test cases are then written to verify that the logic flow of each component works correctly, and any errors discovered when actual results don't match expected results are debugged. To carry out unit testing successfully, it is important to use a reliable framework such as JUnit for Java or NUnit for .NET.
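As a rough illustration of what a unit test looks like, the sketch below uses Python's built-in `unittest` module, which plays a role similar to JUnit or NUnit. The `apply_discount` function is a hypothetical unit under test, not taken from any real project.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        # Expected result is compared with the actual result.
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_out_of_range_percent_is_rejected(self):
        # Invalid input should raise instead of returning a wrong price.
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)
```

Saved in a file such as `test_discount.py` (the file name is an assumption), the tests can be run with `python -m unittest test_discount`.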

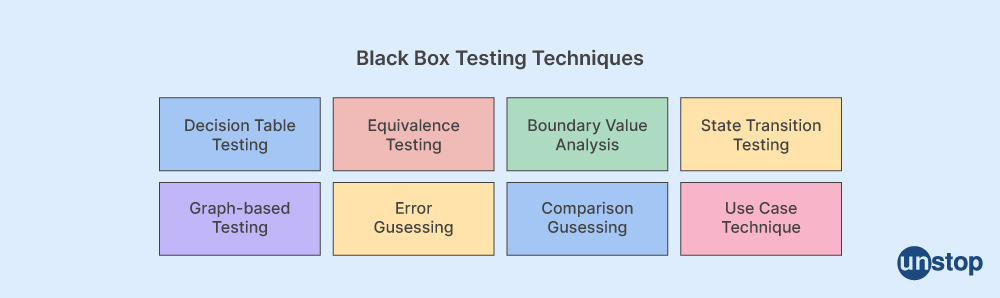

9. What is the black-box testing technique? What are a few of the difficulties involved with black-box testing?

The black-box testing technique involves focusing on the external user interface of the application and its functionality via input and output scenarios such as boundary value, equivalence partitioning, or state transition/state diagram approaches to ensure the app is working correctly from an end user's point of view.

Here are some difficulties involved with black-box testing:

1. Limited visibility into the application: Black-box testers work with little or no knowledge of the application's inner workings, which makes it difficult to pinpoint bugs that originate in more complex code.

2. Difficulty crafting test cases: Without understanding how the system works internally, it is harder for testers to create accurate and comprehensive test cases that cover every use case and functional area within an application.

3. Time-consuming process: Testing without insight into how the system functions can be time-consuming and inefficient, and some areas may still go uncovered.

4. Limitations on functionality verification: Because black-box tests are derived from specified inputs and outputs, unconventional ways in which end users interact with the product may be left out, so some defects can go unnoticed until the system is exercised under abnormal conditions such as stress situations (for example, hazardous temperature levels).

10. Explain how usability testing provides valuable insights about user experience with particular attention to UX design elements such as navigability, responsiveness, and overall satisfaction levels when using an app or website interface.

Usability testing seeks to evaluate how well a product meets its intended purpose. Usability testing focuses on UX elements like navigability, responsiveness, and overall satisfaction stated by participants when using the interface through various methods. Some of these methods are heuristic evaluations, cognitive walkthroughs, or user surveys.

Usability testing helps assess a product's functionality from the user's perspective, identifying potential problems and areas for improvement.

11. What is the purpose behind positive testing that checks whether any valid value generates expected results according to specifications?

Positive testing checks whether valid values generate the expected results according to specifications. By supplying valid input data, it verifies that at least one path through the logic flow leads the program to the desired result without crashing due to misconfiguration, coding errors, and so on. The purpose of positive testing is to ensure that the system meets its requirements and functions as expected when given valid input. This helps identify any potential errors in coding or configuration, allowing developers to fix them before the system is released.

12. How does dynamic testing differ from static ones in terms of its approaches to finding defects within a piece of code?

Dynamic testing focuses on the actual behaviour of code while it runs, looking for defects such as incorrect handling of parameters passed between functions. In contrast, static testing examines the source code and its individual instructions before execution, to detect coding issues that were not apparent during initial development.

13. How do automation tools improve quality assurance processes?

Using automation tools helps speed up quality assurance processes as they provide continuous feedback on development progress along given criteria without manual intervention - helping to detect issues early during testing & providing quick implementation of changes required, if any.

14. How can manual testing be effectively used to ensure that requirements from the software development lifecycle, such as specifications and documents, are met without any human errors while performing static testing, sanity testing, and negative testing for non-functional testing along with smoke testing and user acceptance testing?

Manual testing can be effectively used to ensure that requirements from the software development lifecycle are met without any human errors while performing static testing, sanity testing, and negative testing for non-functional testing using a comprehensive test suite. The test suite should contain detailed descriptions of each step and expected results which should then be executed one at a time to check all functionalities as per specified requirement documentation.

Additionally, smoke testing and user acceptance testing should also be included in the manual testing process as they allow testers to quickly identify major issues with the application before full system deployment. Finally, manual testers must pay close attention throughout their work to ensure that human errors are identified and corrected before the product is released.

15. What is the process for sanity testing during a software development cycle?

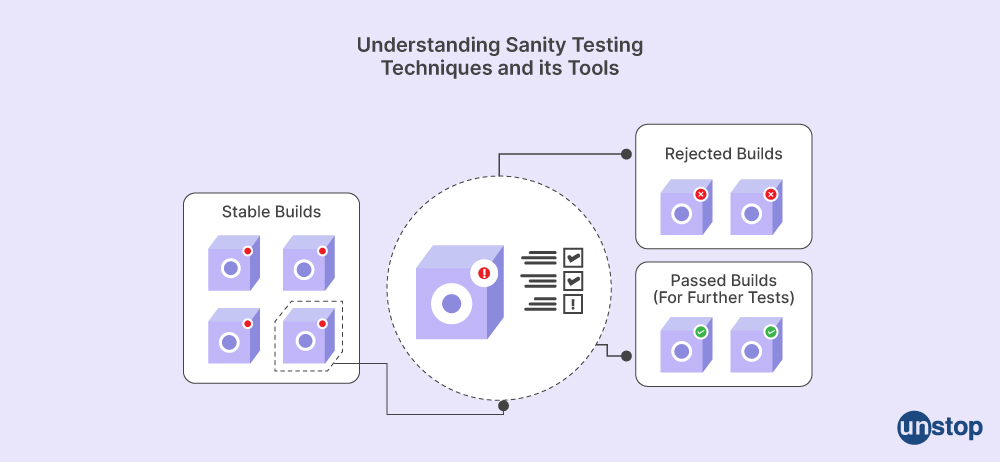

Sanity testing is a type of software testing performed to quickly evaluate the integrity and stability of an application during its development cycle. It involves verifying that all functions work as expected without delving deeper into functionality, checking for critical bugs, or evaluating usability. To conduct sanity testing effectively, teams should develop test cases before programming so that any exceptions can be identified before progressing further.

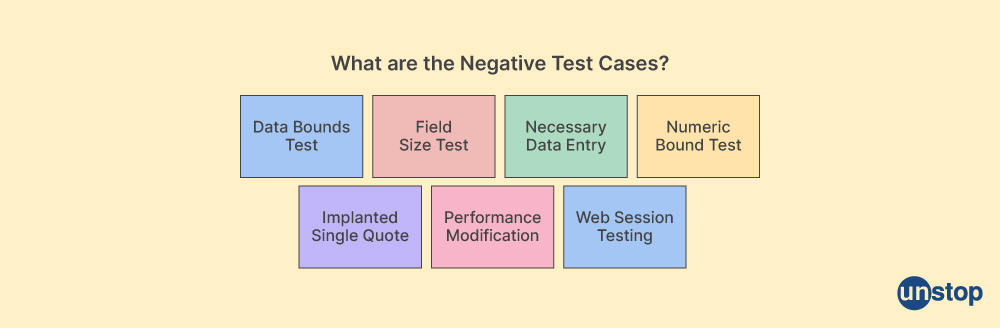

16. How should teams identify and prioritize negative testing scenarios?

Identifying negative test scenarios requires examining how an application behaves when certain boundaries are crossed or invalid input is provided by users/systems. This helps ensure applications are robust against these situations and meet performance requirements accordingly, depending on specified objectives in requirement documents. Teams must prioritize which areas require more focus using their experience coupled with risk management principles.

17. What are the advantages of automated testing over manual testing?

Automated testing offers faster results than manual testing because it can run repetitive and complex tasks in parallel within shorter timescales, while also reducing the costs associated with manual, labor-intensive activities. Automated testing involves writing scripts that interact directly with system components. Automation engineers therefore need good coding skills (and comparatively less test design knowledge), whereas manual testers need the reverse.

18. Can you explain how to read and interpret requirement documents to ensure correct implementation by developers?

Reading and interpreting requirement documents for software development is a necessary process to ensure that developers build applications accurately according to user/stakeholder needs. These documents should be read thoroughly and discussed with project stakeholders to identify any ambiguities, missing information, or conflicts before proceeding with coding activities, as this could involve redesigning elements of the application later on.

19. What criteria should be included in requirement specifications before they can be approved for coding purposes?

Requirement specifications must include specific criteria such as clear definitions of how input and output information will travel around system components, expected performance levels under certain scenarios plus other parameters needed at coding time like programming languages used, etc. All these aspects must adhere to defined quality standards prior to their approval for implementation by developers otherwise issues may arise during code execution which would require fixing, further delaying progress.

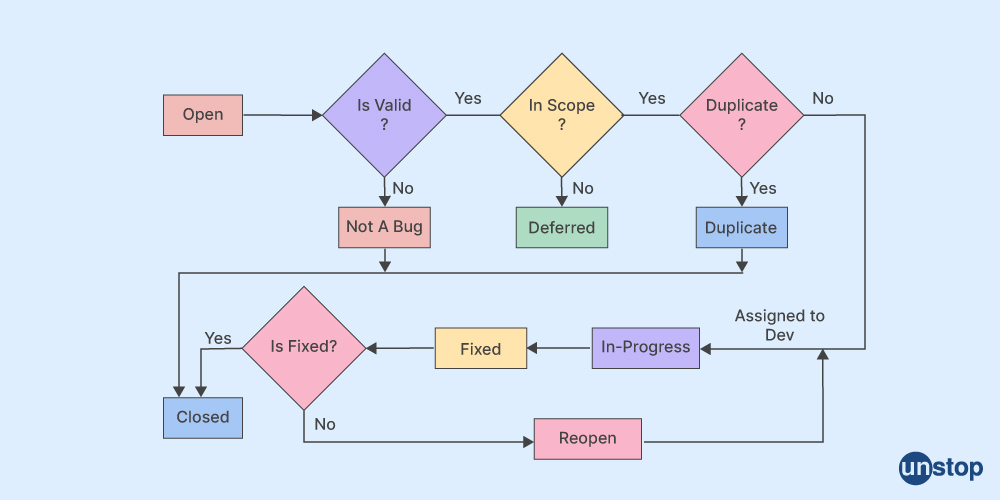

20. Explain the defect life cycle, from identification through verification/ closure stages.

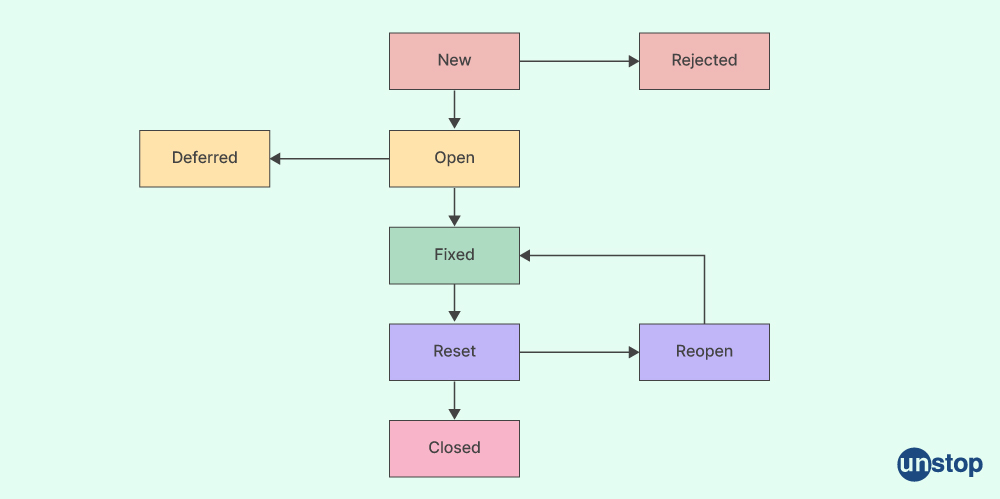

The defect life cycle runs from initial identification through verification to eventual closure. In the identification stage, the tester opens a ticket describing the incorrect behaviour observed in the application. In the verification stage, the developer diagnoses the root cause and confirms that the reported details are valid. Once a fix is available, it is retested, and after it passes, the ticket is closed and the fix is delivered safely to the production environment. Throughout the cycle, teams should maintain traceability so that progress can be monitored efficiently and dependencies remain trackable.
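One way to picture the defect life cycle is as a small state machine. The Python sketch below is only an illustration: the state names and the allowed transitions are assumptions, since real teams and bug trackers configure their own workflows.

```python
from enum import Enum


class DefectState(Enum):
    NEW = "new"
    CONFIRMED = "confirmed"
    FIXED = "fixed"
    VERIFIED = "verified"
    CLOSED = "closed"
    REOPENED = "reopened"


# Assumed workflow; the transitions are illustrative, not a standard.
ALLOWED = {
    DefectState.NEW: {DefectState.CONFIRMED, DefectState.CLOSED},  # closed if rejected
    DefectState.CONFIRMED: {DefectState.FIXED},
    DefectState.FIXED: {DefectState.VERIFIED, DefectState.REOPENED},
    DefectState.REOPENED: {DefectState.FIXED},
    DefectState.VERIFIED: {DefectState.CLOSED},
    DefectState.CLOSED: set(),
}


class Defect:
    def __init__(self, title: str):
        self.title = title
        self.state = DefectState.NEW
        self.history = [DefectState.NEW]  # kept for traceability

    def move_to(self, new_state: DefectState) -> None:
        """Advance the defect, refusing transitions the workflow does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"cannot go from {self.state.value} to {new_state.value}"
            )
        self.state = new_state
        self.history.append(new_state)
```

Keeping the full `history` list is what gives the traceability mentioned above: every stage a ticket passed through can be reviewed later.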

21. How do testers effectively detect invalid input during test case execution?

Invalid inputs should be detected early in the software development cycle, usually through the execution of test cases. Testers should identify and report specific values, such as out-of-range values or invalid data types, that can cause issues down the line if they are not handled appropriately. Negative testing scenarios need to cover web forms as well as other user input fields for maximum coverage during testing activities.
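The idea can be sketched in Python as a small negative test run against an input validator. The `parse_quantity` function and its 1 to 100 range are hypothetical; they stand in for whatever input field is being tested.

```python
def parse_quantity(raw) -> int:
    """Hypothetical form-field validator: accepts whole numbers from 1 to 100."""
    try:
        value = int(raw)
    except (TypeError, ValueError):
        raise ValueError(f"not a whole number: {raw!r}") from None
    if not 1 <= value <= 100:
        raise ValueError(f"out of range: {value}")
    return value


def is_rejected(raw) -> bool:
    """Return True when the validator refuses the input, as a negative test expects."""
    try:
        parse_quantity(raw)
    except ValueError:
        return True
    return False


# Negative scenarios: wrong type, empty, out of range, malformed.
invalid_inputs = ["abc", "", "0", "101", "-5", "3.7", None]
for raw in invalid_inputs:
    print(repr(raw), "rejected" if is_rejected(raw) else "ACCEPTED (defect)")
```

Any input that is accepted here would be reported as a defect, because the application failed to guard against invalid data.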

22. What metrics or techniques can help determine bug rate within a given period of time?

The bug rate helps teams measure release quality over a given period of time using metrics such as the total number of bugs divided by total releases or the ratio between open/closed tickets within a specified timeframe. This helps determine maturity levels before live deployment, ensuring customer satisfaction and thus, higher potential for revenue generation over a given period of time.
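The two metrics mentioned above can be computed in a few lines. This is a minimal Python sketch with hypothetical function names; how the open/closed ratio should behave when no tickets are closed is an assumption made here for completeness.

```python
def bugs_per_release(total_bugs: int, total_releases: int) -> float:
    """Average number of bugs per release over the chosen period."""
    if total_releases <= 0:
        raise ValueError("total_releases must be positive")
    return total_bugs / total_releases


def open_closed_ratio(open_tickets: int, closed_tickets: int) -> float:
    """Ratio of open to closed tickets; lower values suggest a healthier backlog."""
    if closed_tickets == 0:
        # Assumed convention: infinite if anything is open, zero if nothing is.
        return float("inf") if open_tickets else 0.0
    return open_tickets / closed_tickets


print(bugs_per_release(48, 4))    # 48 bugs across 4 releases
print(open_closed_ratio(5, 20))   # 5 open tickets, 20 closed
```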

23. What criteria are used to assess the success of sanity testing?

Sanity testing is software testing used to quickly verify whether or not basic functions in an application are working correctly. It can be done after minor code changes, such as bug fixes, UI updates, or features under development. The goal of sanity testing is to find out if the system meets its requirements and verify that there have been no catastrophic failures since the last successful build. When assessing success criteria for sanity testing, testers should focus on determining how effective their test coverage has been and what bugs users found while using the product during this evaluation period. Areas like user interface responsiveness/performance should also be considered when evaluating overall performance results from these tests.

24. How often do testing teams review test results and update their testing strategies?

Testing teams typically review test results and update their testing strategies regularly. Ideally, they will do this at least once every sprint or iteration to ensure that the team's tests are up-to-date with any changes in requirements or design. This type of review can also identify failure patterns that indicate deeper problems and generate ideas for additional tests needed to improve product quality. Additionally, reviewing test results helps validate that the team is working efficiently by identifying areas where effort could be better allocated or tasks that could be automated to save time during future test cycles.

25. How does the development team contribute to successful bottom-up approach testing?

Development teams' involvement helps ensure that all areas within the architecture are tested thoroughly during bottom-up approach testing - starting with core services first and working up through layers of abstraction towards total integration tests on user interface layer applications.

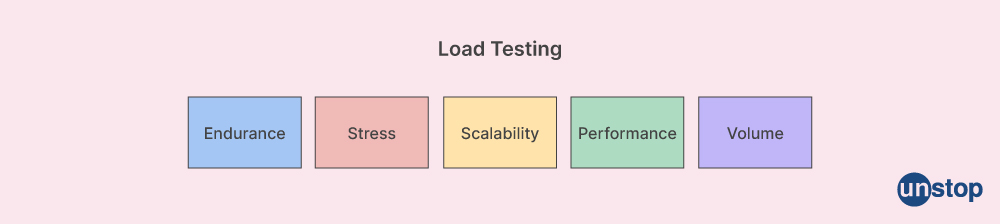

26. Name three types of load testing that can be conducted on software applications, databases, or network systems.

Three types of load testing typically conducted include:

- Stress Test

- Volume Test

- Endurance Tests

Each type of load testing performs distinct activities like detecting application malfunctioning under heavy load conditions and evaluating throughput capacity while increasing workloads incrementally. Load testing helps to identify software performance issues in an application and is a crucial step for preparation before launching into production.

27. Describe volume testing and how it is used in the software quality assurance process.

Volume testing is a type of software testing that evaluates an application's reliability and performance under high workloads. By running tests with inputs of various sizes at different points in the development cycle, it examines the system's capacity to handle large amounts of data. It considers factors like input volume (such as the number of concurrent users), throughput, response time under load, scalability, reliability/stability, and resource utilization levels. Within the software quality assurance process specifically, volume testing helps validate whether an application meets its predefined non-functional requirements, for example, the maximum server response time allowed by a defined SLA, without compromising user experience or availability in peak hours.

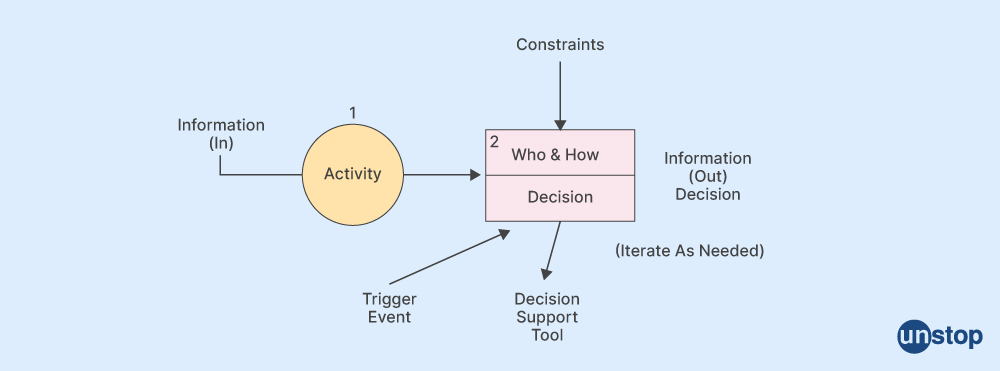

28. Can you explain the decision table testing algorithm with some example scenarios?

The decision table testing technique is best explained with an example scenario in which a system must respond to defined input parameters (conditions) by producing the corresponding outputs (actions) according to defined logic rules. Decision table testing aims to identify all possible outcomes of a system according to a set of input conditions and given logic rules, ensuring that the system reacts adequately in any scenario.
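A small worked scenario may help. The Python sketch below assumes a hypothetical login feature with two conditions (valid username, valid password); the table, the actions, and the `login` function are all invented for illustration.

```python
from itertools import product

# Decision table: (valid_username, valid_password) -> expected action.
DECISION_TABLE = {
    (True, True): "grant access",
    (True, False): "show password error",
    (False, True): "show username error",
    (False, False): "show username error",
}


def login(valid_username: bool, valid_password: bool) -> str:
    """Hypothetical system under test."""
    if not valid_username:
        return "show username error"
    if not valid_password:
        return "show password error"
    return "grant access"


def run_decision_table() -> list[tuple]:
    """Check every combination of conditions; return the rules that fail."""
    failures = []
    for conditions in product([True, False], repeat=2):
        expected = DECISION_TABLE[conditions]
        actual = login(*conditions)
        if actual != expected:
            failures.append((conditions, expected, actual))
    return failures


print(run_decision_table())
```

With two boolean conditions there are four rules; each additional condition doubles the number of combinations, which is why decision tables are usually kept to a handful of conditions.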

29. What benefits come from using random testing as a comprehensive software testing strategy?

Random testing is a comprehensive software testing strategy that provides various benefits, like the following:

1. Improved Coverage: Random testing helps ensure thorough application coverage by selecting test cases at random, without bias or preconceived notions about which areas need more attention than others. This means all parts of an application can be tested equally and thoroughly in less time compared to other strategies.

2. Flexibility: A random tester can alter parameters for each subsequent pass if they feel certain scenarios are not adequately addressed with current methods or tools used in prior tests. Or if a particular area needs extra attention because earlier results revealed unexpected behaviour. This allows testers to quickly switch approaches during their testing sessions when needed, preserving valuable resources while also increasing efficiency due to quick adaptability as issues arise throughout the analysis and debugging processes.

3. Reduced Human Biases: When using a randomly generated approach, human bias is minimized because there is no predetermined set path that must be taken when performing tests on an application - it's completely reliant upon chance selections alone. This ensures no aspect receives undue focus (nor neglect) since everything will have been examined evenly regardless of the order, i.e. what happens first or last. Moreover, a tester does not get stuck in any kind of pattern/cycle. Instead, they move freely among areas of an application with fresh eyes each time.

4. Uncover Complex Behaviors: Random testing helps to uncover complex behaviours in a much faster and easier way than manually designing test cases for the same purpose. This is because randomization tends to cover scenarios that wouldn't be easily thought up by humans beforehand, no matter how experienced they are - especially when dealing with highly intricate software containing multiple components intertwined together (e.g. databases or advanced algorithms).
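As a minimal sketch of random testing in Python, the example below feeds randomly generated inputs to a hypothetical `clamp` function and checks properties that must hold for every input. The function, the value ranges, and the seed are assumptions made for illustration.

```python
import random


def clamp(value: int, low: int, high: int) -> int:
    """Hypothetical function under test: restrict value to [low, high]."""
    return max(low, min(value, high))


def random_test_clamp(runs: int = 1000, seed: int = 42) -> int:
    """Feed random inputs to clamp and check properties that must always hold.

    Returns the number of inputs tried. A fixed seed keeps failures reproducible.
    """
    rng = random.Random(seed)
    for _ in range(runs):
        low = rng.randint(-1000, 1000)
        high = rng.randint(low, 1000)
        value = rng.randint(-5000, 5000)
        result = clamp(value, low, high)
        # Property 1: the result always lies inside the range.
        assert low <= result <= high, (value, low, high, result)
        # Property 2: values already inside the range are returned unchanged.
        if low <= value <= high:
            assert result == value, (value, low, high, result)
    return runs


print(random_test_clamp())
```

Because no one hand-picks the inputs, the checks are phrased as general properties rather than specific expected values, and the seed makes any failing input reproducible.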

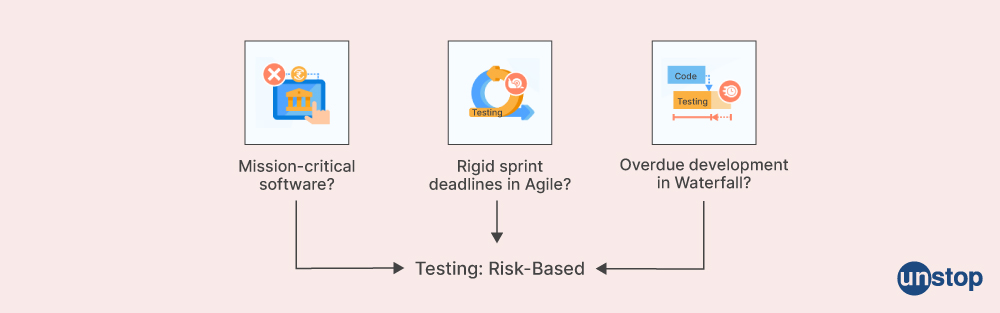

30. Explain the Risk-based testing approach and the different stages involved in it.

Risk-based testing is a systematic approach that involves analyzing and evaluating potential risks associated with different parts of an application or system. This approach allows testers to prioritize tests based on the most critical aspects while considering cost-benefit analyses, timeline constraints, resource allocations, and regulatory requirements. It focuses on testing strategically important components first to identify any issues early so they can be rectified before the launch date.

The primary steps involved in Risk-based testing are:

1) Identifying Risks: The team needs to understand all possible risks associated with each component/system, including those related to functionality, performance, usability & security, by analyzing business requirements and functional specifications documents.

2) Assessing Impact Level: Once the risks have been identified, the team needs to assess how severe any issues could be under various circumstances (e.g., user experience vs customer impact).

3) Prioritizing Tests: All risks should then be ranked according to their likelihood of occurrence and severity of impacts if there were indeed problems at hand. This will help determine which tests need more focus than others depending upon what’s deemed most important for the successful operation of the system.

4) Selecting Tests: After all risks have been properly prioritized based on their importance, appropriate tests must be selected that will expose any issues with the system, so they can be fixed before launch.

5) Executing Tests: Lastly, these chosen tests need to be executed & re-evaluated periodically throughout the development process to validate expected outcomes (and revise if necessary).
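The assessment and prioritization steps above can be sketched with a simple scoring model. In this Python example, the 1 to 5 scales, the likelihood times impact formula, and the sample risk areas are assumptions; teams often use their own scales and weightings.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    area: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (minor) to 5 (critical), assumed scale

    @property
    def score(self) -> int:
        """Simple risk score: likelihood multiplied by impact."""
        return self.likelihood * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Order risks from highest to lowest score so the riskiest areas are tested first."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical risk register for illustration.
risks = [
    Risk("report export", likelihood=2, impact=2),
    Risk("payment processing", likelihood=3, impact=5),
    Risk("login", likelihood=4, impact=4),
]
for risk in prioritize(risks):
    print(f"{risk.area}: {risk.score}")
```

The ordered list then drives test selection: areas at the top receive the most test effort, and the scores are revisited as the project evolves.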

31. How can testing time constraints affect test planning and execution when performing manual tests for an application or project?

When performing manual tests for an application or project, testing time constraints can significantly affect test planning and execution. Depending on the complexity of the project or application being tested, more time than planned may be required to cover all aspects that need to be examined against predetermined criteria such as functionality and usability. This necessitates careful planning by the tester to determine which components require more attention due to their impact on core features within the system. Scheduling is also affected by available resources. If only a few people are involved in testing, time estimates must accurately reflect how long each task will take, so that no part of a higher-level task falls through the cracks due to lack of resources (e.g., longer tasks should be broken down into smaller ones).

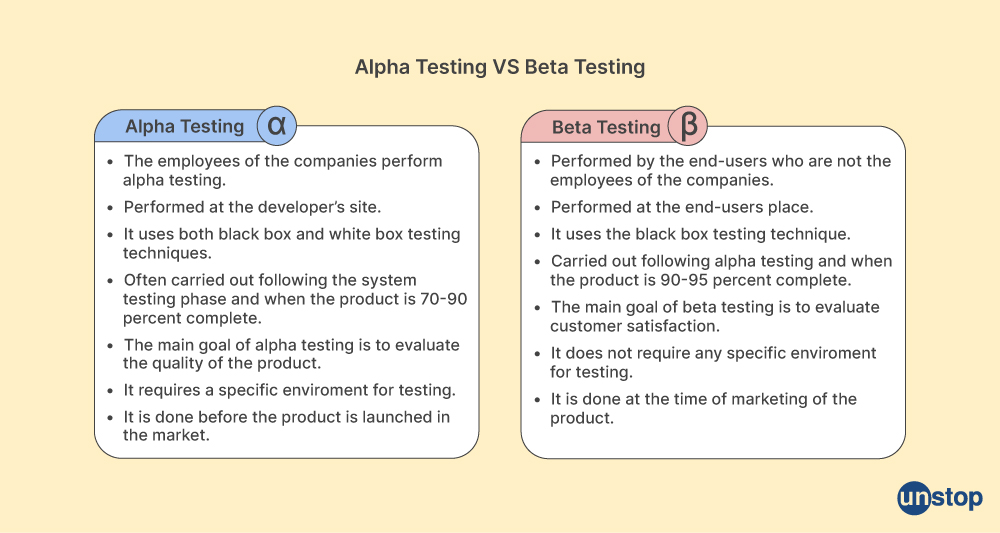

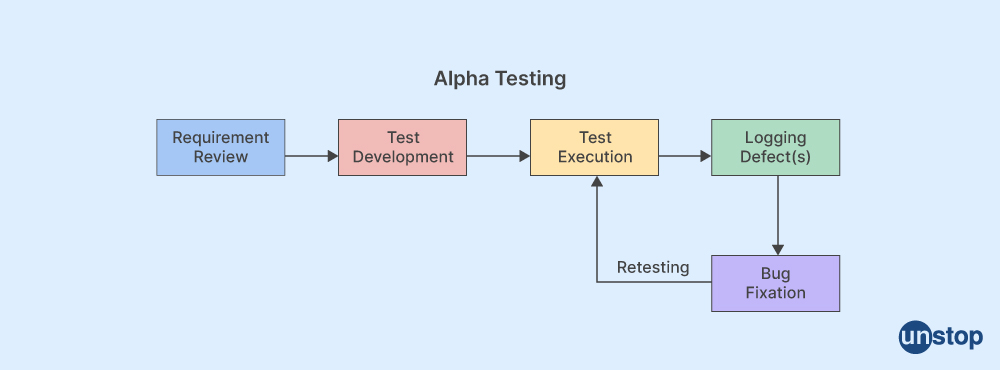

32. What is the difference between Alpha Testing and Beta Testing when assessing system performance before release into the production environment?

Alpha Testing is usually carried out internally by members of the development or QA team before release, whereas Beta Testing is done externally by a limited group of actual end users, in a real-world environment, to gather feedback about product features before general release. Hence, both are important steps in assessing the system before the official launch.

33. What value do dummy modules add during the System Integration Test phase?

Dummy modules (stubs and drivers) assist with System Integration Tests, such as verifying database access or API integration. They stand in for components that are not yet ready, so that the tester can check that the expected responses are generated when simulated messages are sent and received.
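As a rough sketch of the idea, the Python snippet below uses a dummy module in place of a payment service that is assumed not to be ready yet. The names `PaymentGatewayStub`, `charge`, and `place_order` are hypothetical and exist only for this illustration.

```python
class PaymentGatewayStub:
    """Hypothetical stand-in for a payment service that is not ready yet."""

    def charge(self, amount):
        # Return a canned response instead of calling a real service
        if amount <= 0:
            return {"status": "rejected", "reason": "invalid amount"}
        return {"status": "approved", "amount": amount}


def place_order(gateway, amount):
    """Component under test: relies on any gateway exposing charge()."""
    response = gateway.charge(amount)
    return response["status"] == "approved"


stub = PaymentGatewayStub()
print(place_order(stub, 25.0))  # order goes through with the simulated approval
print(place_order(stub, 0))     # order is refused with the simulated rejection
```

Because `place_order` only depends on the `charge()` interface, the stub can later be swapped for the real component without changing the test.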

34. Describe the Code Coverage concept and list the methods used to measure code coverage.

Code Coverage is a metric to quantify how thoroughly a program's source code has been tested. It helps an organization determine how much testing has been conducted and measures test effectiveness by providing metrics on whether certain sections or lines of code have been executed during testing. Code Coverage can generally be classified into statement, decision/branch, condition, function/subroutine, or modified condition/decision coverage (MC/DC).

Some of the typically used Code Coverage methods are:

- Statement Coverage (the proportion of executable statements run at least once)

- Decision Coverage (testing that all branches in code get executed at least once)

- Path Coverage (testing that each possible execution path through the code is exercised).
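The difference between statement and decision coverage is easiest to see on a small example. The sketch below uses a hypothetical `apply_discount` function: a single test executes every statement, yet one of the two branches of the `if` is never taken until a second test is added.

```python
def apply_discount(price, is_member):
    discount = 0
    if is_member:
        discount = 10
    return price - discount


# This single test executes every statement (full statement coverage)...
assert apply_discount(100, True) == 90

# ...but the path where the condition is False is only exercised by a second test,
# which is what decision (branch) coverage additionally requires.
assert apply_discount(100, False) == 100
```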

35. Which factors should testers consider while evaluating regression test status after the completion of development phases?

When evaluating regression test status after a development phase is complete, testers should consider the pass/fail rate of the regression suite, the number and severity of defects that remain open or have been reopened, how well the tests cover the areas affected by recent changes, and whether previously fixed defects have reappeared. The stability of the test environment and the time remaining before release also matter, since they determine whether failed tests can be investigated and re-run.

36. Explain the latent defect type found most commonly during unit-level tests.

Latent defects usually arise from misinterpretation or miscommunication of software requirements and often go unnoticed until unit-level testing is performed, because this is when anomalies such as subtle incompatibilities between modules become more visible. Latent defects can also be considered hidden bugs that remain undiscovered until they reach the production environment. They tend to cause the most trouble in integration-level tests.

37. What information should be included in bug reports to make them more effective for analysis and corrective action?

Bug reports must be specific about the issue encountered and include a detailed description of the events leading up to it, with steps to reproduce where possible. They should also reference system logs, error messages, and screenshots from the user interface, whenever applicable, to support analysis and corrective action.

38. What real user scenarios should be tested to ensure high-quality products and compliance with the business rules?

Real user scenarios should be tested to ensure high-quality products and compliance with the business rules by evaluating the use cases from a customer's point of view, testing different combinations of parameters, and validating if expected results are achieved in each test case.

39. How would you use statement coverage testing techniques to identify bugs in the software specifications?

Statement coverage helps identify bugs by measuring which statements in the code are executed at runtime, which shows developers the parts that have not been executed or tested for errors or defects. Statement coverage testing requires developers to establish a set of criteria for their test cases, which ensures that the code is thoroughly exercised and that bugs are more likely to be caught before release.

40. What's the importance of tracking the bug life cycle during manual testing?

Tracking the bug life cycle is an important part of the manual testing process. It allows us to track progress on possible solutions while highlighting any critical issues that need developers' immediate attention before the software is released for end-user approval and usage.

41. How will you effectively utilize a top-down approach while developing a test plan with large complex functionality requirements?

A top-down approach can be effectively utilized while developing a test plan with large, complex functionality requirements through proper planning and by dividing the application into manageable functions, allowing testers to focus their efforts first on core modules such as the User Interface (UI), database systems, etc. This also helps objectives to be met sooner without compromising quality standards at any stage of the development process.

42. Could you explain how regression testing, performance testing and transition testing help improve the overall product quality assurance process?

Regression testing verifies existing functionalities against new changes made within the application. Performance testing measures how well the system will handle load conditions under certain circumstances, like when multiple users access the same data, creating conflicts among shared resources. Transition testing checks new applications or modules against existing system architecture with respect to capacity and workload, that is whether it can handle the requirements of users without causing any delays.

All these testing processes can improve the overall product quality assurance process by involving a dedicated team that works independently from the development division, ensuring optimal coverage for each specific test case, avoiding redundant work, and increasing efficiency in the long run.

43. How can we successfully perform performance testing?

Performance testing assesses a system’s response time, throughput, and scalability under varying loads. Successful performance testing requires careful planning, design and execution to ensure that the results are accurate and reliable. Performance testing will ensure that software applications can handle the expected load and usage scenarios.

44. Can you explain the process used for transition testing?

Transition testing is an end-to-end validation process that tests the application's components, including databases, web services and user interfaces, for any changes that occur when transitioning from one environment to another, such as from development to production. It includes validating functions affected by code branch merges and integration/regression testing between different applications within a system architecture stack.

45. What accessibility testing procedures should be followed for the software module?

Accessibility testing should include identifying and testing any aspects of the software module that could prevent users with disabilities from using it comfortably. To ensure this, common accessibility testing principles such as keyboard navigability should be tested, along with other elements like colour contrast and text readability for those with a visual impairment. Accessibility testing ensures that the software module can be used by people with disabilities.

46. How will Ad hoc testing help identify any hidden bugs in the software module?

Ad hoc testing involves studying the application's behaviour in an unstructured fashion to try and uncover any hidden bugs or flaws in its design that might not typically be found through standard test cases or scenarios. Ad hoc testing can often reveal problems related to usability, which are difficult to identify when only relying on structured tests such as black-box methodology. Ad hoc testing is an exploratory approach to manually uncover any hidden bugs or flaws in software modules that may not be apparent through traditional test cases.

47. What white-box testing techniques can be used to test components of the requirement stack?

White-box testing techniques involve utilizing knowledge about source code for effective program verification throughout development processes. It examines how data flows around different components within a system's architecture while performing various operations during execution, including exposing unknown errors or vulnerabilities. These approaches provide insight into understanding control flow decisions taken at each step and help create better strategies for comprehensive modularized unit tests.

48. Explain how is automation testing beneficial for conducting compatibility testing?

Automation testing is an effective way to ensure that compatibility testing is conducted on time and accurately. It involves automating test cases and running them against different versions or configurations of a system to check for unexpected errors. This allows faster identification and resolution of compatibility problems without compromising the quality assurance stages of a product launch, and it makes it easier to record results electronically across all phases.

49. How can mutation testing approaches improve a software module's testing coverage?

Mutation testing is one approach that could be used to increase the testing coverage of our software module's tests. It involves introducing small syntactic changes (mutants) into existing code and checking whether the test suite detects them, so that weaknesses in the tests can be identified more effectively than through white-box techniques alone (which might miss certain scenarios). Using this strategy helps provide better insight into how the code behaves under varying conditions and also highlights areas where the tests or the code need to be more robust.
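A hand-written sketch can make the idea concrete. In the Python example below, the mutant is created manually by changing `>=` to `>` in a hypothetical `is_adult` function. Dedicated mutation testing tools generate such variants automatically, so this is only an illustration of the principle.

```python
def is_adult(age):
    return age >= 18


def is_adult_mutant(age):
    # Mutant: the ">=" operator has been replaced with ">"
    return age > 18


def weak_suite(fn):
    """Test suite with no check at the boundary value 18."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn):
    """Same suite plus a boundary check that distinguishes the mutant."""
    return weak_suite(fn) and fn(18) is True


# The weak suite passes for both versions, so the mutant "survives"
print(weak_suite(is_adult), weak_suite(is_adult_mutant))

# The strong suite fails for the mutant, so the mutant is "killed"
print(strong_suite(is_adult), strong_suite(is_adult_mutant))
```

A surviving mutant points to a gap in the tests, here the missing check at the boundary value.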

50. How do manual tests contribute to ensuring a comprehensive scope of testing is achieved before deployment?

Manual tests are important for ensuring a thorough, comprehensive scope of testing is achieved before deployment as they help identify areas that automated processes may have overlooked during testing phases and allow for more detailed investigations into potential issues and bugs in the system from end-user perspectives. This can prove invaluable in uncovering any hidden problems related to usability or functionality that could otherwise go unnoticed until after launch, thus saving considerable time and resources when it comes to bug fixing post-release.

51. What is defect density in the code deployment process?

Defect density (DD) measures the number of defects per 1000 lines of code. It is frequently used to assess software quality and can be a sign of efforts being made to patch bugs or enhance features while the product is being developed. Defects are typically identified through rigorous testing processes like unit tests, integration tests, system tests, or user acceptance testing before releasing new software versions into production environments. To calculate defect density, you first total all the defects discovered in your application and then divide this by the total code size in KLOC (1 KLOC = 1000 lines of code).

A higher defect density indicates that more bugs may be present than originally expected when creating or deploying software. The acceptable defect density will differ from organization to organization, depending on the type of applications being developed. But generally, most organizations aim to keep their defect density under 10 defects per KLOC, with some aiming even lower at 5-7 defects per KLOC.
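The calculation itself is a single division. A minimal sketch in Python, where the function name `defect_density` and the sample figures are made up for illustration:

```python
def defect_density(defects, lines_of_code):
    """Return the number of defects per KLOC (1000 lines of code)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    kloc = lines_of_code / 1000
    return defects / kloc


# Hypothetical figures: 30 defects found in a 15,000-line application
print(defect_density(30, 15000))  # 2.0 defects per KLOC
```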

52. How do you ensure the input boundaries are properly validated and tested?

To ensure proper validation and testing of input boundaries, it is important to create effective test cases which will cover a wide range of inputs, including invalid inputs such as wrong type (e.g., string instead of integer) and out-of-range values outside acceptable limits (e.g., negative numbers when only positive ones are expected). Additionally, input boundaries' conditions should be tested - i.e., extreme input values towards each end of an accepted threshold range (such as upper/lower bounds on length or numerical value ranges).
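As an illustration, the sketch below derives boundary test values for an integer range and runs them, plus a wrong-type input, through a validator. The `accepts_age` validator and its 18 to 65 range are assumptions made up for this example.

```python
def boundary_values(minimum, maximum):
    """Values just below, on, and just above each bound of an integer range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def accepts_age(value):
    # Hypothetical validator: whole numbers from 18 to 65 inclusive
    is_whole_number = isinstance(value, int) and not isinstance(value, bool)
    return is_whole_number and 18 <= value <= 65


for value in boundary_values(18, 65):
    print(value, accepts_age(value))

# Wrong type: a string where an integer is expected
print("abc", accepts_age("abc"))
```

Only the values 17 and 66 and the string should be rejected. A defect such as an off-by-one comparison would show up as a wrong result at exactly one of these points.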

53. How do you test for unexpected functionality outside major functionality requirements?

Testing for unexpected functionality outside major functionality requirements starts with documented, valid requirements and clear specifications of what constitutes desired major functionality versus undesired behaviour. With this guidance, testers can examine system responses under various scenarios and find unexpected functionality issues that arise from sources not covered by the major functionality test plans, which were created around specific customer needs or industry trends and standards at the time the product originated.

54. How to prioritize bug releases with a suitable management tool?

A suitable approach is to maintain a bug release tracker, such as a spreadsheet or management tool, with priority levels assigned according to severity and urgency to ensure quicker resolution of high-priority bugs. Additionally, the bug release list should be reviewed regularly with stakeholders, including product and technical leads, so that management can better understand the impact on the overall project timeline or on other areas where resources may need to be redirected. This facilitates more efficient prioritization and handling of issues related to bug releases.

55. Are there any specifications for standard runtime environment setups when performing manual tests?

When performing manual tests, a standard runtime environment must be established to provide consistent results across the test cases carried out by different testers. This means defining system requirements, including the software versions used and hardware conditions such as available memory or the type of device on which the tests will take place.

56. What are the input boundaries that should be considered when performing manual testing on an input box?

When performing manual testing on an input box, input boundaries should be considered, such as input length, data type validation, acceptable values for the input field, and so on. Boundaries of input should also include restrictions such as maximum and minimum values, date or time formats, etc. For an input box, manual testing involves verifying the functionality of the text field (e.g., data entry length limits) to ensure that characters are correctly displayed in it, checking the accuracy of selections from any associated drop-down menus, validating its handling of incorrect user inputs, and checking any other relevant features associated with the input box.

57. How can memory leaks be identified during product and software development?

Memory leaks can be identified during product and software development by using a memory profiler to detect memory blocks that are allocated but never freed, which shows up as steadily growing usage of the system's RAM. Memory leaks can also be identified by running unit tests to check for unexpected behaviour of software components and by reviewing the release notes for known issues before executing a build. Furthermore, testing teams should ensure that all resources used in a program are released after use to prevent these memory leaks from occurring.

58. What strategies could be implemented to improve quality assurance in the software industry?

Strategies that could be implemented to improve quality assurance in the software industry include:

- Reducing time-to-market of products and services by implementing automated test suites.

- Improving communication between developers and testers through integrated platforms such as Slack.

- Integrating DevOps processes across teams for faster deployment cycles with fewer bugs.

- Introducing internal procedures that require several rounds of rigorous testing before releasing a product/service.

- Rewarding employees who raise awareness about cybersecurity threats early in the development process.

59. To what extent is human intervention necessary for detecting the time between failure issues?

Human intervention is necessary when investigating time-between-failure issues, since some components require human analysis rather than machines automatically pinpointing problems within complex systems, especially systems prone to multiple failures over long periods due to insufficient maintenance practices.

60. How would you assess if a poor design has been used within standard documents or the internal architecture of a system or application?

Poor design within standard documents or internal architecture can be assessed through various tools, including code review checklists, automation scripts that generate reports on compliance levels, peer reviews during architecture/design meetings, etc.

61. In what ways can the type of performance testing detect all potential flaws before the launch of a system/application?

Performance testing can detect potential flaws before launch by running a range of tests that simulate real-world conditions, including load, stress, scalability, and endurance tests. These should be run manually and/or through automated systems to analyze different aspects like latency, throughput, etc.

62. How should localization testing be conducted to ensure the accuracy of the application's output?

Localization testing should involve comparing the application output with predetermined parameters to check if it adheres to the specified design specifications across all relevant geographical regions or languages. Localization testing should also involve reviewing the local language resources, including translations and dialects, to identify discrepancies or potential issues.

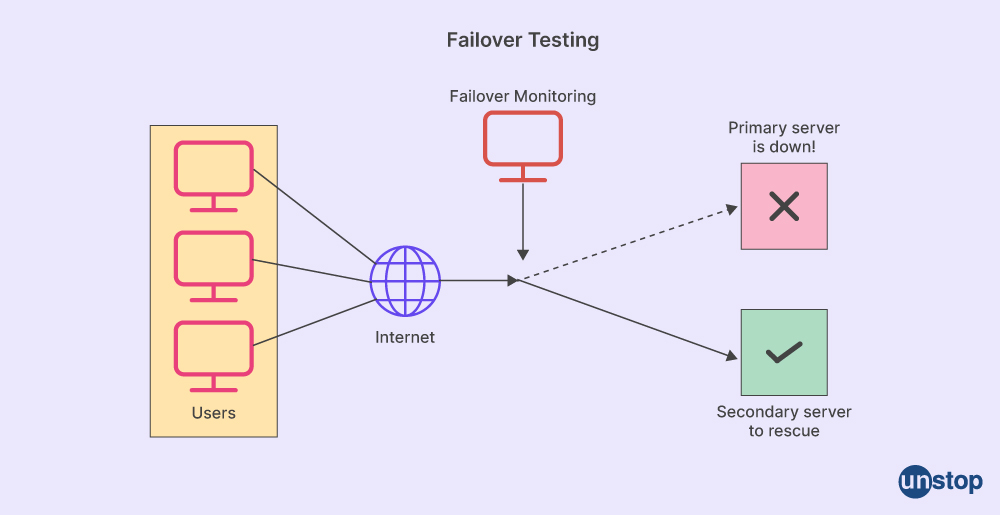

63. What strategies can we use to guarantee system stability for failover testing?

Strategies for failover testing include using simulations and virtual environments to test system stability with increasing levels of load or stress on resources and ensuring disaster recovery plans are in place if any faults occur during operations. Components that fail during failover testing should be identified quickly and replaced according to the disaster recovery plan to maintain system stability.

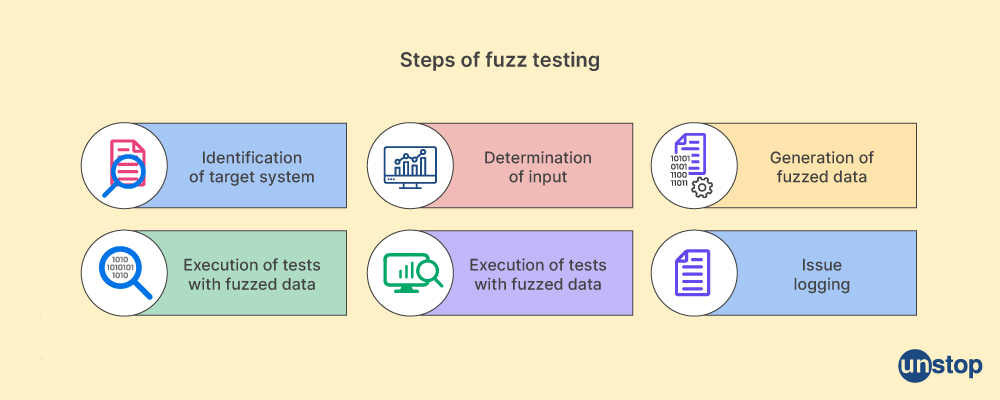

64. Is fuzz testing an appropriate approach for validating software security features?

Yes, fuzz testing is suitable for validating software security features by randomly changing inputs that simulate unexpected user behaviour and checking how the application responds in such scenarios before releasing it into a production environment. Fuzz testing is an effective method for uncovering bugs and potential security vulnerabilities in applications, as it focuses on validating input parameters that are not usually tested by traditional testing techniques.
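A very small random fuzzer can be sketched in Python as follows. The function under test, `parse_quantity`, is hypothetical, and the fuzzer assumes that `ValueError` is the documented way for it to reject bad input, so any other exception is treated as a finding. Real fuzzing tools are considerably more sophisticated than this.

```python
import random
import string


def parse_quantity(text):
    """Hypothetical function under test: parse a quantity from user input."""
    value = int(text.strip())
    if value < 0:
        raise ValueError("quantity must be non-negative")
    return value


def fuzz(target, runs=1000, seed=0):
    """Feed random printable strings to target and collect unexpected exceptions."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    unexpected = []
    for _ in range(runs):
        length = rng.randint(0, 12)
        text = "".join(rng.choice(string.printable) for _ in range(length))
        try:
            target(text)
        except ValueError:
            pass  # documented rejection of bad input
        except Exception as exc:  # anything else is a finding
            unexpected.append((text, exc))
    return unexpected


findings = fuzz(parse_quantity)
print(f"{len(findings)} unexpected failures")
```

Each entry in `findings` pairs the offending input with the exception it caused, which gives the tester a reproducible case to report.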

65. Are there any specific considerations that need to be considered while conducting globalization testing?

Globalization testing should consider factors such as language support availability, cultural reference compatibility, and usability/accessibility among different locales before releasing an international version of an application. Globalization testing ensures an application works and behaves similarly for users across all target languages, regions, or cultures.

66. What techniques and procedures can we utilize in hybrid integration testing cases to detect all possible errors or discrepancies within different modules of the program codebase?

Techniques utilized in hybrid integration testing cases can range from manual investigations (e.g. comparison between expected and actual results) to automated script execution that cross-references data provided by disparate subsystems at various stages during a single run. Hybrid integration testing also allows for the identification of masked defects, blocking-type issues, and boundary value errors that would not be visible in individual module test cases.

67. What kind of semi-random test cases could provide a comprehensive overview of individual module performance on various operating systems?

Semi-random test cases could encompass unit tests conducted on individual modules' process flow logic when running on particular operating systems (e.g. Unix, Linux, etc.) to ensure that all functionalities are running as expected. Semi-random test cases should also be used to test the error values on different inputs and boundaries to identify any blocking-type issues or masked defects.

68. Can surface-level tests help identify blocking-type issues related to user interfaces, such as functionality failure due to masked defects that are not visible at first glance by testers?

Yes, surface-level tests can identify blocking-type issues related to the UI (e.g. user input validation failures or form element display errors), which might not be detected if the system were tested in isolation without considering its interaction with other components of the application. Blocking-type issues occur when the UI fails to function correctly due to a user input validation failure or form element display error. Masked defects are errors hidden within valid functionality and can be difficult for testers to identify unless the conditions or scenarios that make them surface are actively tested.

69. Which types of high-level tests would prove useful when assessing error values caused by incorrect boundary value analysis technique execution during manual verification processes?

High-level tests such as end-to-end integration testing and system performance testing should be conducted when assessing error values caused by incorrect execution of the boundary value analysis technique during manual verification, to confirm accurate results from an application's internal functions and external interfaces throughout all stages of its development life cycle. Boundary value analysis is a testing approach that identifies errors by examining an application's behaviour at the edges of its valid input and output ranges. With these high-level tests, error values caused by incorrectly applied boundary value analysis can be identified and rectified.

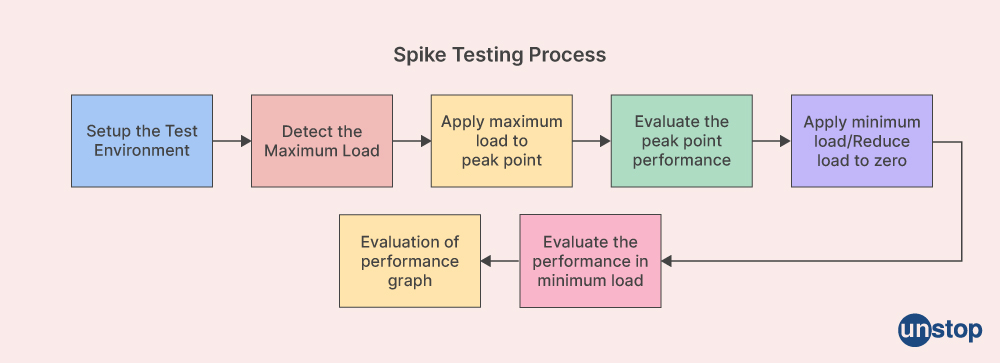

70. What is spike testing? What does it evaluate?

Spike testing is a software testing technique that evaluates the system response when subjected to a sudden burst of workloads or requests beyond its normal operating capacity. It measures and records how well your application can withstand extremely high loads so that you know just how much abuse it can handle before it fails. When running spike testing, testers should monitor performance metrics such as memory utilization, CPU usage, server latency times, etc., to understand which part of the system has failed and why it has become unresponsive under heavy load conditions.

71. How is defect priority determined in complete functionality tests?

Defect priority during complete functionality tests is determined by analyzing the defects' impact on the overall test objectives, such as how efficiently and effectively the functional requirements meet user expectations for a particular feature. The analysis may be done at the interface or module level, or at page level across applications and modules, depending on where the defects are reported from. Defect priority depends on factors such as criticality and severity. For instance, critical defects have a more damaging impact than major ones, while minor defects may be deferred, since resolving them first could waste development time and effort that is better spent on higher priorities. When determining defect priorities, it's important to also consider other operational constraints within the delivery parameters defined by stakeholders.

72. Which inherent failures can occur during lower-level modules?

Inherent failures that can occur in lower-level modules include incorrect business logic implementation, missing or incorrect data validation in forms, broken user or system flows, etc. Unintentional vulnerabilities caused by improperly configured permissions on elements like files, directories, and databases should also be assessed at this stage for potential security risks from malicious actors outside the organization’s infrastructure boundaries. This acts as an additional step to prevent abuse of application weaknesses down the line, however trivial they appear at first. It matters most when testing takes place outside controlled environments, such as on production servers, where real-world scenarios have a greater chance of harming overall performance if left unchecked over time.

73. Are higher-priority issues identified while performing top-level modules?

Higher-priority issues are identified through resource-intensive tests performed before code changes are promoted between stages. These tests can be conducted without compromising the stability and integrity of existing features and offer a significantly higher return on investment than development operations that are often deemed too risky. It's important that vendors maintain minimum release cycles along with a proper versioning strategy, supported by strong documentation throughout each phase, covering everything needed to make migration smoother. This also makes it possible to roll back changes quickly and easily when something goes wrong, for example when an unanticipated issue partway through a release is found only after rigorous validation prior to launch.

74. Can the input domain be used to identify prevalent issues in the manual testing process?

Yes, the input domain can be used to identify prevalent issues in the manual testing process by performing feature-level validations, such as checking the field types assigned in each form from a frontend perspective and evaluating the backend processing flows associated with them. This involves analyzing the data structures used when information is transferred between tiers, depending on the system architecture, while also taking into account latency caused by location differences. Failing to account for these factors before testing begins can lead to issues.

Whenever applicable, capture all user personas across multiple scenarios, ideally following their most frequent use cases through each session from start to finish, until feedback on successful completion is received. This helps improve overall performance against the initially defined requirements while maintaining appropriate audit records, and it acts as an early warning system for potential risks measured against predetermined acceptable parameters and threshold limits. Setting these limits beforehand reduces the likelihood of malicious activity within core layers going unnoticed.

75. How does the alpha testing period impact the product's performance in endurance testing?

The alpha testing period can indicate how well the product will perform under endurance tests, allowing developers to better understand any underlying system issues before running prolonged operational simulations.

76. Does enabling pre-emptive monitoring during alpha testing provide useful data for evaluating system resilience under long-term operation?

Yes, pre-emptive monitoring during alpha testing provides essential data for long-term operations by allowing engineers to identify potential risks and test software stability over different scenarios, such as regular load or peak demand conditions that may not be encountered in short-duration functional tests.

77. Is there a correlation between results observed in alpha and endurance tests of similar products or environments?

Depending on the complexity and type of application being tested, there could be some correlation between results observed in alpha and endurance tests. However, this cannot be assumed without carrying out specialized assessments against key performance indicators for each given environment/product combination before scheduling full test cycles.

78. How can any issues found during the alpha test stage be addressed before undergoing endurance testing?

When performing alpha tests, development teams need to identify any defects early on so that they can take appropriate action and prevent larger problems from occurring or escalating further down the line when endurance testing takes place. This includes implementing bug fixes promptly if needed, validating code changes with suitable integration and regression testing plus incorporating periodic maintenance activities into release schedules where necessary to ensure system stability.

78. How does the tool prioritize high-priority issues?

The tool prioritizes high-priority issues by identifying which components are most critical to completing a given task and providing the user with a clear overview of these elements so that they can decide how best to proceed.

79. What techniques are used in manual testing when utilizing this powerful tool?

When using this powerful tool, manual testing processes usually involve comparing actual results against expected outcomes to diagnose any discrepancies or errors and then making adjustments during development cycles for better accuracy and reliability. Additionally, automated tests may be used alongside manual testing techniques to verify system functionality further when changes have been made before releasing an update into the production environment or conducting final acceptance tests by stakeholders.

80. What is the expected operational time of the executable file?

An executable file's operational time depends on several factors, including the computer's hardware, operating system, and installed software. Measuring the expected operational time is important so any bottlenecks can be identified and addressed. This information can be gathered by running performance tests or benchmarking tools on the system in question before deployment.

81. How does manual frame testing help ensure equivalence class partitioning and interdependent environments?

Manual frame testing helps ensure equivalence class partitioning and interdependent environments by breaking down logical groupings into smaller components that are easier to test for accuracy against predetermined criteria within each class area or environment. Doing this makes unit testing more efficient because testers do not need to review every component at once manually, and can instead focus on specific areas of concern needed for integration with other systems and applications as part of the end-user experience.
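Equivalence class partitioning itself can be illustrated with a short Python sketch. It assumes a hypothetical "age" field that accepts 18 to 65 inclusive, splits the possible inputs into three classes, and picks one representative per class, on the assumption that every value within a class is handled the same way.

```python
# Hypothetical partitions for an "age" field that accepts 18 to 65 inclusive
partitions = {
    "below range (invalid)": range(0, 18),
    "within range (valid)": range(18, 66),
    "above range (invalid)": range(66, 121),
}


def representative(values):
    """Pick the middle value of a partition as its single test input."""
    return values[len(values) // 2]


test_inputs = {name: representative(values) for name, values in partitions.items()}
print(test_inputs)
```

Three test inputs then stand in for 121 possible values, which is what makes manual execution practical.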

82. How can we create a comprehensive list of implementation knowledge when using manual frame testing?

To create a comprehensive list of implementation knowledge when using manual frame testing, several steps are involved: defining which functional and non-functional requirements should be addressed; identifying objectives; establishing acceptance criteria; creating detailed test cases based on all previous inputs; executing these tests in distinct stages within their respective test plans; and finally interpreting the results from each stage before proceeding further in the development process. This method helps ensure that the released product meets or exceeds customer expectations, while also helping to identify potential issues or areas of improvement during early development stages for quicker resolution.

83. What are the benefits and challenges of the white box testing approach?

The white box testing approach provides detailed information about how code is executed, which allows testers to identify areas where bugs may occur due to incorrect coding practices or logic errors. This typically leads to faster debugging than approaches that lack this visibility, since testers already have an idea of where in the source code potential issues could arise, instead of searching through the application's interface elements without guidance. The latter is generally more time-consuming than testing informed by a thorough white-box investigation.

The major challenge of white box testing is that it requires testers with technical expertise and an in-depth understanding of the software's internal structure, code, and implementation details.
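As a small illustration of white box thinking, the sketch below uses a hypothetical function, `shipping_fee`, whose internal branches are known to the tester. Each test input is chosen specifically to exercise one execution path, which is only possible with knowledge of the code's structure.

```python
def shipping_fee(order_total, is_member):
    """Hypothetical function under test with three execution paths."""
    if order_total <= 0:
        raise ValueError("order_total must be positive")
    if is_member or order_total >= 50:
        return 0.0
    return 4.99


def test_all_branches():
    # Path 1: invalid input triggers the guard clause.
    try:
        shipping_fee(0, False)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
    # Path 2a: free shipping because of membership.
    assert shipping_fee(10, True) == 0.0
    # Path 2b: free shipping because of the order threshold.
    assert shipping_fee(50, False) == 0.0
    # Path 3: standard fee applies.
    assert shipping_fee(49.99, False) == 4.99


if __name__ == "__main__":
    test_all_branches()
    print("all branches exercised")
```

A black box tester could stumble on the same cases, but would have no way to know that the `or` condition hides two separate reasons for free shipping, each of which deserves its own test.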

84. How can automation testing skills help save time and effort during software testing?

Automation testing skills help save time and effort compared to manual testing by allowing a tester to write scripts that automatically execute tests against an application's user interface. These scripted tests can be run many times within a short period while recording the results. This supports more detailed analysis at any point in the development lifecycle, instead of waiting hours, days, or weeks for manual runs, depending on the size and complexity of the project.
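The idea of running scripted tests repeatedly while recording results can be sketched with Python's built-in `unittest` module. This is a simplified illustration: `normalize_username` is a hypothetical stand-in for application behavior, and a real UI suite would drive the interface through a browser or device automation tool instead.

```python
import unittest


def normalize_username(raw):
    """Hypothetical stand-in for application behavior under test."""
    return raw.strip().lower()


class UsernameTests(unittest.TestCase):
    def test_strips_whitespace(self):
        self.assertEqual(normalize_username("  alice "), "alice")

    def test_lowercases(self):
        self.assertEqual(normalize_username("Bob"), "bob")


def run_suite(times=3):
    """Run the suite repeatedly and record a summary of each run."""
    history = []
    for run_number in range(1, times + 1):
        suite = unittest.defaultTestLoader.loadTestsFromTestCase(UsernameTests)
        result = unittest.TestResult()
        suite.run(result)
        history.append({
            "run": run_number,
            "tests": result.testsRun,
            "failures": len(result.failures),
            "errors": len(result.errors),
        })
    return history


if __name__ == "__main__":
    for record in run_suite():
        print(record)
```

Because each run's outcome is stored in `history`, the results can be reviewed later or compared across builds without anyone re-executing the steps by hand.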

As a biotechnologist-turned-writer, I love turning complex ideas into meaningful stories that inform and inspire. Outside of writing, I enjoy cooking, reading, and travelling, each giving me fresh perspectives and inspiration for my work.
