45+ Software Testing Interview Questions And Answers (2026)

Software testing is a crucial stage of developing and deploying any software program, so it is no surprise that specialists are hired to carry out the various testing processes. To perform well in this role, a candidate needs the right skills and knowledge. Companies assess prospective employees by asking software testing interview questions and giving them assignments.

In this article, we have compiled a list of 50 software testing interview questions and answers to help you revise the major topics and perform well during the interview. Let's begin with a brief description of software testing and its importance.

About Software Testing: A Brief History

During World War II, the warring parties raced to decode enemy communications and gain the upper hand. In the process, they built powerful electronic computers for this purpose. As a result, by the end of the war in 1945, the terms 'debugging' and 'computer bug' had entered common use. For a long time, software testing was limited to debugging.

About two decades later, developers sought to go beyond debugging and test their applications in more realistic settings. Today, software testing is a broad field comprising many test types. Its primary purpose is to ensure that the software meets its requirements and is free of defects. Over time, testing has advanced and grown alongside software development. Now let's look at the software testing interview questions for freshers, intermediate candidates, and advanced professionals.

Software Testing Interview Questions: Basics

Q1. Explain software testing.

Software testing refers to the process of evaluating a software application or system to identify any defects, errors, or malfunctions. It involves executing the software with the intention of finding bugs, verifying its functionality, and ensuring that it meets the specified requirements and quality standards.

The primary goal of software testing is to uncover issues that could potentially impact the software's performance, reliability, security, usability, and overall user satisfaction. By systematically testing the software, developers and quality assurance teams can gain confidence in its quality and make informed decisions regarding its release.

Software testing can encompass various techniques and methodologies, including manual testing, automated testing, and a combination of both. It typically involves designing test cases, executing them, comparing the actual results with expected results, and reporting any discrepancies or failures. Testers may also perform different types of testing, such as functional testing, regression testing, performance testing, security testing, application performance testing, and usability testing, depending on the specific requirements and objectives of the software.

Effective software testing helps ensure that the software meets the desired standards of quality, functionality, and performance, thereby reducing the risk of software failures, customer dissatisfaction, and potential financial or reputational losses. It is an integral part of the software development life cycle (SDLC) and plays a crucial role in delivering reliable and robust software solutions to end users.
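The design, execute, and compare loop described in this answer can be sketched in a few lines. This is a minimal illustration rather than a real test framework: `apply_discount`, `Case`, and `run_cases` are hypothetical names invented for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    return round(price * (1 - percent / 100), 2)


@dataclass
class Case:
    """One test case: a name, the inputs, and the expected result."""
    name: str
    args: tuple
    expected: Any


def run_cases(func: Callable, cases: List[Case]) -> List[str]:
    """Execute every case and report discrepancies between actual and expected."""
    failures = []
    for case in cases:
        actual = func(*case.args)
        if actual != case.expected:
            failures.append(f"{case.name}: expected {case.expected}, got {actual}")
    return failures


CASES = [
    Case("no discount", (100.0, 0), 100.0),
    Case("ten percent", (200.0, 10), 180.0),
    Case("full discount", (50.0, 100), 0.0),
]

print("discrepancies:", run_cases(apply_discount, CASES))
```

An empty list of discrepancies means every actual result matched its expected result. In practice a framework such as `unittest` or `pytest` would handle the execution and reporting.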

Q2. What is the importance or benefits of software testing?

Software testing offers several important benefits in the software development process. Here are some of the key reasons why software testing is important:

- Bug Detection and Defect Prevention: Software testing helps identify bugs, defects, and errors in the early stages of development. By uncovering these issues early, developers can address them promptly, preventing them from escalating into more significant problems during production or after deployment.

- Improved Software Quality: Thorough testing ensures that the software meets the specified requirements and quality standards. It helps identify gaps between expected and actual behavior, allowing developers to refine and enhance the software's functionality, reliability, and performance.

- Enhanced User Experience: Testing helps ensure that the software is user-friendly, intuitive, and provides a positive user experience. By identifying usability issues, interface glitches, or performance bottlenecks, testers can provide feedback to improve overall usability and user satisfaction.

- Increased Reliability and Stability: Rigorous testing helps uncover and fix defects that could cause software crashes, system failures, or data corruption. By addressing these issues, software reliability and stability are improved, reducing the risk of downtime and enhancing the overall dependability of the system.

- Cost and Time Savings: Detecting and fixing defects early in the development process is more cost-effective than dealing with them later during production or post-release. By investing in thorough testing, organizations can minimize the expenses associated with rework, maintenance, and customer support.

- Security and Compliance: Software testing plays a crucial role in identifying security vulnerabilities, such as weak access controls or potential breaches. By performing security testing, organizations can identify and address these risks, ensuring that the software complies with industry standards and regulations.

- Customer Satisfaction and Reputation: Reliable, bug-free software results in higher customer satisfaction, positive reviews, and improved brand reputation. Testing helps deliver a high-quality product that meets or exceeds customer expectations, enhancing user trust and loyalty.

- Continuous Improvement: Testing provides valuable feedback and data on the software's performance, functionality, and user behavior. This information can be used to iterate and improve future versions of the software, ensuring a continuous cycle of enhancement and innovation.

Q3. What is the difference between SDET, Test Engineer, and Developer?

SDET (Software Development Engineer in Test), Test Engineer, and Developer are all roles related to software testing and development, but they have distinct differences in their focus and responsibilities. Here's a breakdown of each role:

- SDET (Software Development Engineer in Test): SDETs are typically involved in both software development and testing activities. They have a strong background in programming and are responsible for creating and maintaining test automation frameworks, tools, and infrastructure. SDETs write code to automate test cases and perform continuous integration and delivery to ensure the quality and reliability of software systems. They work closely with developers, understanding the codebase, and collaborate with the testing team to design and execute tests.

- Test Engineer: Test Engineers are primarily focused on the testing aspect of software development. They design and execute test cases, create test plans, identify and report defects, and ensure the overall quality of software applications. Test Engineers may use manual testing techniques, as well as test automation tools, to verify the functionality, performance, and usability of software products. They collaborate with developers and stakeholders to understand requirements and provide feedback on potential improvements.

- Developer: Developers, also known as Software Engineers or Software Developers, are primarily responsible for writing and maintaining code to build software applications. Their focus is on designing, implementing, and debugging software solutions based on specific requirements. Developers typically have expertise in programming languages and frameworks and work closely with other team members to develop software systems that meet functional and non-functional requirements. While they may write unit tests and perform some level of testing, their main focus is on software development rather than testing.

Q4. List the different categories and types of testing.

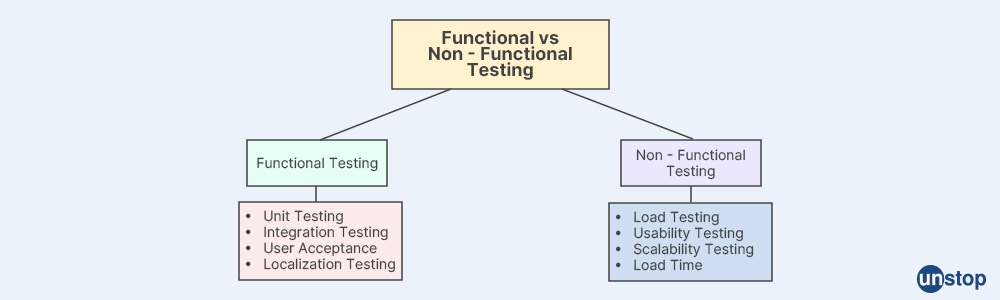

Testing is an integral part of the software development life cycle (SDLC). It helps ensure the quality of software products. There are various categories and types of testing, each serving a specific purpose. Here are some common categories and types of testing:

Functional Testing

- Unit Testing: Testing individual units of code to verify their correctness.

- Integration Testing: Testing the interaction between different units/modules of the software.

- System Testing: Testing the entire system to verify that all components work together as expected.

- Acceptance Testing: Testing conducted to determine whether a system satisfies the acceptance criteria and meets the business requirements.

Non-Functional Testing

- Performance Testing: Evaluating system performance under specific conditions, such as load testing, stress testing, or scalability testing.

- Security Testing: Identifying vulnerabilities and ensuring the system is secure against unauthorized access, attacks, and data breaches.

- Usability Testing: Evaluating the user-friendliness of the software and its ability to meet user expectations.

- Compatibility Testing: Testing the software's compatibility with different operating systems, browsers, devices, or databases.

- Accessibility Testing: Testing the software's compliance with accessibility standards to ensure it can be used by individuals with disabilities.

Structural Testing

- White Box Testing: Examining the internal design/ structure of the software, including code and logic, to ensure all paths and conditions are tested.

- Code Coverage Testing: Measuring the extent to which the source code is executed during testing.

- Mutation Testing: Introducing small changes (mutations) into the code to check if the existing test cases can detect those changes.
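Mutation testing is easier to picture with a concrete mutant. The sketch below is hand-rolled for illustration: `is_adult`, its mutant, and the two suites are hypothetical, and real mutation testing tools generate and run mutants automatically.

```python
def is_adult(age: int) -> bool:
    """Original (hypothetical) unit under test."""
    return age >= 18


def is_adult_mutant(age: int) -> bool:
    """Mutant: the comparison operator >= has been changed to >."""
    return age > 18


def weak_suite(func) -> bool:
    """Passes when func is correct on inputs far away from the boundary."""
    return func(30) is True and func(5) is False


def strong_suite(func) -> bool:
    """Adds the boundary values 17 and 18, which distinguish the mutant."""
    return weak_suite(func) and func(18) is True and func(17) is False


for name, suite in [("weak", weak_suite), ("strong", strong_suite)]:
    passes_original = suite(is_adult)
    kills_mutant = not suite(is_adult_mutant)
    print(f"{name} suite: passes original={passes_original}, kills mutant={kills_mutant}")
```

A suite "kills" a mutant when at least one of its tests fails on the mutated code. Here the weak suite lets the mutant survive, which signals that the boundary at 18 is not covered.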

Regression Testing

- Retesting: Repeating tests that previously failed to ensure the reported issues have been resolved.

- Smoke Testing: Running a subset of tests to quickly determine if critical functionalities work after a software change or release.

- Sanity Testing: A subset of regression testing that runs a brief round of tests to confirm that the critical functionalities of the system still work after major changes.

Exploratory Testing

- Ad-hoc Testing: Testing without any formal test cases, following an exploratory approach to discover defects and assess the system's behavior.

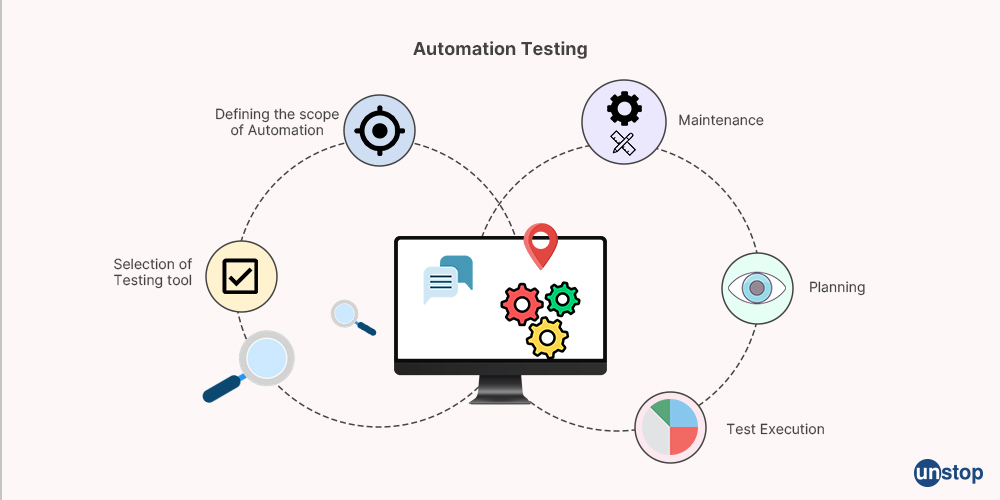

Automation Testing

- Test Automation: Writing scripts or using specialized tools to automate the execution of test cases and improve testing efficiency.

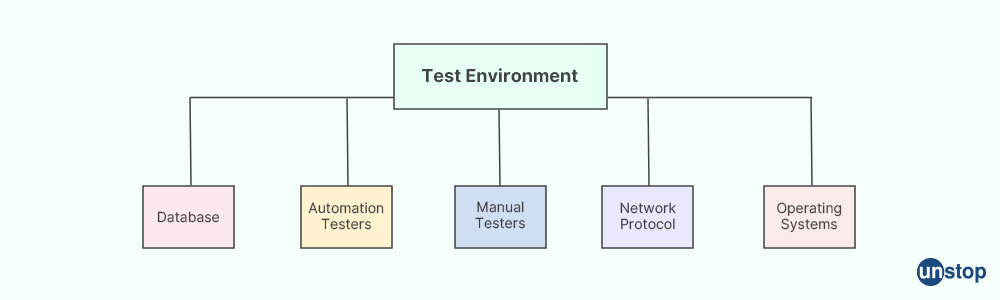

Q5. What do you mean by 'test environment'?

In software testing, a test environment refers to a specific setup or configuration in which software testing activities take place. It is an environment created to mimic the real-world conditions under which the software will be used. A test environment typically consists of hardware, software, network configurations, and other resources necessary for conducting testing.

The purpose of a test environment is to provide a controlled and isolated space where testers can verify the functionality, performance, compatibility, and other aspects of the software. It allows testers to evaluate the software's behavior under different conditions and to identify any issues or defects that may arise.

Q6. State the principles of software testing with a brief explanation.

There are 7 widely accepted principles:

- Presence of defects: Testing can show that defects are present, but it cannot prove that there are none. Even if repeated regression testing finds no defects, that does not mean the software is bug-free.

- Not possible to conduct exhaustive testing: Testing every functionality with every combination of valid and invalid inputs and preconditions is called exhaustive testing, and it is impossible in practice. This is why we conduct optimal testing based on the importance of the modules and a risk assessment of the software/application.

- Early testing: We should start testing from the initial stages of the software development life cycle, so that defects are detected and fixed early in the development and testing lifecycle.

- Defect clustering: This follows the Pareto Principle: a small share of the modules (about 20%) contains most of the defects (about 80%), while the remaining 80% of the functionality accounts for the remaining 20% of defects.

- Pesticide paradox: The base for this principle comes from the observation that continuous use of a particular pesticide mix to eradicate insects leads the insects to develop resistance to the pesticide, rendering it ineffective. The same holds true for software testing. It is believed that employing a set of repetitive tests over and over again is ineffective in discovering new defects. One must hence review/ revise the test cases regularly and keep them up-to-date.

- Testing is context-dependent: The type of test we use depends on the context and purpose of the software under test.

- The fallacy of absence of errors: Software that is 99% bug-free can still be unusable if it does not meet the user's needs. The fallacy is the belief that the software will work perfectly well for its users just because no bugs were found.
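A quick calculation shows why exhaustive testing is impractical. The field names, domain sizes, and execution speeds below are made-up assumptions chosen only to illustrate how fast input combinations grow.

```python
from math import prod

# Hypothetical input form: each field and how many distinct values it accepts.
field_domains = {
    "country": 195,
    "age": 120,
    "account_type": 4,
    "newsletter_opt_in": 2,
}

# Every combination of field values would need its own test.
combinations = prod(field_domains.values())
hours_at_one_test_per_second = combinations / 3600
print(f"form combinations: {combinations:,}")
print(f"hours at 1 test/second: {hours_at_one_test_per_second:.0f}")

# A function taking two 32-bit integers has 2**64 possible input pairs.
pairs = (2 ** 32) ** 2
seconds_per_year = 3600 * 24 * 365
years_at_a_billion_tests_per_second = pairs / 1e9 / seconds_per_year
print(f"32-bit pairs: {pairs:,}")
print(f"years at 1e9 tests/second: {years_at_a_billion_tests_per_second:.0f}")
```

Even the small form needs 187,200 tests, and the two-integer function would take centuries at a billion tests per second, which is why test selection is driven by risk and priority instead.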

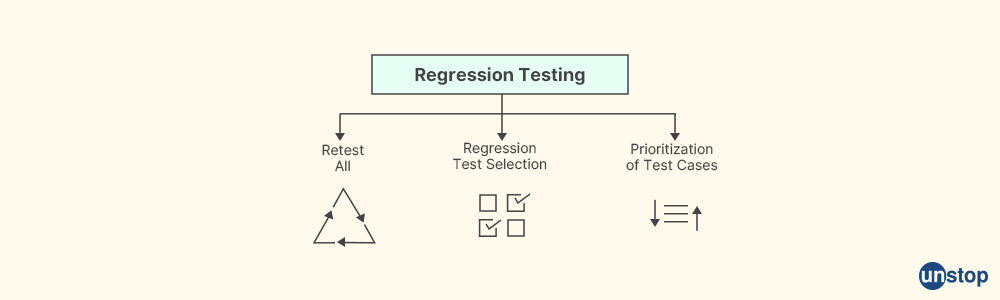

Q7. Explain regression testing.

Regression testing is a type of software testing that aims to ensure that previously developed and tested software continues to function correctly after changes or updates have been made. It is performed to verify that modifications or enhancements to the software have not introduced new defects or caused any regression, meaning that previously working features or functionalities have not been negatively impacted.

The purpose of regression testing is to identify any unintended side effects or conflicts between the modified code and the existing codebase. It helps ensure that the software remains stable and reliable throughout the development and maintenance process.

Regression testing typically involves retesting the affected areas of the software as well as related features to ensure that they still function as expected. It can be performed at various levels, including unit testing, integration testing, system testing, and acceptance testing, depending on the scope and complexity of the changes made.

Some common techniques used in regression testing include re-executing existing test cases, creating new test cases specifically targeting the modified functionality, and using automated testing tools to streamline the process. The selection of test cases for regression testing is crucial and should be based on the potential impact of the changes and the criticality of the affected features.
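As a rough sketch of these techniques, the example below re-executes an existing suite and adds a new case that targets the modified behavior. The function `normalize_username` and the imagined whitespace fix are hypothetical.

```python
def normalize_username(raw: str) -> str:
    """Hypothetical function under test.

    Assume an earlier version only lowercased the input; the strip() call
    is the recent change that regression testing has to guard.
    """
    return raw.strip().lower()


# Existing cases that passed before the change; re-executed after every change.
REGRESSION_SUITE = [
    ("Alice", "alice"),
    ("BOB", "bob"),
    ("carol", "carol"),
]

# New case written specifically for the modified functionality.
NEW_CASES = [("  Dave  ", "dave")]


def run_suite(cases):
    """Return (input, expected, actual) for every case that fails."""
    failures = []
    for raw, expected in cases:
        actual = normalize_username(raw)
        if actual != expected:
            failures.append((raw, expected, actual))
    return failures


print("regression failures:", run_suite(REGRESSION_SUITE))
print("new-case failures:", run_suite(NEW_CASES))
```

If the change had broken lowercasing, the first list would be non-empty, flagging a regression in behavior that previously worked.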

Q8. What is end-to-end testing?

End-to-end testing is a software testing methodology that verifies the entire flow of an application from start to finish, simulating real user scenarios. It aims to test the interaction and integration of various components, subsystems, and dependencies to ensure that the system works as expected as a whole.

The goal of end-to-end testing is to validate the functional and non-functional requirements of an application by simulating real-world scenarios that a user might encounter. It ensures that all the individual components, modules, and systems work together seamlessly and that data can flow correctly between them. This type of testing helps identify any issues that may arise due to the interactions and dependencies among different parts of the application.

End-to-end testing typically covers the following aspects:

- User Interactions: It tests the complete user journey or user workflow in the application, including all the steps and interactions involved in achieving a specific task or goal.

- Integration Points: It verifies the integration and communication between different modules, services, databases, APIs, or third-party systems that the application relies on.

- Data Flow: It ensures that data is correctly transmitted and transformed throughout the entire system, from input to output, and that it remains consistent and accurate.

- Performance: End-to-end testing may also include performance testing to evaluate the application's response time, throughput, scalability, and resource usage under realistic usage scenarios.

- Security: It can involve testing for security vulnerabilities, ensuring that sensitive data is protected, and authentication and authorization mechanisms are working as intended.

End-to-end testing is typically performed in an environment that closely resembles the production environment to mimic real-world conditions as closely as possible. It can involve both manual testing, where testers simulate user interactions, and automated testing, where scripts or tools automate the execution of test scenarios.
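To make the idea concrete, here is a toy end-to-end check of a checkout workflow. All classes are hypothetical in-memory stand-ins; a real end-to-end test would drive the deployed application through its UI or public API in a production-like environment.

```python
class Inventory:
    """In-memory stand-in for a stock database."""

    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, item, quantity):
        if self.stock.get(item, 0) < quantity:
            raise ValueError(f"insufficient stock for {item}")
        self.stock[item] -= quantity


class PaymentGateway:
    """Stand-in for a third-party payment service."""

    def __init__(self):
        self.charges = []

    def charge(self, user, amount):
        self.charges.append((user, amount))
        return f"txn-{len(self.charges)}"


class OrderService:
    """Ties the components together, as the application would."""

    def __init__(self, inventory, payments, prices):
        self.inventory = inventory
        self.payments = payments
        self.prices = prices

    def checkout(self, user, cart):
        total = sum(self.prices[item] * qty for item, qty in cart.items())
        for item, qty in cart.items():
            self.inventory.reserve(item, qty)
        transaction = self.payments.charge(user, total)
        return {"user": user, "total": total, "transaction": transaction}


def test_checkout_end_to_end():
    """Follow one user journey and check the data at every integration point."""
    inventory = Inventory({"book": 3})
    payments = PaymentGateway()
    service = OrderService(inventory, payments, {"book": 12})

    order = service.checkout("alice", {"book": 2})

    assert order["total"] == 24                   # data flow: price calculation
    assert inventory.stock["book"] == 1           # integration: stock updated
    assert payments.charges == [("alice", 24)]    # integration: payment made
    assert order["transaction"] == "txn-1"        # user-visible outcome
    return order


print(test_checkout_end_to_end())
```

The single test exercises the whole workflow rather than any one class, so a failure points to a problem in how the parts interact.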

Q9. Name the different phases of the STLC (Software Testing Life Cycle).

The Software Testing Life Cycle (STLC) typically consists of the following phases:

- Requirement Analysis: In this phase, testers and stakeholders analyze the requirements to gain a clear understanding of the software being developed, its functionalities, and expected outcomes. Testers identify testable requirements, define test objectives, and determine the scope of testing.

- Test Planning: Test planning involves defining the overall testing strategy and approach. Testers create a test plan that outlines the test objectives, test scope, test schedules, resources required, test environment setup, and test deliverables. Testers also identify the test techniques and tools to be used during the testing process.

- Test Case Development: In this phase, test cases are designed based on the identified requirements and the test objectives. Testers create detailed test cases that specify the inputs, expected outputs, and the steps to be executed. Test cases may be manual or automated, depending on the testing approach.

- Test Environment Setup: Test environment setup involves creating the necessary infrastructure and configurations for testing. This phase includes preparing the hardware, software, networks, databases, and other components required for testing. Testers ensure that the test environment closely resembles the production environment to simulate realistic scenarios.

- Test Execution: In this phase, test cases are executed based on the test plan. Testers follow the predefined test procedures and record the actual results. Defects or issues encountered during testing are logged in a defect tracking system. Testers may perform different types of testing, such as functional testing, integration testing, system testing, performance testing, and regression testing, depending on the project requirements.

- Test Closure: Test closure involves analyzing the test results and making decisions based on the outcomes. Testers prepare test closure reports, which summarize the testing activities, test coverage, and any unresolved issues. The reports also provide an evaluation of the software quality and readiness for deployment.

Q10. What do you mean by Bug Life Cycle or Defect life cycle?

The Bug Life Cycle, also known as the Defect Life Cycle, represents the various stages that a software defect or bug goes through from the time it is identified until it is resolved and closed. It helps track the progress and status of a defect throughout the software testing and development process. The specific stages and terminology may vary between organizations, but the general Bug Life Cycle typically includes the following stages:

- New/Open: This is the initial stage where a tester or a team member identifies and reports a bug. The bug is logged into a defect tracking system or bug tracking tool with relevant details, such as a description of the issue, steps to reproduce, severity, and priority.

- Assigned: Once the bug is reported, it is assigned to a developer or a development team responsible for fixing the bug. The assignment ensures that someone takes ownership of the bug and begins working on it.

- In Progress: At this stage, the developer starts working on fixing the bug. They analyze the bug, reproduce the issue, debug the code, and make the necessary changes to address the problem. The bug is considered "in progress" until the developer has implemented a fix.

- Fixed: When the developer believes they have addressed the bug, they mark it as "fixed" in the defect tracking system. The developer may provide additional details or comments regarding the fix.

- Verified/Ready for Retesting: After the bug is fixed, it is assigned back to the testing team for verification. Testers perform retesting to ensure that the bug has been successfully resolved and that the fix did not introduce any new issues. If the bug is found to be resolved, it is marked as "verified" or "ready for retesting."

- Retest: In this stage, the testing team executes the affected test cases to verify that the bug fix did not impact other areas of the software and that the bug is indeed resolved. If the bug is not reproduced during retesting, it is considered closed. However, if the bug reoccurs or the fix is deemed ineffective, the bug is reopened and moves back to the "In Progress" or "Fixed" stage.

- Closed: If the retesting is successful and the bug is no longer reproducible, it is marked as "closed." The bug is considered resolved, and no further action is required.
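The stages above form a small state machine, which can be sketched as follows. The transition table is a simplifying assumption made for this example (a failed retest always reopens the bug to "In Progress"); real trackers define their own workflows.

```python
from enum import Enum


class BugState(Enum):
    NEW = "New/Open"
    ASSIGNED = "Assigned"
    IN_PROGRESS = "In Progress"
    FIXED = "Fixed"
    READY_FOR_RETEST = "Verified/Ready for Retesting"
    RETEST = "Retest"
    CLOSED = "Closed"


# Allowed moves between stages (an assumption made for this sketch).
ALLOWED_TRANSITIONS = {
    BugState.NEW: {BugState.ASSIGNED},
    BugState.ASSIGNED: {BugState.IN_PROGRESS},
    BugState.IN_PROGRESS: {BugState.FIXED},
    BugState.FIXED: {BugState.READY_FOR_RETEST},
    BugState.READY_FOR_RETEST: {BugState.RETEST},
    BugState.RETEST: {BugState.CLOSED, BugState.IN_PROGRESS},  # close or reopen
    BugState.CLOSED: set(),
}


class Bug:
    """Tracks one defect and rejects transitions the workflow does not allow."""

    def __init__(self, title: str):
        self.title = title
        self.state = BugState.NEW
        self.history = [BugState.NEW]

    def move_to(self, new_state: BugState) -> None:
        if new_state not in ALLOWED_TRANSITIONS[self.state]:
            raise ValueError(
                f"cannot move from {self.state.value} to {new_state.value}"
            )
        self.state = new_state
        self.history.append(new_state)


bug = Bug("Login button unresponsive")
for step in (
    BugState.ASSIGNED,
    BugState.IN_PROGRESS,
    BugState.FIXED,
    BugState.READY_FOR_RETEST,
    BugState.RETEST,
    BugState.CLOSED,
):
    bug.move_to(step)
print(" -> ".join(state.value for state in bug.history))
```

Encoding the transitions in a table makes invalid jumps, such as closing a bug that was never fixed, fail loudly instead of silently corrupting the defect's status.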

Q11. State the difference between functional and non-functional testing.

Functional testing and non-functional testing are two distinct types of software testing that focus on different aspects of the system. Here's a breakdown of the differences between them:

| | Functional Testing | Non-Functional Testing |
| --- | --- | --- |
| Objective | Functional testing aims to validate whether the software functions according to the specified requirements and performs the intended tasks. | Non-functional testing evaluates the system's characteristics and attributes beyond its functionality, examining aspects like performance, usability, reliability, security, and scalability. |
| Focus | It primarily focuses on testing the features and functionality of the system, ensuring that it works as expected and meets the business requirements. | It focuses on testing the system's quality attributes and how well it performs under different conditions rather than specific features. |
| Examples | Unit testing, integration testing, system testing, regression testing, user acceptance testing (UAT), etc. | Performance testing, usability testing, security testing, reliability testing, compatibility testing, stress testing, etc. |
| Test Cases | Functional testing involves designing test cases based on the system's functional requirements and desired behavior. | Non-functional testing requires test cases that target the specific attributes being tested, such as load scenarios for performance testing or different user profiles for usability testing. |
| Verification | It verifies if the system meets the functional specifications, such as user interactions, input/output processing, data manipulation, and business logic. | It validates the system's performance, usability, security measures, response time, stability, and other non-functional aspects to ensure it meets the desired standards. |

In summary, functional testing ensures that the system functions correctly according to the defined requirements, while non-functional testing examines the system's qualities beyond functionality, such as performance, usability, reliability, security, and scalability. Both types of testing play crucial roles in ensuring a software system is of high quality and meets the end-users' needs.
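The contrast can be shown with two checks on the same function: one asks whether the output is correct, the other whether it arrives within a time budget. The function `find_duplicates` and the budget value are hypothetical, and a serious performance test would use dedicated tooling and repeated measurements.

```python
import time


def find_duplicates(items):
    """Hypothetical function under test: return the sorted duplicated values."""
    seen, duplicates = set(), set()
    for item in items:
        if item in seen:
            duplicates.add(item)
        else:
            seen.add(item)
    return sorted(duplicates)


def functional_check() -> bool:
    """Functional: does the output match the specified behavior?"""
    return find_duplicates([3, 1, 3, 2, 1]) == [1, 3]


def performance_check(budget_seconds: float = 2.0) -> bool:
    """Non-functional: does a large input finish within the time budget?"""
    data = list(range(100_000)) * 2
    start = time.perf_counter()
    result = find_duplicates(data)
    elapsed = time.perf_counter() - start
    return len(result) == 100_000 and elapsed < budget_seconds


print("functional:", functional_check())
print("performance:", performance_check())
```

The budget is deliberately generous because wall-clock timings vary between machines.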

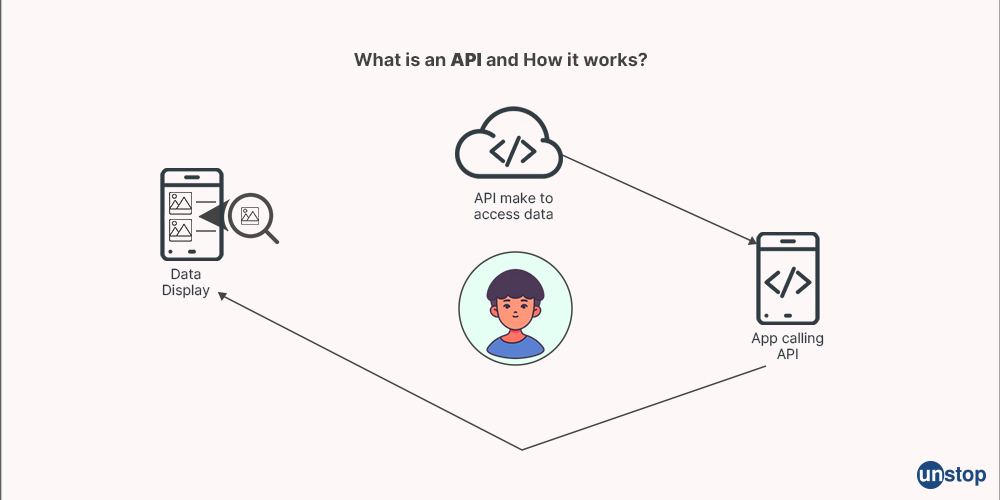

Q12. What is an API?

API stands for Application Programming Interface. It is a set of rules, protocols, and tools that allows different software applications to communicate and interact with each other. An API defines the methods and data formats that applications can use to request and exchange information or perform specific actions.

APIs enable software systems to interact and integrate seamlessly, providing a standardized way for applications to access and utilize each other's functionalities and data. They abstract the underlying implementation details and provide a consistent interface for developers to work with.

Here are a few key points about APIs:

- Communication: APIs facilitate communication between different software components or systems. They define how requests and responses should be structured and transmitted.

- Functionality: APIs expose a set of functions, operations, or services that developers can utilize to perform specific tasks or access certain features of an application. APIs provide well-defined methods and parameters to interact with the underlying system.

- Interoperability: APIs promote interoperability between software applications developed by different parties. By following the API specifications, developers can build applications that can seamlessly integrate and interact with other systems.

- Data Exchange: APIs allow applications to exchange data in various formats, such as JSON (JavaScript Object Notation) or XML (eXtensible Markup Language), enabling the sharing of information between systems.

- Security: APIs often include authentication and authorization mechanisms to ensure secure access to protected resources. This helps control access to sensitive data or functionalities.

- Documentation: APIs are typically documented, providing developers with information on how to use them, including the available endpoints, request/response formats, parameters, and error handling.

APIs can be categorized into different types based on their purpose and functionality. Common types include:

- Web APIs: These APIs allow applications to interact with web services or access web-based resources. They are often based on HTTP protocols and can be used to retrieve data, submit data, or perform specific operations.

- Platform APIs: These APIs are provided by software platforms, such as operating systems, programming frameworks, or cloud platforms, to enable developers to access platform-specific features and services.

- Library APIs: Library APIs provide pre-defined functions and classes that developers can use to perform specific tasks or access specific functionalities. These APIs are typically part of software libraries or development frameworks.

- SOAP APIs and REST APIs: These are two common architectural styles for designing web APIs. SOAP (Simple Object Access Protocol) is a protocol-based API, while REST (Representational State Transfer) is an architectural style that uses HTTP for communication.
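The key points about request structure, data exchange, and security can be illustrated without any network code. The sketch below imitates an API endpoint as a plain function that accepts and returns JSON strings; `handle_request`, the token, and the user record are all hypothetical.

```python
import json

# Hypothetical credentials and data store, for illustration only.
VALID_TOKENS = {"secret-token"}
USERS = {1: {"id": 1, "name": "Ada"}}


def handle_request(raw_request: str) -> str:
    """Imitation of an API endpoint: JSON request string in, JSON response out."""
    request = json.loads(raw_request)

    # Security: reject callers without a valid token.
    if request.get("token") not in VALID_TOKENS:
        return json.dumps({"status": 401, "error": "unauthorized"})

    # Functionality: look up the requested resource.
    user = USERS.get(request.get("user_id"))
    if user is None:
        return json.dumps({"status": 404, "error": "user not found"})

    return json.dumps({"status": 200, "data": user})


# A test exercises the documented contract, not the implementation.
ok = json.loads(handle_request(json.dumps({"token": "secret-token", "user_id": 1})))
denied = json.loads(handle_request(json.dumps({"token": "wrong", "user_id": 1})))
missing = json.loads(handle_request(json.dumps({"token": "secret-token", "user_id": 99})))
print(ok, denied, missing, sep="\n")
```

API tests typically cover exactly these three kinds of outcome: a successful call, an authentication failure, and a request for something that does not exist.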

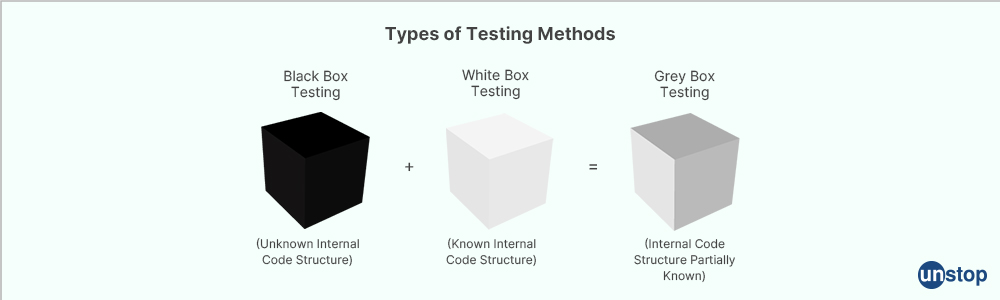

Q13. Define white box testing, black box testing, and gray box testing.

White box testing, black box testing, and gray box testing are different approaches to software testing based on the level of knowledge and access to the internal structure and workings of the system being tested. Here's a brief explanation of each:

- White Box Testing: White box testing is also called clear box testing or structural testing. It involves testing the internal structure, code, and logic of the software application. Testers have access to the underlying implementation details, including the source code, algorithms, and data structures. This allows them to design test cases that target specific paths, branches, and conditions within the code to ensure thorough coverage.

- The objectives of white box testing are to verify the correctness of individual functions or modules, identify logical errors, validate the flow of data, and assess the overall code quality. Techniques such as code coverage analysis, path testing, and unit testing are commonly used in white box testing.

- Black Box Testing: Black box testing focuses on testing the software application from an external perspective without knowledge of its internal structure or implementation details. Testers treat the system as a "black box" and focus on the inputs, expected outputs, and behavior of the application.

- Testers design test cases based on the specified requirements, functionality, and expected user behavior. The goal is to evaluate whether the application behaves as expected and meets the desired requirements without concerning how it is implemented.

- Black box testing techniques include equivalence partitioning, boundary value analysis, error guessing, and user acceptance testing. It aims to validate the functionality, usability, performance, and other non-functional aspects of the software.

- Gray Box Testing: Gray box testing is a hybrid approach that combines elements of both white box and black box testing. Testers have limited knowledge of the internal workings of the system, such as high-level design or internal data structures, while still primarily focusing on external behavior.

- Gray box testing allows testers to design test cases based on both the specified requirements and their understanding of the internal system design. This knowledge can help identify critical areas for testing, design more effective test cases, and increase overall test coverage.

- Gray box testing is often used in integration testing, where multiple components or modules are tested together to ensure proper interaction and communication. Testers can simulate different scenarios, inputs, and configurations to assess the system's behavior and performance under various conditions.

The choice of testing approach (white box, black box, or gray box) depends on various factors such as the project requirements, available knowledge of the system, testing objectives, and the level of access to the internal components. Each approach has its advantages and limitations, and a combination of these approaches can be used to achieve comprehensive test coverage.
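Two of the black box techniques named above, equivalence partitioning and boundary value analysis, can be sketched as follows. The `shipping_fee` function and its fee bands are a hypothetical specification invented for this example.

```python
def shipping_fee(weight_kg: float) -> int:
    """Hypothetical spec: up to 1 kg costs 5, up to 10 kg costs 12,
    up to 30 kg costs 25; anything else is rejected."""
    if weight_kg <= 0 or weight_kg > 30:
        raise ValueError("weight out of range")
    if weight_kg <= 1:
        return 5
    if weight_kg <= 10:
        return 12
    return 25


# Equivalence partitioning: one representative value per valid class.
PARTITION_CASES = [(0.5, 5), (5, 12), (20, 25)]

# Boundary value analysis: values at and just past each boundary.
BOUNDARY_CASES = [(1, 5), (1.01, 12), (10, 12), (10.01, 25), (30, 25)]

# Invalid classes: at or below zero, and above the maximum.
INVALID_INPUTS = [0, -1, 30.01]


def check_valid(cases) -> bool:
    """True when every (input, expected) pair matches the actual fee."""
    return all(shipping_fee(weight) == fee for weight, fee in cases)


def check_invalid(inputs) -> bool:
    """True when every invalid input is rejected with ValueError."""
    for weight in inputs:
        try:
            shipping_fee(weight)
        except ValueError:
            continue
        return False
    return True


print("partitions ok:", check_valid(PARTITION_CASES))
print("boundaries ok:", check_valid(BOUNDARY_CASES))
print("invalid inputs rejected:", check_invalid(INVALID_INPUTS))
```

The test data is derived entirely from the specification in the docstring, which is what makes these black box techniques: none of it requires reading the function body.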

Q14. Mention the different types of defects in testing.

In software testing, various types of defects or issues can be encountered. Here are some common types of defects that testers may come across during the testing process:

- Functional Defects: These defects relate to the incorrect behavior or malfunctioning of the software application. Functional defects occur when the software does not meet the specified functional requirements. Examples include incorrect calculations, incorrect data processing, missing or incorrect validations, and improper user interface behavior.

- Performance Defects: Performance defects are related to issues with the system's performance, such as slow response times, high resource consumption, or poor scalability. These defects can impact the application's speed, efficiency, and overall performance under different workloads or user volumes.

- Usability Defects: Usability defects affect the user-friendliness and ease of use of the software application. These defects hinder the user's ability to understand, navigate, and interact with the application effectively. Examples include confusing user interfaces, inconsistent layouts, lack of clear instructions, or cumbersome workflows.

- Compatibility Defects: Compatibility defects arise when the software application does not function correctly or as expected across different platforms, devices, or environments. These defects can occur due to issues with operating systems, browsers, hardware configurations, or dependencies on other software components.

- Security Defects: Security defects refer to vulnerabilities or weaknesses in the software application that can be exploited by unauthorized users. These defects can lead to unauthorized access, data breaches, or other security risks. Security defects include issues like insufficient authentication mechanisms, inadequate data encryption, or lack of proper access controls.

- Localization and Internationalization Defects: Localization defects occur when the software application does not adapt properly to different languages, cultures, or regional settings. Internationalization defects refer to issues related to the application's ability to be easily localized for different regions or languages. These defects can include problems with character encoding, text truncation, or culture-specific functionality.

- Documentation Defects: Documentation defects pertain to inaccuracies, errors, or omissions in the software documentation, including user manuals, help guides, or system documentation. These defects can lead to confusion, misunderstandings, or inadequate information for users or developers.

- Interoperability Defects: Interoperability defects occur when the software application has difficulties interacting or integrating with other systems, modules, or components. These defects can arise due to incompatible interfaces, data format mismatches, or communication issues between systems.

Q15. Are there any downsides to using the black box testing techniques?

While black box testing techniques have their advantages, there are also some potential downsides or limitations to consider. Here are a few downsides to using black box testing:

- Limited Code Coverage: Since black box testing focuses on the external behavior of the software without knowledge of the internal structure or implementation, there is a risk of limited code coverage. Testers may not be able to test every possible path or condition within the code, which can result in potential defects going undetected.

- Incomplete Test Coverage: Black box testing relies heavily on the specified requirements and expected functionality of the software. If the requirements are incomplete, ambiguous, or incorrect, there is a possibility that certain test cases may not cover all possible scenarios or edge cases, leading to insufficient test coverage.

- Lack of Visibility into Implementation Details: Testers performing black box testing do not have access to the internal code, data structures, or algorithms. This lack of visibility can make it challenging to identify the root causes of defects or understand the underlying reasons for certain behaviors, which may hinder effective defect analysis and resolution.

- Difficulty in Reproducing Defects: When testers encounter defects during black box testing, reproducing the issues can sometimes be challenging. Without knowledge of the internal workings, it may be difficult to precisely identify the steps or conditions that trigger the defect. This can make it harder for developers to debug and fix the issues accurately.

- Limited Ability to Test Complex Interactions: Black box testing may struggle to effectively test complex interactions between different components or systems. As testers do not have visibility into the internal components, it may be challenging to simulate specific scenarios or validate intricate interactions thoroughly.

- Inefficiency in Performance-related Testing: Black box testing may not be the most efficient approach for performance testing or identifying performance bottlenecks. Since testers lack access to the internal code and performance-related details, it can be challenging to isolate performance issues accurately or conduct detailed performance analyses.

Q16. Define 'bugs' in the context of software testing.

In software testing, a bug refers to a flaw, defect, or error in a software application that causes it to deviate from its intended behavior or produce incorrect or unexpected results. Bugs can occur at various levels of the software, including code, design, or configuration.

Here are some key points about bugs in software testing:

- Detection: Bugs are typically identified during the testing phase of software development, although they can also be found during other phases or even in production environments. Testers execute test cases and examine the software's behavior to uncover any inconsistencies, failures, or deviations from the expected functionality.

- Impact: Bugs can have varying degrees of impact on the software. Some bugs may cause minor inconveniences or cosmetic issues, while others can lead to critical failures, system crashes, data corruption, or security vulnerabilities. The impact of a bug depends on its severity and the context in which the software is being used.

- Types: Bugs can manifest in different ways. Common types of bugs include functional bugs (e.g., incorrect calculations, invalid outputs), performance bugs (e.g., slow response times, resource leaks), usability bugs (e.g., confusing user interfaces, broken links), compatibility bugs (e.g., issues across different platforms or browsers), and security bugs (e.g., vulnerabilities that can be exploited by attackers).

- Root Causes: Bugs can have various root causes, such as programming errors, logical mistakes, incorrect assumptions, inadequate testing coverage, data input issues, integration problems, database server errors, or other environmental factors. Understanding the root cause of a bug is crucial for effective bug resolution and prevention.

- Bug Reporting and Tracking: When a bug is identified, it should be reported in a structured manner to ensure proper tracking and resolution. Bug reports typically include information about the bug's symptoms, steps to reproduce, expected behavior, actual behavior, and any relevant screenshots or logs. Bug tracking systems are often used to manage the bug lifecycle from identification to resolution.

- Bug Fixing: Once a bug is reported, it undergoes a process of investigation, analysis, and fixing by the development team. The team identifies the root cause, develops a solution, and performs necessary code changes or configuration adjustments to address the bug. Afterward, the fix is tested to verify that it resolves the issue without introducing new problems.

- Regression Bugs: Regression bugs are defects that occur when a previously fixed or working functionality breaks again due to changes in the software. Regression testing is performed to uncover such bugs and ensure that previously resolved issues do not reoccur.

Effective bug management is essential for delivering high-quality software. Timely detection, thorough reporting, and proper prioritization of bugs contribute to overall software reliability and user satisfaction. Additionally, organizations often have bug triage processes to prioritize and allocate resources for bug fixing based on their severity, impact, and business priorities.

Q17. Differentiate between bugs and errors.

| Bugs | Errors |
|---|---|
| Bugs are defects or flaws in software applications. | Errors are mistakes or incorrect actions by humans. |
| Bugs are introduced during the software development process. | Errors are made during the software development process. |
| Bugs are identified and reported by testers or users. | Errors are typically identified during code reviews or inspections. |
| Bugs can cause the software to deviate from its intended behavior. | Errors can lead to incorrect implementation of functionality. |
| Bugs can have varying degrees of impact, from minor inconveniences to critical failures. | Errors can lead to functionality not working as intended. |
| Bugs can be caused by logical mistakes, programming errors, or inadequate testing coverage. | Errors can be caused by incorrect coding, incorrect algorithms, or misunderstanding of requirements. |
| Bugs require bug tracking, reporting, and resolution processes. | Errors require identification, debugging, and fixing processes. |
| Bug fixing involves analyzing the root cause and implementing appropriate changes in the software. | Error fixing involves identifying and correcting the mistakes made in the code or implementation. |
| Regression bugs can occur when previously fixed functionality breaks again. | Regression errors can occur when previously fixed issues reappear. |

While bugs and errors are related, bugs refer specifically to defects or flaws in software applications, whereas errors refer to mistakes or incorrect actions made during the software development process. Bugs are identified and reported by testers or users, while errors are typically identified during code reviews or inspections. Both bugs and errors require appropriate processes for identification, resolution, and prevention to ensure the quality and reliability of software applications.

Q18. What comes first: white box test cases or black box test cases?

The order in which white box test cases and black box test cases are created depends on various factors and the specific testing approach being followed. There is no fixed rule dictating which should come first. The decision can be influenced by the development methodology, project requirements, and the availability of information about the software system.

In some cases, white box testing may be performed first to ensure adequate coverage of the internal code and logic of the software. White box test cases can be designed based on the knowledge of the internal structure and implementation details. This approach helps identify any issues or flaws in the code, ensuring its correctness and adherence to the desired specifications.

On the other hand, black box testing is typically performed to validate the software's external behavior, functionality, and user experience. Black box test cases are designed based on the software's requirements, specifications, or expected user interactions. This approach focuses on testing the system without any knowledge of its internal workings.

However, in practice, both white box and black box testing are usually applied to the same project. A related hybrid is gray box testing, in which testers have limited knowledge of the internal structure or high-level design while still focusing on the software's external behavior. It combines the benefits of both approaches and helps achieve comprehensive test coverage.

The specific order of creating white box and black box test cases can vary depending on the project context and requirements. It is recommended to align the testing approach with the overall project plan and ensure that both white box and black box testing are adequately performed to achieve the desired level of quality and reliability.

Software Testing Interview Questions: Intermediate

Q19. Explain the meaning of a Test Plan in simple terms. What does it include?

A test plan is a document that outlines the approach and strategy for testing a software application. It serves as a blueprint or roadmap for the testing process, helping testers and stakeholders understand how the testing activities will be conducted.

In simple terms, a test plan is like a game plan that guides testers on what to test, how to test, and when to test during the software development lifecycle. It ensures that testing is organized, thorough, and aligned with the project goals.

A typical test plan includes the following components:

- Introduction: It provides an overview of the software being tested, the objectives of testing, and the scope of the test plan.

- Test Objectives: This section outlines the specific goals and objectives of the testing effort, such as verifying functionality, ensuring quality, identifying defects, or validating system performance.

- Test Strategy: The test strategy defines the overall approach to be followed during testing, including the testing techniques, tools, and resources that will be utilized.

- Test Scope: It describes the boundaries and extent of the testing, including which features, software modules, or components will be tested and which ones will be excluded.

- Test Environment: This section details the hardware, software, and network configurations needed for testing, including the setup of test environments or test beds.

- Test Schedule: It outlines the timeline and sequence of testing activities, including start and end dates, milestones, and dependencies on other activities or deliverables.

- Test Deliverables: This section lists the documents, reports, or artifacts that will be produced as part of the testing process, such as test cases, test scripts, defect reports, and test summary reports.

- Test Approach: It describes the testing techniques and methodologies that will be employed, such as black box testing, white box testing, manual testing, automated testing, or a combination of these.

- Test Entry and Exit Criteria: These are the conditions or criteria that must be met before testing can begin (entry criteria) and the conditions under which testing will be considered complete (exit criteria).

- Test Resources: It identifies the roles and responsibilities of the testing team members, including testers, test managers, developers, and other stakeholders involved in the testing process.

- Risks and Contingencies: This section addresses the potential risks, issues, or challenges that may arise during testing and proposes contingency plans or mitigation strategies to handle them.

- Test Execution: It describes the specific test cases, test data, and test procedures that will be executed during the testing phase, including any special instructions or conditions.

- Test Metrics and Reporting: This section defines the metrics that will be used to measure the testing progress and quality, as well as the frequency and format of test reports.

By documenting these details in a test plan, the testing team can have a clear roadmap and reference point throughout the testing process, ensuring that testing is comprehensive, well-organized, and aligned with project requirements.

Q20. Explain in brief the meaning of a Test Report.

A test report is a document that provides an overview of the testing activities and their outcomes. It serves as a communication tool between the testing team, project stakeholders, and management, conveying important information about the quality and status of the software being tested. The primary purpose of a test report is to summarize the testing results, identify any issues or risks, and help stakeholders make informed decisions based on the testing outcomes.

A test report typically includes the following information:

- Introduction: It provides an overview of the testing objectives, scope, and the software version being tested.

- Test Summary: This section summarizes the testing activities performed, including the number of test cases executed, passed, failed, and any remaining open issues.

- Test Coverage: It outlines the extent to which the software has been tested, including the features, software modules, or components covered and those not covered during testing.

- Test Execution Results: This section provides detailed information about the test results, including the status of each test case (pass, fail, or blocked), any defects found, and their severity or priority.

- Defect Summary: It presents a summary of the defects discovered during testing, including the total number of defects, their categories, and the current status (open, resolved, or closed).

- Test Metrics: This section includes relevant metrics that measure the effectiveness and efficiency of the testing process, such as test coverage percentage, defect density, test execution progress, and other key performance indicators.

- Risks and Issues: It highlights any risks, challenges, or issues encountered during testing, along with their impact and proposed mitigation strategies.

- Recommendations: Based on the testing results and analysis, this section may include suggestions or recommendations for improving the software quality, refining the testing process, or addressing specific areas of concern.

- Conclusion: It provides a concise summary of the overall testing results, emphasizing key findings, achievements, and lessons learned during the testing effort.

- Attachments: Additional supporting documents, such as detailed defect reports, test logs, screenshots, or test data, may be included as attachments to provide further evidence or context.

The test report serves as a vital tool for decision-making, as it helps stakeholders assess the quality of the software, understand the testing progress, and make informed decisions regarding further development, bug fixes, or release readiness. It also serves as a historical record that can be referred to in future testing cycles or for auditing purposes.
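Two of the figures a test report commonly carries, the pass rate and the defect density, are simple ratios. The Java sketch below shows one way they might be computed. The class name, method names, and sample figures are hypothetical, and defect density is assumed here to be measured per thousand lines of code (KLOC), which is one common convention rather than the only one.

```java
final class TestMetrics {
    // Share of executed test cases that passed, as a percentage.
    static double passRatePercent(int passed, int executed) {
        if (executed <= 0) {
            throw new IllegalArgumentException("no test cases executed");
        }
        return 100.0 * passed / executed;
    }

    // Defects found per thousand lines of code (KLOC), an assumed size unit.
    static double defectDensity(int defects, double sizeInKloc) {
        if (sizeInKloc <= 0) {
            throw new IllegalArgumentException("size must be positive");
        }
        return defects / sizeInKloc;
    }

    public static void main(String[] args) {
        // Hypothetical figures for one test cycle.
        System.out.println("Pass rate: " + passRatePercent(180, 200) + "%");
        System.out.println("Defect density: " + defectDensity(12, 8.0) + " defects/KLOC");
    }
}
```

With the sample figures, 180 passed cases out of 200 executed give a pass rate of 90%, and 12 defects in 8 KLOC give a density of 1.5 defects per KLOC.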

Q21. What are Test Deliverables?

Test deliverables are the documents, artifacts, and tangible outcomes produced during the testing process. These deliverables serve as evidence of the testing activities performed, the results obtained, and the overall quality of the software being tested. Test deliverables are typically created and shared with project stakeholders, development teams, and other relevant parties to provide insights into the testing progress, findings, and recommendations. The specific test deliverables can vary depending on the project, organization, and the testing approach being followed. Here are some common examples of test deliverables:

- Test Plan: The test plan outlines the approach, strategy, and scope of the testing activities. It includes details on test objectives, test strategy, test scope, test schedule, test resources, and other relevant information.

- Test Cases: Test cases are step-by-step instructions that specify inputs, actions, and expected outputs for testing specific features or functionality of the software. They serve as a guide for testers to execute tests consistently.

- Test Scripts: Test scripts are automated scripts written with tools and frameworks such as Selenium, Cucumber, or JUnit. These scripts automate the execution of test cases, reducing manual effort and improving testing efficiency.

- Test Data: Test data includes the specific inputs, configurations, or datasets used for testing. It ensures that the software is tested with various scenarios, covering different combinations of inputs, boundary values, and edge cases.

- Test Logs: Test logs capture detailed information about the execution of test cases, including timestamps, test outcomes (pass/fail), any errors or exceptions encountered, and relevant system or environment information.

- Defect Reports: Defect reports document any issues, bugs, or discrepancies discovered during testing. They include details such as the defect description, steps to reproduce, severity, priority, and other relevant information.

- Test Summary Report: The test summary report provides an overview of the testing activities and their outcomes. It includes a summary of executed test cases, pass/fail status, defect summary, key metrics, risks, and recommendations.

- Test Metrics: Test metrics are quantitative measurements used to evaluate the effectiveness and efficiency of the testing process. Examples include test coverage percentage, defect density, test execution progress, and other relevant performance indicators.

- Test Environment Setup Documentation: This document provides instructions and configuration details for setting up the required test environments, including hardware, software, network setups, and dependencies.

- Test Completion Certificate: In some cases, a test completion certificate may be issued to signify that the testing activities for a particular phase or release have been completed as planned.

Q22. Explain what is test coverage.

Test coverage refers to the measure of how much of the software or system under test is exercised by the test suite. It assesses the extent to which the test cases and test scenarios cover the various components, features, and functionalities of the software. Test coverage helps in evaluating the thoroughness and effectiveness of the testing effort.

The main purpose of test coverage is to identify any areas of the software that have not been adequately tested. It helps ensure that the test suite includes test cases that exercise different paths, conditions, and scenarios within the software, increasing the likelihood of detecting defects and vulnerabilities.

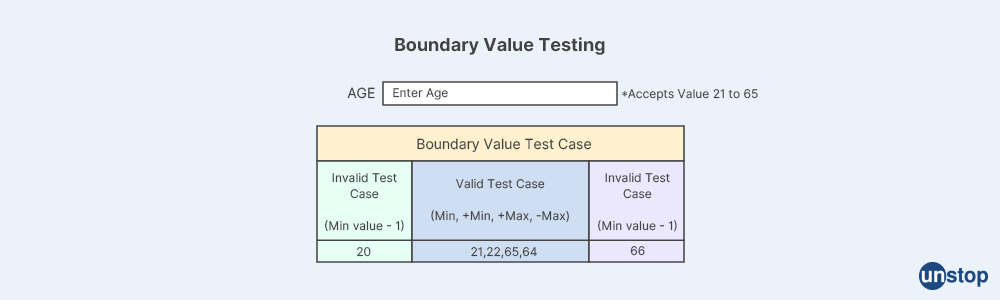
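As a rough illustration, coverage can be expressed as the share of tracked items (requirements, statements, branches, and so on) that at least one test exercises. The Java sketch below assumes requirement-level coverage. The class name, method names, and requirement IDs are hypothetical.

```java
import java.util.Set;
import java.util.TreeSet;

final class CoverageReport {
    // Percentage of tracked items that are exercised by at least one test.
    static double coveragePercent(Set<String> allItems, Set<String> coveredItems) {
        if (allItems.isEmpty()) {
            throw new IllegalArgumentException("nothing to cover");
        }
        Set<String> hit = new TreeSet<>(allItems);
        hit.retainAll(coveredItems); // ignore covered IDs that are not tracked
        return 100.0 * hit.size() / allItems.size();
    }

    // Items that no test exercises yet: the gaps a coverage review looks for.
    static Set<String> uncovered(Set<String> allItems, Set<String> coveredItems) {
        Set<String> missing = new TreeSet<>(allItems);
        missing.removeAll(coveredItems);
        return missing;
    }

    public static void main(String[] args) {
        // Hypothetical requirement IDs and the subset touched by the test suite.
        Set<String> requirements = Set.of("REQ-1", "REQ-2", "REQ-3", "REQ-4");
        Set<String> coveredByTests = Set.of("REQ-1", "REQ-2", "REQ-4");
        System.out.println("Coverage: " + coveragePercent(requirements, coveredByTests) + "%");
        System.out.println("Not covered: " + uncovered(requirements, coveredByTests));
    }
}
```

Here three of the four requirements are covered, so the sketch reports 75% coverage and lists REQ-3 as the untested gap.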

Q23. What do you understand by the term boundary value analysis?

Boundary value analysis is a software testing technique that focuses on testing the boundaries or limits of input values within a specified range. The objective of boundary value analysis is to identify potential defects or issues that may occur at the edges or boundaries of input domains.

In boundary value analysis, test cases are designed based on the values at the boundaries, including the minimum and maximum allowable values, as well as values just inside and outside those boundaries. The rationale behind this approach is that errors are more likely to occur near the boundaries of the input range rather than in the middle.

The key steps involved in boundary value analysis are as follows:

- Identify the boundaries: Determine the valid input boundaries for each input field or parameter in the software under test. This includes understanding the minimum and maximum values allowed, any constraints or limits, and any special conditions or rules.

- Determine the boundary values: Select test values that are at the boundaries of the input range. For example, if the input range is 1 to 100, the boundary values are 1 and 100. In addition, select values just inside the boundaries (2 and 99) and just outside them (0 and 101).

- Design test cases: Create test cases using the boundary values identified. Each test case should focus on a specific boundary condition. For example, if the input range is 1 to 100, test cases for the lower boundary would provide the values 0, 1, and 2.

- Execute test cases: Execute the test cases designed for boundary value analysis. This involves providing the selected boundary values and verifying that the software handles them correctly. The focus is on observing how the system behaves at the edges of the input range.

Boundary value analysis helps in uncovering defects that may occur due to incorrect handling of boundaries, off-by-one errors, or issues related to boundary conditions. It is based on the principle that errors are more likely to occur near the boundaries, so testing those values thoroughly can help improve the overall robustness and accuracy of the software.

By using boundary value analysis, testers can focus their efforts on critical areas and potentially uncover defects that may go unnoticed with random or typical input values. It is an effective technique for improving the coverage and reliability of testing, particularly when dealing with input fields, parameters, or conditions with specified ranges or limits.
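The selection step can be sketched in a few lines of Java. The example below is a minimal illustration, not a prescribed implementation: the class name, the `forRange` helper, and the `isValid` function standing in for the system under test (assumed to accept 1 to 100 inclusive) are all hypothetical.

```java
import java.util.List;

final class BoundaryValues {
    // Returns the six classic boundary inputs for an inclusive range [min, max]:
    // just below, at, and just above each boundary.
    static List<Integer> forRange(int min, int max) {
        if (min >= max) {
            throw new IllegalArgumentException("min must be less than max");
        }
        return List.of(min - 1, min, min + 1, max - 1, max, max + 1);
    }

    // Hypothetical system under test: accepts values from 1 to 100 inclusive.
    static boolean isValid(int value) {
        return value >= 1 && value <= 100;
    }

    public static void main(String[] args) {
        // Run every boundary value through the system under test.
        for (int value : forRange(1, 100)) {
            System.out.println(value + " -> " + (isValid(value) ? "accepted" : "rejected"));
        }
    }
}
```

For the range 1 to 100 this yields 0, 1, 2, 99, 100, and 101. A correct implementation should reject 0 and 101 and accept the other four, so an off-by-one mistake in either comparison shows up immediately.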

Q24. Define the following: test scenarios, test cases, and test scripts.

Let's define each term:

- Test Scenarios: Test scenarios are high-level descriptions or narratives that outline a specific situation or condition under which testing will be performed. They represent a set of related test cases that together cover a specific aspect or functionality of the software. Test scenarios are often written in a natural language format and provide an overview of the testing objectives, conditions, and expected outcomes. They help in understanding the overall testing scope and serve as a basis for designing detailed test cases. Example of a test scenario: "Verify the login functionality of the application with valid credentials."

- Test Cases: Test cases are detailed sets of steps, conditions, and expected results that are designed to validate specific features, functions, or behavior of the software. Test cases provide a structured and systematic approach to performing testing activities. They include inputs, actions, and expected outputs to be executed by the tester. Test cases are typically written in a formalized format and can be documented in test management tools, spreadsheets, or test case management systems.

Example of a test case:

Test Case ID: TC001

Test Case Title: Login with valid credentials

Preconditions: User must be registered in the system

Steps:

- Open the application login page.

- Enter valid username and password.

- Click on the "Login" button.

Expected Result: User should be successfully logged into the system.

- Test Scripts: Test scripts refer to a set of instructions or code written in a scripting or programming language that automates the execution of test cases. Test scripts are used in automated testing frameworks and tools to automate repetitive testing tasks. They contain commands, statements, and test data that emulate user interactions, system operations, or API calls. Test scripts help in executing test cases efficiently and consistently, reducing the manual effort required for testing. Example of a test script (Selenium WebDriver in Java):

```java
import org.openqa.selenium.By;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.chrome.ChromeDriver;

WebDriver driver = new ChromeDriver();                        // launch a Chrome session
driver.get("https://www.example.com");                        // open the login page
driver.findElement(By.id("username")).sendKeys("testuser");   // enter the username
driver.findElement(By.id("password")).sendKeys("password");   // enter the password
driver.findElement(By.id("loginBtn")).click();                // submit the form
```

Q25. Explain the user story.

A user story is a concise, informal description of a software feature or functionality from the perspective of an end user or stakeholder. It is a popular technique used in agile software development methodologies, such as Scrum, to capture and communicate requirements in a user-centered manner. User stories serve as a means of expressing the desired functionality or behavior of the software in a simple and understandable way.

User stories typically follow a specific template format, known as the "As a [user role], I want [goal] so that [benefit]" format. Each part of the template conveys important information about the user story:

- "As a [user role]": This part specifies the role or persona of the user who will benefit from or interact with the feature. It helps in identifying the target audience or user group for the functionality.

- "I want [goal]": This part describes the specific functionality or desired outcome that the user wants to achieve. It focuses on the user's needs or requirements and what they expect from the software.

- "So that [benefit]": This part explains the value or benefit that the user will gain from the desired functionality. It highlights the purpose or motivation behind the user's request.

Here's an example of a user story:

"As a registered user, I want to reset my password so that I can regain access to my account if I forget it."

User stories are often written on index cards or in digital tools, and they are typically maintained in a product backlog. They are intentionally kept brief and straightforward to encourage collaboration, conversation, and iterative development. User stories serve as a starting point for discussions between stakeholders, product owners, developers, and testers to gain a shared understanding of the desired functionality. They can be further refined and broken down into smaller tasks or acceptance criteria during the planning and implementation phases of the software development process.

The use of user stories helps foster a customer-focused approach, promotes flexibility, and allows for incremental delivery of valuable features. They enable the development team to prioritize work based on user needs, focus on delivering customer value, and adapt to changing requirements and priorities throughout the development cycle.

Q26. What is A/B testing?

A/B testing, also known as split testing, is a method of comparing two versions of a webpage, app, or other digital assets to determine which version performs better in terms of user engagement, conversion rates, or other predefined metrics. It is a controlled experiment where users are randomly divided into two or more groups, with each group being exposed to a different version of the asset.

In A/B testing, one version is the control (usually the existing version or the default version), while the other version is the variation (often with specific changes or modifications). The purpose is to understand the impact of specific changes on user behavior or performance metrics. By comparing the results between the control and variation, statistical analysis is used to determine if there are any significant differences in user response.

The process of A/B testing typically involves the following steps:

- Goal Definition: Clearly define the goal or objective of the A/B test. It could be increasing click-through rates, improving conversion rates, enhancing user engagement, or any other relevant metric.

- Variations Creation: Develop two or more versions of the asset, where each version incorporates a specific change or variation. The control version represents the original or existing design, while the variations introduce specific modifications.

- Test Setup: Randomly divide the users into groups and assign each group to one version (control or variation). It is crucial to use a sample large enough for the results to reach statistical significance.

- Data Collection: Collect relevant data and metrics from each group during the testing period. This may include tracking user interactions, conversions, click-through rates, bounce rates, or any other key performance indicators.

- Statistical Analysis: Analyze the collected data to determine if there are any statistically significant differences in user behavior or performance metrics between the control and variation. This analysis helps identify which version performs better.

- Decision Making: Based on the results and analysis, make an informed decision on whether to implement the variation as the new default version, roll back to the control version, or further refine the variations for additional testing.

A/B testing is commonly used in various digital marketing and product development contexts. It allows organizations to make data-driven decisions, optimize user experiences, and improve conversion rates by systematically testing and refining different design elements, layouts, content, call-to-actions, or other variables. It helps to validate assumptions, reduce risks, and continuously improve the performance and effectiveness of digital assets based on user preferences and behavior.
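The statistical analysis step can take several forms. One common choice for comparing conversion rates is a pooled two-proportion z-test, sketched below in Java. This is only an illustration under stated assumptions: the class name, method names, and visitor counts are hypothetical, and the 1.96 threshold corresponds to a two-sided test at the 5% significance level.

```java
final class AbTest {
    // z statistic for the difference in conversion rate (variation minus control),
    // using the pooled two-proportion z-test.
    static double zScore(int controlConversions, int controlUsers,
                         int variationConversions, int variationUsers) {
        double pControl = (double) controlConversions / controlUsers;
        double pVariation = (double) variationConversions / variationUsers;
        double pooled = (double) (controlConversions + variationConversions)
                / (controlUsers + variationUsers);
        double standardError = Math.sqrt(pooled * (1 - pooled)
                * (1.0 / controlUsers + 1.0 / variationUsers));
        return (pVariation - pControl) / standardError;
    }

    // Two-sided test at the 5% level: the difference is significant when |z| > 1.96.
    static boolean isSignificant(double z) {
        return Math.abs(z) > 1.96;
    }

    public static void main(String[] args) {
        // Hypothetical data: control converts 200 of 5000 users, variation 260 of 5000.
        double z = zScore(200, 5000, 260, 5000);
        System.out.println("z = " + z + ", significant = " + isSignificant(z));
    }
}
```

With these sample counts (4.0% versus 5.2% conversion) the z statistic comes out at roughly 2.86, which exceeds 1.96, so the difference would be treated as statistically significant under the assumptions above.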

Q27. Discuss latent defect vs. masked defect.

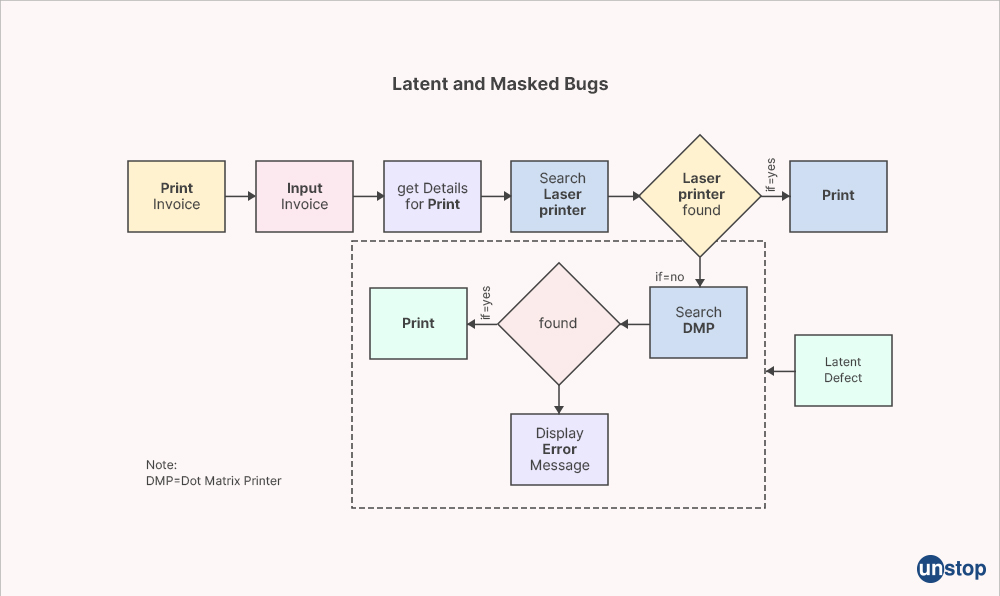

Latent defects and masked defects are two types of software defects that can impact the quality and reliability of a software product. Let's understand each term:

- Latent Defect: A latent defect is a type of defect that remains dormant or hidden within the software and does not manifest or cause issues under normal conditions or during initial testing. It refers to a flaw or error in the software's design, code, or functionality that may not be immediately apparent but can lead to problems or failures in the future. Latent defects typically emerge when specific conditions or scenarios occur during real-world usage that triggers the defect and results in undesired behavior, system crashes, or other issues.

Latent defects are challenging to detect during the development and testing phases because they may not be obvious or cause any immediate problems. They can remain undetected until they are triggered by certain inputs, data combinations, or specific usage patterns. Testing techniques that subject the software to a wide range of scenarios and edge cases, such as exploratory testing, negative testing, stress testing, volume testing, recovery testing, and beta testing, can help uncover latent defects.

- Masked Defect: A masked defect occurs when multiple defects exist in the software, but the presence of one defect hides or masks the presence of another. The hidden defect cannot be identified or debugged until the defect that obscures it is found and fixed. Masked defects can lead to incomplete or inaccurate defect detection, as the focus may be on fixing the visible defect while the team remains unaware of the underlying defects that it masks.

Masked defects can occur when defects are intertwined or when the manifestation of one defect suppresses or conceals the manifestation of another. For example, if a crash in the login module stops every test run at the login screen, a calculation error on the page that follows can never be observed until the crash is fixed. Masked defects can be challenging to identify, as they require a comprehensive and systematic approach to testing and debugging.

To mitigate the risks associated with latent defects and masked defects, it is important to follow best practices in software development and testing. This includes conducting thorough and diverse testing techniques, utilizing code reviews and inspections, maintaining good documentation, performing regression testing after bug fixes, and fostering a culture of quality assurance throughout the development process. Regular and proactive testing, along with robust defect management practices, can help identify and address latent defects and masked defects, improving the overall reliability and stability of the software.
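The two defect types can be illustrated with a small Java sketch. Everything in it is hypothetical and written only for illustration (the class DefectKinds, its methods, and the quantity and discount rules): average() contains a latent defect that shows up only for an empty array, while the wrong cap in clampQuantity() masks the wrong bulk discount in discountPercent().

```java
class DefectKinds {

    // Latent defect: correct for typical input, but an empty array
    // causes a division by zero (ArithmeticException).
    static int average(int[] values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum / values.length;
    }

    // Defect A (visible): the cap was meant to be 1000 but was typed as 100.
    static int clampQuantity(int requested) {
        return Math.min(requested, 100);
    }

    // Defect B (masked): orders above 500 items were meant to get 20%,
    // but this branch returns 0. While defect A caps every quantity at 100,
    // this branch is never reached through quoteDiscount().
    static int discountPercent(int quantity) {
        if (quantity > 500) {
            return 0;
        }
        return quantity >= 50 ? 10 : 0;
    }

    static int quoteDiscount(int requested) {
        return discountPercent(clampQuantity(requested));
    }

    public static void main(String[] args) {
        System.out.println(average(new int[] {2, 4, 6})); // prints 4
        System.out.println(quoteDiscount(600));           // prints 10
    }
}
```

A tester who sees 10% instead of the intended 20% for 600 items would trace it to the cap (defect A). Only after the cap is corrected does the same call return 0 and reveal defect B, which is why regression testing after each fix matters.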

Q28. Explain SPICE in software testing.

In the context of software testing, "SPICE" refers to the Software Process Improvement and Capability Determination model. SPICE is a framework that provides a set of guidelines and best practices for assessing and improving software development and testing processes within an organization. It aims to enhance the quality, efficiency, and maturity of software development practices by defining a structured approach for process assessment, measurement, and improvement.

SPICE is the common name for the ISO/IEC 15504 standard. It defines a comprehensive model for evaluating the capability of software development and maintenance processes. The model consists of several process assessment and improvement guides, collectively known as the SPICE framework.

The SPICE framework encompasses the following key components:

- Process Assessment: This involves evaluating the organization's software development and testing processes against a set of predefined criteria. The assessment helps identify strengths, weaknesses, and areas for improvement. It is typically conducted through a detailed analysis of the processes, documentation, and practices followed within the organization.

- Process Capability Determination: Based on the assessment results, the capability of each software development and testing process is rated on a six-level scale: Level 0 (Incomplete), Level 1 (Performed), Level 2 (Managed), Level 3 (Established), Level 4 (Predictable), and Level 5 (Optimizing). The capability determination provides insights into the maturity and effectiveness of the processes.

- Process Improvement: Once the capability levels are determined, the organization can focus on improving the identified weak areas and implementing best practices. Process improvement activities may involve revising processes, enhancing documentation, providing training and resources, adopting industry standards, or implementing automation tools. The objective is to continuously enhance the processes and achieve higher capability levels.

SPICE aims to provide a standardized approach for organizations to assess, measure, and improve their software development and testing practices. It helps organizations identify areas for improvement, establish benchmarks, and set goals for enhancing the quality and efficiency of their software development processes. By adopting SPICE, organizations can align their practices with international standards and industry best practices, leading to more effective and reliable software development and testing outcomes.
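The assess, rate, and improve cycle described above can be modelled as a simple gap analysis. The Java sketch below is illustrative only: the enum uses the six ISO/IEC 15504 capability level names, while the class SpiceGapSketch, the method belowTarget(), and the process names in main() are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Capability levels 0 to 5 as named in ISO/IEC 15504.
enum CapabilityLevel {
    INCOMPLETE(0), PERFORMED(1), MANAGED(2), ESTABLISHED(3), PREDICTABLE(4), OPTIMIZING(5);

    final int level;

    CapabilityLevel(int level) {
        this.level = level;
    }
}

class SpiceGapSketch {

    // Returns the processes whose assessed level is below the target level,
    // in the order they were assessed. These are the improvement candidates.
    static List<String> belowTarget(Map<String, CapabilityLevel> assessed,
                                    CapabilityLevel target) {
        List<String> gaps = new ArrayList<>();
        for (Map.Entry<String, CapabilityLevel> entry : assessed.entrySet()) {
            if (entry.getValue().level < target.level) {
                gaps.add(entry.getKey());
            }
        }
        return gaps;
    }

    public static void main(String[] args) {
        Map<String, CapabilityLevel> assessed = new LinkedHashMap<>();
        assessed.put("Test planning", CapabilityLevel.MANAGED);
        assessed.put("Defect management", CapabilityLevel.PERFORMED);
        assessed.put("Regression testing", CapabilityLevel.ESTABLISHED);
        System.out.println(belowTarget(assessed, CapabilityLevel.ESTABLISHED));
    }
}
```

In a real assessment the ratings come from evidence gathered by assessors. The sketch only shows how the resulting levels point to the processes that need improvement first.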

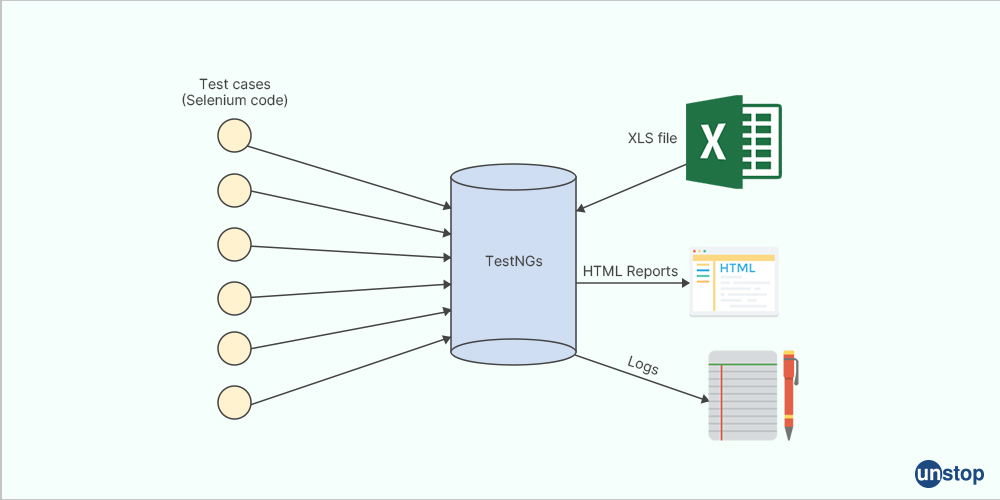

Q29. What is TestNG?

TestNG is a widely used open-source testing framework for Java applications. It is designed to enhance the testing process, providing a more flexible and powerful alternative to the traditional JUnit framework. TestNG stands for "Test Next Generation," and offers many features and functionalities that make it popular among developers and testers.

Here are some key features and capabilities of TestNG:

- Test Configuration Flexibility: TestNG allows the configuration of tests through XML files, annotations, or programmatically. This provides flexibility in defining test suites, test dependencies, test parameters, and other test configurations.

- Test Annotations: TestNG introduces a set of annotations that enable the definition of test methods, test classes, test suites, and test setups/teardowns. Annotations such as @Test, @BeforeSuite, and @AfterMethod help in organizing and structuring test cases effectively.

- Test Dependency Management: TestNG allows the specification of dependencies between test methods or groups, ensuring that specific tests are executed in a particular order or based on certain conditions. This helps in managing complex test scenarios and maintaining test integrity.

- Test Parameterization: TestNG supports the parameterization of tests, allowing the use of different data sets to run the same test method with different valid and invalid inputs. This is useful for data-driven testing, where tests are executed with various combinations of input values.

- Test Groups and Prioritization: TestNG allows the grouping of tests into logical categories or groups, enabling selective test execution based on requirements. Additionally, tests can be prioritized to determine their execution order.

- Test Execution Control: TestNG provides various mechanisms to control test execution, including the ability to run tests in parallel, specify thread counts, set timeouts, and more. This helps in optimizing test execution time and resource utilization.

- Test Reporting and Logging: TestNG generates detailed HTML test reports that provide comprehensive information about test execution results, including pass/fail status, execution time, error messages, and stack traces. It also supports custom loggers for capturing and reporting test logs.

- Integration and Extensibility: TestNG integrates well with popular build tools and development environments such as Ant, Maven, and Eclipse. It also offers extensibility through the use of custom listeners, reporters, and plugins.

TestNG is widely adopted in the Java testing community due to its rich feature set, flexibility, and extensibility. It provides a robust and efficient framework for writing, organizing, and executing tests, making it suitable for a variety of testing scenarios, including unit testing, integration testing, and functional testing.

Q30. Can we skip a code or a test method in TestNG?

Yes. TestNG can skip a test method during execution, which is useful when you want to exclude a test temporarily or only under certain conditions. For a condition that is known only at run time, the test method can throw org.testng.SkipException, and TestNG reports the method as skipped instead of failed.

To switch a test method off unconditionally, use the @Test annotation with the enabled attribute set to false. Here's an example:

    @Test(enabled = false)
    public void skippedTestMethod() {
        // This test method will be skipped during the execution
        // Add any specific logic or assertions here if needed
    }

Q31. What annotation do we use to set priority for test cases in TestNG?

In TestNG, you set the priority of a test case with the priority attribute of the @Test annotation. The attribute takes an integer that determines the sequence in which test cases are executed: test cases with lower priority values run before those with higher priority values.

Here's an example of how to set the priority for a test case using the @Test annotation:

    import org.testng.annotations.Test;

    public class ExampleTest {

        @Test(priority = 1)
        public void testCase1() {
            // Test case logic
        }

        @Test(priority = 2)
        public void testCase2() {
            // Test case logic
        }

        @Test(priority = 3)
        public void testCase3() {
            // Test case logic
        }
    }

In the above example, testCase1() has the lowest priority value (1) and therefore runs first, followed by testCase2() (2) and then testCase3() (3). During test execution, TestNG executes the test cases in ascending order of their priority values.

It's important to note that the priority can be any integer, including zero or a negative number, and that a test without an explicit priority gets the default value of 0. If two test cases have the same priority value, their relative execution order should not be relied on.

Additionally, it's worth mentioning that the @Test annotation also has the attributes dependsOnMethods and dependsOnGroups, which define dependencies between test cases and let you establish a specific order of execution based on the results of other tests.
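The ascending-priority rule can be checked with a plain-Java model. This sketch does not use or reproduce TestNG itself; it only sorts hypothetical test descriptors by priority value. The class PriorityOrderSketch, the nested PlannedTest type, and the test names are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class PriorityOrderSketch {

    // A hypothetical stand-in for a test method and its priority value.
    static final class PlannedTest {
        final String name;
        final int priority;

        PlannedTest(String name, int priority) {
            this.name = name;
            this.priority = priority;
        }
    }

    // Returns the test names ordered by ascending priority value.
    // Ties keep their input order here; that tie-break is a property of
    // this sketch, not a statement about TestNG.
    static List<String> executionOrder(List<PlannedTest> tests) {
        List<PlannedTest> sorted = new ArrayList<>(tests);
        sorted.sort((a, b) -> Integer.compare(a.priority, b.priority));
        List<String> names = new ArrayList<>();
        for (PlannedTest test : sorted) {
            names.add(test.name);
        }
        return names;
    }

    public static void main(String[] args) {
        List<PlannedTest> tests = Arrays.asList(
                new PlannedTest("checkout", 2),
                new PlannedTest("login", -1),
                new PlannedTest("search", 0)); // 0 models a test with no explicit priority
        System.out.println(executionOrder(tests)); // prints [login, search, checkout]
    }
}
```

The negative value runs first, the default-priority test second, and the test with the largest value last, which matches the ascending order described above.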

Q32. Mention a few criteria on which you can map the success of Automation testing.

The success of automation testing can be evaluated based on various criteria, including:

- Test Coverage: Automation testing should cover a significant portion of the application's functionality. The more test cases automated, the higher the test coverage and the better the chances of detecting defects.

- Test Execution Time: Automation should reduce the overall test execution time compared to manual testing. Faster test execution allows for more frequent testing cycles and quicker feedback on the application's quality.

- Bug Detection: Automation should effectively detect bugs and issues in the application. The number of bugs identified and their severity can indicate the effectiveness of automation in uncovering defects that might have been missed during manual testing.

- Test Maintenance Effort: Automation scripts require maintenance as the application evolves. The success of automation testing can be assessed by the effort required to maintain the automation suite. A well-designed and maintainable test suite should have minimal maintenance overhead.

- Reliability and Stability: The automation suite should consistently produce reliable results. It should run without unexpected failures or errors, providing stable outcomes across multiple test runs.

- Reusability: Successful automation testing involves reusable components, libraries, and frameworks. The ability to reuse test scripts, functions, and resources across different projects or test scenarios reduces effort and enhances efficiency.

- Integration with CI/CD: Automated tests should run without human intervention and integrate seamlessly with Continuous Integration/Continuous Delivery (CI/CD) pipelines. They should be compatible with build and deployment processes, enabling automatic test execution as part of the software development lifecycle.

- Cost and Resource Savings: Automation should bring cost and resource savings by reducing the reliance on manual testing efforts. Evaluating the financial benefits, such as reduced testing time, increased productivity, and optimized resource utilization, can determine the success of automation testing.

- Reporting and Analysis: Automation testing should provide comprehensive test reports and analysis. The availability of detailed reports, including test results, coverage metrics, and defect statistics, aids in tracking progress, identifying trends, and making informed decisions.

- Team Satisfaction: The success of automation testing can also be measured by the satisfaction of the testing team. If the team finds automation beneficial, productive, and an enabler of better quality, it indicates a positive outcome.

These criteria provide a basis for evaluating the success of automation testing. However, it's important to define specific goals and metrics aligned with the project's objectives and requirements to assess the effectiveness of automation testing in a particular context.
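Several of the criteria above can be turned into numbers that a team tracks over time. The Java sketch below shows three such calculations under assumed definitions. The class AutomationMetricsSketch and its method names are hypothetical, and each project should agree on its own formulas.

```java
class AutomationMetricsSketch {

    // Test coverage criterion: share of all test cases that are automated.
    static double automationCoveragePercent(int automatedCases, int totalCases) {
        return 100.0 * automatedCases / totalCases;
    }

    // Execution time criterion: reduction relative to the manual run.
    static double timeSavedPercent(double manualMinutes, double automatedMinutes) {
        return 100.0 * (manualMinutes - automatedMinutes) / manualMinutes;
    }

    // Reliability criterion: a test is treated as flaky when repeated runs
    // against the same build produce both passes and failures.
    static boolean isFlaky(boolean[] outcomesOnSameBuild) {
        boolean sawPass = false;
        boolean sawFail = false;
        for (boolean passed : outcomesOnSameBuild) {
            if (passed) {
                sawPass = true;
            } else {
                sawFail = true;
            }
        }
        return sawPass && sawFail;
    }

    public static void main(String[] args) {
        System.out.println(automationCoveragePercent(150, 200)); // prints 75.0
        System.out.println(timeSavedPercent(480, 60));           // prints 87.5
        System.out.println(isFlaky(new boolean[] {true, false, true})); // prints true
    }
}
```

For example, automating 150 of 200 test cases gives 75% coverage, and cutting a 480-minute manual cycle to 60 minutes saves 87.5% of the execution time.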

Q33. Mention the basic components of the defect report format.

The basic components of a defect report format, also known as a bug report or issue report, typically include the following information:

- Defect ID: A unique identifier assigned to the defect for easy tracking and reference.

- Title/Summary: A concise and descriptive title that summarizes the defect or issue.

- Description: A detailed description of the defect, including the steps to reproduce it, the observed behavior, and any relevant information that can help understand and reproduce the issue.

- Severity: The level of impact or seriousness of the defect, indicating how severely it affects the functionality or usability of the software. Common severity levels are Critical, High, Medium, and Low (as discussed earlier).

-