Data Reduction In Data Mining | Goals, Techniques & More (+Examples)

In the world of data mining, bigger doesn't always mean better. As organizations collect massive volumes of data every second, the challenge lies not just in storage, but in making sense of it efficiently. This is where data reduction steps in. Data reduction refers to the process of reducing the volume of data while preserving its integrity and the patterns hidden within. By trimming down the dataset without losing critical information, data reduction helps improve the performance of mining algorithms, speeds up computation, saves storage costs, and simplifies analysis.

In this article, we'll explore what data reduction really means, why it is essential in today's data-driven world, the various techniques used, and how it ultimately empowers smarter, faster decision-making.

What Is Data Reduction In Data Mining?

Data reduction is the process of minimizing the amount of data without losing important information. Simply put, it means making a large dataset smaller and more manageable while still keeping the key patterns, trends, and structures intact. Think of it like condensing a long book into a short summary—you skip the extra details but still understand the full story.

Goals of Data Reduction

The primary goals of data reduction include:

- Improve Efficiency of Data Mining Algorithms: By reducing the size of the dataset, algorithms can process information faster and more efficiently, leading to quicker insights.

- Save Storage and Computational Resources: Smaller datasets require less memory and processing power, helping save valuable storage space and reduce computational costs.

- Retain Patterns, Trends, and Structures: Even after reducing data, the essential characteristics must be preserved to ensure the analysis remains accurate and meaningful.

Real-World Analogy: Summarizing a Book Without Losing the Plot

Imagine you have a 500-page novel. Instead of reading every page, you read a 10-page summary that captures the important characters, events, and plot twists. You still understand the essence of the story without going through every tiny detail. Similarly, data reduction trims down huge amounts of data while aiming to keep the critical information intact.

In short: Data reduction helps make big data smaller, faster to analyze, cheaper to store, and easier to understand—without losing the story it tells.

Why Is Data Reduction Necessary?

Data reduction is necessary because today's world generates massive amounts of information every second. From social media updates to scientific research, the volume of data is exploding—and handling all of it can quickly become overwhelming. Without data reduction, analyzing, storing, and managing such vast data would be inefficient, costly, and time-consuming.

Here’s why data reduction is crucial:

- Enhances Processing Speed: Smaller datasets allow algorithms to run faster, making it easier to extract insights and make decisions quickly.

- Saves Storage Space: Storing huge volumes of data is expensive. Data reduction helps minimize storage costs by keeping only the most important information.

- Optimizes Computational Resources: Reduced data requires less memory and processing power, which is critical for high-performance systems and real-time analytics.

- Maintains Data Quality: By eliminating noise and redundant information, data reduction can improve the quality of data, which often leads to more accurate and reliable results.

- Simplifies Data Management: Managing a smaller dataset is much easier, whether you're organizing, securing, or backing up the information.

Simple Analogy:

Think of cleaning out your closet. Instead of keeping every piece of clothing (even the ones you never wear), you keep only what you need and love. It saves space, makes it easier to find what you’re looking for, and keeps everything neat. Similarly, data reduction keeps data manageable, efficient, and ready for action!

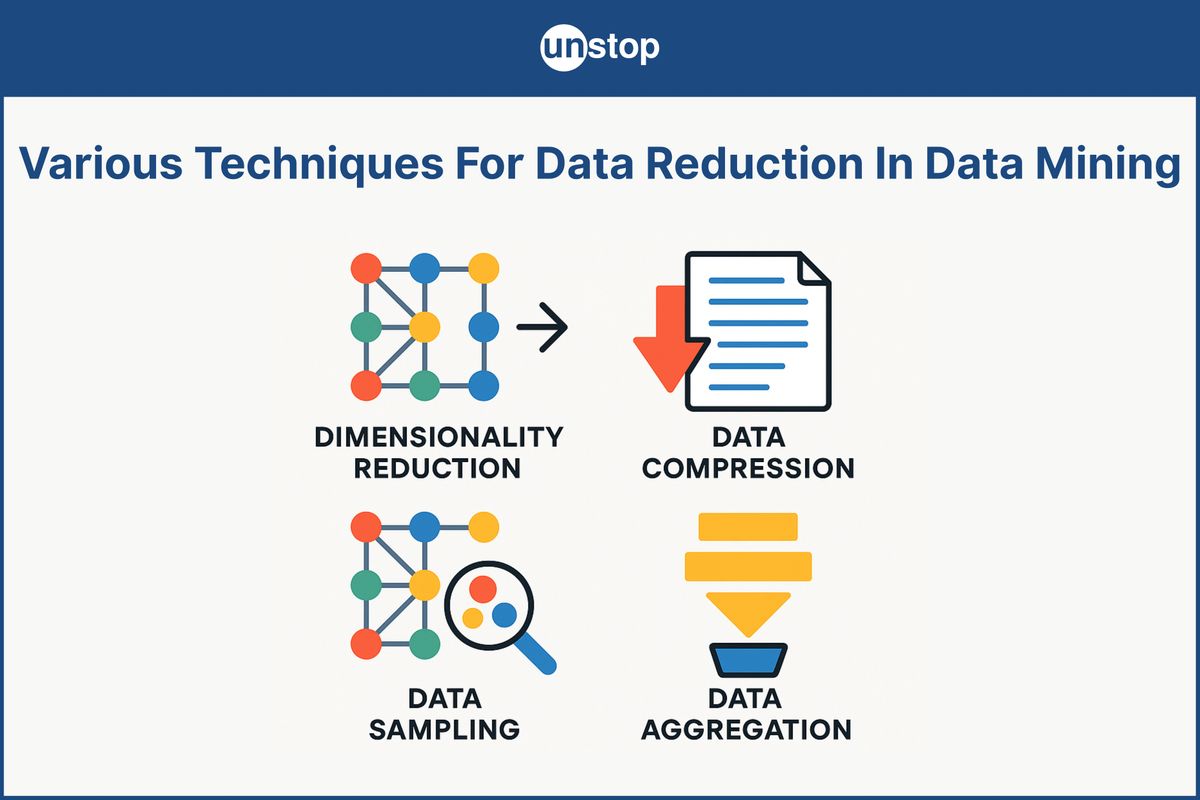

Major Techniques For Data Reduction

As data volumes continue to grow, using effective data reduction techniques becomes critical for improving efficiency, saving storage, and simplifying analysis. Let’s explore the major techniques for data reduction in detail:

1. Dimensionality Reduction

Dimensionality reduction refers to the process of reducing the number of input variables in a dataset. High-dimensional data often leads to challenges such as the curse of dimensionality, where the data becomes sparse, distances between points lose meaning, and models perform poorly.

Key Techniques:

- Principal Component Analysis (PCA): PCA identifies the directions (principal components) along which the data varies the most, allowing us to project high-dimensional data into a lower-dimensional space while retaining as much of the variance as possible.

- Linear Discriminant Analysis (LDA): LDA is a supervised technique that focuses on maximizing the separability between classes. It reduces dimensions by finding the feature space that best separates the different categories of data; with C classes, it produces at most C - 1 dimensions.

- Feature Selection: Instead of transforming data, feature selection picks a subset of the original features that are most relevant to the target output. This can be done using techniques like forward selection, backward elimination, or mutual information.
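To make the PCA idea concrete, here is a minimal sketch using NumPy's singular value decomposition. It assumes NumPy is installed; `pca_reduce` and `pca_reconstruct` are hypothetical helper names, and in practice you would more likely reach for a library implementation such as scikit-learn's `PCA`. The toy data has three nearly collinear columns, so a single component should capture almost all of the variance.

```python
import numpy as np

def pca_reduce(X, n_components):
    """Project the rows of X onto its top principal components using SVD."""
    mean = X.mean(axis=0)
    X_centered = X - mean  # PCA works on mean-centered data
    # Rows of Vt are the principal directions, sorted by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    Z = X_centered @ components.T                 # reduced data, shape (n, k)
    explained_ratio = (S ** 2) / np.sum(S ** 2)   # share of variance per component
    return Z, components, mean, explained_ratio[:n_components]

def pca_reconstruct(Z, components, mean):
    """Map reduced points back to the original space (an approximation)."""
    return Z @ components + mean

# Hypothetical data: one underlying signal spread over three columns, plus tiny noise.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2 * t, -t]) + 0.01 * rng.normal(size=(200, 3))

Z, components, mean, ratio = pca_reduce(X, n_components=1)
X_approx = pca_reconstruct(Z, components, mean)
print(Z.shape, ratio)                  # 3 columns reduced to 1
print(np.abs(X - X_approx).max())      # small reconstruction error
```

Here three columns shrink to one, and reconstructing from that single component recovers the original values up to roughly the noise level.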

Examples and Use Cases:

- PCA is widely used in image compression and gene expression analysis.

- LDA is common in pattern recognition, such as face recognition systems.

- Feature selection is essential in machine learning tasks like spam detection, where only certain keywords matter.
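The spam example can be sketched as filter-style feature selection with mutual information, one of the criteria mentioned above. This is an illustration under simplifying assumptions: features are binary keyword flags, mutual information is estimated directly from counts, and the keywords and labels are invented toy data. The function names are hypothetical.

```python
import numpy as np

def mutual_information(x, y):
    """Mutual information (in nats) between two discrete 1-D arrays, from counts."""
    mi = 0.0
    for xv in np.unique(x):
        p_x = np.mean(x == xv)
        for yv in np.unique(y):
            p_y = np.mean(y == yv)
            p_xy = np.mean((x == xv) & (y == yv))
            if p_xy > 0:  # zero joint probability contributes nothing
                mi += p_xy * np.log(p_xy / (p_x * p_y))
    return mi

def select_top_k(X, y, k):
    """Keep the k columns of X that share the most information with the label y."""
    scores = np.array([mutual_information(X[:, j], y) for j in range(X.shape[1])])
    keep = np.sort(np.argsort(scores)[::-1][:k])  # column indices in original order
    return keep, scores

# Toy data: each column marks whether the keyword appears in an email.
feature_names = ["free", "the", "hello"]
X = np.array([
    [1, 1, 1],
    [1, 1, 0],
    [1, 1, 1],
    [0, 1, 0],
    [0, 1, 1],
    [0, 1, 0],
])
y = np.array([1, 1, 1, 0, 0, 0])  # 1 = spam, 0 = not spam

keep, scores = select_top_k(X, y, k=1)
print([feature_names[j] for j in keep], scores.round(3))
```

A word that appears in every email ("the") scores zero, because knowing it tells you nothing about the label, while "free" separates the two classes perfectly and is the one kept.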

2. Numerosity Reduction

Numerosity reduction involves representing data using fewer numbers while maintaining its essential characteristics. Instead of storing all the original data points, it summarizes the data.

Techniques:

- Parametric Methods: These assume a model for the data (such as linear regression or polynomial functions) and only store the parameters of the model.

- Non-Parametric Methods: These do not assume a fixed model. Methods like histograms, clustering (e.g., k-means clustering), and sampling are used to summarize the dataset.
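Both families can be illustrated in a few lines, assuming NumPy is available. In the parametric case, 1,000 synthetic points are replaced by the two parameters of a fitted line (`np.polyfit`); in the non-parametric case, raw values are replaced by equal-width histogram counts and bin edges (`np.histogram`). The data is made up for the example.

```python
import numpy as np

rng = np.random.default_rng(1)

# Parametric: store only the slope and intercept instead of 1,000 (x, y) pairs.
x = np.arange(1000, dtype=float)
y = 3.0 * x + 5.0 + rng.normal(scale=1.0, size=x.size)
slope, intercept = np.polyfit(x, y, deg=1)  # coefficients, highest degree first
y_estimate = slope * x + intercept          # approximate values can be regenerated

# Non-parametric: store bin counts and edges instead of every raw value.
values = np.array([1, 2, 2, 3, 3, 3, 4, 4, 4, 4])
counts, edges = np.histogram(values, bins=4)

print(slope, intercept)   # close to 3 and 5
print(counts, edges)      # [1 2 3 4] [1.   1.75 2.5  3.25 4.  ]
```

Note that both summaries are approximations: the regression line cannot reproduce the individual noise values, and the histogram forgets where inside each bin a value fell.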

Practical Applications:

- Regression models are used in trend forecasting, like predicting stock prices.

- Clustering is widely used in customer segmentation for marketing strategies.

3. Data Compression

Data compression is the process of encoding information using fewer bits than the original representation, reducing the data size for storage or transmission.

Types of Data Compression:

- Lossless Compression: Data is compressed without any loss of information. Techniques like run-length encoding and Huffman coding ensure that the original data can be perfectly reconstructed.

- Lossy Compression: Some loss of information is acceptable to achieve higher compression rates. This is common in multimedia data such as images (JPEG), audio (MP3), and videos.
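Run-length encoding, one of the lossless techniques named above, is simple enough to show in full. This minimal sketch uses only the standard library (`itertools.groupby`); `rle_encode` and `rle_decode` are hypothetical names. The round trip demonstrates the defining property of lossless compression: decoding gives back exactly the original input.

```python
from itertools import groupby

def rle_encode(data):
    """Lossless run-length encoding: 'AAAB' -> [('A', 3), ('B', 1)]."""
    return [(symbol, len(list(run))) for symbol, run in groupby(data)]

def rle_decode(pairs):
    """Rebuild the original string exactly from (symbol, count) pairs."""
    return "".join(symbol * count for symbol, count in pairs)

original = "WWWWWWBBBWWWW"
encoded = rle_encode(original)
print(encoded)  # [('W', 6), ('B', 3), ('W', 4)]
assert rle_decode(encoded) == original  # perfect reconstruction
```

RLE only pays off when the data contains long runs of repeated symbols; on data without runs, the (symbol, count) pairs can take more space than the input.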

When to Use Lossless vs. Lossy Compression:

- Use lossless compression when data integrity is critical, such as text files, executable files, or financial records.

- Use lossy compression when minor losses are tolerable, and saving space is prioritized, such as in image sharing or video streaming.
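Lossy compression is easiest to see through scalar quantization, the step in which codecs such as JPEG deliberately discard precision (JPEG applies it to transform coefficients rather than raw values). This sketch is a simplified illustration under that assumption, not a real codec: each reading becomes a small integer code, and the fine detail cannot be recovered.

```python
def quantize(values, step):
    """Lossy: replace each value with the index of the nearest multiple of `step`."""
    return [round(v / step) for v in values]

def dequantize(codes, step):
    """Approximate reconstruction; the discarded detail is gone for good."""
    return [code * step for code in codes]

readings = [20.13, 20.17, 20.42, 20.48]  # hypothetical sensor values
codes = quantize(readings, step=0.5)
restored = dequantize(codes, step=0.5)
print(codes)     # [40, 40, 41, 41]
print(restored)  # [20.0, 20.0, 20.5, 20.5]
```

Small integers need fewer bits than the original floats, and the error is bounded by half the step size, which is the kind of trade-off to weigh when deciding whether minor losses are tolerable.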

4. Data Aggregation

Data aggregation combines multiple data records to create a summarized representation. By grouping data and calculating summary statistics, large datasets become easier to handle and interpret.

Example: Instead of analyzing individual daily sales transactions, businesses aggregate daily sales into monthly sales reports to identify broader trends.

How Aggregation Helps: Aggregation simplifies data mining processes by reducing noise and variability while retaining critical trends and patterns needed for decision-making.
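The daily-to-monthly sales example can be sketched with the standard library alone. The function name `aggregate_monthly` and the sales figures are hypothetical; with pandas available, a `groupby` or `resample` would do the same job.

```python
from collections import defaultdict
from datetime import date

def aggregate_monthly(daily_sales):
    """Sum (date, amount) records into one total per (year, month)."""
    totals = defaultdict(float)
    for day, amount in daily_sales:
        totals[(day.year, day.month)] += amount
    return dict(sorted(totals.items()))

daily_sales = [
    (date(2024, 1, 1), 120.0),
    (date(2024, 1, 15), 80.0),
    (date(2024, 2, 3), 200.0),
    (date(2024, 2, 20), 50.0),
]
print(aggregate_monthly(daily_sales))  # {(2024, 1): 200.0, (2024, 2): 250.0}
```

Four records become two, and with a full year of daily data the reduction would be from about 365 rows to 12.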

5. Data Sampling

Data sampling involves analyzing a subset of the full dataset that is chosen to represent the whole. It is especially useful when working with extremely large datasets that are too costly or time-consuming to process entirely.

Types of Sampling:

- Simple Random Sampling: Every data point has an equal chance of being selected, ensuring an unbiased sample.

- Stratified Sampling: The data is divided into distinct groups (strata), and samples are taken from each group proportionally. This ensures that important subgroups are represented.

- Cluster Sampling: The dataset is divided into clusters, and a random set of clusters is selected. All data points within chosen clusters are analyzed.
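The three sampling schemes can be sketched with Python's `random` module. The records, field names, and helper functions below are hypothetical. One design choice to note: `stratified_sample` keeps at least one record per stratum, which departs slightly from strict proportionality for very small groups.

```python
import random
from collections import Counter, defaultdict

def simple_random_sample(records, n, rng):
    """Every record has the same chance of selection (without replacement)."""
    return rng.sample(records, n)

def stratified_sample(records, stratum_of, fraction, rng):
    """Draw the same fraction from each stratum so every subgroup is represented."""
    strata = defaultdict(list)
    for record in records:
        strata[stratum_of(record)].append(record)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # at least one record per stratum
        sample.extend(rng.sample(members, k))
    return sample

def cluster_sample(records, cluster_of, n_clusters, rng):
    """Pick whole clusters at random and keep every record inside them."""
    clusters = defaultdict(list)
    for record in records:
        clusters[cluster_of(record)].append(record)
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [record for c in chosen for record in clusters[c]]

# Hypothetical data: 100 transactions, 80 "north" and 20 "south", from 10 stores.
records = [{"id": i, "region": "north" if i < 80 else "south", "store": i // 10}
           for i in range(100)]
rng = random.Random(42)

srs = simple_random_sample(records, 10, rng)
strat = stratified_sample(records, lambda r: r["region"], 0.1, rng)
clus = cluster_sample(records, lambda r: r["store"], 2, rng)

print(len(srs), Counter(r["region"] for r in strat), len(clus))
```

Stratified sampling guarantees 8 "north" and 2 "south" records here, while simple random sampling of 10 records could, by chance, contain no "south" records at all.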

When Sampling is Preferable: Sampling is ideal when full data processing is impractical due to time, cost, or computational constraints, such as during early-stage exploratory data analysis.

Choosing The Right Data Reduction Technique

Selecting the right data reduction method depends on several important factors. The nature of the data, the goal of the analysis, and the tolerance for information loss all play a role in choosing the best technique. Here's a detailed breakdown:

| Factor | Considerations | Preferred Techniques | Examples |
|---|---|---|---|
| Nature and Size of the Dataset | Extremely large datasets need aggressive reduction; high-dimensional data needs simplification. | Dimensionality Reduction (PCA, LDA); Data Sampling | Reducing features in genomic data; sampling millions of user clicks. |
| Type of Data Mining Task | Depends on the goal: classification, clustering, or regression. | LDA for classification tasks; clustering for unsupervised learning; regression models for predicting trends. | LDA used in spam email detection; clustering used in customer segmentation. |
| Acceptable Level of Information Loss | Some tasks allow minor loss (e.g., image analysis); others demand full accuracy (e.g., financial records). | Lossless Compression when full accuracy is needed; Lossy Compression when minor loss is acceptable. | Lossless compression for bank transaction records; lossy compression for social media image sharing. |
| Need for Interpretability | If understanding the reduced data is important, prefer simpler techniques. | Feature Selection; Aggregation | Selecting key features in healthcare diagnosis; summarizing daily sales into monthly reports. |
| Available Resources (Time, Computation) | Limited storage or slow systems need faster methods. | Data Sampling; Numerosity Reduction | Sampling web logs for quick analysis; using regression models instead of raw datasets. |

Quick Summary:

- For huge datasets, sampling and dimensionality reduction are often the best choice.

- For classification tasks, LDA and feature selection work well.

- When accuracy is critical, lossless compression should be prioritized; parametric models only approximate the data, so they suit cases where small modeling errors are acceptable.

- When speed and resource saving are important, aggregation and sampling are effective strategies.

Challenges And Limitations Of Data Reduction

While data reduction offers significant benefits such as faster processing, reduced storage needs, and improved efficiency, it also presents several challenges and limitations. Understanding these is critical for selecting the right techniques and avoiding unintended consequences.

| Challenge / Limitation | Explanation | Impact |
|---|---|---|
| Loss of Important Information | Data reduction techniques may remove subtle but critical patterns, leading to incomplete analysis. | Reduced accuracy in predictions and insights. |
| Over-Simplification | Excessive reduction can oversimplify complex data relationships, masking important trends. | Loss of valuable business or research insights. |
| Bias Introduction | Sampling or feature selection may introduce bias if not done carefully. | Results may not be representative or generalizable. |
| Difficulty in Choosing Techniques | Selecting the wrong reduction method for a specific task or dataset can cause poor outcomes. | Wasted resources and inaccurate conclusions. |
| Computational Cost for Reduction | Some data reduction methods, like PCA or clustering, themselves require significant computation. | High upfront processing time before benefits are realized. |
| Irreversibility (Especially in Lossy Compression) | Once lossy methods are applied, original data cannot be fully recovered. | Permanent loss of fine details, unsuitable for critical applications. |
| Complexity in Implementation | Advanced techniques like dimensionality reduction or sophisticated sampling may require expertise. | Increases project complexity and demands skilled personnel. |
| Difficulty in Maintaining Interpretability | Reduced datasets may be harder to explain or justify, especially in regulated industries. | Challenges in compliance, auditing, and stakeholder reporting. |

Key Takeaways:

- Careful Selection is Crucial: Choosing inappropriate techniques can do more harm than good.

- Trade-offs are Inevitable: There is always a balance between reducing data size and preserving data quality.

- Expertise Matters: Skilled data scientists are often required to properly apply advanced data reduction methods without introducing errors or biases.

- Consider the End Goal: Always align the data reduction strategy with the nature of the task (e.g., predictive accuracy vs. storage savings).

Applications Of Data Reduction In Real Life

Data reduction techniques are not just academic concepts — they are actively shaping industries across the globe. Let’s explore some real-world applications where data reduction plays a crucial role:

1. Healthcare and Medical Research

- Patient Records Management: Hospitals generate enormous amounts of data from patient visits, lab reports, imaging scans, etc. Data reduction helps by summarizing critical patient information, enabling faster diagnosis without overwhelming doctors with raw data.

- Genomic Data Analysis: Genetic sequencing generates terabytes of information. Dimensionality reduction techniques such as PCA condense thousands of gene-expression measurements into a few components that capture most of the variation, speeding up research and discovery.

2. Financial Services

- Stock Market Data Reduction: Financial markets produce data at microsecond granularity. Aggregation and sampling methods reduce this flood of information into manageable datasets for real-time decision-making, trading strategies, and pattern analysis.

- Fraud Detection: Data reduction helps by narrowing down transactional data to suspicious activities, making fraud detection systems more efficient and faster.

3. E-commerce and Retail

- Customer Behavior Analysis: E-commerce platforms track millions of user interactions daily. Feature selection and dimensionality reduction techniques help focus on critical behaviors (like purchase frequency, time on site, product categories) rather than all user activities, making recommendation systems smarter.

- Sales Data Aggregation: Summarizing daily sales into weekly or monthly reports enables managers to observe broader trends without dealing with noisy daily fluctuations.

4. Internet of Things (IoT) and Sensor Networks

- Smart Cities and Infrastructure Monitoring: IoT devices continuously send vast amounts of sensor data. Data compression and sampling ensure only relevant, summarized data is transmitted to central systems, reducing network load and storage costs.

- Wearable Technology: Fitness trackers and health monitors use real-time data reduction to highlight significant metrics (like steps taken, heart rate averages) rather than flooding users with raw second-by-second readings.

5. Telecommunications

- Network Traffic Analysis: Telecom providers use sampling and aggregation to manage and monitor enormous data traffic efficiently, identifying bottlenecks and anomalies without storing every packet of data.

- Call Data Record (CDR) Compression: Telecom companies reduce billions of call records into summarized formats for billing, network optimization, and customer behavior studies.

6. Scientific Research and Environmental Monitoring

- Climate Data Summarization: Weather stations collect atmospheric data around the clock. Aggregating and compressing this data enables researchers to model climate patterns and make predictions without being bogged down by raw measurements.

- Space Missions: Satellites and space probes have limited bandwidth. Data reduction techniques are crucial to prioritize and compress data before sending it back to Earth.

Future Trends In Data Reduction

As data generation continues to accelerate, traditional data reduction techniques are evolving to meet new challenges. The future of data reduction is shaped by smarter, more adaptive methods that can handle massive, dynamic datasets in real time. Here’s a look at key trends driving this evolution:

1. Role of AI and Machine Learning in Smart Data Reduction

Artificial Intelligence (AI) and Machine Learning (ML) are revolutionizing how we approach data reduction. Instead of applying static methods, AI-driven systems can learn patterns in data and dynamically decide:

- Which data is most valuable and should be preserved.

- Which features or dimensions are redundant and can be eliminated.

- How much compression or summarization is appropriate without sacrificing accuracy.

For instance, ML algorithms can automate feature selection for predictive models, choose sampling rates based on data variability, or apply compression methods that adapt as data streams change. The result is a more context-aware data reduction process that can be refined as new data arrives.

2. Adaptive and Dynamic Reduction Techniques

Traditional data reduction methods are often static — they apply the same reduction rules regardless of data changes. However, the future lies in adaptive and dynamic techniques that:

- Adjust their reduction strategy based on real-time data characteristics.

- Reassess which features are important as new trends emerge.

- Automatically re-aggregate or resample data during anomalies or bursts in volume.

For example, in real-time monitoring systems, an adaptive reduction algorithm could decide to retain more detailed information during a detected system failure while reducing normal operational data more aggressively. This flexibility makes data reduction smarter and more resource-efficient.
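The monitoring example above can be sketched as a simple rule-based downsampler. This is only an illustration: a fixed threshold stands in for "a detected system failure", and `adaptive_downsample`, `normal_every`, and `alert_threshold` are hypothetical names. A learned or statistical anomaly detector could replace the threshold check without changing the overall structure.

```python
def adaptive_downsample(readings, normal_every, alert_threshold):
    """Keep every `normal_every`-th reading during normal operation, plus every
    reading above `alert_threshold` (a simple stand-in for a detected failure)."""
    kept = []
    for i, value in enumerate(readings):
        if value > alert_threshold or i % normal_every == 0:
            kept.append((i, value))  # keep the index so timing is preserved
    return kept

readings = [1, 1, 1, 1, 9, 9, 1, 1, 1, 1]
print(adaptive_downsample(readings, normal_every=5, alert_threshold=5))
# → [(0, 1), (4, 9), (5, 9)]
```

Normal readings are thinned to one in five, while both abnormal readings survive in full detail.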

3. Importance in Big Data, Edge Computing, and Real-Time Analytics

Data reduction is becoming critical in modern computing environments where speed and efficiency are paramount:

- Big Data: In environments like Hadoop or Spark, reducing data at early stages saves enormous computational costs and shortens analytics cycles.

- Edge Computing: Devices at the "edge" (like IoT sensors, autonomous vehicles, and smart cameras) generate vast data but have limited storage and processing power. Data reduction techniques help pre-process and filter data locally, minimizing the load sent to centralized servers.

- Real-Time Analytics: Industries such as finance, healthcare, and e-commerce increasingly require instant insights. Data reduction ensures that only the most relevant, high-value data points are processed in real time, enabling faster decision-making without overwhelming systems.

Conclusion

Data reduction is a cornerstone of modern data mining, empowering organizations to manage and analyze vast amounts of information more efficiently. By streamlining datasets without sacrificing key insights, it enables faster processing, reduced storage costs, and often more accurate models. Whether through dimensionality reduction, data compression, aggregation, or sampling, the techniques we use today are just the beginning.

As AI and machine learning continue to advance, we can expect even more dynamic, context-aware data reduction methods that adapt to evolving data landscapes. The growing importance of big data, edge computing, and real-time analytics ensures that data reduction will remain a crucial tool in extracting meaningful patterns from ever-expanding datasets.

Ultimately, data reduction is not about cutting corners but about enhancing the quality and accessibility of the information we mine. With the right techniques in place, businesses, researchers, and organizations can continue to make smarter decisions, faster and with greater precision.

Frequently Asked Questions

Q. What is data reduction in data mining?

Data reduction in data mining refers to techniques used to reduce the volume of data while retaining essential information. The goal is to simplify datasets, improve processing times, save storage space, and enhance the performance of data mining algorithms.

Q. Why is data reduction important in data mining?

Data reduction is crucial because it helps manage large datasets more efficiently. It speeds up the data mining process, reduces computational costs, and makes it easier to identify meaningful patterns. By removing noise and redundant information, data reduction helps improve the accuracy and relevance of results.

Q. What are the most common techniques for data reduction?

Some of the most common data reduction techniques include:

- Dimensionality Reduction (e.g., PCA, LDA)

- Data Compression (e.g., lossless and lossy compression)

- Sampling (e.g., simple random sampling, stratified sampling)

- Data Aggregation (e.g., summarizing data into groups)

These techniques help reduce the complexity and size of datasets without losing valuable information.

Q. How do AI and machine learning contribute to data reduction?

AI and machine learning play a significant role in smart data reduction by automating feature selection, compression, and aggregation. These technologies enable systems to learn which features or data points are most relevant and adjust the reduction strategy in real time based on data patterns.

Q. What are the challenges in implementing data reduction?

Some challenges include:

- Information Loss: Over-reduction can lead to the loss of important details or patterns.

- Balancing Accuracy and Reduction: Finding the right balance between reducing data size and maintaining the quality of insights is often difficult.

- Dynamic Datasets: Adapting reduction techniques to handle evolving data or real-time data streams can be complex.

Q. Where is data reduction commonly applied in the real world?

Data reduction techniques are widely used in industries such as:

- Healthcare (for managing patient data and genomics analysis)

- E-commerce (for customer behavior analysis and recommendation systems)

- Telecommunications (for efficient network traffic management)

- IoT and Edge Computing (for reducing data generated by smart devices and sensors)

These applications help organizations reduce data overload while preserving essential information for decision-making.


I’m a Computer Science graduate with a knack for creative ventures. Through content at Unstop, I am trying to simplify complex tech concepts and make them fun. When I’m not decoding tech jargon, you’ll find me indulging in great food and then burning it out at the gym.
