- What Is CPU Scheduling In Operating Systems?

- Process Scheduler And Its Types

- Why Do We Need CPU Scheduling in Operating Systems

- Key Terminologies For CPU Scheduling In Operating Systems

- Types of CPU Scheduling (Non-Preemptive Vs. Preemptive Scheduling)

- Types Of CPU Scheduling Algorithms In OS

- Criteria For Effective CPU Scheduling

- Factors Influencing CPU Scheduling Decisions

- Objectives & Considerations In Designing CPU Scheduling Algorithms

- Conclusion

- Frequently Asked Questions

CPU Scheduling In Operating Systems & All Related Concepts Explained

CPU scheduling is a fundamental part of operating systems, responsible for deciding the order in which processes access the CPU. By prioritizing tasks, CPU scheduling helps manage system performance and responsiveness, ensuring that each active process gets its share of the CPU's resources. This is especially important in multitasking environments, where multiple programs run concurrently.

In this article, we will discuss what CPU scheduling in the operating system is, its key components, decision-making criteria and algorithms, its impact on system efficiency, other key CPU scheduling concepts and why it's critical for an optimized user experience.

What Is CPU Scheduling In Operating Systems?

Before discussing CPU scheduling, let’s see what a process means. It is essentially a program in execution, complete with its own code, data, and allocated resources. Every process in an OS needs CPU time to perform tasks. This is where CPU scheduling in OS comes into the picture.

CPU scheduling is the mechanism by which an operating system decides the order in which active processes access the CPU. A system has only one CPU (or a limited number of CPU cores) and usually more processes ready to run than there are cores. CPU scheduling therefore determines which process gets CPU access at any given moment. This lets multiple tasks make progress smoothly, minimizing idle time and avoiding bottlenecks.

The scheduling process follows specific algorithms, like First-Come, First-Served (FCFS), Shortest Job Next (SJN), and Round Robin, which are selected based on system needs. Efficient scheduling results in a balanced, responsive system that can manage user tasks, system operations, and background processes effectively.

How Does CPU Scheduling Work?

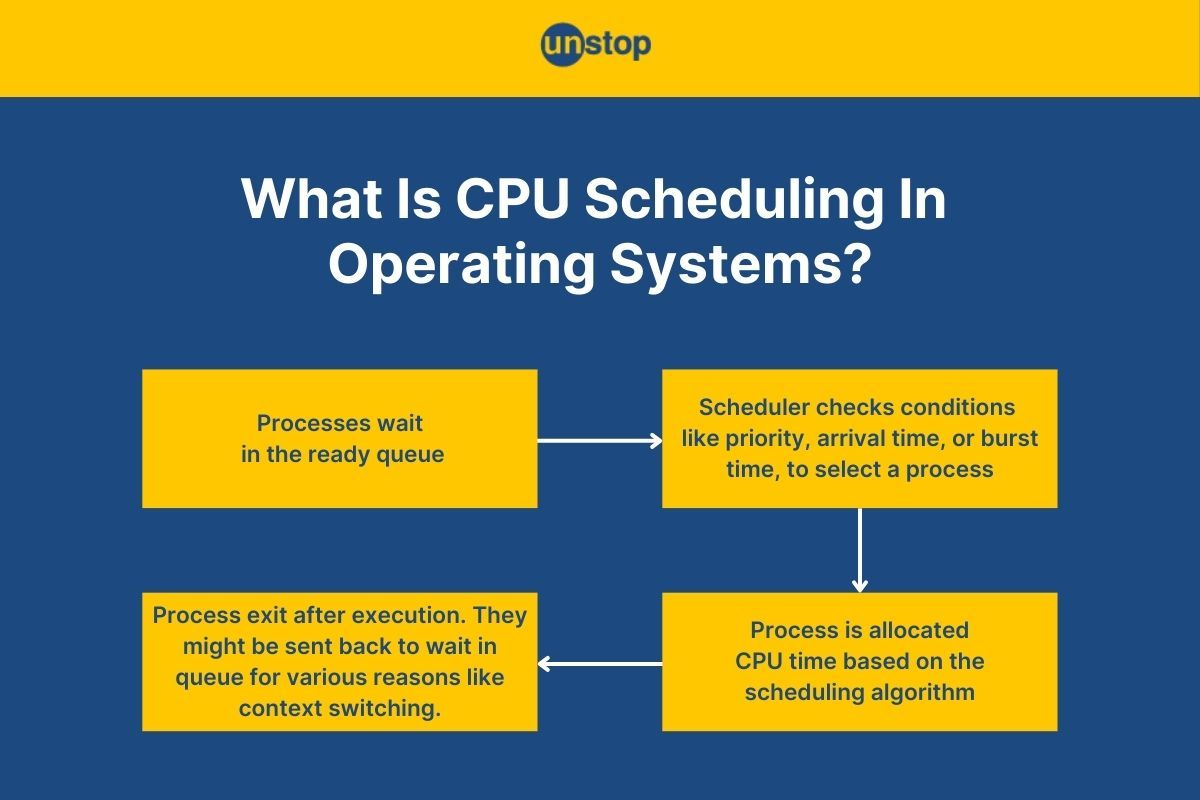

CPU scheduling in an operating system follows a systematic approach to ensure efficient process execution. Here’s a step-by-step look at how it works:

- Queuing Processes: When a process is ready to run, the OS places it in a queue (the ready queue), where it waits to be scheduled.

- Scheduling Conditions: The CPU scheduler evaluates each process based on certain criteria, such as priority, arrival time, or burst time, to determine the order in which they will run.

- Allocation of CPU Time: Based on the selected scheduling algorithm, the OS gives the chosen process the CPU. It runs either for a fixed time slice or until it finishes or gives up the CPU (for example, to wait for I/O).

- Context Switching: When the CPU must switch from one process to another, the OS performs a context switch. It saves the current process's state (such as its program counter and registers) and loads the saved state of the next process. This lets an interrupted process later resume exactly where it left off.

CPU scheduling is essential for a multitasking OS: it ensures processes are managed efficiently and keeps the CPU productive. The component that carries out CPU scheduling is the process scheduler, which coordinates and manages these tasks.
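The context-switching step can be illustrated with a toy model. The sketch below is a simplification under stated assumptions. The `PCB` (process control block) and `CPU` classes and the `context_switch` and `run_slice` helpers are hypothetical. A real kernel saves far more state, in architecture-specific code.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class PCB:
    """Hypothetical process control block: just enough state to resume a process."""
    pid: str
    program_counter: int = 0
    registers: dict = field(default_factory=dict)

@dataclass
class CPU:
    """Toy CPU holding the live state of whichever process is running."""
    program_counter: int = 0
    registers: dict = field(default_factory=dict)
    current: Optional[PCB] = None

def context_switch(cpu: CPU, next_pcb: PCB) -> None:
    """Save the running process's state into its PCB, then load next_pcb's saved state."""
    if cpu.current is not None:
        cpu.current.program_counter = cpu.program_counter
        cpu.current.registers = dict(cpu.registers)  # copy, so later CPU changes don't leak in
    cpu.program_counter = next_pcb.program_counter
    cpu.registers = dict(next_pcb.registers)
    cpu.current = next_pcb

def run_slice(cpu: CPU, steps: int) -> None:
    """Pretend to execute `steps` instructions: advance the PC and update one register."""
    cpu.program_counter += steps
    cpu.registers["acc"] = cpu.registers.get("acc", 0) + steps

cpu = CPU()
a, b = PCB("A"), PCB("B")
context_switch(cpu, a)
run_slice(cpu, 5)        # A executes 5 steps
context_switch(cpu, b)   # A's state is saved, B's is loaded
run_slice(cpu, 3)        # B executes 3 steps
context_switch(cpu, a)   # A resumes exactly where it stopped
print(cpu.program_counter, cpu.registers)
```

After the final switch, the CPU again holds A's program counter (5) and register values. B's progress (3 steps) sits safely in its own PCB until it is scheduled again.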

Process Scheduler And Its Types

The process scheduler is a component of the OS that is responsible for managing and selecting processes for CPU execution. There are three main types of process schedulers:

- Long-term Scheduler (Job Scheduler): Decides which of the submitted processes are admitted into memory and the ready queue. By doing so, it controls the degree of multiprogramming, i.e., how many processes compete for the CPU at once.

- Short-term Scheduler (CPU Scheduler): Selects the next process from the ready queue for immediate execution. It runs very frequently (for example, whenever a process blocks or a time slice expires), so it must be fast.

- Medium-term Scheduler: Temporarily removes (suspends or swaps out) processes from main memory and later brings them back. This frees memory and reduces the degree of multiprogramming when the system is overloaded.
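To make the division of labor concrete, here is a minimal sketch of the three roles acting on a toy set of queues. Everything in it is a hypothetical simplification, not how any particular OS implements these schedulers. This includes `ToyScheduler`, the `max_in_memory` cap, the FIFO dispatch order, and swapping out the most recently queued process. For instance, the process currently on the CPU is not counted against the cap.

```python
from collections import deque

class ToyScheduler:
    """Hypothetical model of the long-, short- and medium-term scheduler roles."""

    def __init__(self, max_in_memory):
        self.max_in_memory = max_in_memory  # cap on ready processes (stand-in for degree of multiprogramming)
        self.job_pool = deque()    # submitted jobs not yet admitted
        self.ready = deque()       # admitted processes waiting for the CPU
        self.suspended = deque()   # processes swapped out of memory

    def submit(self, job):
        self.job_pool.append(job)

    def long_term_admit(self):
        """Long-term: admit jobs from the pool while the ready queue has room."""
        while self.job_pool and len(self.ready) < self.max_in_memory:
            self.ready.append(self.job_pool.popleft())

    def short_term_dispatch(self):
        """Short-term: hand the CPU to the next ready process (FIFO in this sketch)."""
        return self.ready.popleft() if self.ready else None

    def medium_term_swap_out(self):
        """Medium-term: suspend the most recently queued ready process to free memory."""
        if self.ready:
            self.suspended.append(self.ready.pop())

    def medium_term_swap_in(self):
        """Medium-term: resume a suspended process once there is room again."""
        if self.suspended and len(self.ready) < self.max_in_memory:
            self.ready.append(self.suspended.popleft())

s = ToyScheduler(max_in_memory=2)
for job in ["J1", "J2", "J3"]:
    s.submit(job)
s.long_term_admit()                # ready: J1, J2 (J3 stays in the job pool)
s.medium_term_swap_out()           # J2 suspended; ready: J1
s.long_term_admit()                # J3 admitted; ready: J1, J3
running = s.short_term_dispatch()  # J1 gets the CPU; ready: J3
s.medium_term_swap_in()            # J2 resumes; ready: J3, J2
print(running, list(s.ready))
```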

Why Do We Need CPU Scheduling in Operating Systems

- Maximizing System Throughput: Efficient CPU scheduling keeps the CPU busy, maximizing the number of tasks it completes in a given time. For example, while one process waits for I/O, the scheduler can run another instead of leaving the CPU idle. This way the system makes progress on many tasks concurrently.

- Minimizing Response Time: Scheduling reduces wait times by quickly assigning CPU time to tasks as needed. With efficient CPU scheduling, users experience reduced delays, leading to a faster response when interacting with applications.

- Ensuring Fair Access to Resources: Algorithms such as Round Robin, which give every process a turn in rotation, prevent any single process from monopolizing CPU time. This keeps system performance stable and predictable. Not every algorithm is fair in this sense: Shortest Job Next (SJN), for instance, can keep postponing long processes.

- Reducing Latency and Smooth Transitioning: Lowering dispatch latency (the time taken to stop one process and start another on the CPU) improves performance, especially in real-time systems. Fast context switching keeps transitions smooth and reduces noticeable lags.

- Preventing Starvation: Proper scheduling prevents starvation, where lower-priority tasks never receive CPU time. (Deadlock, where processes wait indefinitely on resources held by each other, is handled mainly by resource-allocation techniques rather than by CPU scheduling alone.)

Key Terminologies For CPU Scheduling In Operating Systems

Understanding the basic terminology is essential for grasping CPU scheduling in operating systems. Here are some important terms:

- Ready Queue: A data structure that holds all processes waiting for CPU execution, ordered based on the scheduling algorithm.

- Arrival Time: The time at which a process enters the ready queue. Arrival time plays a significant role in ordering tasks. For instance, under First-Come, First-Served scheduling, if Process A arrives before Process B, A is executed first.

- Burst Time: The amount of CPU time a process requires to complete its execution (or its current CPU burst). Knowing burst times helps predict how long each task will take. Running shorter bursts first reduces the average waiting time across all processes.

- Turnaround Time: The total time from when a process arrives until it completes (completion time minus arrival time), encompassing both waiting and execution time.

- Waiting Time: The total time a process spends waiting in the ready queue. For a process that does no I/O, this equals turnaround time minus burst time.

- Context Switch: The act of saving a process's state and loading another, which is crucial in preemptive scheduling for multitasking.

- Throughput: The number of processes completed per time unit, an indicator of system productivity.
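The relationships between these terms can be checked with a small worked example. It assumes a hypothetical workload of three processes that each make a single CPU burst (no I/O) and run FCFS on one CPU. Under those assumptions:

- turnaround time is completion minus arrival;
- waiting time is turnaround minus burst;
- throughput is completed processes divided by elapsed time.

```python
# Hypothetical workload: (name, arrival time, burst time, completion time).
# Completion times come from running the processes FCFS on one CPU:
# P1 runs 0-5, P2 runs 5-8, P3 runs 8-9.
workload = [
    ("P1", 0, 5, 5),
    ("P2", 1, 3, 8),
    ("P3", 2, 1, 9),
]

def metrics(arrival, burst, completion):
    """Return (turnaround time, waiting time) for a process with a single CPU burst."""
    turnaround = completion - arrival   # time from arrival to completion
    waiting = turnaround - burst        # time in the ready queue, not on the CPU
    return turnaround, waiting

results = {name: metrics(a, b, c) for name, a, b, c in workload}
for name, (tat, wt) in results.items():
    print(f"{name}: turnaround={tat}, waiting={wt}")

avg_waiting = sum(wt for _, wt in results.values()) / len(results)
elapsed = max(c for _, _, _, c in workload) - min(a for _, a, _, _ in workload)
throughput = len(workload) / elapsed
print(f"average waiting = {avg_waiting:.2f}, throughput = {throughput:.2f} processes/unit")
```

The waiting times are:

- P1 waits 0 units;
- P2 waits 4;
- P3 waits 6.

That gives an average waiting time of about 3.33 units. Three processes finish in 9 units, a throughput of about 0.33 processes per unit of time.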

Types of CPU Scheduling (Non-Preemptive Vs. Preemptive Scheduling)

There are two primary types of CPU scheduling in operating systems, namely, preemptive and non-preemptive. Let’s take a look at what these terms mean.

Non-Preemptive Scheduling

Non-preemptive scheduling lets a process keep the CPU until it finishes its CPU burst. It does so either by terminating or by voluntarily giving up the CPU (for example, to wait for I/O). In other words, once a task starts running, the scheduler does not interrupt it. This makes the method suitable for tasks that should run without interruption.

- For example, if Task A begins executing, it will continue until completion, even if a higher-priority task arrives.

- Non-preemptive scheduling is simple to implement and incurs fewer context switches than preemptive methods, reducing overhead.

- However, it may lead to inefficiency as high-priority tasks might have to wait behind lower-priority ones.

Preemptive Scheduling

This type of CPU scheduling allows the OS to interrupt a currently running process. For example, it may do so when a higher-priority task arrives or when the running process's time slice expires. This allows for better responsiveness and prioritization of critical processes over less important ones.

- Real-time systems often use preemptive scheduling because it ensures that time-critical tasks meet their deadlines effectively.

- Preemptive scheduling provides better control over system resources by allowing the operating system to prioritize tasks dynamically based on factors like task priority or remaining execution time.
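The difference between the two types shows up clearly in a small simulation. The sketch below runs priority scheduling one time unit at a time, with a flag that switches preemption on or off. The workload, the `simulate` function, and the convention that a lower number means higher priority are assumptions made for illustration.

```python
def simulate(procs, preemptive):
    """Priority scheduling on one CPU, advancing one time unit per loop iteration.

    procs maps name -> (arrival, burst, priority); a lower number means higher priority.
    Returns the timeline as a string, one character per time unit ('-' = idle).
    """
    remaining = {name: burst for name, (_, burst, _) in procs.items()}
    running, timeline, t = None, [], 0
    while any(remaining.values()):
        ready = [n for n, (arrival, _, _) in procs.items()
                 if arrival <= t and remaining[n] > 0]
        if not ready:
            timeline.append("-")   # nothing has arrived yet: CPU idle
            t += 1
            continue
        best = min(ready, key=lambda n: (procs[n][2], procs[n][0]))  # priority, then arrival
        # Non-preemptive: only choose a new process once the current one has finished.
        # Preemptive: re-evaluate every time unit, so a higher-priority arrival takes over.
        if preemptive or running is None or remaining[running] == 0:
            running = best
        timeline.append(running)
        remaining[running] -= 1
        t += 1
    return "".join(timeline)

# Hypothetical workload: A (low priority) starts first; B (high priority) arrives at t=1.
procs = {"A": (0, 5, 2), "B": (1, 2, 1)}
print("non-preemptive:", simulate(procs, preemptive=False))
print("preemptive:    ", simulate(procs, preemptive=True))
```

Without preemption, low-priority A keeps the CPU and B waits 4 units (`AAAAABB`). With preemption, B takes over as soon as it arrives at time 1 (`ABBAAAA`), and A finishes later.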

Now that we know about the types of CPU scheduling in operating systems, let’s discuss what we mean by scheduling algorithms.

Types Of CPU Scheduling Algorithms In OS

A CPU scheduling algorithm is a method used to determine the order of process execution based on system requirements. Here are a few different CPU scheduling algorithms that are commonly used:

- First-Come, First-Served (FCFS): Processes are executed in the order in which they arrive, i.e., based on their arrival time. For example, if Process A arrives before Process B, A is executed first. While simple, FCFS can lead to long waiting times for short processes that get stuck behind longer ones.

- Shortest Job Next (SJN): The process with the shortest burst time is prioritized. If two processes have equal burst times, FCFS is used to break the tie. This can minimize average waiting time but may cause starvation for longer tasks.

- Round Robin Scheduling (RR): In this algorithm, each process receives a fixed time slice (quantum) in a rotating order. If a process doesn't complete within its quantum, it goes back to the end of the queue and waits for its turn again. RR prevents starvation and provides reasonable response times for all tasks. However, a quantum that is too small causes excessive context-switching overhead, while a very large quantum makes RR behave like FCFS.

- Priority CPU Scheduling Algorithm: This algorithm assigns priority levels to every process, and higher-priority tasks are executed first. It can improve efficiency for urgent tasks but may lead to starvation of low-priority processes.

- Multilevel Queue Scheduling: In this CPU scheduling algorithm, processes are categorized into multiple queues based on criteria like priority or process type, with each queue having its own scheduling algorithm.

- Multilevel Feedback Queue: An extension of multilevel queue scheduling, it allows processes to move between queues based on their behavior and needs, providing flexibility for varying task requirements.
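To compare the non-preemptive algorithms above, the following sketch runs FCFS, SJN, and priority scheduling on the same hypothetical workload and reports average waiting times. It assumes:

- a single CPU;
- no I/O;
- burst times known in advance;
- a lower priority number means higher priority.

```python
from dataclasses import dataclass

@dataclass
class Proc:
    name: str
    arrival: int
    burst: int
    priority: int  # lower number = higher priority (an assumed convention)

def run_non_preemptive(procs, key):
    """Simulate a non-preemptive scheduler on one CPU.

    `key` ranks the ready processes; the one with the smallest key runs next.
    Returns a dict mapping each process name to its waiting time.
    """
    pending = sorted(procs, key=lambda p: p.arrival)  # stable: ties keep list order
    t, waiting = 0, {}
    while pending:
        ready = [p for p in pending if p.arrival <= t]
        if not ready:                    # CPU idles until the next arrival
            t = pending[0].arrival
            continue
        p = min(ready, key=key)          # min() returns the first of any tied items
        waiting[p.name] = t - p.arrival  # time spent in the ready queue
        t += p.burst                     # runs to completion: no preemption
        pending.remove(p)
    return waiting

policies = {
    "FCFS": lambda p: p.arrival,
    "SJN": lambda p: (p.burst, p.arrival),         # ties broken FCFS
    "Priority": lambda p: (p.priority, p.arrival),
}

# Hypothetical workload: all three processes arrive at time 0, in the order listed.
workload = [Proc("P1", 0, 8, 1), Proc("P2", 0, 4, 3), Proc("P3", 0, 1, 2)]

averages = {}
for name, key in policies.items():
    waits = run_non_preemptive(workload, key)
    averages[name] = sum(waits.values()) / len(waits)
    print(f"{name:8} waits={waits} average={averages[name]:.2f}")
```

With the long process P1 listed first, the three policies behave as follows:

- FCFS makes the short jobs wait behind P1, for an average wait of 6.67 units.
- SJN runs the shortest jobs first, for an average of 2.00.
- Priority scheduling follows the assigned priorities, for an average of 5.67.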

We now know about the various scheduling algorithms. For more detailed information on these, read: Scheduling Algorithms In OS (Operating System) Explained +Examples
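The multilevel feedback queue described in the list above can be sketched as follows. This is a simplified illustration built on the following assumptions:

- three levels, with time slices of 2, 4, and run-to-completion;
- all processes arrive at time 0 and never block for I/O;
- a process that uses its whole slice is demoted by one level.

Real implementations also handle new arrivals, I/O, and often periodic priority boosts. None of these is modeled here, and the `mlfq` function is hypothetical.

```python
from collections import deque

def mlfq(bursts, quanta=(2, 4, None)):
    """Minimal multilevel feedback queue sketch.

    bursts: dict name -> CPU burst; all processes arrive at time 0 and never block.
    quanta: time slice per level; None means that level runs a process to completion.
    A process that uses its whole slice without finishing is demoted one level.
    Returns a list of (name, start, end, level) slices in execution order.
    """
    queues = [deque() for _ in quanta]
    for name in bursts:
        queues[0].append(name)                # every process starts at the top level
    remaining = dict(bursts)
    t, trace = 0, []
    while any(queues):
        level = next(i for i, q in enumerate(queues) if q)  # highest non-empty level
        name = queues[level].popleft()
        quantum = quanta[level]
        run = remaining[name] if quantum is None else min(quantum, remaining[name])
        trace.append((name, t, t + run, level))
        t += run
        remaining[name] -= run
        if remaining[name] > 0:               # used its full slice: demote one level
            queues[min(level + 1, len(queues) - 1)].append(name)
    return trace

trace = mlfq({"A": 1, "B": 7, "C": 3})
for step in trace:
    print(step)
```

The outcomes for the three jobs are:

- The one-unit job A finishes at time 1 without ever leaving the top queue.
- C finishes at time 10 after one demotion.
- The long job B sinks to the bottom level and finishes last, at time 11.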

Criteria For Effective CPU Scheduling

There are some criteria that must be met for CPU scheduling to be effective. They are:

- Maximizing CPU Utilization: The CPU should be kept as busy as possible by distributing its time efficiently, so that it is rarely left idle. High utilization is essential for productivity and overall system performance.

- Maximizing Throughput: When considering different scheduling algorithms, selecting one that enhances throughput can significantly benefit system operations. It increases the number of tasks completed in a set timeframe, which is vital for productivity.

- Minimizing Waiting Time: Processes should spend as little time as possible in the ready queue, i.e., they should be executed promptly once they are ready. This improves response times and user experience and contributes to efficient CPU resource allocation.

- Minimizing Turnaround Time: When selecting a CPU scheduling algorithm, one should aim to complete each task as quickly as possible from arrival to finish. This is especially important in time-sensitive applications.

- Fairness: It is also required to ensure that each process receives a fair share of CPU time. This helps prevent starvation and promotes a balanced use of CPU resources.
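CPU utilization and throughput can be computed directly from a schedule. In this sketch the Gantt chart is hypothetical, and `None` marks an interval where the CPU sits idle. Every listed process is assumed to finish within the chart.

```python
# Hypothetical Gantt chart: (start, end, process); None marks an idle CPU.
gantt = [(0, 4, "P1"), (4, 6, None), (6, 9, "P2"), (9, 10, "P3")]

def utilization_and_throughput(gantt):
    """CPU utilization = busy time / elapsed time; throughput = completions / elapsed time."""
    elapsed = gantt[-1][1] - gantt[0][0]
    busy = sum(end - start for start, end, proc in gantt if proc is not None)
    # Assumes each listed process finishes within the chart.
    finished = {proc for _, _, proc in gantt if proc is not None}
    return busy / elapsed, len(finished) / elapsed

util, thr = utilization_and_throughput(gantt)
print(f"utilization = {util:.0%}, throughput = {thr:.2f} processes per time unit")
```

The 2-unit idle gap between P1 and P2 is what keeps utilization at 80% rather than 100%.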

Factors Influencing CPU Scheduling Decisions

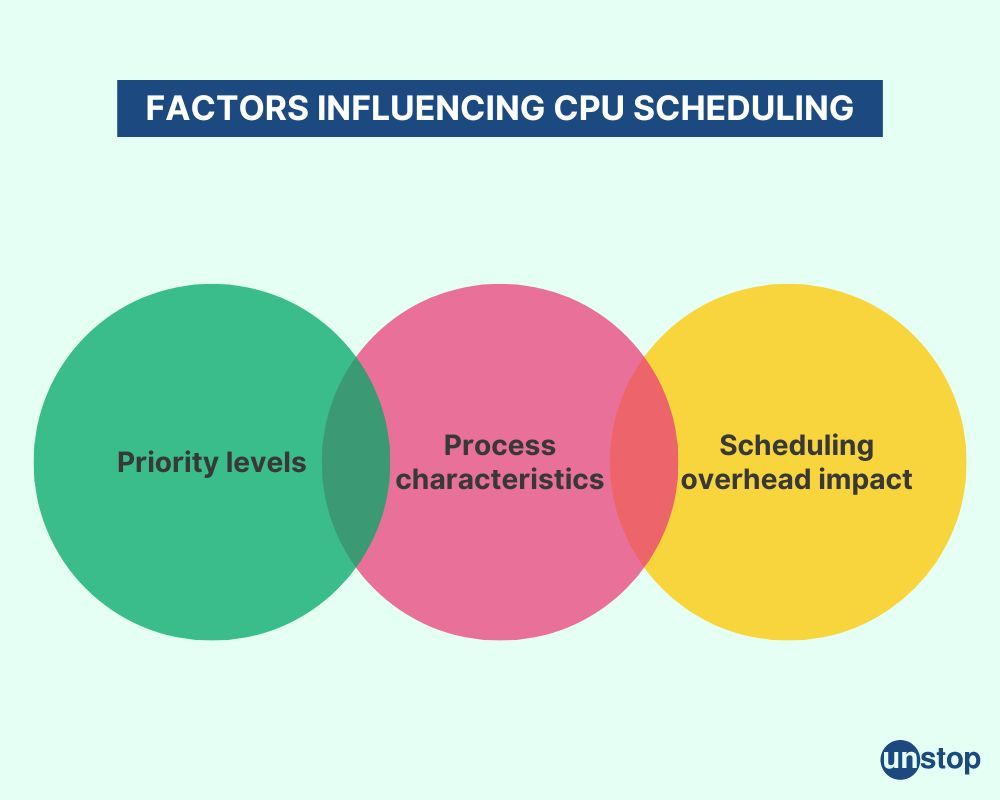

Several factors impact the choice of methods and performance of CPU scheduling in operating systems. Here are the three key factors that influence CPU scheduling decisions:

- Priority Levels: Assigning priorities to processes ensures that critical tasks are handled promptly. In priority-based scheduling, each process is assigned a priority that determines its execution order, and higher-priority processes run first.

- Process Characteristics: Whether a process is I/O-bound (short CPU bursts, frequent waits for input/output) or CPU-bound (long CPU bursts) influences its scheduling needs. Knowing this mix helps the OS make better scheduling decisions and keep both the CPU and I/O devices busy.

- Scheduling Overhead: The time and resources needed for context switching between processes affect CPU scheduling decisions significantly. So, algorithms are often chosen to minimize this overhead, which ultimately enhances the overall efficiency of the system.
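Scheduling overhead can be estimated with simple arithmetic. Suppose every process uses its full time slice (quantum) `q`, and each slice is followed by a context switch costing `s`. The fraction of CPU time lost to switching is then s / (q + s). The 0.1 ms switch cost below is a hypothetical figure chosen for illustration, not a measured value.

```python
def switch_overhead(quantum_ms, switch_ms):
    """Fraction of CPU time lost to context switching, assuming every process uses
    its full quantum and each quantum is followed by exactly one context switch."""
    return switch_ms / (quantum_ms + switch_ms)

SWITCH_MS = 0.1  # hypothetical context-switch cost, for illustration only
for q in (1, 10, 100):
    print(f"quantum={q:>3} ms -> overhead {switch_overhead(q, SWITCH_MS):.2%}")
```

With these numbers, a 1 ms quantum wastes about 9% of CPU time on switching, while a 100 ms quantum wastes about 0.1%. This is why very small time slices are costly.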

Objectives & Considerations In Designing CPU Scheduling Algorithms

We’ve already discussed the key factors that affect decision making for CPU scheduling in operating systems. Now, let’s look at the objectives and considerations in designing scheduling algorithms:

Fairness

In designing CPU scheduling algorithms, fairness is an essential aspect. It ensures that all processes get an equal opportunity to utilize the CPU's processing time.

- This prevents any single process from being unfairly starved of resources.

- Processes receiving a fair share of the CPU's processing time can lead to improved system performance overall.

- By implementing fair scheduling policies, operating systems can enhance user satisfaction and prevent bottlenecks in resource allocation.

Efficiency

Efficiency plays a vital role in CPU scheduling algorithms as it aims to maximize system throughput while minimizing response times.

- Efficient algorithms help in utilizing the available resources optimally, leading to better overall system performance.

- Maximizing system throughput ensures that the CPU is constantly utilized effectively, handling more tasks efficiently within a given period. Minimizing response times contributes to faster task completion and enhances user experience.

Conclusion

CPU scheduling is a fundamental aspect of operating systems, ensuring efficient, fair, and timely process management to optimize overall system performance.

- By strategically allocating CPU resources through various scheduling algorithms, the OS can balance tasks, improve response times, and enhance user experience.

- Each scheduling algorithm, whether preemptive or non-preemptive, addresses unique needs—such as minimizing waiting times, maximizing throughput, or prioritizing critical tasks.

- Understanding the types of CPU scheduling in operating systems, key terminologies, and influential factors provides essential insights into how modern operating systems manage multitasking and resource allocation.

- With the flexibility to implement different scheduling methods based on system requirements, CPU scheduling serves as the backbone of multitasking systems, from handling background tasks to running complex real-time applications.

- In designing efficient scheduling systems, OS developers must weigh critical factors like system efficiency, fairness, and responsiveness. This balance not only maximizes CPU utilization but also ensures that the system remains responsive, stable, and resource-efficient.

CPU scheduling in operating systems continues to evolve alongside advancements in computing, enabling operating systems to handle increasingly complex workloads and support diverse user demands.

Frequently Asked Questions

Q1. What is CPU scheduling in an operating system?

CPU scheduling in an operating system is the process of deciding which process from the ready queue should be assigned to the CPU for execution. It manages the order and timing of processes to maximize efficiency, ensure fairness, and enhance system throughput.

Q2. Why is understanding CPU scheduling important?

Understanding CPU scheduling in operating systems is crucial as it directly affects system performance, resource allocation, and user experience. Efficient scheduling ensures fair distribution of CPU time among processes, reduces waiting and response times, and optimizes the overall productivity of the system.

Q3. What is the role of a process scheduler in CPU scheduling?

The process scheduler is a system component responsible for selecting processes from the ready queue and assigning them to the CPU based on specific scheduling algorithms and policies. It essentially implements CPU scheduling by managing the execution sequence of processes.

Q4. What are CPU-bound and I/O-bound processes, and why are they relevant to CPU scheduling?

CPU-bound processes spend most of their time on computation and have long CPU bursts. I/O-bound processes spend more of their time waiting for input/output and use the CPU only in short bursts. Distinguishing between them helps the OS balance CPU and I/O work and prevent bottlenecks. For example, giving I/O-bound processes the CPU promptly lets them issue their next I/O request quickly. That keeps devices busy while CPU-bound work continues.

Q5. How does preemptive and non-preemptive scheduling differ?

In preemptive CPU scheduling algorithms, the operating system can interrupt a running process to assign CPU time to a more critical task. In contrast, non-preemptive CPU scheduling algorithms don’t allow interruptions; a process continues executing until it completes or voluntarily relinquishes control.

Q6. What factors influence decisions in CPU scheduling?

Decisions in CPU scheduling are influenced by several factors, including:

- Priority Levels: Higher-priority processes are typically scheduled before lower-priority ones.

- Process Characteristics: Whether a process is I/O-bound or CPU-bound can affect its scheduling.

- Burst Time and Arrival Time: The time a process requires and when it arrives in the ready queue impact scheduling order.

- Scheduling Algorithm: The choice of scheduling algorithm (e.g., FCFS, Round Robin) affects which process is selected next and how resources are allocated.

Q7. How does Round Robin scheduling prevent process starvation?

In Round Robin scheduling, each process receives a fixed time slice or quantum in a cyclical order. This time-sharing approach ensures that no process is left waiting indefinitely, effectively preventing starvation and providing fair CPU access.
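The starvation-freedom argument can be checked with a short simulation. Assume all processes arrive at time 0 and context switches take no time. Then, with n processes and quantum q, no process ever waits more than (n - 1) × q between its turns. The `round_robin` and `max_gap` helpers and the workload are hypothetical.

```python
from collections import deque

def round_robin(bursts, quantum):
    """Round Robin on one CPU; all processes arrive at time 0 in the order given.
    Returns (slices, first_start): the execution trace and when each process first ran."""
    queue = deque(bursts)              # process names in arrival order
    remaining = dict(bursts)
    t, slices, first_start = 0, [], {}
    while queue:
        name = queue.popleft()
        first_start.setdefault(name, t)
        run = min(quantum, remaining[name])
        slices.append((name, t, t + run))
        t += run
        remaining[name] -= run
        if remaining[name] > 0:
            queue.append(name)         # unfinished: back to the end of the queue
    return slices, first_start

def max_gap(slices):
    """Longest time any process waited between two of its own consecutive slices."""
    last_end, worst = {}, 0
    for name, start, end in slices:
        if name in last_end:
            worst = max(worst, start - last_end[name])
        last_end[name] = end
    return worst

slices, first_start = round_robin({"P1": 10, "P2": 3, "P3": 4}, quantum=2)
print(first_start, max_gap(slices))
```

Here three processes share the CPU with a quantum of 2. Each one first runs by time 4, and no process waits more than 4 units between turns, even though P1 needs 10 units in total.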

Q8. Why might an operating system use different CPU scheduling algorithms for different tasks?

Different tasks may have unique requirements, such as real-time processing, batch processing, or interactive response needs. By selecting suitable algorithms for specific tasks, the OS can optimize both system performance and user experience.

This concludes our discussion on CPU scheduling in operating systems.

An economics graduate with a passion for storytelling, I thrive on crafting content that blends creativity with technical insight. At Unstop, I create in-depth, SEO-driven content that simplifies complex tech topics and covers a wide array of subjects, all designed to inform, engage, and inspire our readers. My goal is to empower others to truly #BeUnstoppable through content that resonates. When I’m not writing, you’ll find me immersed in art, food, or lost in a good book—constantly drawing inspiration from the world around me.
