- What Is An Operating System?

- What Is The Importance Of Operating Systems?

- What Are The Functions Of Operating System?

- Services Provided By Operating System

- Types Of Operating System

- Some Popular Operating Systems

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What Is An Operating System?

- Types Of Operating Systems

- Batch OS | Types Of Operating Systems

- Multi-Programming OS | Types Of Operating Systems

- Multi-Processing OS | Types Of Operating Systems

- Multi-Tasking OS | Types Of Operating Systems

- Time-Sharing OS | Types Of Operating Systems

- Distributed OS | Types Of Operating Systems

- Network OS | Types Of Operating Systems

- Real-Time OS | Types Of Operating Systems

- Mobile OS | Types Of Operating Systems

- History Of Operating System

- Operating Systems in Business: Achieving Maximum Utilization

- Advantages & Disadvantages of Operating Systems

- Popular Operating Systems In The Market

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What Is A Process In Programming?

- What Is Process Scheduling In OS?

- The Need for Process Scheduling In OS

- What is CPU Scheduling Algorithm?

- Different Types Of CPU Scheduling Algorithms

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- Evolution & Generations Of Computers

- Definition Of A Computer System

- Components & Classification Of Computer Systems

- Basics Of Computer Networking & The Internet

- Introduction To Operating Systems

- Exploring Computer Memory & Storage

- Importance Of Computer Security & Privacy

- Applications Of Computer Fundamentals

- Job Prospects With Computer Fundamentals

- Frequently Asked Questions (FAQs)

- Master Your Skills: Quiz Time!

- Types of threads per process

- Introducing Thread Models

- What is Multithreading?

- Advantages of Multithreading

- Disadvantages of Multithreading

- Multithreading Models

- Multithreading Vs. Multitasking

- Difference between Process, Kernel Thread and User Thread

- Conclusion

- FAQs

- Test Your Skills: Quiz Time

- What is Booting Process?: Understanding the Types

- Steps in the Booting Process of Computer

- Boot Sequence in Operating System

- Booting Process: Evolution & Troubleshooting

- What is Secure Boot?

- What is Dual Booting?

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What Is A Counter?

- Difference Between Synchronous And Asynchronous Counters

- What Is Synchronous Counter?

- What Is Asynchronous Counter?

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- History Of Mutex And Semaphore

- Difference Between Mutex And Semaphore

- What Is A Mutex?

- What Is A Semaphore?

- Common Facts About Mutex And Semaphore

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What is a File?

- What is a Folder?

- Difference Between File and Folder (Table)

- Summing Up

- Test Your Skills: Quiz Time

- Paging

- Advantages of Paging

- Disadvantages of Paging

- Segmentation

- Advantages of segmentation

- Disadvantages of segmentation

- Difference between Paging and Segmentation

- Conclusion

- FAQs

- Test Your Skills: Quiz Time

- Types of Fragmentation

- Internal Fragmentation

- External Fragmentation

- Difference between Internal and External Fragmentation

- Summing up

- Test Your Skills: Quiz Time

- What is Paging in OS?

- Characteristics of Paging in OS

- Paging in OS: Page Table

- Paging with TLB (Translation Look-aside Buffer)

- Advantages of Paging in OS

- Disadvantages of Paging in OS

- Summing Up

- Frequently Asked Questions

- Test your skills: Quiz Time

- What Is Starvation In OS?

- Starvation In OS: Common Causes

- What Is Deadlock?

- Difference Between Deadlock And Starvation in OS

- Solutions To Starvation In OS

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- Introduction to DOS: A brief history

- Introduction to Windows: A brief history

- Working of DOS

- Working of Windows

- Line of difference: DOS versus Windows

- Versions of DOS and Windows

- Summing Up

- Test Your Skills: Quiz Time

- Understanding File And Directory In OS

- The Directory Structure In OS

- Types Of Directory Structure In OS

- Directory Implementation In OS

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What is the purpose of CPU scheduling?

- What is preemptive scheduling?

- What is a non-preemptive scheduling method?

- Comparison between preemptive and non-preemptive scheduling

- Basic differences between the preemptive and non-preemptive scheduling techniques

- Advantages of preemptive scheduling -

- Disadvantages of preemptive scheduling

- Advantages of non-preemptive scheduling -

- Disadvantages of non-preemptive scheduling

- Test Your Skills: Quiz Time

- What Is Multiprogramming?

- Advantages of Multiprogramming Operating System

- Disadvantages of Multiprogramming Operating System

- What Is Multitasking?

- Advantages of Multitasking Operating System

- Disadvantages of Multitasking Operating System

- Multiprogramming Vs Multitasking

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- Character User Interface

- Advantages of CUI

- Disadvantages of CUI

- Graphical User Interface

- Advantages of GUI

- Disadvantages of GUI

- Major Differences Between CUI and GUI

- Summing Up

- Test Your Skills: Quiz Time

Scheduling Algorithms In OS (Operating System) Explained +Examples

An operating system (OS) connects computer hardware to the user by facilitating the interaction between various applications and the hardware/ devices. Its job is to perform functions such as memory management, file management, process management, etc. An OS performs many tasks and handles multiple processes/ requests at a single point in time. There is, hence, the need to chart out a path that guides the OS in catering to each of these processes without running into problems or missing anything.

This is where the scheduling algorithms in OS come into play. Scheduling algorithms help ensure that the CPU prioritizes a process according to a schedule while keeping others on standby so that resource utilization is optimized.

In this article, we will discuss everything from what scheduling is in OS, its basics, its needed, and how it helps optimum CPU utilization with different types of scheduling algorithms in OS. So read on.

What Is A Process In Programming?

In computer programming, a process refers to a program that is being executed in that instance. Ideally, a program does nothing until and unless a CPU executes the program instructions.

In short, we can say that a process is a program in execution. This then leads to the question- who decides the sequence in which the programs must be executed? Or which process to carry on when? The answer, as you might have guessed, is process scheduler.

What Is Process Scheduling In OS?

Process scheduling is the activity of deciding which process should be performed first (or when) based on a particular strategy/ algorithm. The process manager performs this activity by removing an active process from the CPU and selecting another from the queue.

The need for scheduling in OS arises since most applications are multi-programing, i.e., they allow multiple processes to be loaded and shared with the CPU at a point in time. In multiprogramming operating systems, the manager needs to decide which of these processes must be performed first.

The three major types of schedulers:

- Long-term scheduler

- Medium-term scheduler

- CPU or short-term scheduler

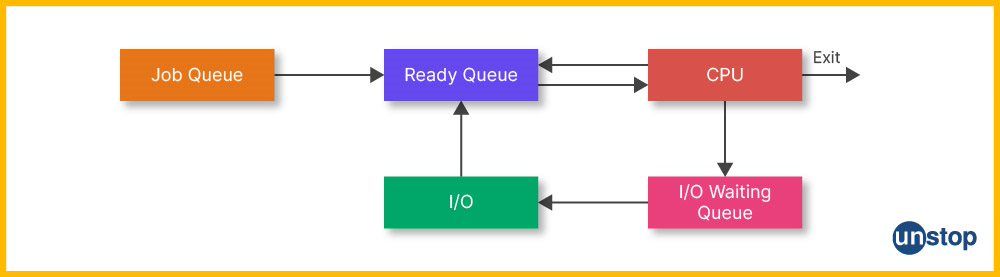

Another component of process scheduling is the process queues. All the programs and processes in the system are split up into process scheduling queues as follows:

- Job queue: All the programs in the system stay in this queue before they are up for execution. (waiting queue)

- Ready queue: The programs that are ready to be executed remain in this queue just before they are picked up for execution.

- Device queue: This queue consists of the processes that have been blocked due to a lack of input/output (I/O) device.

The Need for Process Scheduling In OS

At any given point in time, numerous programs within an operating system (OS) are lined up, waiting to be executed. These programs, or processes, compete for access to the CPU, which can only execute one process at a time (on a single-core processor). To handle this efficiently, the OS employs a component called the process scheduler.

The process scheduler determines which program should run at a particular moment, when it should pause, and which process should run next. This continuous decision-making ensures that the system remains responsive and performs smoothly.

Without an intelligent scheduling mechanism, certain programs could monopolize CPU time, while others might starve and never get a chance to execute. Scheduling allows the OS to allocate CPU time to each process based on a predefined strategy or algorithm, such as Round Robin, Shortest Job Next, or Priority Scheduling. The chosen strategy affects the system’s performance characteristics, such as responsiveness and fairness.

Here’s why process scheduling is essential:

-

Maximizing CPU Utilization: Scheduling helps the CPU stay busy as much as possible. By swiftly switching between processes, the CPU minimizes idle time and optimizes the use of processing power.

-

Minimizing Waiting Time: The scheduler aims to ensure that the time a process spends waiting in the queue before getting CPU time is kept as short as possible. This improves overall system efficiency and user experience.

-

Reducing Turnaround Time: Turnaround time refers to the total time taken for a process to complete, from submission to completion. Good scheduling minimizes this duration, ensuring that processes finish faster.

-

Improving Response Time: In interactive systems, users expect prompt feedback. Process scheduling helps achieve this by quickly assigning CPU time to processes that handle user interactions, thereby reducing perceived delays.

In summary, process scheduling plays a vital role in managing the execution of multiple processes. It ensures that resources are distributed fairly and efficiently, system responsiveness is maintained, and CPU utilization is maximized - enabling the OS to deliver a seamless multitasking experience.

What is CPU Scheduling Algorithm?

Now that we have covered most of the basics, let’s discuss what is a CPU scheduling algorithm. A scheduling algorithm in OS is the algorithm that defines how much CPU time must be allotted to which process and when. There are two types of scheduling algorithms:

- Non-preemptive scheduling algorithms: For these algorithms once a process starts running, they are not stopped until completion. That is, under non-preemptive algorithms, processes cannot be pre-empted in favor of other high-priority processes before their runtime is over.

- Preemptive scheduling algorithms: These algorithms allow for low-priority processes to be preempted in favor of a high-priority process, even if they haven’t run to completion.

The objectives of these process scheduling algorithms are:

- Maximize CPU utilization

- Maximize throughput (i.e. number of processes to complete execution per unit time)

- Minimize wait, turnaround, and response time

Different Types Of CPU Scheduling Algorithms

There are 10 major types of CPU scheduling algorithms, which are discussed in detail.

1. First Come First Serve (FCFS) Scheduling Algorithm

The FCFS algorithm is the simplest of all CPU scheduling algorithms in OS. This is because the deciding principle behind it is just as its name suggests- on a first come basis. The job that requests execution first gets the CPU allocated to it, then the second, and so on.

Characteristics of FCFS scheduling algorithm:

- The algorithm is easy to understand and implement.

- Programs are executed on a first-come, first-served basis.

- It is a non-preemptive scheduling algorithm.

- In this case, the ready queue acts as the First-in-First-out (FIFO) queue, where the job that gets ready for execution first also gets out first.

- This is used in most batch systems.

Advantages Of FCFS Scheduling Algorithm:

- The fact that it is simple to implement means it can easily be integrated into a pre-existing system.

- It is especially useful when the processes have a large burst time since there is no need for context switching.

- The absence of low or high-priority preferences makes it fairer.

- Every process gets its chance to execute.

Disadvantages Of FCFS Scheduling Algorithm:

- Since its first come basis, small processes with a very short execution time, have to wait their turn.

- There is a high wait and turnaround time for this scheduling algorithm in OS.

- All in all, it leads to inefficient utilization of the CPU.

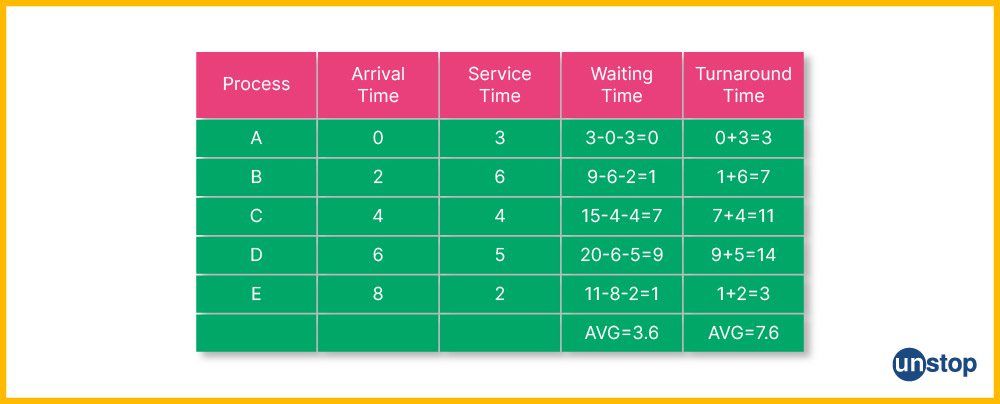

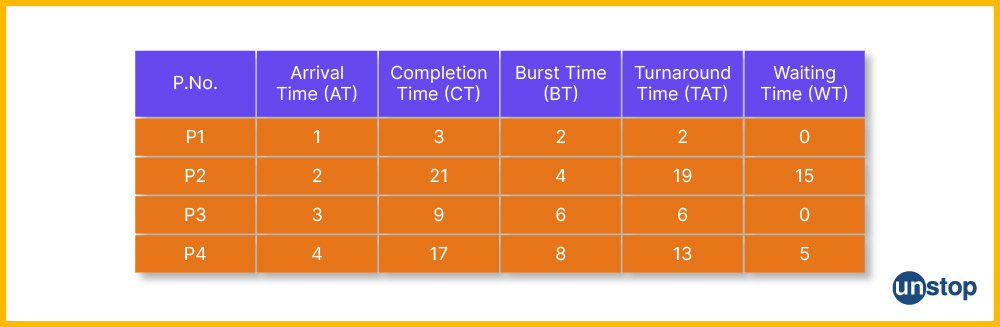

Example of FCFS scheduling algorithm in OS:

In the table above, 5 processes have arrived at the CPU at different times. The process with the minimal arrival time goes first.

- Since the first process has a burst time of 3, the CPU will remain busy for 3 units of time, which indicates that the second process will have to wait for 1 unit of time since it arrives at T=2.

- In this way, the waiting and turnaround times for all processes can be calculated. This also gives the average waiting time and the average turnaround time. We can contrast this with other algorithms for the same set of processes.

Using a queue for the execution of processes is helpful in keeping track of which process comes at what stage. Although this is one of the simplest CPU scheduling algorithms, it suffers from the convoy effect. This occurs when multiple smaller processes get stuck behind a large process, which leads to an extremely high average wait time. This is similar to multiple cars stuck behind a slow-moving truck on a single-lane road.

2. Shortest Job First (SJF) Scheduling Algorithm

The Shortest Job First (SJF) is a CPU scheduling algorithm that selects the shortest jobs on priority and executes them. The idea is to quickly get done with jobs that have short/ lowest CPU burst time, making CPU available for other, longer jobs/ processes. In other words, this is a priority scheduling algorithm based on the shortest burst time.

Characteristics of SJF scheduling algorithm:

- This CPU scheduling algorithm has a minimum average wait time since it prioritizes jobs with the shortest burst time.

- If there are multiple short jobs, it may lead to starvation.

- This is a non-preemptive scheduling algorithm.

- It is easier to implement the SJF algorithm in Batch OS.

Advantages of SJF scheduling algorithm:

- It minimizes the average waiting time and turnaround time.

- Beneficial in long-term scheduling.

- It is better than the FCFS scheduling algorithm.

- Useful for batch processes.

Disadvantages of the SJF scheduling algorithm:

- As mentioned, if short time jobs keep on coming, it may lead to starvation for longer jobs.

- It is dependent upon burst time, but it is not always possible to know the burst time beforehand.

- Does not work for interactive systems.

Example of SJF scheduling algorithm in OS:

Here, the first 2 processes are executed as they come, but when the 5th process comes in, it instantly jumps to the front of the queue since it has the shortest burst time. The turnaround time and waiting time is calculated accordingly. It's visible that this is an improvement over FCFS, as it has a smaller average waiting time as well as a smaller average turnaround time. This algorithm is especially useful in cases where there are multiple incoming processes and their burst time is known in advance. The average waiting time obtained is lower as compared to the first-come-first-served scheduling algorithm.

3. Longest Job First (LJF) Scheduling Algorithm

The Longest Job First scheduling algorithm is the opposite of SJF scheduling in OS. This algorithm calls for jobs with the longest burst time to be executed first, and then move on to jobs with short burst time.

Characteristics of LJF Scheduling Algorithm:

- Incoming execution requests are sorted on the basis of burst time in descending order.

- It is a non-preemptive CPU scheduling algorithm.

- If two jobs have the same burst time, then FCFS is followed.

- This priority scheduling in OS is both non-preemptive and preemptive.

Advantages of LJF Scheduling Algorithm:

- The longest job gets done on priority. That is, no other job gets executed until the longest one completes the execution phase.

Disadvantages of LJF Scheduling Algorithm:

- There is potential for process starvation in jobs with a short burst time.

- The average waiting and turnaround time is high.

- The CPU needs to know the burst time beforehand, which is not always possible.

Example of LJF Scheduling Algorithm in OS:

The first process is executed first, then there is a choice between selecting the second or third process. The third one is selected since it has a higher burst time. Then the fourth one gets executed since it also has a higher burst time than the second process. Finally, the second process gets its chance to go. This approach of CPU scheduling leads to very high waiting times since shorter processes might have to wait for a long period of time to get executed. This also causes the aforementioned convoy effect.

4. Priority Scheduling Algorithm In OS

This CPU scheduling algorithm in OS first executes the jobs with higher priority. That is, the job with the highest priority gets executed first, followed by the second prioritized jobs, and so on.

Characteristics of Priority Scheduling Algorithm:

Jobs are scheduled on the basis of the priority level, in descending order.

- If a job with higher priority than the one running currently comes on, the CPU preempts the current job in favor of the one with higher priority.

- But for other purposes, it follows a non-preemptive scheduling approach.

- In between two jobs with the same priority, the FCFS process decides which jobs get executed first.

- The priority of a process can be set depending on multiple factors like memory requirements, required CPU time, etc.

Advantages of Priority Scheduling Algorithm:

- This process is simpler than most other scheduling algorithms in OS.

- Priorities help in sorting the incoming processes.

- Works well for static and dynamic environments.

Disadvantages of Priority Scheduling Algorithm:

- It may lead to starvation in jobs with low priority.

- The average turnaround and waiting time might be higher than those of other CPU scheduling algorithms.

Example of Priority Scheduling Algorithm in OS:

Here, different priorities are assigned to the incoming processes. The lower the number, the higher the priority. The 1st process to be executed is the second one, since it has higher priority than the first process. Then the fourth process gets its turn. This is known as priority scheduling. The calculated times may not be the lowest but it helps to prioritize important processes over others.

5. Round Robin Scheduling Algorithm In OS

In this scheduling algorithm, the OS defines a quantum time or a fixed time period. And every job is run cyclically for this predefined period of time, before being preempted for the next job in the ready queue. The jobs that are preempted before completion go back to the ready queue to wait their turn. It is also referred to as the preemptive version of the FCFS scheduling algorithm in OS.

As seen from the figure, the scheduler executes the 3 incoming processes part by part.

Characteristics of RR Scheduling Algorithm:

- Once a job begins running, it is executed for a predetermined time and gets preempted after the time quantum is over.

- It is easy and simple to use or implement.

- The RR scheduling algorithm is one of the most commonly used CPU scheduling algorithms in OS.

- It is a preemptive algorithm.

Advantages of RR Scheduling Algorithm:

- This seems like a fair algorithm since all jobs get equal time CPU.

- Does not lead to any starvation problems.

- New jobs are added at the end of the ready queue and do not interrupt the ongoing process.

- This leads to efficient utilization of the CPU.

Disadvantages of RR Scheduling Algorithm:

- Every time a job runs the course of quantum time, a context switch happens. This adds to the overhead time and, ultimately, the overall execution time.

- A low slicing time may lead to low CPU output.

- Important tasks aren’t given priority.

- Choosing the correct time quantum is a difficult job.

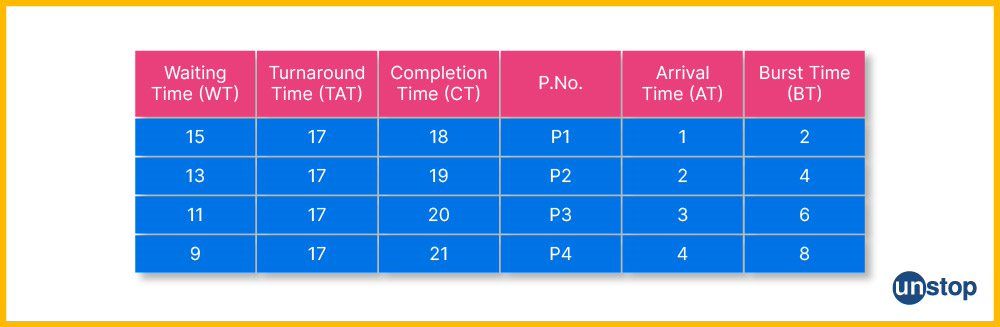

Example of RR Scheduling Algorithm in OS:

Let's take a quantum time of 4 units. The first process will execute and get completed. After a gap of 1 unit, the second process executes for 4 units. Then the third one executes since it has also arrived in the ready queue. After 4 units, the fourth process executes. This process keeps going until all processes are done. It is worth noting that the minimum average waiting time is higher than some of the other algorithms. While this approach does result in a higher turnaround time, it is much more efficient in multitasking operating environments in comparison to most other scheduling algorithms in OS.

6. Shortest Remaining Time First (SRTF) Scheduling Algorithm

The SRTF scheduling algorithm is the preemptive version of the SJF scheduling algorithm in OS. This calls for the job with the shortest burst time remaining to be executed first, and it keeps preempting jobs on the basis of burst time remaining in ascending order.

Characteristics of the SRTF Scheduling Algorithm:

- The incoming processes are sorted on the basis of their CPU-burst time.

- It requires the least burst time to be executed first, but if another process arrives that has an even lesser burst time, then the former process will get preempted for the latter.

- The flow of execution is- a process is executed for some specific unit of time and then the scheduler checks if any new processes with even shorter burst times have arrived.

Advantages of SRTF Scheduling Algorithm:

- More efficient than SJF since it's the preemptive version of SJF.

- Efficient scheduling for batch processes.

- The average waiting time is lower than that of many other scheduling algorithms in OS.

Disadvantages of SRTF Scheduling Algorithm:

- Longer processes may starve if short jobs keep getting the first shot.

- Can’t be implemented in interactive systems.

- The context switch happens too many times, leading to a rise in the overall completion time.

- The remaining burst time might not always be apparent before the execution.

Example of SRTF scheduling algorithm in OS:

Here, the first process starts first and then the second process executes for 1 unit of time. It is then preempted by the arrival of the third process, which has a lower service time. This goes on until the ready queue is empty and all processes are done executing.

7. Longest Remaining Time First (LRTF) Scheduling Algorithm

This CPU scheduling algorithm is the primitive version of the LJF algorithm. It calls for the execution of the jobs with the longest remaining burst time on a priority basis.

Characteristics of LRTF Scheduling Algorithm:

- The incoming processes are sorted in descending order based on their burst time.

- It schedules the jobs with longer remaining burst time first.

- The CPU scheduler keeps checking for new jobs with long burst times in regular intervals. Then, it preempts the current job in favor of other longer burst time remaining jobs.

Advantages of LRTF Scheduling Algorithm:

- More efficient than LJF since it is the preemptive version of the same.

- Almost all jobs get done at the same time, approximately.

- Prioritizes longer jobs first, leaving shorter ones for later.

Disadvantages of LRTF Scheduling Algorithm:

- The average waiting and turnaround time is extremely high.

- Shorter jobs may be neglected in favor of longer ones; which may cause starvation.

- Burst time has to be known beforehand.

Example of LRTF scheduling algorithm in OS:

The first process executes for one unit before being preempted by the second process. That, in turn, is preempted by the third process, which is also preempted by the fourth process. After the longest process is done, the others get executed.

8. Highest Response Ratio Next (HRRN) Scheduling Algorithm

The HRRN scheduling algorithm calls for the calculation of the response ratio of all the jobs/ processes. It then selects the job with the highest response ratio to execute on a priority basis. The response ratio (RR) is calculated as follows:

Response Ratio = (W+B)/ B

Where W is the waiting time, and B is the burst time/ service time.

Characteristics of HRRN Scheduling Algorithm:

- The HRRN algorithm selects the job with the highest response ratio and puts it up for execution.

- Once this job is executed, all the jobs are again put in the read queue to calculate wait time, and then the response ratio is calculated again. And so on.

- In case two jobs have the same response ratio, the FCFS method is used to select which is put to execution, first.

- This is a non-preemptive algorithm, that is, once a job is put to execution the next response ratio is not calculated until the job completes the execution process.

Advantages of HRRN Scheduling Algorithm:

- This is more efficient than SJF scheduling.

- The throughput for this algorithm is high or at least more than many other scheduling algorithms in OS.

- While on the one hand, the waiting time for longer jobs is lesser, on the other it encourages the execution of shorter jobs.

Disadvantages of HRRN Scheduling Algorithm:

- It is not the most implemented algorithm since we cannot always know the burst time for every job beforehand.

- Processor overhead may occur.

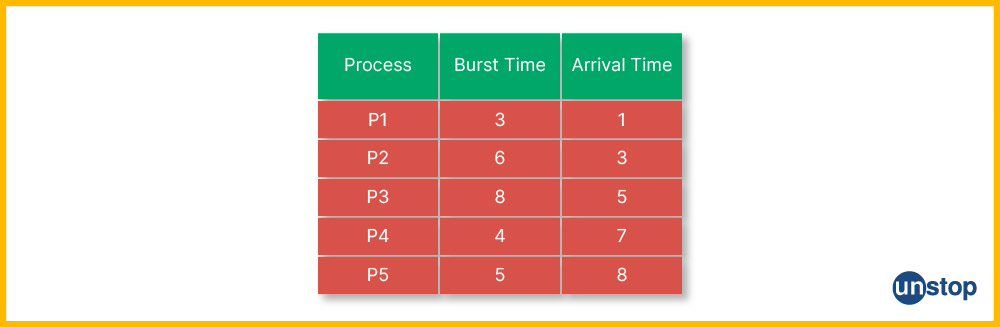

Example of HRRN scheduling algorithm in OS:

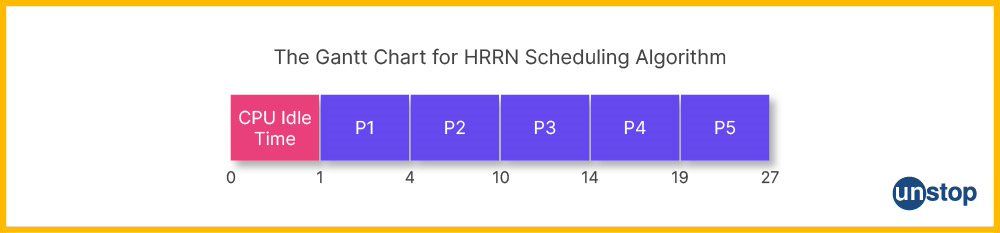

At time 0, there is no job in the ready queue, so the CPU remains idle from time 0 to 1. At time 1, P1 comes in, and since no other job is available, P1 runs to execution without the need to calculate the response ratio. This runs till time 4, when the only job in the queue is P2, so it runs till completion to time 10. At time 10, all P3, P4, and P5 are available, so there is a need to calculate the response ratios, which is done as follows:

RR(P3) = [(10-5)+8]/8 = 1.625

RR(P4) = [(10-7)+4]/4 = 1.75

RR(P5) = [(10-8)+5]/5 = 1.40

So, at time 10, the response ratio for P4 is the highest, which is why this job goes to execution next. When it runs the completion, the next job to execute is again decided on the basis of the response ratio. And so on.

9. Multiple-level Queue (MLQ) Scheduling Algorithm

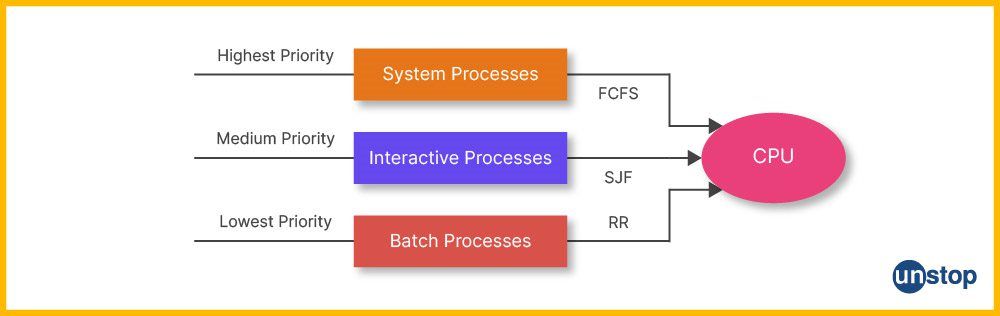

The multiple-level queue scheduling approach first divides all the processes in the ready queue into different classes based on their scheduling needs. These classes lead to the creation of multiple queues, such as queues of foreground jobs, batch jobs, etc. The OS then treats these queues individually and executes them by running different algorithms on them. Take a look at the diagram below.

The figure above displays how processes are separated based on their priority depending on the type and executed using different algorithms. Here, for example, FCFS, SJF, and the Round-Robin (RR) scheduling algorithms.

Characteristics of MLQ Scheduling Algorithm:

- Jobs with common characteristics are grouped into individual queues.

- Each queue is assigned a scheduling algorithm depending on specific needs.

- The queues are then given a priority level to decide which queue gets CPU time first.

Advantages of MLQ Scheduling Algorithm:

- Uses a combination of algorithms to provide the best results.

- The overhead time is less.

Disadvantages of MLQ Scheduling Algorithm:

- Once a job is assigned to a queue, it cannot be changed. This may lead to inflexibility and, hence, inefficiency.

- This may lead to a starvation problem if a high-priority queue does not allow for low-priority queues to take turns.

- Not easy to implement.

- Sorting the jobs might become complex.

10. Multilevel Feedback Queue (MLFQ) Scheduling Algorithm

The MLFQ algorithm is similar to the MLQ algorithm, with the primary difference being it preempts depending on feedback. That is, in MLFQ scheduling, there is an allowance for jobs to switch between queues.

Characteristics of MLFQ Scheduling Algorithm:

- This is a modification of the MLQ algorithm.

- Here the jobs are not assigned to the queues on a permanent basis, that is, they are allowed to move between queues.

Advantages of MLFQ Scheduling Algorithm:

- It is comparatively more flexible than MLQ.

- Allowing jobs to switch between queues might lead to low overhead.

- Also, switching between queues can help avoid starvation issues.

Disadvantages of MLFQ Scheduling Algorithm:

- It is even more complex than the MLQ algorithm since there is an added layer that allows jobs to switch between queues.

- It may lead to an increase in CPU overhead

- The process of selecting the best scheduler is not an easy one.

Conclusion

As is evident, scheduling algorithms are used to ensure the efficient execution of various processes within an operating system and to achieve optimum utilization of CPU time. The overall throughput and CPU utilization will depend on the type of scheduling algorithm implemented. Now, you have all the information you need to decide which scheduling algorithm in OS is best for which situation.

Frequently Asked Questions

Q. What are the different types of schedulers in an operating system?

Long-term, short-term, and medium-term schedulers are the three types of process schedulers found in operating systems.

- The long-term scheduling type, also known as the job scheduler, selects processes from the pool and loads them into memory for execution.

- The short-term scheduling type, or the CPU scheduler, selects a process from the ready queue and allocates the CPU to it.

- The medium-term scheduler is responsible for swapping out processes from memory and bringing them back in later.

Q. What is process scheduling in an operating system?

Process scheduling, which arranges processes in various stages, such as ready, waiting, and executing, is a crucial component of an operating system. Process management activities include choosing a different process based on a certain strategy and removing an active process from the CPU. The choice of which process executes at a certain moment is made by the process scheduler. Usually, it has the capacity to halt an active process, place it at the end of the active queue, and launch a new process.

Q. Explain the concept of priority scheduling.

A process scheduling method called priority scheduling chooses tasks for processing depending on their priority. Each step in this algorithm is given a priority number, and the procedure with the greatest priority is the one that is carried out first. The priority number may be determined by a variety of criteria, including memory needs, time needs, or any other resource needs. Preemptive and non-preemptive priority scheduling are the two forms that are possible. In preemptive priority scheduling, a newly arrived process with a higher priority can preempt a currently running process. In non-preemptive priority scheduling, the currently running process continues to execute until it completes or enters a waiting state.

Q. What is the purpose of a CPU burst in the context of scheduling algorithms?

In the context of scheduling algorithms, a CPU burst refers to the amount of time a process uses the CPU before it is no longer ready. Process execution is characterized by a cycle of CPU bursts and I/O activity. In most cases, CPU timings are lower than the duration of waiting for I/O operations to finish. A CPU burst's goal is to give the scheduling information about how long a process will utilise the CPU before it is no longer ready, which helps the scheduler decide which task to run next.

Q. What is round-robin scheduling?

In round-robin scheduling, each process gets a chance to execute for a fixed amount of time, and then the CPU is given to the next process in the queue. The time quantum is usually small, typically between 10 and 100 milliseconds. The round-robin scheduling method is a first-come, first-served preemptive CPU scheduling system that distributes CPU time cyclically (turn by turn) to each task that is in the ready state. The approach is straightforward, simple to apply, and starvation-free.

The advantages of round-robin scheduling include good response time and fair allocation of CPU time. It's thus, good for time-sharing systems. The disadvantage of round-robin scheduling is that it may cause unnecessary context switching, which can lead to overhead.

Q. What is meant by the term "starvation" in the context of scheduling?

When referring to scheduling, the term "starvation" describes a circumstance in which a process, although being in the ready state, is unable to obtain the resources it requires to run.

This can happen when a scheduling algorithm is not designed to allocate resources equitably, leading to some processes being given priority over others. Starvation can occur in priority-based scheduling algorithms, where high-priority processes are executed first, and low-priority processes may never get a chance to execute.

Q. What is the Shortest Remaining Time First Algorithm?

The Shortest Remaining Time First (SRTF) scheduling algorithm is a preemptive scheduling algorithm used in operating systems and it is also sometimes referred to as Shortest Job Next (SJN) or Shortest Job First (SJF) with preemption. SRTF is designed to minimize the waiting time for processes and optimize the use of CPU time by always selecting the process with the shortest remaining burst time to execute next. Due to its persistent preference for the shortest work, SRTF seeks to reduce the typical processing wait time. While this can result in frequent context shifts and more overhead, it also necessitates routinely comparing the remaining burst times of all ready processes.

Test Your Skills: Quiz Time

This compiles our discussion on scheduling algorithms in OS. Do check out the following:

An economics graduate with a passion for storytelling, I thrive on crafting content that blends creativity with technical insight. At Unstop, I create in-depth, SEO-driven content that simplifies complex tech topics and covers a wide array of subjects, all designed to inform, engage, and inspire our readers. My goal is to empower others to truly #BeUnstoppable through content that resonates. When I’m not writing, you’ll find me immersed in art, food, or lost in a good book—constantly drawing inspiration from the world around me.

Login to continue reading

And access exclusive content, personalized recommendations, and career-boosting opportunities.

Subscribe

to our newsletter

Comments

Add comment