- What Is An Operating System?

- What Is The Importance Of Operating Systems?

- What Are The Functions Of Operating System?

- Services Provided By Operating System

- Types Of Operating System

- Some Popular Operating Systems

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What Is An Operating System?

- Types Of Operating Systems

- Batch OS | Types Of Operating Systems

- Multi-Programming OS | Types Of Operating Systems

- Multi-Processing OS | Types Of Operating Systems

- Multi-Tasking OS | Types Of Operating Systems

- Time-Sharing OS | Types Of Operating Systems

- Distributed OS | Types Of Operating Systems

- Network OS | Types Of Operating Systems

- Real-Time OS | Types Of Operating Systems

- Mobile OS | Types Of Operating Systems

- History Of Operating System

- Operating Systems in Business: Achieving Maximum Utilization

- Advantages & Disadvantages of Operating Systems

- Popular Operating Systems In The Market

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What Is A Process In Programming?

- What Is Process Scheduling In OS?

- The Need for Process Scheduling In OS

- What is CPU Scheduling Algorithm?

- Different Types Of CPU Scheduling Algorithms

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- Evolution & Generations Of Computers

- Definition Of A Computer System

- Components & Classification Of Computer Systems

- Basics Of Computer Networking & The Internet

- Introduction To Operating Systems

- Exploring Computer Memory & Storage

- Importance Of Computer Security & Privacy

- Applications Of Computer Fundamentals

- Job Prospects With Computer Fundamentals

- Frequently Asked Questions (FAQs)

- Master Your Skills: Quiz Time!

- Types of threads per process

- Introducing Thread Models

- What is Multithreading?

- Advantages of Multithreading

- Disadvantages of Multithreading

- Multithreading Models

- Multithreading Vs. Multitasking

- Difference between Process, Kernel Thread and User Thread

- Conclusion

- FAQs

- Test Your Skills: Quiz Time

- What is Booting Process?: Understanding the Types

- Steps in the Booting Process of Computer

- Boot Sequence in Operating System

- Booting Process: Evolution & Troubleshooting

- What is Secure Boot?

- What is Dual Booting?

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What Is A Counter?

- Difference Between Synchronous And Asynchronous Counters

- What Is Synchronous Counter?

- What Is Asynchronous Counter?

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- History Of Mutex And Semaphore

- Difference Between Mutex And Semaphore

- What Is A Mutex?

- What Is A Semaphore?

- Common Facts About Mutex And Semaphore

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What is a File?

- What is a Folder?

- Difference Between File and Folder (Table)

- Summing Up

- Test Your Skills: Quiz Time

- Paging

- Advantages of Paging

- Disadvantages of Paging

- Segmentation

- Advantages of segmentation

- Disadvantages of segmentation

- Difference between Paging and Segmentation

- Conclusion

- FAQs

- Test Your Skills: Quiz Time

- Types of Fragmentation

- Internal Fragmentation

- External Fragmentation

- Difference between Internal and External Fragmentation

- Summing up

- Test Your Skills: Quiz Time

- What is Paging in OS?

- Characteristics of Paging in OS

- Paging in OS: Page Table

- Paging with TLB (Translation Look-aside Buffer)

- Advantages of Paging in OS

- Disadvantages of Paging in OS

- Summing Up

- Frequently Asked Questions

- Test your skills: Quiz Time

- What Is Starvation In OS?

- Starvation In OS: Common Causes

- What Is Deadlock?

- Difference Between Deadlock And Starvation in OS

- Solutions To Starvation In OS

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- Introduction to DOS: A brief history

- Introduction to Windows: A brief history

- Working of DOS

- Working of Windows

- Line of difference: DOS versus Windows

- Versions of DOS and Windows

- Summing Up

- Test Your Skills: Quiz Time

- Understanding File And Directory In OS

- The Directory Structure In OS

- Types Of Directory Structure In OS

- Directory Implementation In OS

- Conclusion

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- What is the purpose of CPU scheduling?

- What is preemptive scheduling?

- What is a non-preemptive scheduling method?

- Comparison between preemptive and non-preemptive scheduling

- Basic differences between the preemptive and non-preemptive scheduling techniques

- Advantages of preemptive scheduling -

- Disadvantages of preemptive scheduling

- Advantages of non-preemptive scheduling -

- Disadvantages of non-preemptive scheduling

- Test Your Skills: Quiz Time

- What Is Multiprogramming?

- Advantages of Multiprogramming Operating System

- Disadvantages of Multiprogramming Operating System

- What Is Multitasking?

- Advantages of Multitasking Operating System

- Disadvantages of Multitasking Operating System

- Multiprogramming Vs Multitasking

- Frequently Asked Questions

- Test Your Skills: Quiz Time

- Character User Interface

- Advantages of CUI

- Disadvantages of CUI

- Graphical User Interface

- Advantages of GUI

- Disadvantages of GUI

- Major Differences Between CUI and GUI

- Summing Up

- Test Your Skills: Quiz Time

Key Difference Between Mutex & Semaphore Explained With Examples

When dealing with concurrent programming, managing access to shared resources becomes crucial to ensuring data integrity and avoiding race conditions. Two of the most commonly used synchronization mechanisms to achieve this are mutexes and semaphores. While both serve to coordinate access to shared resources among multiple threads, they do so in different ways and are suited to different scenarios.

Understanding the difference between mutex and semaphore is vital for choosing the right tool for your application's synchronization needs. In this article, we will explore the key difference between mutex and semaphore, discussing their respective features, advantages, and drawbacks to help you make informed decisions in your multi-threaded programming projects.

History Of Mutex And Semaphore

The cause of the confusion where semaphores and mutexes are interchangeable is dated back to 1974 when Dijkstra invented the Semaphore. Earlier, there were no known task synchronization and signaling mechanisms that could be scaled for more than two tasks. So it was Dijkstra's revolutionary, safe-and-scalable Semaphore that was applied for critical section protection and as well as signaling. This is where the confusion began.

After the appearance of priority-based preemptive RTOS (e.g., VRTX, ca. 1980), it became clear to operating system developers that mutexes must be more than just semaphores with a binary counter. Unfortunately, numerous sources of knowledge, such as textbooks, user manuals, and wikis, contribute to the historical confusion by bringing the terms "binary semaphore" (for mutex) and 'counting semaphore' to the mix.

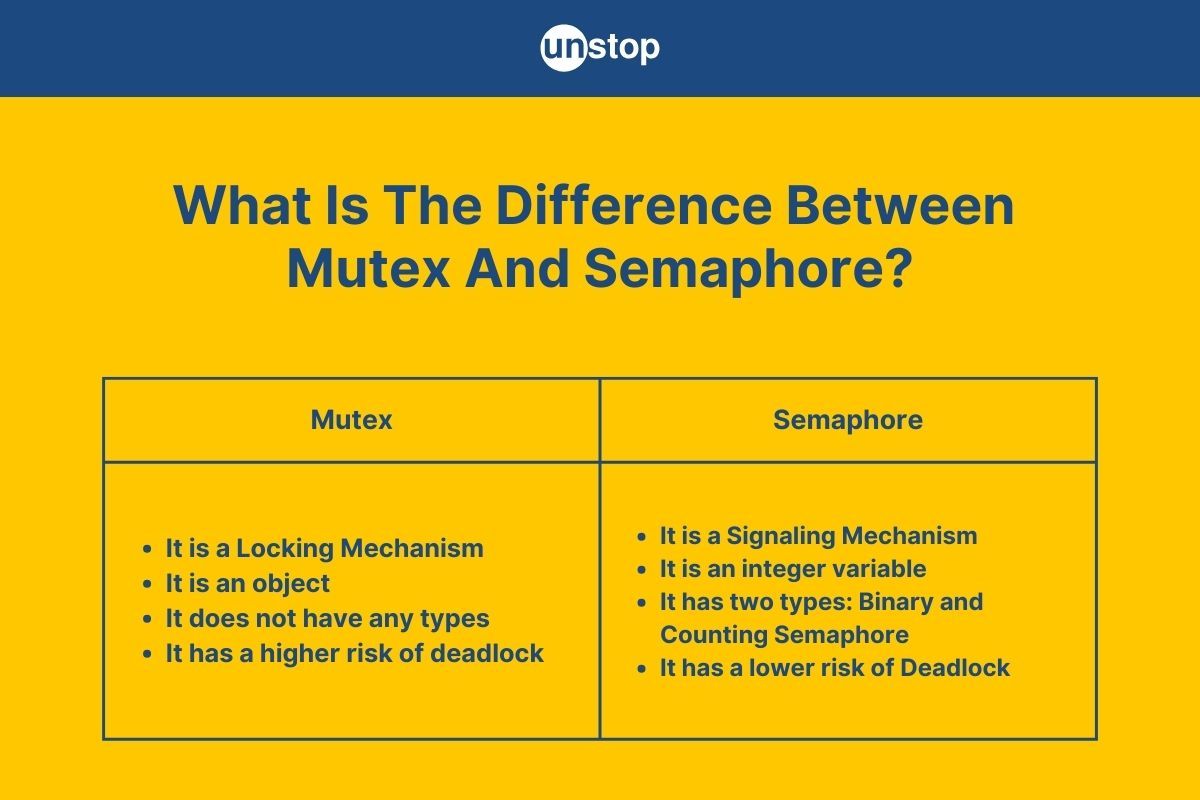

Difference Between Mutex And Semaphore

Here's a comparison table highlighting the important difference between mutex and semaphore:

| Feature | Mutex | Semaphore |

|---|---|---|

| Definition | A Mutex (Mutual Exclusion) is a locking mechanism that ensures only one thread can access a resource at a time. | A Semaphore is a signaling mechanism that controls access to a resource by multiple threads through a counter. |

| Usage | Used to protect shared resources that can be accessed by one thread at a time. | Used to control access to a pool of resources, allowing multiple threads to use a resource up to a certain limit. |

| Initialization | Typically initialized in a default (unlocked) state. | Initialized with a specific count representing the number of available resources. |

| Ownership | A thread that locks a mutex must unlock it; only the owner thread can release the mutex. | Any thread can signal (release) a semaphore, not necessarily the one that acquired it. |

| Value Range | Binary: Can only be locked (1) or unlocked (0). | Can have a non-negative integer value, representing the number of available resources. |

| Blocking Behavior | If a mutex is locked, other threads attempting to lock it will be blocked until it is unlocked. | If the semaphore count is zero, threads trying to decrement it will be blocked until it becomes positive. |

| Deadlock Possibility | Higher risk of deadlock if not managed properly, especially with multiple mutexes. | Lower risk of deadlock, though possible with incorrect usage (e.g., acquiring too many resources). |

| Priority Inversion | Susceptible to priority inversion problems, where a lower-priority thread holds a mutex needed by a higher-priority thread. | Priority inversion can also occur but is less common compared to mutexes. |

| Overhead | Slightly higher overhead due to ownership tracking. | Generally lower overhead due to simpler signaling mechanism. |

| Typical Use Case | Ensuring exclusive access to a single resource (e.g., a shared variable). | Controlling access to a finite number of identical resources (e.g., a pool of database connections). |

What Is A Mutex?

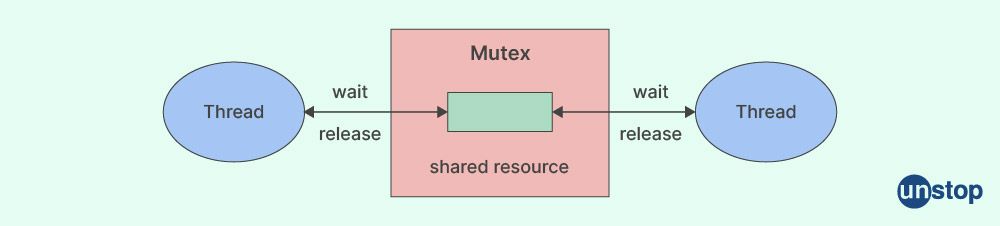

A mutex (short for mutual exclusion) is a synchronization primitive used to prevent multiple threads from simultaneously accessing a shared resource, ensuring that only one thread can enter the critical section at a time. It achieves this through a locking mechanism, making it a key tool for solving the critical section problem in concurrent programming.

When a thread needs to access a resource protected by a mutex, it must first acquire (or lock) the mutex. While the mutex is locked, other threads attempting to acquire it will be blocked until the mutex is released. Once the thread finishes its work with the shared resource, it releases (or unlocks) the mutex, allowing other threads to access the resource.

Key Concepts About Mutex:

- Lock-Based Mechanism: The mutex operates as a binary semaphore that controls access to a resource by locking and unlocking.

- Ownership: A critical feature of a mutex( mutual exclusion object) is that the thread that locks it must be the one to unlock it. This ensures controlled access and prevents accidental release by other threads.

- Priority: Mutexes are designed to keep higher-priority tasks blocked for the shortest time possible, reducing the risk of priority inversion and deadlock in some scenarios.

- Process Synchronization: By using a mutex, we achieve process synchronization, allowing threads to work with shared resources without interfering with each other.

Advantages Of Mutex:

- Data Consistency: Since only one thread can be in its critical section at a time, mutexes help prevent race conditions, ensuring data consistency.

- Simplicity: Mutexes have a simpler interface compared to semaphores, making them easier to use for basic mutual exclusion.

- Efficient Performance: When implemented properly, mutexes can be efficient, especially when using features like blocking instead of busy waiting, which reduces CPU usage.

Disadvantages Of Mutex:

- Potential CPU Wastage: If implemented with busy waiting (spinlocks), mutexes can lead to a waste of CPU cycles, as the thread continuously checks for the lock, leading to inefficient use of CPU time.

- Single Thread Access: Only one thread is allowed in the critical section at a time, which can become a bottleneck in some scenarios.

- Risk of Starvation: If a thread holding a mutex is preempted or put to sleep, other threads waiting for the mutex might experience starvation, especially if higher-priority threads continually acquire the mutex.

- Deadlock Possibility: If not managed carefully, mutexes can lead to deadlock situations, particularly in systems with multiple mutexes or complex locking sequences.

What Is A Semaphore?

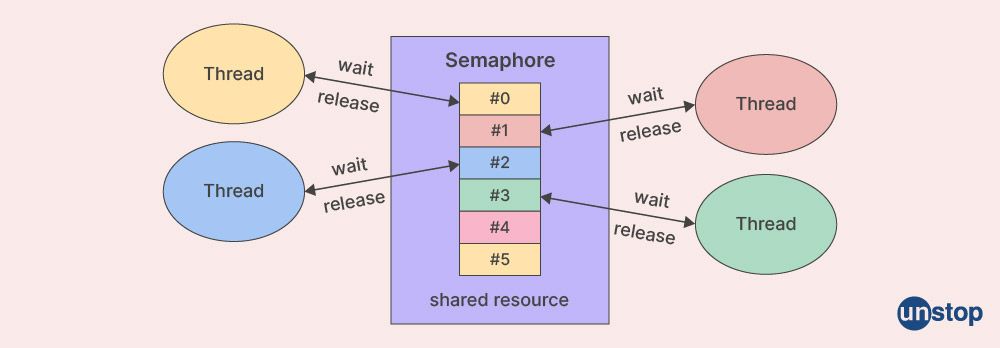

A semaphore is a synchronization primitive used to manage access to shared resources in a concurrent system. It is essentially a non-negative integer variable that is shared among threads or processes. The value of a semaphore represents the number of available resources or the extent to which a resource is available. Semaphores are used not only as process synchronization tools but also as signaling mechanisms to indicate whether a resource is being acquired or released.

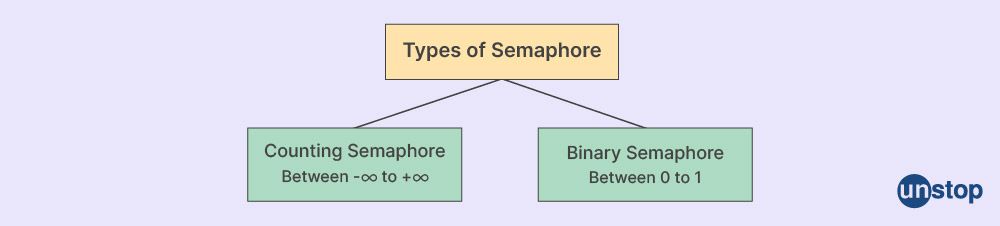

Types Of Semaphores:

There are two primary types of semaphores:

- Binary Semaphores: A binary semaphore can have only two values: 0 or 1. It is often used to achieve mutual exclusion, similar to a mutex lock. Binary semaphores are used for process synchronization where only one process can access a shared resource at a time.

- Counting Semaphores: A counting semaphore can have a range of values, typically starting from 0 up to a maximum value, which represents the number of available resources. Counting semaphores are ideal for managing access to a pool of identical resources, such as a fixed number of database connections or memory of entire buffers.

Key Concepts About Semaphores:

- Initialization: A semaphore is initialized with the number of available resources. For instance, if there are N identical resources available, the semaphore is initialized to N.

- Access Control: A semaphore restricts or grants access to resources based on its value. When a thread acquires a resource, the semaphore’s value is decremented. When a resource is released, the value is incremented.

- Atomic Operations: Semaphores rely on two key atomic signal operations:

- wait() (also known as P or decrement): Decreases the semaphore value. If the value becomes negative, the calling thread is blocked until the value is positive.

- signal() (also known as V or increment): Increases the semaphore value, potentially unblocking a waiting thread.

Advantages Of Semaphore:

- Multiple Thread Access: Semaphores allow more than one thread to access the critical section or resource, up to a specified limit, making them useful for managing access to a pool of resources.

- Machine-Independent: Semaphore operations are typically machine-independent, making them versatile across different hardware architectures.

- Flexible Resource Management: Semaphores are flexible and can be used to manage various resource access patterns, not just mutual exclusion.

- No Busy Waiting (in Proper Implementations): Properly implemented semaphores avoid busy waiting by blocking threads until the resource becomes available, leading to better resource utilization.

- Modularity in Microkernel Implementations: Semaphores can be effectively implemented within the microkernel, supporting modular and portable code.

Disadvantages Of Semaphore:

- Complexity: Semaphore usage is more complex than mutexes, leading to a higher likelihood of programming errors such as deadlocks or missed signals.

- Deadlock Risk: Incorrect signalling mechanisms of wait() and signal() operations can easily lead to deadlocks in semaphore.

- Loss of Modularity: Extensive use of semaphores can lead to loss of modularity, making the system harder to manage and maintain, especially in large-scale applications.

- Priority Inversion: Semaphores are more prone to priority inversion, where lower-priority threads hold resources needed by higher-priority threads, causing performance issues.

- Not Ideal for Mutual Exclusion: While semaphores can manage multiple resources, they are not inherently designed to solve the mutual exclusion problem for single resources, making them less suitable in scenarios where mutual exclusion is the primary goal.

Common Facts About Mutex And Semaphore

-

Mutex Ownership and Access: A mutex can only be acquired by one task at a time. It has ownership, meaning that only the thread that acquired (or locked) the mutex has the ability to release (or unlock) it. This ownership model is what distinguishes a mutex from a binary semaphore.

-

Distinct Purposes: Mutexes and semaphores serve different purposes. A mutex is specifically designed for mutual exclusion, ensuring that only one thread can access a critical section at a time. While a mutex can be thought of as a binary semaphore due to their similarities, they are used in different contexts. The main difference lies in the ownership and intent: a mutex is for mutual exclusion, whereas a binary semaphore can be used for signaling between tasks.

-

Thread Safety: Both mutexes and semaphores are essential tools in achieving thread safety. They help ensure data integrity when multiple threads access shared resources concurrently, preventing race conditions and other synchronization-related issues.

-

Misconceptions: A common misconception is that mutexes and semaphores are nearly identical, with the main difference being that a mutex can only count to 1, while a semaphore can count from 0 to N. However, this overlooks the fact that mutexes have ownership and are specifically designed for mutual exclusion, while semaphores (even binary semaphores) do not have ownership and can be used for more general signaling purposes.

-

Binary Semaphore vs. Mutex: There is often confusion between binary semaphores and mutexes. While they share similarities, especially in that both can be used to control access to a single resource, a mutex has ownership semantics, meaning that only the thread that locks it can unlock it. A binary semaphore, on the other hand, does not enforce this ownership.

-

Synchronization Algorithms: Many well-known synchronization algorithms, such as the Peterson's algorithm for mutual exclusion in two processes, are based on the principles of mutexes and semaphores. These algorithms demonstrate the foundational role that mutexes and semaphores play in ensuring correct synchronization in concurrent programming.

Conclusion

Both mutexes and semaphores are crucial synchronization mechanisms in concurrent programming, serving different purposes based on the nature of the problem at hand. Mutexes are suited for scenarios where exclusive access to a resource is required, while semaphores shine when there is a need to control access to resources with a limited capacity.

Understanding the difference between mutex and semaphore empowers developers to design robust and efficient multi-threaded or multi-process applications while avoiding common pitfalls related to race conditions and data inconsistency.

Frequently Asked Questions

Q. Can I use a semaphore when I only need exclusive access to a resource?

No, semaphores are not specifically designed for exclusive access. While a binary semaphore can be used in a way that mimics exclusive access, it’s not ideal. Mutexes are the proper tool for ensuring exclusive access, as they are designed to prevent multiple threads from accessing a resource simultaneously, with built-in ownership semantics.

Q. Are there cases where a mutex and a semaphore could be used interchangeably?

In general, mutexes and semaphores serve different purposes. While there are scenarios where a binary semaphore might seem interchangeable with a mutex, the inherent differences in ownership, usage intent, and behavior make it important to choose the synchronization primitive that best fits your application’s requirements.

Q. Can semaphores guarantee fairness among competing threads?

Semaphores do not inherently guarantee fairness among competing threads. Some implementations may offer fairness mechanisms, like first-come, first-served (FCFS) ordering, but this is not guaranteed across all systems. If fairness is critical, you should evaluate the specific semaphore implementation or consider synchronization techniques that explicitly support fairness, such as certain types of locks.

Q. Can I prevent all deadlocks by using mutexes or semaphores correctly?

No, while mutexes and semaphores help prevent race conditions and manage resource access, they do not inherently prevent deadlocks. Deadlocks can still occur if resources are not managed carefully. Techniques like proper resource ordering, timeout mechanisms, and deadlock detection are necessary to minimize the risk of deadlocks.

Q. Can I use semaphores with an initial count of zero?

Yes, semaphores can be initialized with an initial count of zero. This setup is useful for synchronization, as it blocks until another thread or process increments the semaphore, ensuring that certain operations occur in a specific order.

Q. Can a different thread release a mutex than the one that acquired it?

No, mutexes have an ownership concept, meaning that the thread that locks a mutex is responsible for unlocking it. Releasing a mutex from a different thread violates this ownership model and can lead to synchronization issues and unexpected behavior. However, in the case of a recursive mutex, the same thread can lock it multiple times.

Q. Can I use mutexes and semaphores in distributed systems?

Yes, mutexes and semaphores can be used in distributed systems, but their usage is more complex. Challenges like network latency, communication failures, and ensuring consistency across multiple nodes must be carefully addressed when applying these synchronization mechanisms in a distributed environment.

Q. What other synchronization mechanisms can I consider apart from mutexes and semaphores?

In addition to mutexes and semaphores, other synchronization mechanisms include condition semaphore variables, barriers, spinlocks, and read-write locks. The choice of synchronization primitive depends on the specific synchronization service patterns, resource access requirements, and performance considerations of your application.

Test Your Skills: Quiz Time

With this, we come to the end of the article. You might also be interested in reading:

As a biotechnologist-turned-writer, I love turning complex ideas into meaningful stories that inform and inspire. Outside of writing, I enjoy cooking, reading, and travelling, each giving me fresh perspectives and inspiration for my work.

Login to continue reading

And access exclusive content, personalized recommendations, and career-boosting opportunities.

Subscribe

to our newsletter

Comments

Add comment