Multithreading In Operating Systems - Types, Pros, Cons And More

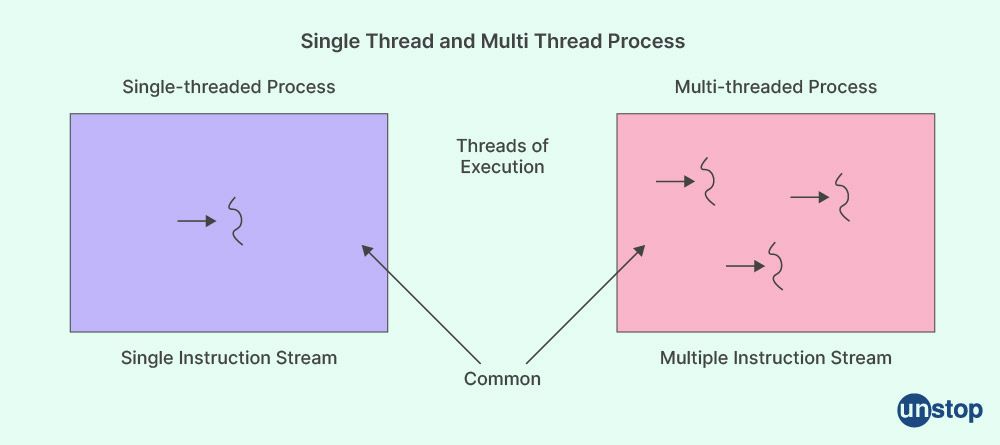

Multithreading in an OS allows a task to be broken into multiple threads. In simple terms, a thread is a lightweight process that consumes fewer resources than a full process. It is a flow of execution through the process code with its own program counter to track which instruction to execute next, its own registers to hold its current working variables, and its own stack to record its execution history. A process can be viewed as a collection of one or more threads.

Each thread belongs to exactly one process, and no thread exists outside a process. Each thread represents a separate flow of control. Threads are a good basis for running programs in parallel on shared-memory multiprocessors, and they are used to improve application performance through parallelism.

Threads are also a software approach to improving operating system performance by reducing overhead: creating and switching between threads costs less than doing the same with processes. In most other respects, a thread behaves like a traditional process.

Types of threads per process

Based on the number of threads, there are two kinds of process:

- Single-thread process: only one thread in the entire process.

- Multi-thread process: multiple threads within the process.

In both single-threaded and multithreaded processes, threads come in two kinds, depending on the level at which they are managed:

- User-level threads: threads are managed at the user level. The kernel is unaware of them, and they are scheduled by a thread library running in user mode.

- Kernel-level threads: threads are managed at the kernel level. They are known to the kernel and are scheduled by the operating system in kernel mode.

Introducing Thread Models

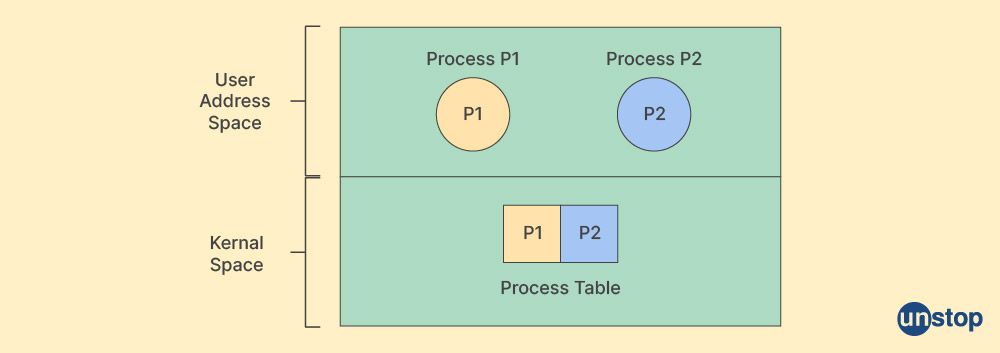

1. User-level single-thread process model

Each process contains a single thread, so the process itself is the only thread of execution. Every process has an entry in the process table, which holds its PCB (Process Control Block). This model is managed at the application level, and because there is only one thread of execution, the application cannot take advantage of multiprocessing.

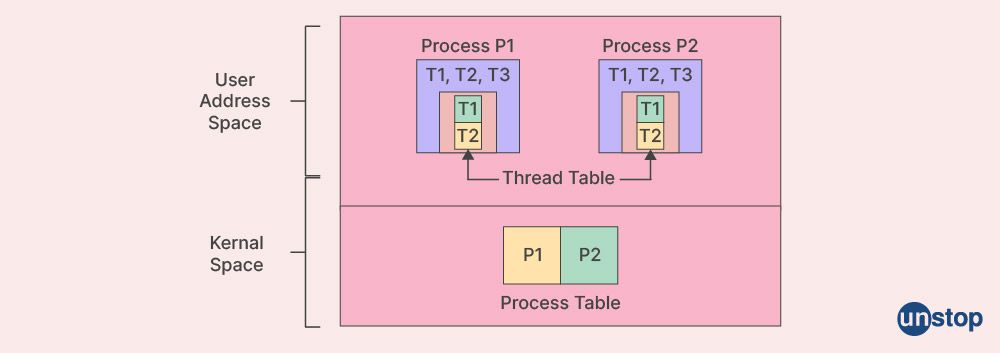

2. User-level multi-thread process model

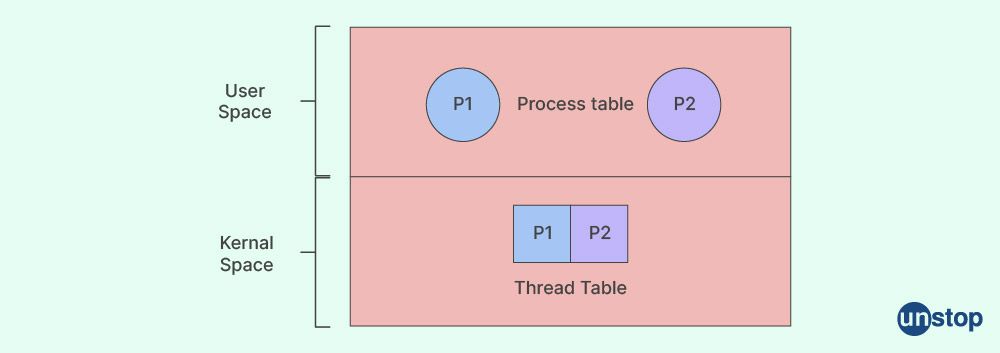

Each process contains several user-level threads, managed by a thread library. The thread library schedules all of the process's threads at the user level, so switching between threads happens within the process without involving the operating system. If one thread blocks, the whole process is blocked. The TCB (Thread Control Block) of each thread in a process is kept in a thread table. The kernel is unaware of this thread scheduling, which occurs entirely within the process.
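As a rough illustration of scheduling done entirely in user space, the sketch below uses Python generators as stand-ins for user-level threads and a small round-robin loop as the "thread library". The names `user_thread` and `run_scheduler` are hypothetical, and real thread libraries are far more involved; the point is only that the kernel never sees the individual threads.

```python
from collections import deque

def user_thread(name, steps, trace):
    """A stand-in for a user-level thread: does one step, then yields."""
    for i in range(steps):
        trace.append(f"{name}:{i}")
        yield  # voluntarily hand control back to the scheduler

def run_scheduler(threads):
    """Round-robin scheduler; the deque plays the role of the thread table."""
    ready = deque(threads)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run the thread until its next yield
            ready.append(current)  # still runnable: back of the queue
        except StopIteration:
            pass                   # thread finished: drop it from the table

trace = []
run_scheduler([user_thread("A", 2, trace), user_thread("B", 3, trace)])
print(trace)
```

If any of these stand-in threads made a blocking call instead of yielding, the scheduler loop itself would stall, which mirrors the drawback that one blocked user-level thread blocks the whole process.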

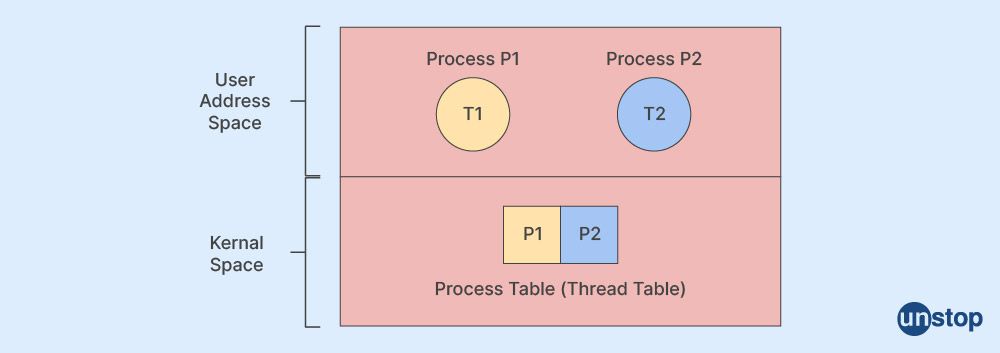

3. Kernel-level single-thread process model

Every process has a single kernel-level thread. Because there is only one thread per process, the process table can also serve as the thread table.

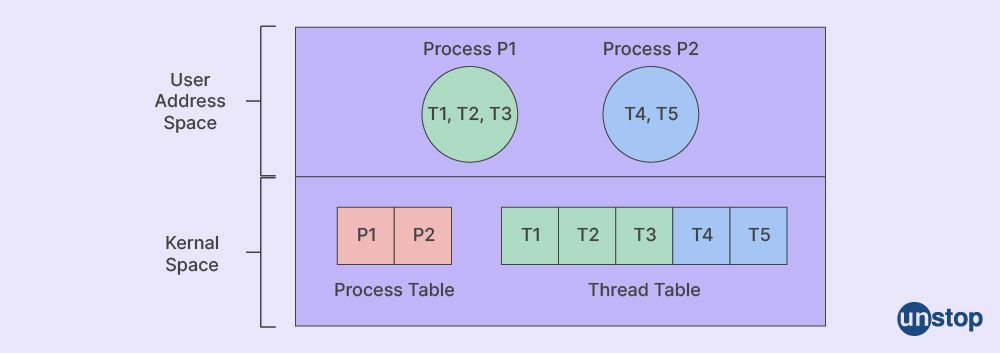

4. Kernel-level multi-thread process model

Thread scheduling is carried out at the kernel level, and fine-grained scheduling is done thread by thread. If a thread is blocked, another thread can be scheduled without the entire process being halted. Thread scheduling at the kernel level is slower than thread scheduling at the user level.

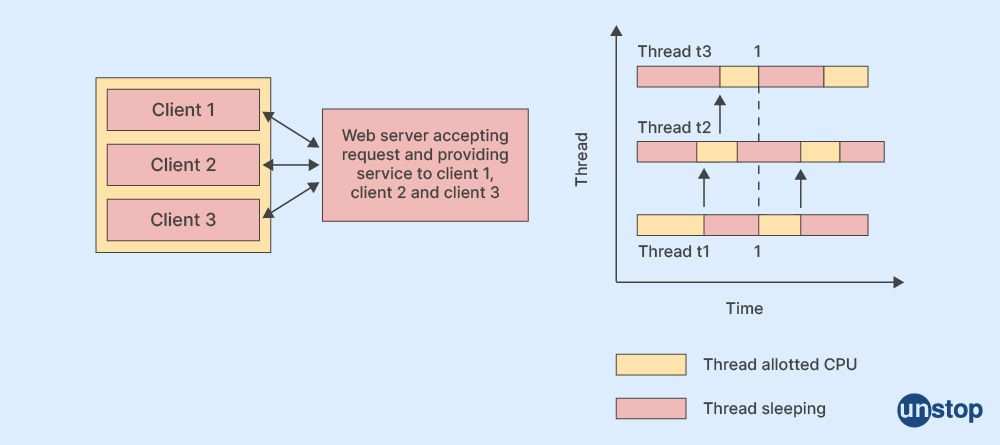

What is Multithreading?

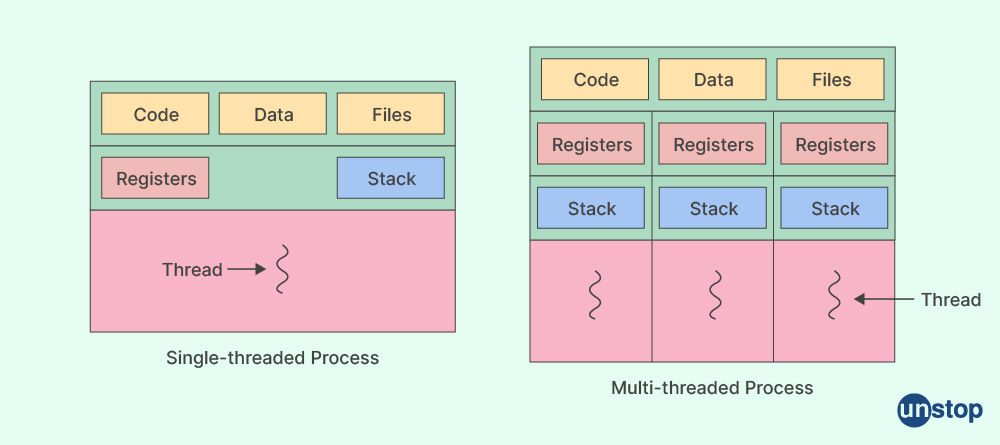

Multithreading refers to an operating system's ability to support multiple threads of execution within a single process. Threads separate two concerns: resource ownership (address space, open files, and I/O), which stays with the process, and execution (TCB and scheduling), which belongs to each thread. All threads inside a process share resources such as code, data, files, and memory space with their peer threads, but stacks and registers are not shared: each new thread has its own stack and registers.

What is important to note here is that requests from one thread do not block requests from other threads, which improves application responsiveness. Multithreading also reduces the computing resources an application needs and uses them more efficiently. A system can execute multiple threads in two ways: concurrently or in parallel.

Concurrent execution is when a single processor switches its execution resources between the threads of a multithreaded process. Parallel execution is when each thread of a multithreaded program runs on a separate processor at the same time.
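The responsiveness point can be sketched with Python's standard `threading` module. In this illustrative example (the function names and the 0.3 second delay are assumptions chosen for the demo), one thread blocks on a simulated slow request while a second thread completes in the meantime.

```python
import threading
import time

timeline = []
timeline_lock = threading.Lock()
slow_started = threading.Event()

def record(message):
    with timeline_lock:
        timeline.append(message)

def slow_request():
    record("slow: started")
    slow_started.set()
    time.sleep(0.3)  # stands in for a blocking I/O call
    record("slow: finished")

def quick_request():
    record("quick: finished")

slow = threading.Thread(target=slow_request)
quick = threading.Thread(target=quick_request)

slow.start()
slow_started.wait()  # make sure the slow thread is already blocked
quick.start()
quick.join()
slow.join()
print(timeline)
```

The quick request is recorded before the slow one finishes, because the blocked thread does not hold up its peer.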

The threads of the same process share the following items during multithreading:

- Address space

- Global variables

- Accounting information

- Opened files, used memory and I/O

- Child processes

- Pending alarms

- Signal and signal handlers

The following items are private to each individual thread of a process (not shared):

- Stack (parameters, temporary variables, return address, etc.)

- TCB (Thread Control Block): It contains the thread ID, CPU state information (user-visible, control and status registers, stack pointers), and scheduling information (thread state, priority, etc.)
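The split between shared and private state can be seen in a small Python sketch. Here `shared_log` is a global that every thread can reach, while `local_total` is a local variable that lives on each thread's own stack. The names are made up for this example.

```python
import threading

shared_log = []               # global: visible to every thread in the process
log_lock = threading.Lock()   # guards the shared list

def worker(thread_no):
    local_total = 0           # local: private to this thread's stack
    for i in range(1000):
        local_total += i
    with log_lock:
        shared_log.append((thread_no, local_total))

def run_workers(count=4):
    shared_log.clear()
    threads = [threading.Thread(target=worker, args=(n,)) for n in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(shared_log)

results = run_workers()
print(results)
```

Each thread computes its own total without interfering with the others, yet all of them write into the same global list.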

Advantages of Multithreading

- Improves execution speed (by overlapping CPU time with I/O wait times).

- Multithreading can be used to achieve concurrency.

- Reduces the amount of time it takes for context switching.

- Improves responsiveness.

- Makes asynchronous processing possible (separates the execution of independent threads).

- Increases throughput.

- Performs foreground and background work in parallel.
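A minimal sketch of the speed-up from overlapping wait times, assuming the work is I/O-bound. The `fake_io` function is a placeholder that simply sleeps for 0.2 seconds; it is not a real I/O call, and actual gains depend on the workload.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_io(task_id):
    time.sleep(0.2)  # simulated I/O wait (assumption for the demo)
    return task_id * 2

def run_sequential(n):
    start = time.perf_counter()
    results = [fake_io(i) for i in range(n)]
    return results, time.perf_counter() - start

def run_threaded(n):
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n) as pool:
        results = list(pool.map(fake_io, range(n)))
    return results, time.perf_counter() - start

seq_results, seq_time = run_sequential(4)
thr_results, thr_time = run_threaded(4)
print(f"sequential: {seq_time:.2f}s, threaded: {thr_time:.2f}s")
```

Both versions return the same results, but the threaded one waits on all four tasks at once instead of one after another. In the default CPython build, threads interleave rather than run Python bytecode in parallel, so this benefit applies mainly to waiting, not to CPU-heavy work.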

Disadvantages of Multithreading

- Because the threads share the same address space and may access resources such as open files, difficulties might arise in managing threads if they utilize incompatible data structures.

- If we don't optimize the number of threads, instead of gaining performance, we may lose performance.

- If a parent process requires several threads to function properly, the child processes should be multithreaded as well, as they may all be required.
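Because threads share data, updates to that data usually need to be synchronized. The sketch below shows one common approach, a lock around a shared counter. The `Counter` class is a hypothetical example, not part of any library.

```python
import threading

class Counter:
    """A shared counter whose updates are protected by a lock."""
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:       # only one thread may update at a time
            self.value += 1

def hammer(counter, times):
    for _ in range(times):
        counter.increment()

def run(num_threads=8, times=10_000):
    counter = Counter()
    threads = [threading.Thread(target=hammer, args=(counter, times))
               for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value

total = run()
print(total)
```

Without the lock, two threads could read the same old value and both write back the same new one, losing an update. The lock costs some performance, which is one reason adding more threads does not always make a program faster.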

Process image vs. multithreaded process image: a process image contains the PCB, stack, data, and code segments. A multithreaded process image contains the PCB of the parent process, and each individual thread has its own TCB and stack, while the address space (data and code) is shared by all threads.

Multithreading Models

There are three multithreading models:

- Many-to-many model

- Many-to-one model

- One-to-one model

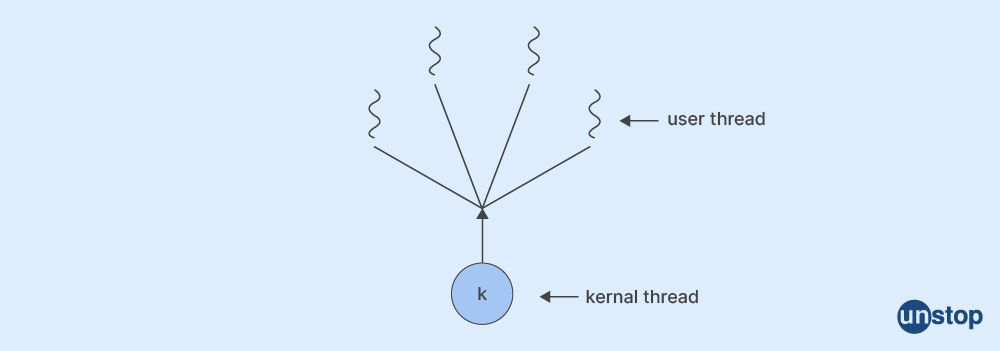

1. Many-to-One: As the name implies, multiple user threads are mapped to a single kernel thread. Thread management is done in user space, which makes it efficient. The model maps a large number of user-level threads onto a single kernel-level thread.

The following issues arise in the many-to-one model:

- A blocking call in one user-level thread stops all other threads from running.

- Multi-core architectures are used inefficiently.

- There is no true concurrency.

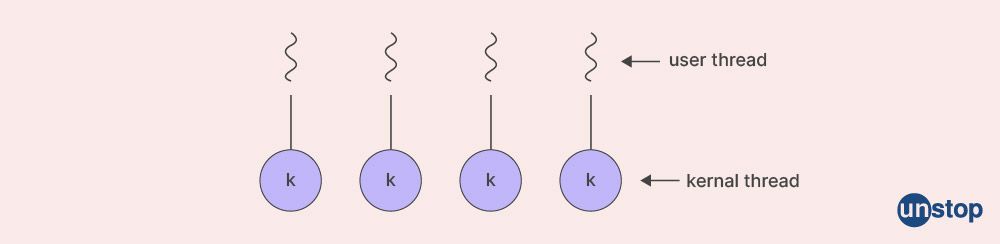

2. One-to-One: As the name suggests, each user thread is mapped to a separate kernel thread, so user-level and kernel-level threads have a one-to-one relationship. This model provides more concurrency than the many-to-one model: when one thread makes a blocking system call, another thread can still run.

It provides the following benefits over the many-to-one model:

- A blocking call in one thread does not block any other thread.

- True concurrency.

- Efficient use of multi-core systems.
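One way to observe a one-to-one mapping in practice: in CPython, each `threading.Thread` is backed by its own operating system thread, and `threading.get_native_id()` (Python 3.8+, on platforms that support it) reports the kernel-assigned ID. The helper name `collect_native_ids` below is made up for this sketch.

```python
import threading

def collect_native_ids(count=4):
    """Start `count` threads and record the kernel thread ID of each."""
    ids = []
    ids_lock = threading.Lock()
    barrier = threading.Barrier(count)  # keeps all threads alive at once

    def worker():
        with ids_lock:
            ids.append(threading.get_native_id())
        barrier.wait()  # wait until every thread has recorded its ID

    threads = [threading.Thread(target=worker) for _ in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return ids

native_ids = collect_native_ids()
print(native_ids)
```

The barrier matters because the operating system may reuse an ID once a thread has exited; holding all threads alive together means each one must have a distinct kernel thread.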

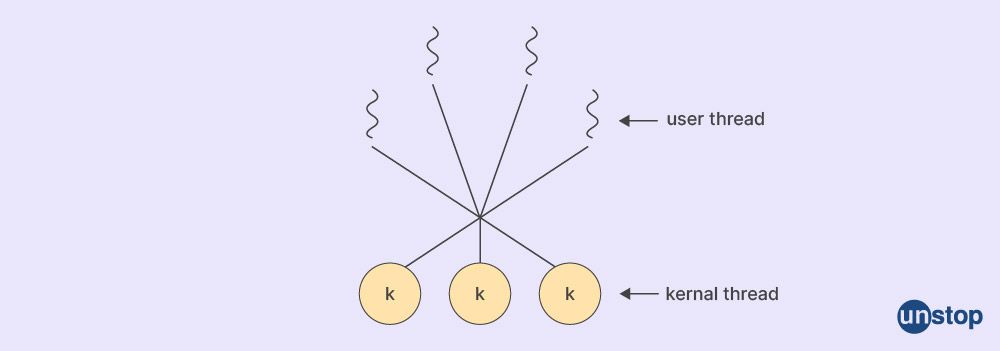

3. Many-to-Many: As the name suggests, many user threads are mapped to a smaller or equal number of kernel threads. A variant called the two-level model combines many-to-many and one-to-one mappings. When a thread makes a blocking system call, the kernel can schedule another thread for execution.

The number of kernel threads depends on the particular application or machine. The many-to-many model multiplexes any number of user threads onto an equal or smaller number of kernel threads. On multiprocessor architectures, developers can create as many user threads as they need, and the associated kernel threads can execute in parallel.

For these reasons, this is considered the best model for mapping user threads to kernel threads in a multithreading system.

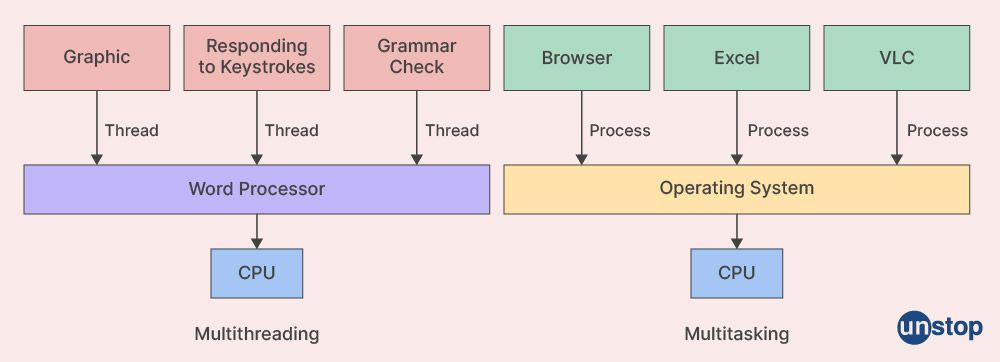

Multithreading Vs. Multitasking

- Terminating a thread in multithreading takes less time than terminating a process in multitasking.

- As opposed to switching between processes, switching between threads takes less time.

- Multithreading allows threads in the same process to share memory, I/O, and file resources, allowing threads to communicate at the user level.

- Threads are lightweight compared to processes.

- Web-client browsers, network servers, bank servers, word processors, and spreadsheets are examples of multithreading programs.

- Multithreading is generally faster than multitasking, because threads are cheaper to create, switch between, and terminate than processes.

- Creating a thread in multithreading requires less time than creating a process in multitasking.

- In some runtimes (for example, Python's multiprocessing module), sending an object to another process requires serializing (pickling) it, whereas threads avoid this because they share memory.
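The last point can be illustrated in Python. The sketch below does not start a second process; it only imitates the serialize-and-rebuild step with `pickle` and contrasts it with handing the same object to a thread. The `record` dictionary is sample data invented for the example.

```python
import pickle
import threading

record = {"user": "alice", "scores": [1, 2, 3]}

# Crossing a process boundary: the object is serialized and a copy is rebuilt.
copy_for_other_process = pickle.loads(pickle.dumps(record))

seen_by_thread = []

def worker(obj):
    seen_by_thread.append(obj)   # the very same object, no copy made
    obj["scores"].append(4)      # so this change is visible to the caller

t = threading.Thread(target=worker, args=(record,))
t.start()
t.join()

print(record["scores"])                  # modified by the thread
print(copy_for_other_process["scores"])  # the pickled copy is unaffected
```

The thread sees and modifies the original object directly, while the pickled copy is independent. Sharing avoids the cost of copying, but it is also why threads need synchronization when they update the same data.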

Difference between Process, Kernel Thread and User Thread

| Process | Kernel Thread | User Thread |
| --- | --- | --- |
| Processes do not share an address space with one another. | Threads share the address space of their process. | Threads share the address space of their process. |
| Blocking one process has no effect on the others. | Blocking one thread does not affect the other threads of the process. | When one thread is blocked, the entire process is blocked. |
| In a multiprocessor system, each process can run on a distinct processor to achieve parallelism. | In a multiprocessor system, each thread can run on a distinct processor to achieve parallelism, and the kernel manages all threads in a process. | All threads of a process run on a single processor, and only one thread is active at any given moment, because the threads are managed within the process and are invisible to the kernel. |
| A process has high overhead. | Kernel threads have moderate overhead. | User threads have minimal overhead. |
| A process is heavyweight. | A kernel thread is lightweight compared with a process. | A user thread is a lightweight unit of execution that belongs to a process. |
| The operating system schedules processes using a process table. | The OS uses a thread table to schedule threads. | The thread library uses a thread table to schedule threads. |
| A process can be suspended without affecting other processes. | Suspending a process stops all of its threads from executing. | Suspending a process stops all of its threads, since user threads cannot run independently of their process. |
| OS support is required for efficient communication between processes. | OS support is required for thread communication. | Threads communicate with each other at the user level (no OS support required). |

Conclusion

Multithreading allows multiple threads to execute within a single process. Breaking complicated operations into smaller, more manageable units of execution promotes better code organization and modularity. Using several threads improves resource utilization, responsiveness, and efficiency, because threads share the resources of their process rather than each needing its own. Threads can execute concurrently, and in parallel on systems with multiple processors.

Despite its drawbacks, multithreading makes the most of the available CPU resources. With kernel-level threads, if one thread of a process is blocked, the kernel can schedule another thread. This improves the responsiveness of the program and the user's experience of it.

FAQs

1. What is meant by thread creation?

The process of starting a new thread of execution within a program is referred to as thread creation. Threads are autonomous execution paths that can coexist with other threads in the same program. Thread creation is feasible in several computer languages, including Java and C++.

In Java, you can construct threads by either extending the Thread class or by implementing the Runnable interface.

2. What is meant by Kernel Space?

The memory space reserved for the kernel, the central component of the operating system, is known as kernel space. It is where the kernel runs and where kernel-mode instructions are executed. The kernel itself, kernel extensions, and most device drivers run in kernel space, which is a protected region of memory. Virtual memory is divided into user space and kernel space to provide memory protection and hardware protection against malicious or errant program behavior.

3. Tell me about the multiprocessor machine.

A computer system with two or more central processing units (CPUs) that share a common main memory and peripherals is referred to as a multiprocessor. This facilitates the processing of several programs at once. The primary goal of employing a multiprocessor is to increase the system's processing performance, with fault tolerance and application matching as secondary goals.

4. What is the full form of POSIX standard?

POSIX stands for Portable Operating System Interface. It is a family of standards specified by the IEEE to maintain compatibility between operating systems. A program written to the POSIX standards should therefore be portable to other POSIX-compliant operating systems.

5. What is multi-thread?

In a multithreaded program, two or more threads of instructions run independently while sharing the resources of the same process. A thread is an independent sequence of instructions that can run alongside other threads of the same process. Multithreading lets a program perform several tasks at once, which can improve speed.

6. Describe thread management.

The actions and methods used to start, stop, coordinate, and manage threads inside a multithreaded program or operating system are referred to as thread management. It entails controlling thread lifecycles and making sure they are executed effectively. Thread creation, thread termination, and thread join are all part of thread management.
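The basic lifecycle (create, start, join) can be sketched with Python's `threading` module, which offers two styles similar to the two Java approaches mentioned earlier: subclassing `Thread`, or passing a target function. The `Greeter` class and `greet` function are hypothetical names for this illustration.

```python
import threading

class Greeter(threading.Thread):
    """Style 1: subclass Thread and override run()."""
    def __init__(self, name_to_greet, out):
        super().__init__()
        self.name_to_greet = name_to_greet
        self.out = out

    def run(self):
        self.out.append(f"hello {self.name_to_greet}")

def greet(name_to_greet, out):
    """Style 2: a plain function passed as the thread's target."""
    out.append(f"hello {name_to_greet}")

messages = []
t1 = Greeter("from subclass", messages)                               # creation
t2 = threading.Thread(target=greet, args=("from target", messages))

t1.start()   # the thread begins executing run()
t2.start()
t1.join()    # wait for the thread to terminate
t2.join()

alive_after_join = (t1.is_alive(), t2.is_alive())
print(sorted(messages), alive_after_join)
```

After `join()` returns, each thread has terminated, so `is_alive()` reports `False` for both.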

7. What is thread cancellation?

Thread cancellation is a mechanism in a multithreaded program that lets one thread stop the execution of another (the target thread). It is useful for controlling thread lifecycles. Cancellation must be used cautiously: a thread cancelled while it holds a lock or is midway through updating shared data can leave that data inconsistent or cause a deadlock.
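Python's `threading` module has no call for forcibly stopping a thread, so the sketch below shows a cooperative form of cancellation instead: the target thread checks a shared `threading.Event` at safe points and exits on its own. The `worker` function and the timings are assumptions made for the demo.

```python
import threading
import time

def worker(cancel, progress):
    """Keep working until asked to stop, then clean up and exit."""
    while not cancel.is_set():
        progress.append(len(progress))  # one unit of work
        cancel.wait(0.01)               # pause, but wake early if cancelled
    progress.append("cleaned up")       # runs because the exit is orderly

cancel = threading.Event()
progress = []
target = threading.Thread(target=worker, args=(cancel, progress))

target.start()
time.sleep(0.05)        # let the target do some work
cancel.set()            # request cancellation from the main thread
target.join(timeout=1.0)

print(progress[-1], target.is_alive())
```

Because the target decides when to stop, it can release locks and finish its cleanup first, which avoids the inconsistent-state risks of abrupt cancellation.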

Hope you found this article useful. Happy Learning!

As a biotechnologist-turned-writer, I love turning complex ideas into meaningful stories that inform and inspire. Outside of writing, I enjoy cooking, reading, and travelling, each giving me fresh perspectives and inspiration for my work.
