Learn About Asymptotic Notations (+ Graphs & Real-Life Examples)

In the world of algorithms, efficiency is a crucial factor in determining performance. Asymptotic notation provides a mathematical framework to analyze the time and space complexity of algorithms, helping us understand how they behave as input size grows. By using notations like Big-O, Omega (Ω), and Theta (Θ), we can compare different algorithms and choose the most suitable one for a given problem.

In this article, we will explore the different types of asymptotic notation, their significance, and how they are used to evaluate algorithmic efficiency. Let’s get started!

What Is Asymptotic Notation?

Asymptotic notation is a mathematical tool used to describe the efficiency of an algorithm as the input size approaches infinity. It provides a way to express the growth rate of an algorithm's runtime or space requirement.

Purpose Of Asymptotic Notation

The primary goal of asymptotic notation is to focus on the dominant term in the runtime function, ignoring constant factors and lower-order terms. This helps in:

- Comparing algorithms efficiently – Instead of calculating the exact runtime, we can compare algorithms based on how their runtimes grow.

- Predicting performance for large inputs – As input size increases, minor details become negligible, and the overall growth rate becomes more important.

- Providing a hardware-independent measure – Since it abstracts machine-dependent factors, it allows fair comparisons of algorithms across different systems.

How Asymptotic Notation Helps In Analyzing Performance

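When analyzing an algorithm, we are generally concerned with how the runtime increases as the input size (n) grows. Asymptotic notation simplifies this analysis by expressing the runtime as a bound on its growth rate:

- Lower bound (Ω notation) – The runtime grows at least this fast. It is often quoted for the best case, the minimum time an algorithm takes.

- Upper bound (O notation) – The runtime grows at most this fast. It is often quoted for the worst case, the maximum time an algorithm can take.

- Tight bound (Θ notation) – The upper and lower bounds match, so the growth rate is pinned down exactly. It can describe any single case (best, worst, or average) whose growth rate is known precisely.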

For instance, consider two sorting algorithms:

- Bubble Sort – Takes O(n²) time.

- Merge Sort – Takes O(n log n) time.

For small inputs, the difference may not be significant. However, for large inputs, Merge Sort is significantly faster due to its lower growth rate.

Thus, asymptotic notation helps in choosing the right algorithm by providing a clear idea of how the algorithm behaves as n becomes large.
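To make the gap concrete, here is a minimal sketch that tabulates n² against n·log₂ n for a few input sizes. The function name `growth_table` is hypothetical, and the values are idealised step counts rather than measured runtimes.

```python
import math

def growth_table(sizes):
    """Return (n, n^2, n*log2(n)) rows for the given input sizes."""
    rows = []
    for n in sizes:
        quadratic = n * n                      # Bubble Sort-style growth
        log_linear = round(n * math.log2(n))   # Merge Sort-style growth
        rows.append((n, quadratic, log_linear))
    return rows

for n, quadratic, log_linear in growth_table([10, 1_000, 1_000_000]):
    print(f"n={n:>9,}  n^2={quadratic:>17,}  n*log2(n)={log_linear:>12,}")
```

At n = 10 the two columns are within a small factor of each other; at n = 1,000,000 they differ by several orders of magnitude.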

Exact Runtime Analysis Vs. Asymptotic Analysis

| Feature | Exact Runtime Analysis | Asymptotic Analysis |
| --- | --- | --- |
| Definition | Determines the precise time taken by an algorithm for a given input size. | Describes the upper, lower, or tight bounds on growth rate. |
| Focus | Machine-dependent execution time. | Growth rate as n → ∞. |
| Example | "This sorting algorithm takes 50 ms for n = 1000." | "This sorting algorithm runs in O(n log n) time." |
| Considerations | Includes constant factors, CPU speed, and system performance. | Ignores constants and lower-order terms. |
| Use Case | Used when actual performance measurement is needed. | Used to compare algorithms theoretically. |

Example:

Imagine an algorithm with an exact runtime function:

T(n) = 5n² + 3n + 7

- Exact runtime analysis would evaluate this function precisely for different values of n.

- Asymptotic analysis simplifies it by focusing on the highest order term, making it O(n²).

This simplification makes it easier to compare with other algorithms, ignoring unimportant details.
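As a rough illustration of why the lower-order terms can be dropped, the sketch below evaluates the example function and reports how much of the total comes from the 5n² term alone. The helper names `exact_runtime` and `dominant_share` are made up for this example.

```python
def exact_runtime(n):
    """The example cost function T(n) = 5n^2 + 3n + 7."""
    return 5 * n**2 + 3 * n + 7

def dominant_share(n):
    """Fraction of T(n) contributed by the 5n^2 term alone."""
    return (5 * n**2) / exact_runtime(n)

for n in (1, 10, 100, 10_000):
    print(n, exact_runtime(n), round(dominant_share(n), 4))
```

The share climbs toward 1 as n grows, which is the sense in which 3n + 7 becomes negligible.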

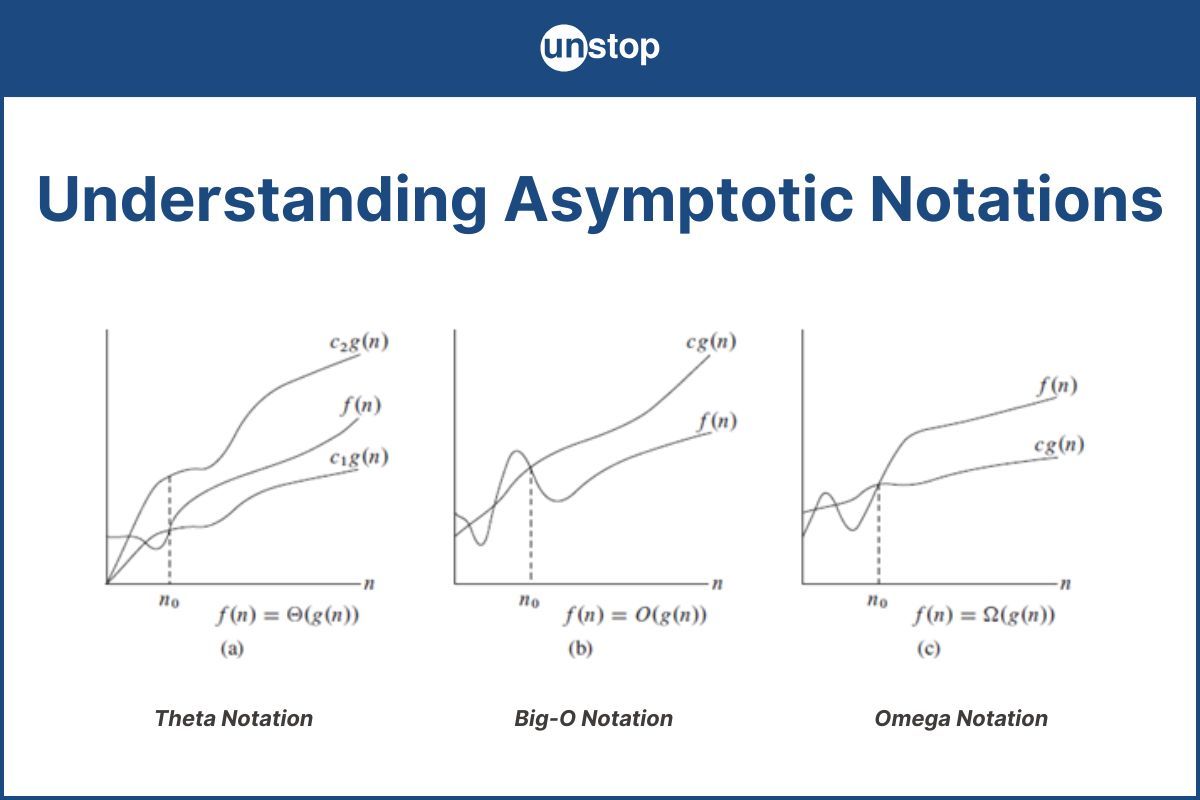

Types Of Asymptotic Notation

Asymptotic notation is used to describe the behavior of algorithms as their input size grows. There are several types of asymptotic notations, each serving a different purpose in analyzing an algorithm's performance. The main types of asymptotic notations are:

- Big-O Notation (O)

- Omega Notation (Ω)

- Theta Notation (Θ)

- Little-O Notation (o)

- Little-Omega Notation (ω)

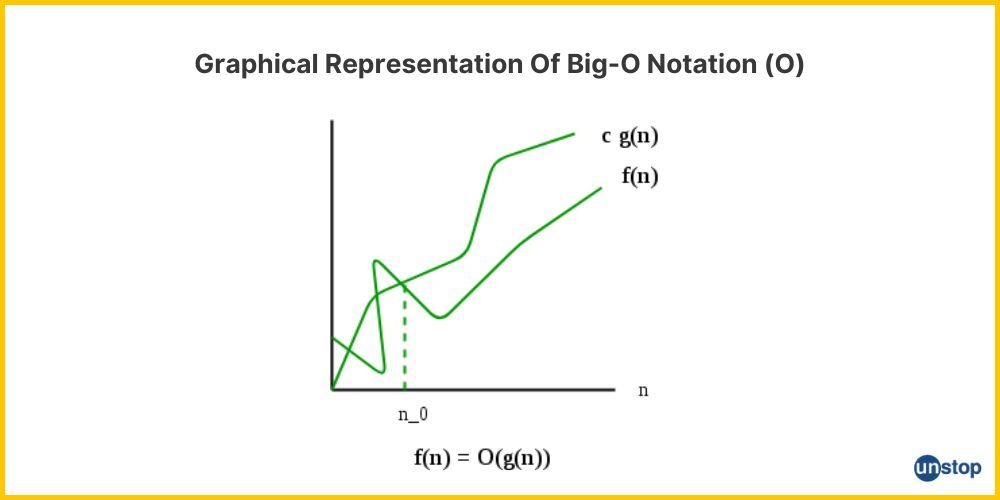

Big-O Notation (O)

Big-O notation (O) describes the upper bound of an algorithm’s growth rate. It is most often applied to the worst-case runtime, where it guarantees that the runtime grows no faster than a certain rate as input size n increases.

Mathematically, an algorithm is O(f(n)) if there exist positive constants c and n₀ such that:

T(n) ≤ c⋅f(n), for all n ≥ n₀

This means that for sufficiently large inputs, the algorithm’s runtime does not grow faster than f(n), up to a constant factor c.
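The definition can be tried out numerically. The sketch below takes the earlier example T(n) = 5n² + 3n + 7 with f(n) = n² and checks a hand-picked pair of constants, c = 6 and n₀ = 5, over a finite range. The function `holds_upper_bound` is a hypothetical helper, and a finite check like this only illustrates the inequality; it is not a proof.

```python
def T(n):
    """Example runtime function from the text."""
    return 5 * n**2 + 3 * n + 7

def f(n):
    """Candidate bounding function."""
    return n**2

def holds_upper_bound(c, n0, limit=10_000):
    """Check T(n) <= c * f(n) for every n in [n0, limit]."""
    return all(T(n) <= c * f(n) for n in range(n0, limit + 1))

print(holds_upper_bound(c=6, n0=5))   # hand-picked witness constants
print(holds_upper_bound(c=6, n0=4))   # the inequality breaks at n = 4
```

Any larger c or n₀ would work as well; Big-O only requires that some such pair exists.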

Common Examples Of Big-O Notation

Different algorithms exhibit different growth rates. Here are some common complexities:

| Notation | Complexity Class | Example Algorithm | Explanation |
| --- | --- | --- | --- |
| O(1) | Constant Time | Accessing an array element | Runtime remains constant regardless of input size. |
| O(log n) | Logarithmic Time | Binary Search | The search range halves at each step, so runtime grows very slowly with input size. |
| O(n) | Linear Time | Linear Search | Time grows proportionally to input size. |
| O(n log n) | Log-Linear Time | Merge Sort, QuickSort (average case) | Efficient comparison-based sorting algorithms. |
| O(n²) | Quadratic Time | Bubble Sort, Selection Sort | Nested loops make execution time grow rapidly. |
| O(2ⁿ) | Exponential Time | Naive recursive Fibonacci | Grows exponentially, making it impractical for large inputs. |

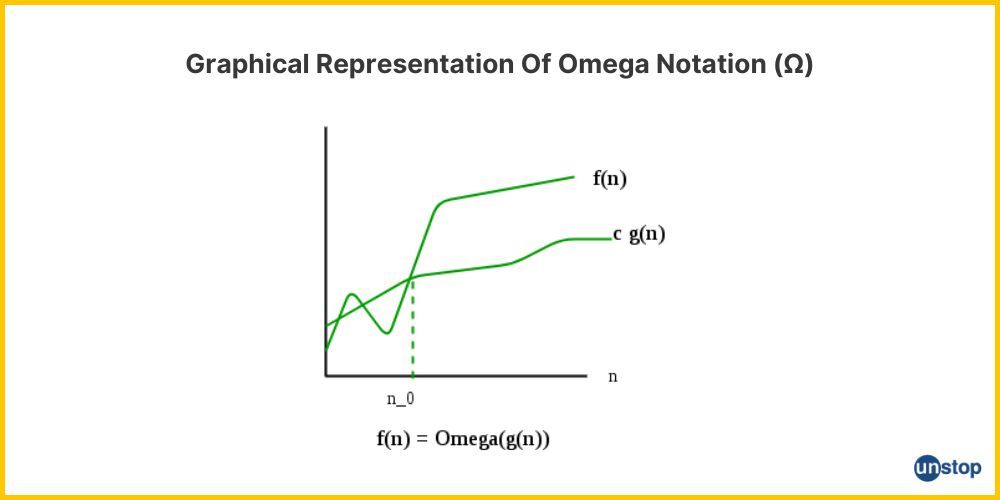

Omega Notation (Ω)

Omega (Ω) notation is used to describe the lower bound of an algorithm’s running time. It provides a guarantee that the algorithm will take at least Ω(f(n)) time for large enough input sizes.

Mathematically, an algorithm is Ω(f(n)) if there exist positive constants c and n₀ such that:

T(n) ≥ c⋅f(n), for all n ≥ n₀

This means that, as the input size n grows, the algorithm's runtime cannot grow more slowly than f(n), up to a constant factor c.

In other words, Omega notation provides a lower limit on how much time or space the algorithm will take: for large enough inputs, the algorithm needs at least that much, however favorable the input.

Common Examples Of Omega Notation

Omega notation describes the minimum work an algorithm must do, which is why it is often associated with best-case performance. Here are some examples:

| Notation | Complexity Class | Example Algorithm | Explanation |
| --- | --- | --- | --- |
| Ω(1) | Constant Time | Accessing an array element | Takes a fixed amount of time, no matter the input size. |
| Ω(log n) | Logarithmic Time | Binary Search (worst case) | When the target is absent, the search must keep halving the range until it is empty. |
| Ω(n) | Linear Time | Finding the maximum of an unsorted list | Must visit all elements in the list, even in the best case. |
| Ω(n²) | Quadratic Time | Bubble Sort (without an early-exit check) | Every pass compares adjacent pairs, even when the list is already sorted. |
| Ω(2ⁿ) | Exponential Time | Generating all subsets of an n-element set | There are 2ⁿ subsets, so at least that many must be produced. |

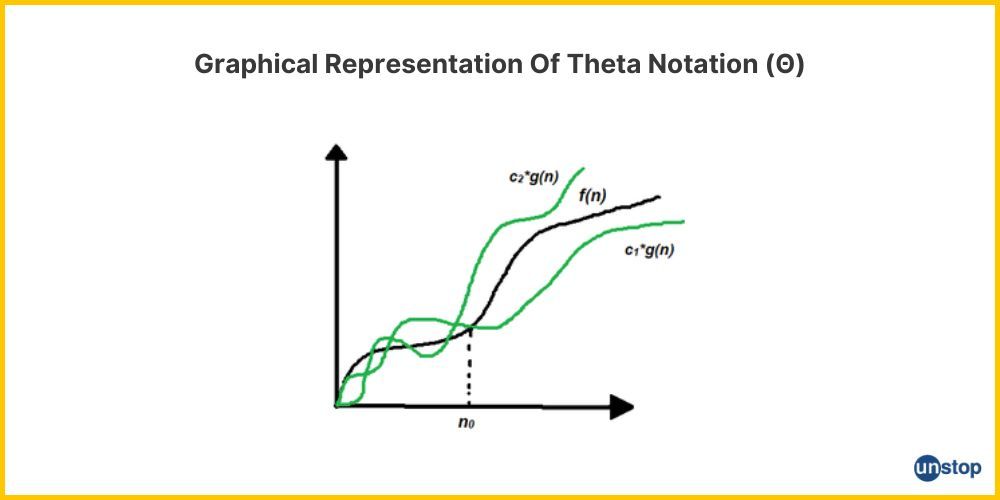

Theta Notation (Θ)

Theta (Θ) notation gives us a tight bound on the running time of an algorithm. It bounds the algorithm's performance both from above and below, meaning the running time grows at the same rate as the function given in the Theta expression.

Mathematically, an algorithm is Θ(f(n)) if there exist positive constants c₁, c₂, and n₀ such that:

c₁⋅f(n) ≤ T(n) ≤ c₂⋅f(n), for all n ≥ n₀

In simpler terms, the running time T(n) of an algorithm will be within a constant factor of f(n) for sufficiently large inputs. This provides a more precise characterization of the algorithm’s time complexity compared to Big-O (which only gives an upper bound) and Omega (which gives a lower bound).
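As a small numeric illustration of this "sandwich", the sketch below reuses the earlier example T(n) = 5n² + 3n + 7 and checks that it stays between 5n² and 6n² from n₀ = 5 onward. The constants are hand-picked and `is_sandwiched` is a hypothetical helper; checking a finite range illustrates the bound but does not prove it.

```python
def T(n):
    """Example runtime function from the text."""
    return 5 * n**2 + 3 * n + 7

def is_sandwiched(c1, c2, n0, limit=10_000):
    """Check c1*n^2 <= T(n) <= c2*n^2 for every n in [n0, limit]."""
    return all(c1 * n**2 <= T(n) <= c2 * n**2 for n in range(n0, limit + 1))

print(is_sandwiched(c1=5, c2=6, n0=5))   # both bounds hold, so T(n) is Theta(n^2)
print(is_sandwiched(c1=6, c2=7, n0=5))   # lower constant too large, check fails
```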

Common Examples Of Theta Notation

Theta notation describes the exact growth rate of an algorithm's running time. Here are some examples:

| Notation | Complexity Class | Example Algorithm | Explanation |
| --- | --- | --- | --- |
| Θ(1) | Constant Time | Array access | Time remains constant for any input size. |
| Θ(log n) | Logarithmic Time | Binary Search (average case) | Runtime grows logarithmically as input grows. |
| Θ(n) | Linear Time | Traversing a linked list | Must visit all elements in the list. |
| Θ(n log n) | Log-Linear Time | Merge Sort, QuickSort (average case) | Sorting algorithms with logarithmic overhead. |
| Θ(n²) | Quadratic Time | Bubble Sort (average case) | Time increases quadratically with input size. |

Little-O Notation (o)

Little-o notation (denoted as o(f(n))) provides an upper bound on an algorithm’s growth rate, but with a crucial difference from Big-O notation. While Big-O allows the runtime to grow at the same rate as the bounding function, Little-o is stricter: it indicates that the algorithm’s growth rate is strictly less than that of the specified function for large inputs. In other words, Little-o tells us that the runtime becomes negligible compared to f(n) in the limit, so T(n)/f(n) approaches 0 as n grows.

Mathematically, an algorithm is o(f(n)) if for all positive constants c, there exists an n₀ such that:

T(n) < c⋅f(n), for all n ≥ n₀

Real-World Example

For instance, an algorithm with a time complexity of o(n²) grows strictly slower than n², so runtimes of n and n log n are valid examples, while 5n² is not. It’s important to note that little-o is a bound that is never tight: the runtime never matches the growth rate of the bounding function asymptotically.
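One common way to check a little-o claim is the limit test: T(n) is o(f(n)) when the ratio T(n)/f(n) tends to 0. The sketch below evaluates that ratio for T(n) = n·log₂ n and f(n) = n²; the function name `ratio` is just an illustrative choice.

```python
import math

def ratio(n):
    """(n * log2 n) / n^2, which simplifies to log2(n) / n."""
    return (n * math.log2(n)) / (n * n)

for n in (10, 1_000, 1_000_000):
    print(n, ratio(n))
```

The printed values shrink toward 0 as n grows, which is consistent with n log n being o(n²).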

Little-Omega Notation (ω)

Little-Omega notation (denoted as ω(f(n))) is the opposite of Little-o and describes a lower bound that is not tight. Specifically, it means that an algorithm's running time grows strictly faster than the specified function for large input sizes. In contrast to Omega (Ω), which allows the running time to grow at the same rate as the bounding function, Little-omega requires that the running time eventually outgrows every constant multiple of it.

Mathematically, an algorithm is ω(f(n)) if for all positive constants c, there exists an n₀ such that:

T(n) > c⋅f(n), for all n ≥ n₀

Real-World Example

If an algorithm is described as ω(n), its runtime grows strictly faster than n, but the notation does not say how much faster: it could be n log n, n², or 2ⁿ. Similarly, ω(n log n) implies that the algorithm's growth rate is strictly faster than n log n, so it could be anything like n² or 2ⁿ.
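The mirror image of the little-o limit test applies here: T(n) is ω(f(n)) when T(n)/f(n) grows without bound. A minimal sketch, assuming T(n) = n² and f(n) = n·log₂ n (the function name `ratio` is illustrative):

```python
import math

def ratio(n):
    """n^2 / (n * log2 n), which simplifies to n / log2(n)."""
    return (n * n) / (n * math.log2(n))

for n in (10, 1_000, 1_000_000):
    print(n, round(ratio(n), 1))
```

The ratio keeps increasing with n, which is consistent with n² being ω(n log n).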

Summary Of Asymptotic Notations

Here’s a quick overview of what we have discussed above:

| Notation | Description | Example |
| --- | --- | --- |
| Big-O (O) | Upper bound (often used for the worst case) | O(n²), O(n log n) |
| Omega (Ω) | Lower bound (often used for the best case) | Ω(n), Ω(log n) |
| Theta (Θ) | Tight bound (upper and lower bounds match) | Θ(n), Θ(n log n) |
| Little-o (o) | Grows strictly slower than a given function | o(n²), o(n log n) |
| Little-omega (ω) | Grows strictly faster than a given function | ω(n), ω(n log n) |

Therefore:

- Big-O vs Omega: Big-O gives an upper bound and is most often used for worst-case analysis, while Omega gives a lower bound and is often used for best-case analysis.

- Big-O vs Theta: Big-O provides an upper bound only, whereas Theta provides both upper and lower bounds, giving a tighter and more exact representation of the algorithm’s growth rate.

- Little-o vs Little-omega: Both provide non-tight bounds, but Little-o is for an upper bound that is strictly smaller, and Little-omega is for a lower bound that is strictly larger.

Real-World Applications Of Asymptotic Notation

Asymptotic notation plays a crucial role in analyzing the performance of algorithms, especially when dealing with large datasets. By providing a way to express the growth rates of algorithms, it helps in making important decisions about which algorithm to use for specific tasks. Let's explore how asymptotic notation is used in the real world across various applications.

1. Sorting Algorithms

Sorting is one of the most common tasks in computer science, and understanding the asymptotic behavior of sorting algorithms is key to selecting the right algorithm for different scenarios.

For example:

- Merge Sort and QuickSort: Both of these algorithms have Θ(n log n) time complexity on average (QuickSort can degrade to Θ(n²) in its worst case). They are used when dealing with large data sets, as they scale far better than Θ(n²) algorithms like Bubble Sort or Selection Sort.

- Bubble Sort and Insertion Sort: With time complexity of O(n²), these algorithms are often used for small datasets or as part of hybrid algorithms. For example, Insertion Sort can be used in algorithms like Timsort for small partitions of data.
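The difference between these growth rates can be observed by counting comparisons rather than timing code. The sketch below uses a plain Bubble Sort without an early-exit check and a top-down Merge Sort; both function names and the random test data are assumptions made for this example.

```python
import random

def bubble_sort_comparisons(values):
    """Sort a copy with Bubble Sort and return the number of comparisons."""
    data = list(values)
    comparisons = 0
    for end in range(len(data) - 1, 0, -1):
        for i in range(end):
            comparisons += 1
            if data[i] > data[i + 1]:
                data[i], data[i + 1] = data[i + 1], data[i]
    return comparisons

def merge_sort_comparisons(values):
    """Return (sorted_list, comparisons) using top-down Merge Sort."""
    if len(values) <= 1:
        return list(values), 0
    mid = len(values) // 2
    left, left_count = merge_sort_comparisons(values[:mid])
    right, right_count = merge_sort_comparisons(values[mid:])
    merged = []
    comparisons = left_count + right_count
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two is non-empty
    merged.extend(right[j:])
    return merged, comparisons

random.seed(0)
sample = [random.randint(0, 10_000) for _ in range(1_000)]
print("bubble sort comparisons:", bubble_sort_comparisons(sample))
print("merge sort comparisons: ", merge_sort_comparisons(sample)[1])
```

For 1,000 elements this Bubble Sort always performs n(n − 1)/2 comparisons, while Merge Sort stays below n·log₂ n.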

Example Application:

In scenarios like e-commerce websites where sorting products based on price, rating, or availability is required, QuickSort or Merge Sort are ideal because of their efficient sorting capabilities. Using Big-O notation, we can compare the worst-case performance of different sorting algorithms to decide the most efficient one for a particular use case.

2. Searching Algorithms

Efficient searching is essential when working with large datasets. Understanding the asymptotic notation helps determine the most efficient search algorithm based on the input size.

- Binary Search: If the data is sorted, Binary Search runs in Θ(log n) time in the worst case, making it very efficient for large datasets compared to a simple linear search, which takes Θ(n) time in the worst case.
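A minimal sketch of the two searches, instrumented to count how many elements each one inspects. The function names are hypothetical and the data is simply a sorted range of integers.

```python
def linear_search_steps(items, target):
    """Scan left to right; return how many elements were inspected."""
    steps = 0
    for value in items:
        steps += 1
        if value == target:
            break
    return steps

def binary_search_steps(sorted_items, target):
    """Halve the search range each round; return how many probes were made."""
    low, high, steps = 0, len(sorted_items) - 1, 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return steps

data = list(range(1_000_000))
print(linear_search_steps(data, 999_999))   # inspects every element
print(binary_search_steps(data, 999_999))   # roughly log2(n) probes
```

Searching for the last element is the worst case for the linear scan, yet Binary Search needs only about 20 probes on a million sorted items.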

Example Application:

In databases or file systems, searching for records in a large dataset (e.g., searching for a customer in a customer database) is highly optimized with algorithms like Binary Search. This helps companies save time and resources when querying large datasets.

3. Data Structures

The performance of different data structures can be evaluated using asymptotic notation to determine how efficiently operations like insertion, deletion, searching, and accessing can be performed.

- Hash Tables: Typically have O(1) average-case time complexity for lookup and insertion (O(n) in the worst case, when many keys collide), making them extremely fast for operations like checking if a record exists.

- Linked Lists: For operations like traversal, Θ(n) is the typical complexity, while operations like insertion and deletion at the beginning can take Θ(1).
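These costs can be read directly off a small implementation. The sketch below is a bare-bones singly linked list written for illustration (the class and method names are assumptions), followed by a membership check on Python's built-in `set`, which is hash-based.

```python
class Node:
    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node

class LinkedList:
    def __init__(self):
        self.head = None

    def push_front(self, value):
        """Theta(1): only the head pointer changes, whatever the list length."""
        self.head = Node(value, self.head)

    def __iter__(self):
        """Theta(n): visits each node exactly once."""
        node = self.head
        while node is not None:
            yield node.value
            node = node.next

items = LinkedList()
for value in (3, 2, 1):
    items.push_front(value)
print(list(items))

seen = {"alice", "bob"}   # hash-based set: O(1) average-case membership test
print("alice" in seen)
```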

Example Application:

In caching systems or memory management, Hash Tables are commonly used to store and retrieve frequently accessed data efficiently. The O(1) average-case time complexity of hash tables keeps lookups fast, improving the performance of applications like web servers, operating systems, and databases.

4. Network Routing and Traffic Management

In networking algorithms, asymptotic notation helps evaluate the performance of routing algorithms, ensuring that they are efficient enough to handle large networks.

- Dijkstra’s Algorithm: This shortest path algorithm has a time complexity of O((V + E) log V) when implemented with a binary heap, often written O(E log V) for connected graphs, where E is the number of edges and V is the number of vertices in the graph.

- Bellman-Ford Algorithm: It runs in O(VE) time, which is slower than Dijkstra’s but can handle negative edge weights.
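For reference, here is a compact sketch of Dijkstra's algorithm using Python's `heapq` module as the priority queue. It assumes non-negative edge weights and an adjacency-list dictionary; the sample `network` is invented for the example. Each edge can push at most one entry onto the heap, and each heap operation costs O(log V) up to constants, which is where the logarithmic factor comes from.

```python
import heapq

def dijkstra(graph, source):
    """Shortest distances from source; graph maps node -> [(neighbour, weight)]."""
    distances = {source: 0}
    queue = [(0, source)]
    while queue:
        dist, node = heapq.heappop(queue)
        if dist > distances.get(node, float("inf")):
            continue  # stale entry: a shorter path to node was already found
        for neighbour, weight in graph.get(node, []):
            candidate = dist + weight
            if candidate < distances.get(neighbour, float("inf")):
                distances[neighbour] = candidate
                heapq.heappush(queue, (candidate, neighbour))
    return distances

network = {
    "A": [("B", 4), ("C", 1)],
    "B": [("D", 1)],
    "C": [("B", 2), ("D", 5)],
    "D": [],
}
print(dijkstra(network, "A"))
```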

Example Application:

In telecommunication networks or cloud computing environments, routing protocols like Dijkstra's Algorithm are critical for determining the most efficient paths for data transmission. By understanding the asymptotic behavior of these algorithms, engineers can optimize network traffic and avoid bottlenecks.

5. Machine Learning Algorithms

Asymptotic notation helps evaluate the performance of various machine learning algorithms, especially when scaling up to handle large datasets. Whether it's a supervised learning algorithm like Linear Regression or an unsupervised one like K-means clustering, knowing the time complexity ensures that the right algorithm is chosen for the task.

- K-means clustering: Each iteration takes O(nk) distance computations, where n is the number of data points and k is the number of clusters (O(nkd) overall for d-dimensional points), which matters for large datasets.

- Gradient Descent: The total cost is the number of iterations multiplied by the cost of one gradient evaluation. For batch gradient descent on a linear model with n training examples and d parameters, for example, each iteration costs O(nd).
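To see where the O(nk) figure for K-means comes from, the sketch below implements only the assignment step of one iteration and counts distance evaluations. It is a simplified illustration, not a full K-means implementation; `assign_clusters` and the sample points are assumptions.

```python
def assign_clusters(points, centroids):
    """One k-means assignment step; returns (labels, distance_evaluations)."""
    labels = []
    evaluations = 0
    for point in points:                       # n points
        best_index, best_distance = 0, float("inf")
        for index, centroid in enumerate(centroids):   # k centroids
            evaluations += 1
            # Squared Euclidean distance; costs O(d) for d-dimensional points.
            distance = sum((p - c) ** 2 for p, c in zip(point, centroid))
            if distance < best_distance:
                best_index, best_distance = index, distance
        labels.append(best_index)
    return labels, evaluations

points = [(0.0, 0.0), (10.0, 10.0), (1.0, 1.0), (9.0, 8.0)]
centroids = [(0.0, 0.0), (10.0, 10.0)]
print(assign_clusters(points, centroids))
```

With n = 4 points and k = 2 centroids, the inner loop body runs n × k = 8 times.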

Example Application:

In data science and AI-driven applications, algorithms like K-means or Neural Networks are commonly used for clustering or classification. Asymptotic notation helps determine which algorithm will scale better with large amounts of training data, enabling faster training and prediction times.

6. Web Development and User Interfaces

Asymptotic analysis is also valuable in web development, where we need to optimize the performance of web pages, especially with dynamic content and large datasets.

- Rendering a webpage: The time complexity of rendering HTML, CSS, and JavaScript code on a webpage can be analyzed. For example, O(n) time complexity might be expected for iterating through elements on a page, but inefficient algorithms can increase the time it takes to load and render pages.

- Lazy Loading: With large datasets, implementing lazy loading (loading data as the user scrolls) can help reduce loading times. Fetching all n records from a server at once costs O(n), but with pagination each request transfers only one fixed-size page, so the data sent per request no longer depends on n.
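A minimal sketch of the pagination idea, using an in-memory list to stand in for the product catalogue. The function `get_page`, the zero-based page index, and the default page size are all assumptions made for the example; a real application would page through a database or API instead.

```python
def get_page(items, page, page_size=20):
    """Return one page of items; page numbers start at 0."""
    start = page * page_size
    # The slice copies at most page_size elements, regardless of len(items).
    return items[start:start + page_size]

products = [f"product-{i}" for i in range(5_000)]
first_page = get_page(products, page=0)
print(len(first_page), first_page[0], first_page[-1])
```

Each call handles at most `page_size` items, so the work per request stays the same whether the catalogue holds five thousand products or five million.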

Example Application:

For e-commerce websites with thousands of products, lazy loading and pagination strategies ensure that users only load small chunks of data, making the user experience smoother and faster. By understanding the asymptotic behavior of different techniques, web developers can improve site performance.

Conclusion

In this article, we explored the different types of asymptotic notations—Big-O (O), Omega (Ω), Theta (Θ), Little-o (o), and Little-omega (ω)—which are essential tools in the analysis of algorithms. These notations allow us to describe and compare an algorithm’s efficiency in terms of its time and space complexity, helping developers and computer scientists understand how algorithms will perform as the input size grows.

- Big-O provides an upper bound, most often used for the worst case.

- Omega provides a lower bound, often used for the best case.

- Theta offers a tight bound, giving us an exact description of an algorithm’s growth rate.

- Little-o and Little-omega describe growth rates that are strictly smaller or larger than a given function, respectively.

By understanding and applying these notations, we can make more informed decisions when selecting algorithms, ensuring that they perform efficiently even as the problem size increases. Ultimately, asymptotic notations are crucial for optimizing code, predicting scalability, and solving real-world computational problems effectively.

Frequently Asked Questions

Q. What is the difference between Big-O and Theta notation?

- Big-O notation provides an upper bound for an algorithm's time complexity and is most often used to describe the worst-case scenario. It tells us that the runtime grows no faster than the stated rate.

- Theta notation represents a tight bound, meaning it gives both an upper and lower bound on the algorithm's time complexity, offering a precise understanding of its performance.

Q. When should I use Omega notation instead of Big-O?

Use Omega notation when you want to describe a lower bound: the minimum time the algorithm will take for large inputs. It’s useful for best-case analysis and for showing that no input can be handled faster than a certain rate, whereas Big-O gives an upper bound and is typically used for worst-case analysis.

Q. What does Little-o notation represent?

Little-o notation provides an upper bound that is not tight. It describes an algorithm whose growth rate is strictly smaller than a given function. Unlike Big-O, it rules out the case where the algorithm grows at the same rate as that function.

Q. How can Little-omega notation help in algorithm analysis?

Little-omega notation is used to describe an algorithm whose growth rate is strictly greater than a given function. It helps in showing that an algorithm's cost eventually exceeds a certain complexity by more than any constant factor, providing a lower bound that is not tight.

Q. Why are asymptotic notations important in algorithm design?

Asymptotic notations are essential because they allow us to compare algorithms and understand their efficiency, especially as the input size grows. By describing an algorithm’s time or space complexity using Big-O, Omega, Theta, Little-o, or Little-omega, we can make informed decisions about which algorithm to use for different problem sizes, ensuring better performance and scalability in real-world applications.


I’m a Computer Science graduate with a knack for creative ventures. Through content at Unstop, I am trying to simplify complex tech concepts and make them fun. When I’m not decoding tech jargon, you’ll find me indulging in great food and then burning it out at the gym.
