- What Is Data Structure?

- Key Features Of Data Structures

- Basic Terminologies Related To Data Structures

- Types Of Data Structures

- What Are Linear Data Structures & Its Types?

- What Are Non-Linear Data Structures & Its Types?

- Importance Of Data Structure In Programming

- Basic Operations On Data Structures

- Applications Of Data Structures

- Real-Life Applications Of Data Structures

- Linear Vs. Non-linear Data Structures

- What Are Algorithms? The Difference Between Data Structures & Algorithms

- Conclusion

- Frequently Asked Questions

- What Is Asymptotic Notation?

- How Asymptotic Notation Helps In Analyzing Performance

- Types Of Asymptotic Notation

- Big-O Notation (O)

- Omega Notation (Ω)

- Theta Notation (Θ)

- Little-O Notation (o)

- Little-Omega Notation (ω)

- Summary Of Asymptotic Notations

- Real-World Applications Of Asymptotic Notation

- Conclusion

- Frequently Asked Questions

- Understanding Big O Notation

- Types Of Time Complexity

- Space Complexity In Big O Notation

- How To Determine Big O Complexity

- Best, Worst, And Average Case Complexity

- Applications Of Big O Notation

- Conclusion

- Frequently Asked Questions

- What Is Time Complexity?

- Why Do You Need To Calculate Time Complexity?

- The Time Complexity Algorithm Cases

- Time Complexity: Different Types Of Asymptotic Notations

- How To Calculate Time Complexity?

- Time Complexity Of Sorting Algorithms

- Time Complexity Of Searching Algorithms

- Writing And optimizing An algorithm

- What Is Space Complexity And Its Significance?

- Relation Between Time And Space Complexity

- Conclusion

- What Is Linear Data Structure?

- Key Characteristics Of Linear Data Structures

- What Are The Types Of Linear Data Structures?

- What Is An Array Linear Data Structure?

- What Are Linked Lists Linear Data Structure?

- What Is A Stack Linear Data Structure?

- What Is A Queue Linear Data Structure?

- Most Common Operations Performed in Linear Data Structures

- Advantages Of Linear Data Structures

- What Is Nonlinear Data Structure?

- Difference Between Linear & Non-Linear Data Structures

- Who Uses Linear Data Structures?

- Conclusion

- Frequently Asked Questions

- What is a linear data structure?

- What is a non-linear data structure?

- Difference between linear data structure and non-linear data structure

- FAQs about linear and non-linear data structures

- What Is Search?

- What Is Linear Search In Data Structure?

- What Is Linear Search Algorithm?

- Working Of Linear Search Algorithm

- Complexity Of Linear Search Algorithm In Data Structures

- Implementations Of Linear Search Algorithm In Different Programming Languages

- Real-World Applications Of Linear Search In Data Structure

- Advantages & Disadvantages Of Linear Search

- Best Practices For Using Linear Search Algorithm

- Conclusion

- Frequently Asked Questions

- What Is The Binary Search Algorithm?

- Conditions For Using Binary Search

- Steps For Implementing Binary Search

- Iterative Method For Binary Search With Implementation Examples

- Recursive Method For Binary Search

- Complexity Analysis Of Binary Search Algorithm

- Iterative Vs. Recursive Implementation Of Binary Search

- Advantages & Disadvantages Of Binary Search

- Practical Applications & Real-World Examples Of Binary Search

- Conclusion

- Frequently Asked Questions

- Understanding The Jump Search Algorithm

- How Jump Search Works?

- Code Implementation Of Jump Search Algorithm

- Time And Space Complexity Analysis

- Advantages Of Jump Search

- Disadvantages Of Jump Search

- Applications Of Jump Search

- Conclusion

- Frequently Asked Questions

- What Is Sorting In Data Structures?

- What Is Bubble Sort?

- What Is Selection Sort?

- What Is Insertion Sort?

- What Is Merge Sort?

- What Is Quick Sort?

- What Is Heap Sort?

- What Is Radix Sort?

- What Is Bucket Sort?

- What Is Counting Sort?

- What Is Shell Sort?

- Choosing The Right Sorting Algorithm

- Applications Of Sorting

- Conclusion

- Frequently Asked Questions

- Understanding Bubble Sort Algorithm

- Bubble Sort Algorithm

- Implementation Of Bubble Sort In C++

- Time And Space Complexity Analysis Of Bubble Sort Algorithm

- Advantages Of Bubble Sort Algorithm

- Disadvantages Of Bubble Sort Algorithm

- Applications of Bubble Sort Algorithms

- Conclusion

- Frequently Asked Questions

- Understanding The Merge Sort Algorithm

- Algorithm For Merge Sort

- Implementation Of Merge Sort In C++

- Time And Space Complexity Analysis Of Merge Sort

- Advantages And Disadvantages Of Merge Sort

- Applications Of Merge Sort

- Conclusion

- Frequently Asked Questions

- Understanding The Selection Sort Algorithm

- Algorithmic Approach To Selection Sort

- Implementation Of Selection Sort Algorithm

- Complexity Analysis Of Selection Sort

- Comparison Of Selection Sort With Other Sorting Algorithms

- Advantages And Disadvantages Of Selection Sort

- Application Of Selection Sort

- Conclusion

- Frequently Asked Questions

- What Is Insertion Sort Algorithm?

- How Does Insertion Sort Work? (Step-by-Step Explanation)

- Implementation of Insertion Sort in C++

- Time And Space Complexity Of Insertion Sort

- Applications Of Insertion Sort Algorithm

- Comparison With Other Sorting Algorithms

- Conclusion

- Frequently Asked Questions

- Understanding Quick Sort Algorithm

- Step-By-Step Working Of Quick Sort

- Implementation Of Quick Sort Algorithm

- Time Complexity Analysis Of Quick Sort

- Advantages Of Quick Sort Algorithm

- Disadvantages Of Quick Sort Algorithm

- Applications Of Quick Sort Algorithm

- Conclusion

- Frequently Asked Questions

- Understanding The Heap Data Structure

- Working Of Heap Sort Algorithm

- Implementation Of Heap Sort Algorithm

- Advantages Of Heap Sort

- Disadvantages Of Heap Sort

- Real-World Applications Of Heap Sort

- Conclusion

- Frequently Asked Questions

- Understanding The Counting Sort Algorithm

- Conditions For Using Counting Sort Algorithm

- How Counting Sort Algorithm Works?

- Implementation Of Counting Sort Algorithm

- Time And Space Complexity Analysis Of Counting Sort

- Comparison Of Counting Sort With Other Sorting Algorithms

- Advantages Of Counting Sort Algorithm

- Disadvantages Of Counting Sort Algorithm

- Applications Of Counting Sort Algorithm

- Conclusion

- Frequently Asked Questions

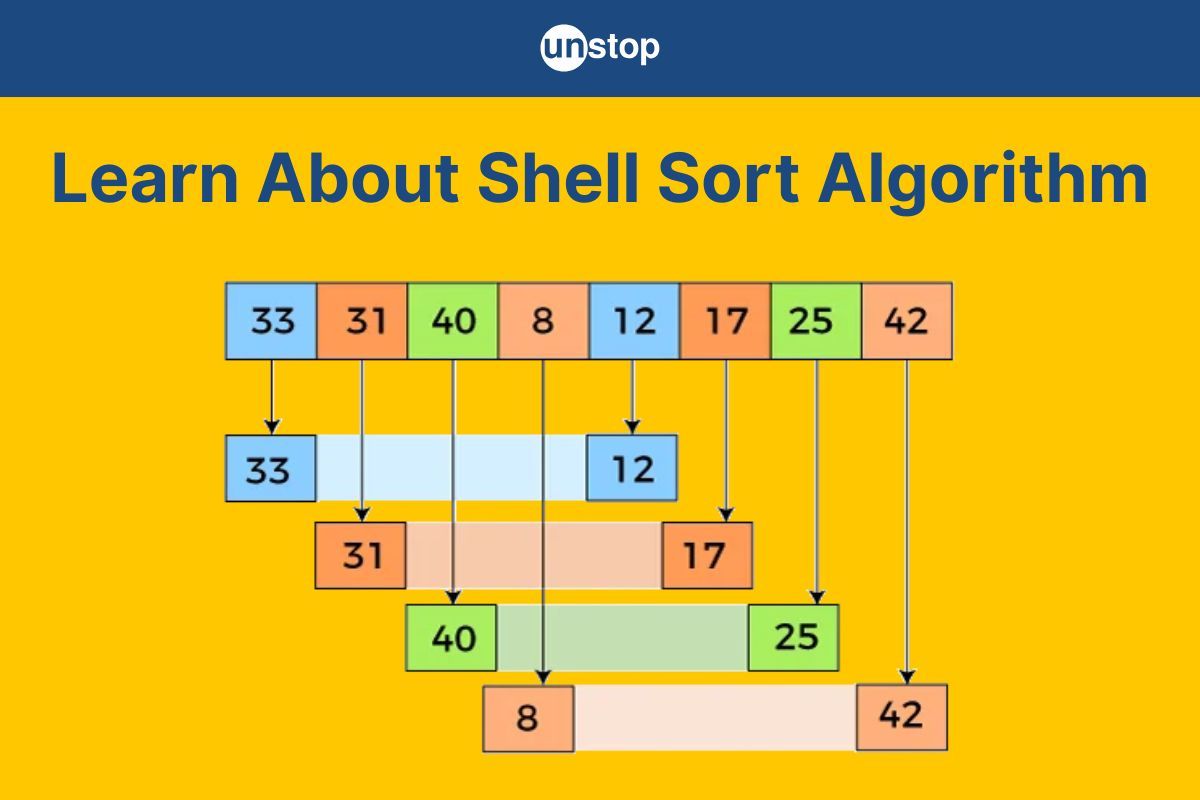

- Understanding Shell Sort Algorithm

- Working Of Shell Sort Algorithm

- Implementation Of Shell Sort Algorithm

- Time Complexity Analysis Of Shell Sort Algorithm

- Advantages Of Shell Sort Algorithm

- Disadvantages Of Shell Sort Algorithm

- Applications Of Shell Sort Algorithm

- Conclusion

- Frequently Asked Questions

- What Is Linked List In Data Structures?

- Types Of Linked Lists In Data Structures

- Linked List Operations In Data Structures

- Advantages And Disadvantages Of Linked Lists In Data Structures

- Comparison Of Linked Lists And Arrays

- Applications Of Linked Lists

- Conclusion

- Frequently Asked Questions

- What Is A Singly Linked List In Data Structure?

- Insertion Operations On Singly Linked Lists

- Deletion Operation On Singly Linked List

- Searching For Elements In Single Linked List

- Calculating Length Of Single Linked List

- Practical Applications Of Singly Linked Lists In Data Structure

- Common Problems With Singly Linked Lists

- Conclusion

- Frequently Asked Questions (FAQ)

- What Is A Linked List?

- Reverse A Linked List

- How To Reverse A Linked List? (Approaches)

- Recursive Approach To Reverse A Linked List

- Iterative Approach To Reverse A Linked List

- Using Stack To Reverse A Linked List

- Complexity Analysis Of Different Approaches To Reverse A Linked List

- Conclusion

- Frequently Asked Questions

- What Is A Stack In Data Structure?

- Understanding Stack Operations

- Stack Implementation In Data Structures

- Stack Implementation Using Arrays

- Stack Implementation Using Linked Lists

- Comparison: Array vs. Linked List Implementation

- Applications Of Stack In Data Structures

- Advantages And Disadvantages Of Stack Data Structure

- Conclusion

- Frequently Asked Questions

- What Is A Graph Data Structure?

- Importance Of Graph Data Structures

- Types Of Graphs In Data Structure

- Types Of Graph Algorithms

- Application Of Graphs In Data Structures

- Challenges And Complexities In Graphs

- Conclusion

- Frequently Asked Questions

- What Is Tree Data Structure?

- Terminologies Of Tree Data Structure:

- Different Types Of Tree Data Structures

- Basic Operations On Tree Data Structure

- Applications Of Tree Data Structures

- Comparison Of Trees, Graphs, And Linear Data Structures

- Advantages Of Tree Data Structure

- Disadvantages Of Tree Data Structure

- Conclusion

- Frequently Asked Questions

- What Is Dynamic Programming?

- Real-Life Example: The Jigsaw Puzzle Analogy

- How To Solve A Problem Using Dynamic Programming?

- Dynamic Programming Algorithm Techniques

- Advantages Of Dynamic Programming

- Disadvantages Of Dynamic Programming

- Applications Of Dynamic Programming

- Conclusion

- Frequently Asked Questions

- Understanding The Sliding Window Algorithm

- How Does The Sliding Window Algorithm Works?

- How To Identify Sliding Window Problems?

- Fixed-Size Sliding Window Example: Maximum Sum Subarray Of Size k

- Variable-Size Sliding Window Example: Smallest Subarray With A Given Sum

- Advantages Of Sliding Window Technique

- Disadvantages Of Sliding Window Technique

- Conclusion

- Frequently Asked Questions

- Introduction To Data Structures

- Data Structures Interview Questions: Basics

- Data Structures Interview Questions: Intermediate

- Data Structures Interview Questions: Advanced

- Conclusion

Shell Sort Algorithm In Data Structures (With Code Examples)

Sorting is a fundamental operation in computer science, used to arrange data in a specific order for efficient searching, retrieval, and processing. One such sorting algorithm is Shell Sort, an optimization of Insertion Sort that significantly improves performance for larger datasets. Developed by Donald Shell in 1959, this algorithm introduces the concept of gap-based sorting, which allows elements to move over larger distances in the initial stages, reducing the total number of swaps required.

In this article, we will explore the working mechanism of Shell Sort, its time complexity, advantages, and implementation in code.

Understanding Shell Sort Algorithm

Shell Sort in data structures introduces the idea of gap-based sorting, where elements are initially compared and swapped at a certain gap distance rather than adjacent positions. This allows larger elements to move faster toward their correct position, reducing the number of swaps in later stages. As the algorithm progresses, the gap size decreases until it becomes 1, effectively turning into Insertion Sort for the final pass.

How Shell Sort Improves Over Insertion Sort:

|

Feature |

Insertion Sort |

Shell Sort |

|

Sorting Approach |

Compares and inserts elements one by one in a sorted portion of the array. |

Uses a gap sequence to compare and swap distant elements, reducing large shifts. |

|

Efficiency on Large Data |

Inefficient for large datasets due to many shifts of elements. |

More efficient as distant swaps reduce overall shifts needed. |

|

Time Complexity (Worst Case) |

O(n²) |

Can be O(n log n) depending on the gap sequence. |

|

Adaptability |

Performs well on nearly sorted data. |

Works well even for moderately unsorted data. |

|

Number of Comparisons & Swaps |

High, as elements are moved one step at a time. |

Lower than Insertion Sort because elements move across larger gaps first. |

|

Best for Small Data? |

Can be overkill for very small datasets. |

|

|

General Performance |

Slower for large and random datasets. |

Faster than Insertion Sort due to reduced shifts and better pre-sorting. |

Thus, Shell Sort is a more generalized and optimized version of Insertion Sort, making it a practical choice for sorting moderate-sized datasets efficiently.

Real-Life Analogy For Shell Sort

Imagine you are arranging books on a messy bookshelf, but instead of sorting them one by one like Insertion Sort, you decide to use a more efficient approach:

- Large Gaps First:

- Initially, you pick books that are far apart (let’s say every 5th book) and arrange them in order.

- This ensures that roughly sorted sections appear quickly, reducing the need for excessive small adjustments later.

- Smaller Gaps Next:

- Now, you refine the order by arranging books every 2nd position.

- This further organizes the shelf with fewer movements compared to placing each book one by one from the beginning.

- Final Fine-Tuning:

- Finally, when books are almost in place, you switch to sorting adjacent books, making small adjustments to achieve the perfectly ordered shelf.

This method is much faster than sorting one book at a time because you reduce unnecessary small movements in the early stages, just like Shell Sort optimizes sorting using gap-based swaps before fine-tuning with Insertion Sort.

Working Of Shell Sort Algorithm

Shell Sort works by sorting elements at a certain gap interval and gradually reducing the gap until it becomes 1. Here’s how it works step by step:

Example: Let's sort the array: [12, 34, 54, 2, 3]

Step 1: Choose An Initial Gap

A common approach is to start with gap = n/2, where n is the number of elements.

- Here, n = 5, so the initial gap = 5/2 = 2.

Step 2: Perform Gap-Based Sorting

Now, we compare and swap elements at the given gap.

Pass 1 (gap = 2)

Compare elements that are 2 positions apart:

|

Index |

0 |

1 |

2 |

3 |

4 |

|

Array |

12 |

34 |

54 |

2 |

3 |

- 12 and 54 → No swap (already in order).

- 34 and 2 → Swap → [12, 2, 54, 34, 3]

- 54 and 3 → Swap → [12, 2, 3, 34, 54]

Pass 2 (gap = 1)

Now, the gap is reduced to 1, which means we perform Insertion Sort on the nearly sorted array.

- 2 and 12 → Already in order.

- 3 and 12 → Already in order.

- 34 and 12 → Already in order.

- 54 and 34 → Already in order.

Final sorted array: [2, 3, 12, 34, 54]

Visualization Of The Sorting Process

- Initial array → [12, 34, 54, 2, 3]

- After gap = 2 → [12, 2, 3, 34, 54]

- After gap = 1 → [2, 3, 12, 34, 54]

Shell Sort effectively reduces the number of shifts by handling large gaps first, making the final sorting stage much faster compared to regular Insertion Sort.

Implementation Of Shell Sort Algorithm

Here is a C++ program to understand the working of the shell sort algorithm-

Code Example:

#include

using namespace std;

// Function to perform Shell Sort

void shellSort(int arr[], int n) {

// Start with a large gap, then reduce it

for (int gap = n / 2; gap > 0; gap /= 2) {

// Perform insertion sort for elements at a distance of 'gap'

for (int i = gap; i < n; i++) {

int temp = arr[i]; // Store current element

int j = i;

// Shift elements to the right until the correct position is found

while (j >= gap && arr[j - gap] > temp) {

arr[j] = arr[j - gap];

j -= gap;

}

// Place temp at its correct position

arr[j] = temp;

}

}

}

// Function to print the array

void printArray(int arr[], int n) {

for (int i = 0; i < n; i++)

cout << arr[i] << " ";

cout << endl;

}

int main() {

int arr[] = {12, 34, 54, 2, 3};

int n = sizeof(arr) / sizeof(arr[0]);

cout << "Original array: ";

printArray(arr, n);

shellSort(arr, n);

cout << "Sorted array: ";

printArray(arr, n);

return 0;

}

I2luY2x1ZGUgPGlvc3RyZWFtPgp1c2luZyBuYW1lc3BhY2Ugc3RkOwoKLy8gRnVuY3Rpb24gdG8gcGVyZm9ybSBTaGVsbCBTb3J0CnZvaWQgc2hlbGxTb3J0KGludCBhcnJbXSwgaW50IG4pIHsKICAgIC8vIFN0YXJ0IHdpdGggYSBsYXJnZSBnYXAsIHRoZW4gcmVkdWNlIGl0CiAgICBmb3IgKGludCBnYXAgPSBuIC8gMjsgZ2FwID4gMDsgZ2FwIC89IDIpIHsKICAgICAgICAvLyBQZXJmb3JtIGluc2VydGlvbiBzb3J0IGZvciBlbGVtZW50cyBhdCBhIGRpc3RhbmNlIG9mICdnYXAnCiAgICAgICAgZm9yIChpbnQgaSA9IGdhcDsgaSA8IG47IGkrKykgewogICAgICAgICAgICBpbnQgdGVtcCA9IGFycltpXTsgIC8vIFN0b3JlIGN1cnJlbnQgZWxlbWVudAogICAgICAgICAgICBpbnQgaiA9IGk7CgogICAgICAgICAgICAvLyBTaGlmdCBlbGVtZW50cyB0byB0aGUgcmlnaHQgdW50aWwgdGhlIGNvcnJlY3QgcG9zaXRpb24gaXMgZm91bmQKICAgICAgICAgICAgd2hpbGUgKGogPj0gZ2FwICYmIGFycltqIC0gZ2FwXSA+IHRlbXApIHsKICAgICAgICAgICAgICAgIGFycltqXSA9IGFycltqIC0gZ2FwXTsKICAgICAgICAgICAgICAgIGogLT0gZ2FwOwogICAgICAgICAgICB9CgogICAgICAgICAgICAvLyBQbGFjZSB0ZW1wIGF0IGl0cyBjb3JyZWN0IHBvc2l0aW9uCiAgICAgICAgICAgIGFycltqXSA9IHRlbXA7CiAgICAgICAgfQogICAgfQp9CgovLyBGdW5jdGlvbiB0byBwcmludCB0aGUgYXJyYXkKdm9pZCBwcmludEFycmF5KGludCBhcnJbXSwgaW50IG4pIHsKICAgIGZvciAoaW50IGkgPSAwOyBpIDwgbjsgaSsrKQogICAgICAgIGNvdXQgPDwgYXJyW2ldIDw8ICIgIjsKICAgIGNvdXQgPDwgZW5kbDsKfQoKaW50IG1haW4oKSB7CiAgICBpbnQgYXJyW10gPSB7MTIsIDM0LCA1NCwgMiwgM307CiAgICBpbnQgbiA9IHNpemVvZihhcnIpIC8gc2l6ZW9mKGFyclswXSk7CgogICAgY291dCA8PCAiT3JpZ2luYWwgYXJyYXk6ICI7CiAgICBwcmludEFycmF5KGFyciwgbik7CgogICAgc2hlbGxTb3J0KGFyciwgbik7CgogICAgY291dCA8PCAiU29ydGVkIGFycmF5OiAiOwogICAgcHJpbnRBcnJheShhcnIsIG4pOwoKICAgIHJldHVybiAwOwp9Cg==

Output:

Original array: 12 34 54 2 3

Sorted array: 2 3 12 34 54

Explanation:

In the above code example-

- We start by including the <iostream> header to handle input and output operations.

- The using namespace std; directive allows us to use standard library functions without prefixing them with std::.

- The shellSort() function sorts an array using the Shell Sort algorithm, which is an optimized version of insertion sort that works with gaps.

- We begin with a large gap (n / 2) and keep reducing it by half in each iteration until it becomes 0.

- For each gap value, we apply insertion sort but only on elements that are gap positions apart.

- We pick an element and compare it with previous elements at a distance of gap, shifting elements if needed to maintain order.

- Once the correct position is found, we insert the element there. This improves efficiency compared to normal insertion sort.

- The printArray() function prints the elements of the array in a single line, separated by spaces.

- In main(), we define an array {12, 34, 54, 2, 3} and determine its size using sizeof(arr) / sizeof(arr[0]).

- We print the original array before sorting.

- We call shellSort() to sort the array in ascending order.

- After sorting, we print the modified array to display the sorted elements.

Time Complexity Analysis Of Shell Sort Algorithm

Shell Sort’s time complexity depends on the gap sequence used, as it determines how elements move across the array. Below is the complexity analysis for different cases:

1. Best-Case Complexity (Ω(n log n))

- The best case occurs when the array is already nearly sorted and requires minimal swaps.

- If the gap sequence is chosen well (e.g., Hibbard's sequence), the best-case complexity is Ω(n log n).

2. Average-Case Complexity (Θ(n log² n))

- The average case varies depending on the gap sequence used.

- For common sequences (like Knuth’s sequence), it is typically Θ(n log² n).

- If the gaps are chosen optimally, performance can approach O(n log n).

3. Worst-Case Complexity (O(n²))

- The worst case occurs when a bad gap sequence is chosen, causing inefficient sorting (similar to Insertion Sort).

- With gaps reducing inefficiently (like simply dividing by 2), the worst-case complexity becomes O(n²).

Impact Of Different Gap Sequences On Performance

|

Gap Sequence |

Formula |

Best Complexity |

Worst Complexity |

Performance |

|

Shell’s Original |

n/2, n/4... |

O(n²) |

O(n²) |

Slower, inefficient for large inputs. |

|

Knuth’s Sequence |

(3^k - 1) / 2 |

O(n^(3/2)) |

O(n^(3/2)) |

Good for moderate-sized data. |

|

Hibbard’s Sequence |

2^k - 1 |

O(n^(3/2)) |

O(n^(3/2)) |

Slightly better than Knuth’s. |

|

Sedgewick’s Sequence |

Hybrid approach |

O(n^(4/3)) |

O(n^(4/3)) |

More efficient than Hibbard’s. |

|

Pratt’s Sequence |

2^p * 3^q |

O(n log² n) |

O(n log² n) |

One of the best choices for Shell Sort. |

Key Takeaway: The choice of gap sequence greatly impacts performance, with Sedgewick’s and Pratt’s sequences offering the best practical results, making Shell Sort approach O(n log n) efficiency.

Advantages Of Shell Sort Algorithm

Some common advantages of shell sort algorithms are:

- Improves Over Insertion Sort: By allowing far-apart elements to move earlier, Shell Sort reduces the number of shifts required in the final pass.

- Efficient for Moderate-Sized Data: Performs significantly better than Bubble Sort and Insertion Sort for medium-sized datasets.

- In-Place Sorting Algorithm: Requires no extra space (O(1) space complexity), making it memory-efficient.

- Adaptive for Nearly Sorted Data: If the array is already partially sorted, Shell Sort performs very efficiently.

- Flexible Choice of Gap Sequence: Different gap sequences can optimize performance for specific datasets.

Disadvantages Of Shell Sort Algorithm

Some common disadvantages of shell sort algorithms are:

- Not Stable: Shell Sort does not maintain the relative order of equal elements, making it unstable.

- Performance Depends on Gap Sequence: A poorly chosen gap sequence (e.g., simple division by 2) can lead to O(n²) worst-case complexity.

- Not Optimal for Very Large Datasets: While better than Insertion Sort, it is still outperformed by Quick Sort, Merge Sort, and Heap Sort for large datasets.

- Complex to Implement Efficiently: Finding the best gap sequence requires additional research and tuning for different datasets.

Applications Of Shell Sort Algorithm

Shell Sort is widely used in scenarios where insertion-based sorting is preferable but needs optimization for larger datasets. Here are some practical applications of Shell Sort:

1. Sorting Small to Medium-Sized Datasets

- Use Case: When sorting a moderate number of elements where Merge Sort or Quick Sort may be unnecessary due to their extra space usage or recursive overhead.

- Example: Sorting small logs or records in applications with limited memory.

2. Improving Performance in Hybrid Sorting Algorithms

- Use Case: Many hybrid sorting algorithms, like Timsort (used in Java’s Arrays.sort() for smaller arrays), use Insertion Sort when subarrays become small.

- Shell Sort can be used as an optimization over Insertion Sort for improved performance.

3. Cache-Friendly Sorting in Low-Level Systems

- Use Case: In embedded systems and memory-constrained environments, Shell Sort works well as it has better cache performance compared to other O(n²) sorting algorithms.

4. Organizing Records in Databases

- Use Case: Databases with moderately sized datasets can use Shell Sort for quick data reordering before applying more complex indexing algorithms.

5. Used in Competitive Programming

- Use Case: Due to its in-place sorting and simple implementation, Shell Sort is often used in competitive programming when sorting small arrays efficiently.

Conclusion

Shell Sort is a powerful improvement over Insertion Sort, making it efficient for moderate-sized datasets. By introducing a gap-based approach, it reduces the number of element shifts, significantly improving performance. While its time complexity depends on the gap sequence used, well-chosen sequences can bring it close to O(n log n) efficiency.

Despite being faster than simple sorting algorithms like Bubble and Insertion Sort, Shell Sort is not the best choice for large datasets where Merge Sort or Quick Sort outperform it. However, its in-place nature, adaptability, and ease of implementation make it a valuable tool in real-world applications, particularly in low-memory environments and hybrid sorting techniques.

In summary, Shell Sort is a great middle-ground sorting algorithm that balances simplicity and efficiency, making it a useful choice in certain scenarios where insertion-based sorting is preferred.

Frequently Asked Questions

Q. Why is Shell Sort considered an improvement over Insertion Sort?

Shell Sort improves Insertion Sort by reducing the number of shifts required for distant elements. Instead of comparing adjacent elements, it sorts elements at larger gaps first, progressively reducing the gap. This helps in minimizing the number of swaps in the final sorting phase.

Q. Is Shell Sort a stable sorting algorithm?

No, Shell Sort is not stable because it allows elements to jump across the array due to large gap values. This can lead to reordering of equal elements, which breaks stability.

Q. How does the choice of gap sequence affect Shell Sort’s performance?

The efficiency of Shell Sort heavily depends on the gap sequence used. A poor gap sequence (like dividing by 2) may lead to O(n²) complexity, while better sequences (like Sedgewick’s or Pratt’s) improve efficiency to O(n log n).

Q. What is the worst-case time complexity of Shell Sort?

The worst-case time complexity of Shell Sort is O(n²), which happens when the chosen gap sequence is inefficient. However, with optimized gap sequences, the performance can be significantly improved.

Q. When should we use Shell Sort instead of Quick Sort or Merge Sort?

Shell Sort is useful when:

- Sorting moderate-sized datasets, where Quick Sort’s overhead isn’t justified.

- Memory is limited, as it’s an in-place sorting algorithm (O(1) extra space).

- Insertion-based sorting is preferred, especially when data is partially sorted.

Suggested Reads:

- Difference Between Hashing And Encryption Decoded

- 53 Frequently Asked Linked List Interview Questions With Answers 2024

- Data Structure Interview Questions For 2024 [With Detailed Answers]

- Tree Topology | Advantages & Disadvantages In Computer Network

- Decoding Data Redundancy In DBMS| Causes, Advantages, Solutions

I’m a Computer Science graduate with a knack for creative ventures. Through content at Unstop, I am trying to simplify complex tech concepts and make them fun. When I’m not decoding tech jargon, you’ll find me indulging in great food and then burning it out at the gym.

Login to continue reading

And access exclusive content, personalized recommendations, and career-boosting opportunities.

Subscribe

to our newsletter

Comments

Add comment