- What Is Data Structure?

- Key Features Of Data Structures

- Basic Terminologies Related To Data Structures

- Types Of Data Structures

- What Are Linear Data Structures & Its Types?

- What Are Non-Linear Data Structures & Its Types?

- Importance Of Data Structure In Programming

- Basic Operations On Data Structures

- Applications Of Data Structures

- Real-Life Applications Of Data Structures

- Linear Vs. Non-linear Data Structures

- What Are Algorithms? The Difference Between Data Structures & Algorithms

- Conclusion

- Frequently Asked Questions

- What Is Asymptotic Notation?

- How Asymptotic Notation Helps In Analyzing Performance

- Types Of Asymptotic Notation

- Big-O Notation (O)

- Omega Notation (Ω)

- Theta Notation (Θ)

- Little-O Notation (o)

- Little-Omega Notation (ω)

- Summary Of Asymptotic Notations

- Real-World Applications Of Asymptotic Notation

- Conclusion

- Frequently Asked Questions

- Understanding Big O Notation

- Types Of Time Complexity

- Space Complexity In Big O Notation

- How To Determine Big O Complexity

- Best, Worst, And Average Case Complexity

- Applications Of Big O Notation

- Conclusion

- Frequently Asked Questions

- What Is Time Complexity?

- Why Do You Need To Calculate Time Complexity?

- The Time Complexity Algorithm Cases

- Time Complexity: Different Types Of Asymptotic Notations

- How To Calculate Time Complexity?

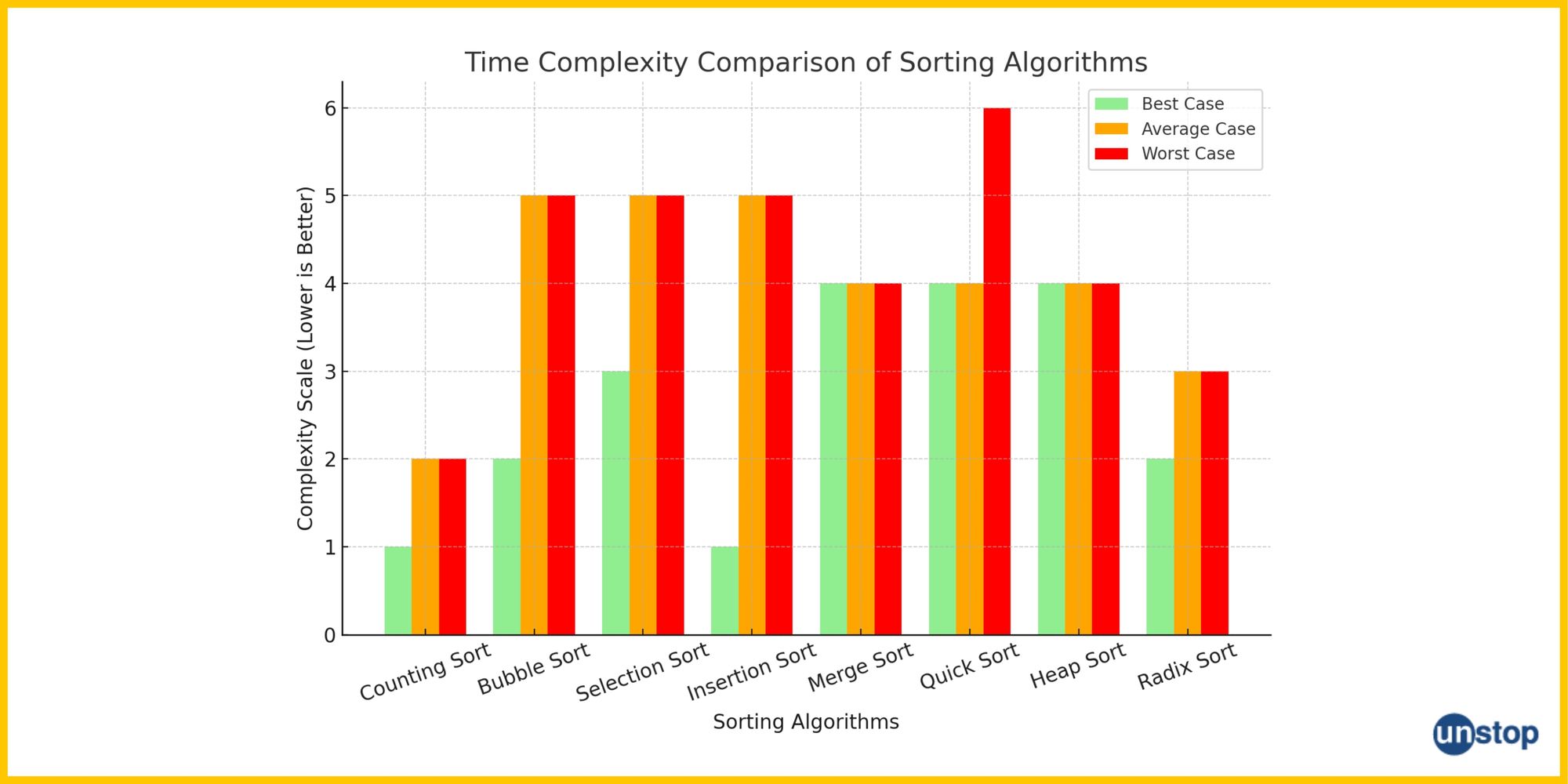

- Time Complexity Of Sorting Algorithms

- Time Complexity Of Searching Algorithms

- Writing And optimizing An algorithm

- What Is Space Complexity And Its Significance?

- Relation Between Time And Space Complexity

- Conclusion

- What Is Linear Data Structure?

- Key Characteristics Of Linear Data Structures

- What Are The Types Of Linear Data Structures?

- What Is An Array Linear Data Structure?

- What Are Linked Lists Linear Data Structure?

- What Is A Stack Linear Data Structure?

- What Is A Queue Linear Data Structure?

- Most Common Operations Performed in Linear Data Structures

- Advantages Of Linear Data Structures

- What Is Nonlinear Data Structure?

- Difference Between Linear & Non-Linear Data Structures

- Who Uses Linear Data Structures?

- Conclusion

- Frequently Asked Questions

- What is a linear data structure?

- What is a non-linear data structure?

- Difference between linear data structure and non-linear data structure

- FAQs about linear and non-linear data structures

- What Is Search?

- What Is Linear Search In Data Structure?

- What Is Linear Search Algorithm?

- Working Of Linear Search Algorithm

- Complexity Of Linear Search Algorithm In Data Structures

- Implementations Of Linear Search Algorithm In Different Programming Languages

- Real-World Applications Of Linear Search In Data Structure

- Advantages & Disadvantages Of Linear Search

- Best Practices For Using Linear Search Algorithm

- Conclusion

- Frequently Asked Questions

- What Is The Binary Search Algorithm?

- Conditions For Using Binary Search

- Steps For Implementing Binary Search

- Iterative Method For Binary Search With Implementation Examples

- Recursive Method For Binary Search

- Complexity Analysis Of Binary Search Algorithm

- Iterative Vs. Recursive Implementation Of Binary Search

- Advantages & Disadvantages Of Binary Search

- Practical Applications & Real-World Examples Of Binary Search

- Conclusion

- Frequently Asked Questions

- Understanding The Jump Search Algorithm

- How Jump Search Works?

- Code Implementation Of Jump Search Algorithm

- Time And Space Complexity Analysis

- Advantages Of Jump Search

- Disadvantages Of Jump Search

- Applications Of Jump Search

- Conclusion

- Frequently Asked Questions

- What Is Sorting In Data Structures?

- What Is Bubble Sort?

- What Is Selection Sort?

- What Is Insertion Sort?

- What Is Merge Sort?

- What Is Quick Sort?

- What Is Heap Sort?

- What Is Radix Sort?

- What Is Bucket Sort?

- What Is Counting Sort?

- What Is Shell Sort?

- Choosing The Right Sorting Algorithm

- Applications Of Sorting

- Conclusion

- Frequently Asked Questions

- Understanding Bubble Sort Algorithm

- Bubble Sort Algorithm

- Implementation Of Bubble Sort In C++

- Time And Space Complexity Analysis Of Bubble Sort Algorithm

- Advantages Of Bubble Sort Algorithm

- Disadvantages Of Bubble Sort Algorithm

- Applications of Bubble Sort Algorithms

- Conclusion

- Frequently Asked Questions

- Understanding The Merge Sort Algorithm

- Algorithm For Merge Sort

- Implementation Of Merge Sort In C++

- Time And Space Complexity Analysis Of Merge Sort

- Advantages And Disadvantages Of Merge Sort

- Applications Of Merge Sort

- Conclusion

- Frequently Asked Questions

- Understanding The Selection Sort Algorithm

- Algorithmic Approach To Selection Sort

- Implementation Of Selection Sort Algorithm

- Complexity Analysis Of Selection Sort

- Comparison Of Selection Sort With Other Sorting Algorithms

- Advantages And Disadvantages Of Selection Sort

- Application Of Selection Sort

- Conclusion

- Frequently Asked Questions

- What Is Insertion Sort Algorithm?

- How Does Insertion Sort Work? (Step-by-Step Explanation)

- Implementation of Insertion Sort in C++

- Time And Space Complexity Of Insertion Sort

- Applications Of Insertion Sort Algorithm

- Comparison With Other Sorting Algorithms

- Conclusion

- Frequently Asked Questions

- Understanding Quick Sort Algorithm

- Step-By-Step Working Of Quick Sort

- Implementation Of Quick Sort Algorithm

- Time Complexity Analysis Of Quick Sort

- Advantages Of Quick Sort Algorithm

- Disadvantages Of Quick Sort Algorithm

- Applications Of Quick Sort Algorithm

- Conclusion

- Frequently Asked Questions

- Understanding The Heap Data Structure

- Working Of Heap Sort Algorithm

- Implementation Of Heap Sort Algorithm

- Advantages Of Heap Sort

- Disadvantages Of Heap Sort

- Real-World Applications Of Heap Sort

- Conclusion

- Frequently Asked Questions

- Understanding The Counting Sort Algorithm

- Conditions For Using Counting Sort Algorithm

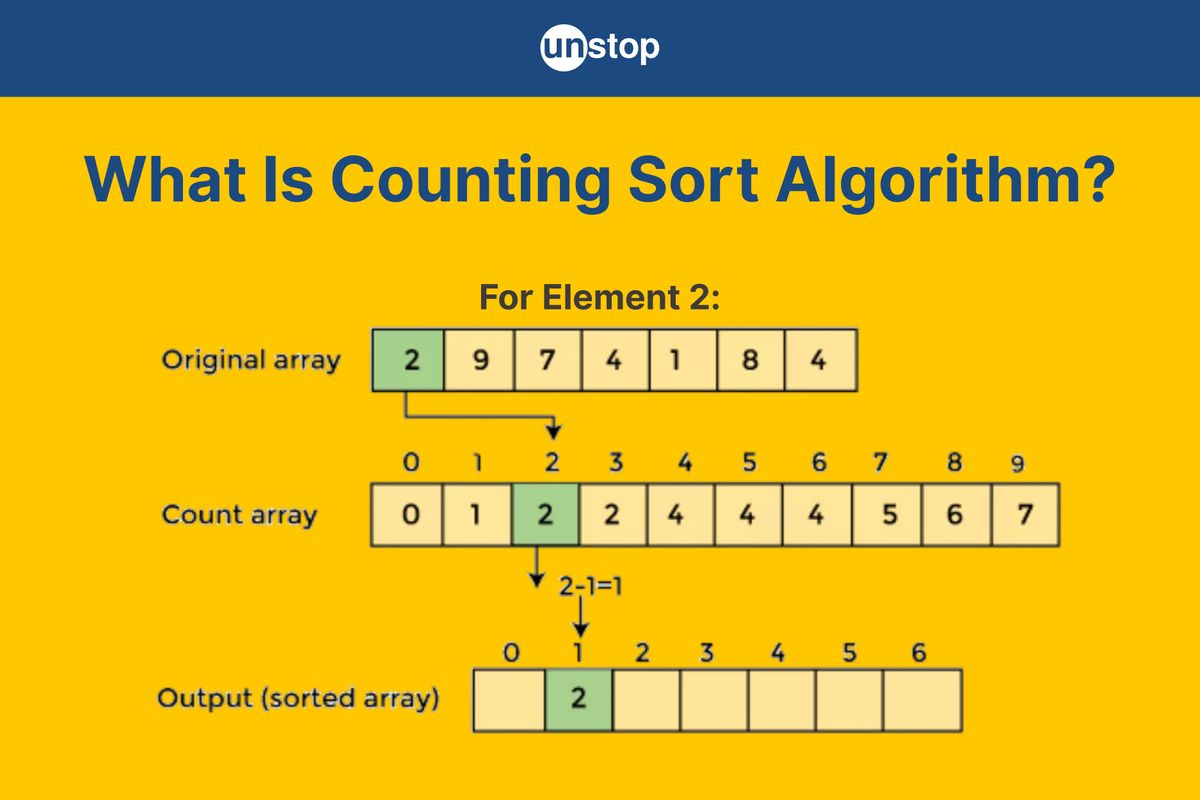

- How Counting Sort Algorithm Works?

- Implementation Of Counting Sort Algorithm

- Time And Space Complexity Analysis Of Counting Sort

- Comparison Of Counting Sort With Other Sorting Algorithms

- Advantages Of Counting Sort Algorithm

- Disadvantages Of Counting Sort Algorithm

- Applications Of Counting Sort Algorithm

- Conclusion

- Frequently Asked Questions

- Understanding Shell Sort Algorithm

- Working Of Shell Sort Algorithm

- Implementation Of Shell Sort Algorithm

- Time Complexity Analysis Of Shell Sort Algorithm

- Advantages Of Shell Sort Algorithm

- Disadvantages Of Shell Sort Algorithm

- Applications Of Shell Sort Algorithm

- Conclusion

- Frequently Asked Questions

- What Is Linked List In Data Structures?

- Types Of Linked Lists In Data Structures

- Linked List Operations In Data Structures

- Advantages And Disadvantages Of Linked Lists In Data Structures

- Comparison Of Linked Lists And Arrays

- Applications Of Linked Lists

- Conclusion

- Frequently Asked Questions

- What Is A Singly Linked List In Data Structure?

- Insertion Operations On Singly Linked Lists

- Deletion Operation On Singly Linked List

- Searching For Elements In Single Linked List

- Calculating Length Of Single Linked List

- Practical Applications Of Singly Linked Lists In Data Structure

- Common Problems With Singly Linked Lists

- Conclusion

- Frequently Asked Questions (FAQ)

- What Is A Linked List?

- Reverse A Linked List

- How To Reverse A Linked List? (Approaches)

- Recursive Approach To Reverse A Linked List

- Iterative Approach To Reverse A Linked List

- Using Stack To Reverse A Linked List

- Complexity Analysis Of Different Approaches To Reverse A Linked List

- Conclusion

- Frequently Asked Questions

- What Is A Stack In Data Structure?

- Understanding Stack Operations

- Stack Implementation In Data Structures

- Stack Implementation Using Arrays

- Stack Implementation Using Linked Lists

- Comparison: Array vs. Linked List Implementation

- Applications Of Stack In Data Structures

- Advantages And Disadvantages Of Stack Data Structure

- Conclusion

- Frequently Asked Questions

- What Is A Graph Data Structure?

- Importance Of Graph Data Structures

- Types Of Graphs In Data Structure

- Types Of Graph Algorithms

- Application Of Graphs In Data Structures

- Challenges And Complexities In Graphs

- Conclusion

- Frequently Asked Questions

- What Is Tree Data Structure?

- Terminologies Of Tree Data Structure:

- Different Types Of Tree Data Structures

- Basic Operations On Tree Data Structure

- Applications Of Tree Data Structures

- Comparison Of Trees, Graphs, And Linear Data Structures

- Advantages Of Tree Data Structure

- Disadvantages Of Tree Data Structure

- Conclusion

- Frequently Asked Questions

- What Is Dynamic Programming?

- Real-Life Example: The Jigsaw Puzzle Analogy

- How To Solve A Problem Using Dynamic Programming?

- Dynamic Programming Algorithm Techniques

- Advantages Of Dynamic Programming

- Disadvantages Of Dynamic Programming

- Applications Of Dynamic Programming

- Conclusion

- Frequently Asked Questions

- Understanding The Sliding Window Algorithm

- How Does The Sliding Window Algorithm Works?

- How To Identify Sliding Window Problems?

- Fixed-Size Sliding Window Example: Maximum Sum Subarray Of Size k

- Variable-Size Sliding Window Example: Smallest Subarray With A Given Sum

- Advantages Of Sliding Window Technique

- Disadvantages Of Sliding Window Technique

- Conclusion

- Frequently Asked Questions

- Introduction To Data Structures

- Data Structures Interview Questions: Basics

- Data Structures Interview Questions: Intermediate

- Data Structures Interview Questions: Advanced

- Conclusion

Counting Sort Algorithm In Data Structures (Working & Example)

Sorting is a fundamental operation in computer science used to organize data efficiently for searching, processing, and analysis. Counting Sort is a non-comparative sorting algorithm that sorts integers by counting the occurrences of each element and using this information to determine their positions in the sorted array. Unlike comparison-based algorithms such as QuickSort or MergeSort, Counting Sort works by leveraging the range of input values, making it highly efficient for datasets with a limited range of integers.

In this article, we will explore how the Counting Sort algorithm works, its time complexity, advantages, limitations, and practical applications.

Understanding The Counting Sort Algorithm

Sorting in data structures plays a crucial role in organizing data efficiently, and Counting Sort is one of the fastest sorting techniques when dealing with integers in a limited range. Unlike QuickSort, MergeSort, or Bubble Sort, which rely on comparing elements, Counting Sort works by counting occurrences and placing elements directly in their correct positions.

Key Characteristics Of Counting Sort

- Non-Comparative Sorting – It does not compare elements against each other; instead, it relies on counting occurrences.

- Stable Sorting Algorithm – If two elements have the same value, their relative order remains unchanged in the sorted array.

- Works Best for a Limited Range – It is efficient when sorting numbers within a known, small range (e.g., sorting student marks from 0 to 100).

- Requires Extra Space – It uses an auxiliary array to store counts, making it less efficient when dealing with large number ranges.

Working Of Counting Sort Algorithm

The algorithm follows a simple approach:

- Count occurrences – It first counts how often each number appears.

- Determine positions – It then determines where each number should be placed.

- Build the sorted array – Finally, it places the numbers in the correct order based on their counts.

Conditions For Using Counting Sort Algorithm

Counting Sort is a highly efficient sorting algorithm for specific cases, but it has certain conditions that must be met for it to work optimally.

1. Input Elements Must Be Within a Known, Small Range

- Counting Sort is best suited when the maximum value (max) in the input array is not significantly larger than the number of elements (n).

- If max is too large relative to n, the auxiliary counting array becomes excessively large, leading to high space complexity.

- Example (Good Use Case): Sorting an array of ages [21, 25, 30, 25, 21, 27] (small range: 21-30).

- Example (Bad Use Case): Sorting an array containing values between 1 and 1,000,000 when there are only 10 elements—this would waste a lot of space.

2. Only Works For Non-Negative Integer Values

- Counting Sort does not work directly with negative numbers or floating-point values.

- The algorithm relies on array indexing, which is non-applicable for negative indices.

- Possible Workarounds for Negative Numbers: Shift all numbers by adding the absolute value of the smallest negative number, then shift back after sorting.

3. Efficient When max Is Close To n

- The space complexity of Counting Sort is O(max).

- It is efficient only when max is close to n, otherwise it wastes memory.

- Best Case: n ≈ max (e.g., sorting grades out of 100).

- Worst Case: n = 10 but max = 1,000,000.

4. Not A Comparison-Based Algorithm

- Unlike Quick Sort or Merge Sort, Counting Sort does not compare elements.

- It uses counting and indexing, making it useful for special cases but unsuitable for generic sorting needs.

5. Stable Sorting Algorithm

- Counting Sort preserves the relative order of duplicate elements, making it a stable sort.

- This is particularly important when sorting records based on multiple attributes (e.g., sorting students by age while maintaining alphabetical order).

6. Requires Extra Space (O(max))

- The algorithm needs two additional arrays:

- The counting array (O(max)).

- The output array (O(n)).

- If memory is a concern, other algorithms like Quick Sort (O(1) extra space) might be preferable.

How Counting Sort Algorithm Works?

The Counting Sort algorithm follows a systematic approach to sorting numbers efficiently. Let’s break it down into five clear steps using an example.

Example Input Array

arr = [4, 2, 2, 8, 3, 3, 1]

Step 1: Find the Maximum Value In The Input Array

Before sorting, we need to determine the largest value in the array. This helps us define the range of numbers and allocate space for counting.

- Input Array: [4, 2, 2, 8, 3, 3, 1]

- Maximum Value (max) = 8

Step 2: Create A Counting Array Of Size max + 1 And Initialize It With Zeros

Now, we create an auxiliary counting array of size max + 1 (since array indices start at 0).

- The size of the counting array will be 8 + 1 = 9.

- We initialize all values to 0 since we haven’t counted any elements yet.

Initial Counting Array:

Index: 0 1 2 3 4 5 6 7 8

Count: 0 0 0 0 0 0 0 0 0

Step 3: Count Occurrences Of Each Element And Store Them In the Counting Array

Now, we traverse the input array and update the counting array by incrementing the index corresponding to each element.

- For 4, increment count[4].

- For 2, increment count[2] twice (as 2 appears twice).

- For 8, increment count[8].

- For 3, increment count[3] twice.

- For 1, increment count[1].

Updated Counting Array (after counting occurrences):

Index: 0 1 2 3 4 5 6 7 8

Count: 0 1 2 2 1 0 0 0 1

Step 4: Modify The Counting Array To Store Cumulative Sums For Positions

We now modify the counting array so that each index stores the cumulative sum of previous values.

- This tells us the position where each number should be placed in the final sorted array.

We compute cumulative sums as follows:

- count[1] = 1 (same as before).

- count[2] = count[1] + count[2] = 1 + 2 = 3

- count[3] = count[2] + count[3] = 3 + 2 = 5

- count[4] = count[3] + count[4] = 5 + 1 = 6

- count[5] = count[4] + count[5] = 6 + 0 = 6

- count[6] = count[5] + count[6] = 6 + 0 = 6

- count[7] = count[6] + count[7] = 6 + 0 = 6

- count[8] = count[7] + count[8] = 6 + 1 = 7

Modified Counting Array (Cumulative Sums):

Index: 0 1 2 3 4 5 6 7 8

Count: 0 1 3 5 6 6 6 6 7

Step 5: Construct The Sorted Output Array Using The Cumulative Positions

We now create the final sorted array by placing each element in its correct position.

- We traverse the input array from right to left to ensure stability (relative order of equal elements is preserved).

- We use the cumulative counting array to determine each element’s position.

Processing from the end of the input array:

- Element 1 → count[1] = 1 → Place 1 at index 0, decrement count[1].

- Element 3 → count[3] = 5 → Place 3 at index 4, decrement count[3].

- Element 3 → count[3] = 4 → Place 3 at index 3, decrement count[3].

- Element 8 → count[8] = 7 → Place 8 at index 6, decrement count[8].

- Element 2 → count[2] = 3 → Place 2 at index 2, decrement count[2].

- Element 2 → count[2] = 2 → Place 2 at index 1, decrement count[2].

- Element 4 → count[4] = 6 → Place 4 at index 5, decrement count[4].

Final Sorted Array:

Sorted: [1, 2, 2, 3, 3, 4, 8]

Implementation Of Counting Sort Algorithm

Here’s a step-by-step C++ implementation of Counting Sort, following the logic we discussed earlier.

Code Example:

#include

#include

#include // Include this for max_element

using namespace std;

void countingSort(vector& arr) {

// Step 1: Find the maximum element

int max = *max_element(arr.begin(), arr.end()); // Requires

// Step 2: Create and initialize count array

vector count(max + 1, 0);

// Step 3: Count occurrences of each element

for (int num : arr) {

count[num]++;

}

// Step 4: Modify count array to store cumulative sums (prefix sum)

for (int i = 1; i <= max; i++) {

count[i] += count[i - 1];

}

// Step 5: Construct the output sorted array

vector output(arr.size());

for (int i = arr.size() - 1; i >= 0; i--) {

output[count[arr[i]] - 1] = arr[i];

count[arr[i]]--; // Decrease count for stable sorting

}

// Copy sorted values back to original array

arr = output;

}

// Driver function

int main() {

vector arr = {4, 2, 2, 8, 3, 3, 1}; // Example array

cout << "Original Array: ";

for (int num : arr) cout << num << " "; // Fixed missing quote

cout << endl;

countingSort(arr);

cout << "Sorted Array: ";

for (int num : arr) cout << num << " ";

cout << endl;

return 0;

}

I2luY2x1ZGUgPGlvc3RyZWFtPgojaW5jbHVkZSA8dmVjdG9yPgojaW5jbHVkZSA8YWxnb3JpdGhtPiAgLy8gSW5jbHVkZSB0aGlzIGZvciBtYXhfZWxlbWVudAoKdXNpbmcgbmFtZXNwYWNlIHN0ZDsKCnZvaWQgY291bnRpbmdTb3J0KHZlY3RvcjxpbnQ+JiBhcnIpIHsKICAgIC8vIFN0ZXAgMTogRmluZCB0aGUgbWF4aW11bSBlbGVtZW50CiAgICBpbnQgbWF4ID0gKm1heF9lbGVtZW50KGFyci5iZWdpbigpLCBhcnIuZW5kKCkpOyAvLyBSZXF1aXJlcyA8YWxnb3JpdGhtPgoKICAgIC8vIFN0ZXAgMjogQ3JlYXRlIGFuZCBpbml0aWFsaXplIGNvdW50IGFycmF5CiAgICB2ZWN0b3I8aW50PiBjb3VudChtYXggKyAxLCAwKTsKICAgIAogICAgLy8gU3RlcCAzOiBDb3VudCBvY2N1cnJlbmNlcyBvZiBlYWNoIGVsZW1lbnQKICAgIGZvciAoaW50IG51bSA6IGFycikgewogICAgICAgIGNvdW50W251bV0rKzsKICAgIH0KCiAgICAvLyBTdGVwIDQ6IE1vZGlmeSBjb3VudCBhcnJheSB0byBzdG9yZSBjdW11bGF0aXZlIHN1bXMgKHByZWZpeCBzdW0pCiAgICBmb3IgKGludCBpID0gMTsgaSA8PSBtYXg7IGkrKykgewogICAgICAgIGNvdW50W2ldICs9IGNvdW50W2kgLSAxXTsKICAgIH0KCiAgICAvLyBTdGVwIDU6IENvbnN0cnVjdCB0aGUgb3V0cHV0IHNvcnRlZCBhcnJheQogICAgdmVjdG9yPGludD4gb3V0cHV0KGFyci5zaXplKCkpOwogICAgZm9yIChpbnQgaSA9IGFyci5zaXplKCkgLSAxOyBpID49IDA7IGktLSkgewogICAgICAgIG91dHB1dFtjb3VudFthcnJbaV1dIC0gMV0gPSBhcnJbaV07CiAgICAgICAgY291bnRbYXJyW2ldXS0tOyAgLy8gRGVjcmVhc2UgY291bnQgZm9yIHN0YWJsZSBzb3J0aW5nCiAgICB9CgogICAgLy8gQ29weSBzb3J0ZWQgdmFsdWVzIGJhY2sgdG8gb3JpZ2luYWwgYXJyYXkKICAgIGFyciA9IG91dHB1dDsKfQoKLy8gRHJpdmVyIGZ1bmN0aW9uCmludCBtYWluKCkgewogICAgdmVjdG9yPGludD4gYXJyID0gezQsIDIsIDIsIDgsIDMsIDMsIDF9OyAvLyBFeGFtcGxlIGFycmF5CgogICAgY291dCA8PCAiT3JpZ2luYWwgQXJyYXk6ICI7CiAgICBmb3IgKGludCBudW0gOiBhcnIpIGNvdXQgPDwgbnVtIDw8ICIgIjsgIC8vIEZpeGVkIG1pc3NpbmcgcXVvdGUKICAgIGNvdXQgPDwgZW5kbDsKCiAgICBjb3VudGluZ1NvcnQoYXJyKTsKCiAgICBjb3V0IDw8ICJTb3J0ZWQgQXJyYXk6ICI7CiAgICBmb3IgKGludCBudW0gOiBhcnIpIGNvdXQgPDwgbnVtIDw8ICIgIjsKICAgIGNvdXQgPDwgZW5kbDsKCiAgICByZXR1cm4gMDsKfQoK

Output:

Original Array: 4 2 2 8 3 3 1

Sorted Array: 1 2 2 3 3 4 8

Explanation:

In the above code example-

- We begin by including necessary headers: <iostream> for input/output and <vector> for using dynamic arrays.

- The countingSort() function sorts an array using the Counting Sort algorithm. It takes a vector<int>& arr as input, meaning the original array is modified directly.

- First, we find the maximum element in the array using max_element(), as this determines the size of our count array.

- We create a count array of size max + 1, initializing all elements to zero. This array will store the frequency of each number in arr.

- We iterate through arr and update count[num] to track how many times each number appears.

- Next, we modify the count array to store cumulative counts, which help in placing elements at the correct position in the sorted array.

- We create an output array of the same size as arr. Starting from the last element in arr, we place each element in its correct position using the count array, ensuring stability.

- After placing each element, we decrement its count to handle duplicates properly.

- Finally, we copy the sorted output array back into arr.

- The main() function initializes an example array, prints it, calls countingSort(), and prints the sorted result.

Time And Space Complexity Analysis Of Counting Sort

Here’s a detailed breakdown of Counting Sort’s complexity in different scenarios:

|

Complexity Type |

Notation |

Explanation |

|

Best Case (Ω) |

O(n + max) |

When the array is already sorted, Counting Sort still processes all elements and fills the counting array. |

|

Average Case (Θ) |

O(n + max) |

Counting Sort always processes n elements and max size of the counting array. |

|

Worst Case (O) |

O(n + max) |

When max is very large, creating and processing the counting array takes significant time. |

|

Space Complexity |

O(n + max) |

Additional space is required for both the counting array (O(max)) and output array (O(n)). |

Key Observations:

- Linear Time Complexity: Counting Sort runs in O(n + max), making it faster than comparison-based algorithms (like Quick Sort O(n log n)) for small-range values.

- High Space Requirement: If max is too large, the algorithm wastes memory, making it impractical for sorting large-range numbers.

- Stable Sorting: The sorting preserves the order of duplicate elements, making it useful for applications requiring stability.

Comparison Of Counting Sort With Other Sorting Algorithms

Here’s a detailed comparison of Counting Sort against other common sorting algorithms:

|

Sorting Algorithm |

Time Complexity (Best) |

Time Complexity (Average) |

Time Complexity (Worst) |

Space Complexity |

Stable? |

Comparison-Based? |

Best Use Case |

|

Counting Sort |

O(n + max) |

O(n + max) |

O(n + max) |

O(n + max) |

Yes |

No |

Small range integers |

|

O(n) |

O(n²) |

O(n²) |

O(1) |

Yes |

Yes |

Small datasets, nearly sorted lists |

|

|

O(n²) |

O(n²) |

O(n²) |

O(1) |

No |

Yes |

Small datasets, minimal swaps |

|

|

O(n) |

O(n²) |

O(n²) |

O(1) |

Yes |

Yes |

Small datasets, nearly sorted lists |

|

|

O(n log n) |

O(n log n) |

O(n log n) |

O(n) |

Yes |

Yes |

Large datasets, stable sorting required |

|

|

O(n log n) |

O(n log n) |

O(n²) (worst case) |

O(log n) (in-place) |

No |

Yes |

General-purpose fast sorting |

|

|

O(n log n) |

O(n log n) |

O(n log n) |

O(1) |

No |

Yes |

Priority queues, heaps |

|

|

Radix Sort |

O(nk) |

O(nk) |

O(nk) |

O(n + k) |

Yes |

No |

Large integers, fixed-length strings |

Key Takeaways:

- Counting Sort is very fast for small-range integer values but has a high space cost.

- Quick Sort is best for general-purpose sorting with good average-case performance.

- Merge Sort is useful when stability is required, despite using extra space.

- Bubble, Selection, and Insertion Sort are not suitable for large datasets.

- Heap Sort is efficient but not stable.

- Radix Sort is useful for sorting large numbers or strings but needs extra space.

Advantages Of Counting Sort Algorithm

Some of the advantages of counting sort algorithms are:

- Linear Time Complexity (O(n + max)) – Faster than comparison-based sorting algorithms for small-range numbers.

- Stable Sorting Algorithm – Maintains the relative order of duplicate elements, which is useful for sorting records.

- Non-Comparison Sorting – Does not rely on element comparisons like Quick Sort or Merge Sort, making it efficient for integers.

- Efficient for Small Range – Performs well when sorting numbers within a limited range, such as 0 to 1000.

- Suitable for Large Input Size – When max is small, Counting Sort is very fast, even for large n.

Disadvantages Of Counting Sort Algorithm

Some of the disadvantages of counting sort algorithms are:

- High Space Complexity (O(n + max)) – Requires additional space for the counting array, making it inefficient for large max values.

- Not Suitable for Large Ranges – If the maximum element (max) is significantly larger than n, memory usage becomes impractical.

- Limited to Integers – Cannot be used for sorting floating-point numbers or complex data types directly.

- Inefficient for Unbounded Data – If numbers have a very large range, Counting Sort becomes space-inefficient compared to Quick Sort (O(n log n)).

- Not In-Place Sorting – Requires extra memory to store the counting array and output array, unlike Quick Sort, which sorts in-place.

Applications Of Counting Sort Algorithm

Counting Sort is useful in scenarios where the range of input values is small and well-defined. Here are some key applications:

1. Sorting Numbers In A Limited Range

- Used when sorting integers within a known, small range (e.g., student roll numbers, exam scores, or age data).

- Example: Sorting exam scores between 0 to 100 for thousands of students efficiently.

2. Data Analysis And Histogram Counting

- Helps in frequency counting, such as counting occurrences of words, letters, or numbers in datasets.

- Example: Counting character occurrences in text processing or frequency distribution analysis.

3. Linear-Time Sorting In Digital Systems

- Used in digital circuits, where sorting small fixed-size integer values is needed for performance optimization.

- Example: Sorting pixel intensity values in image processing.

4. DNA Sequencing And Bioinformatics

- Helps sort and count nucleotide sequences (A, T, G, C) efficiently.

- Example: Finding the frequency of DNA bases in genetic data.

5. Electoral Systems & Voting Count

- Used in electronic voting machines (EVMs) to count and sort votes based on candidate IDs.

- Example: Sorting vote counts when candidate IDs are within a small range.

6. Radar And Sensor Data Processing

- Applied in military and weather systems where sensor data values fall within a limited numerical range.

- Example: Sorting radar frequency signals in air traffic control.

7. Network Packet Sorting

- Helps in organizing fixed-range network packet sizes in communication systems.

- Example: Efficiently managing network traffic data by sorting packets based on predefined priority values.

Conclusion

Counting Sort is a powerful non-comparison-based sorting algorithm that efficiently sorts data in linear time (O(n + max)) when the range of values is limited. It works by counting occurrences, computing cumulative frequencies, and placing elements in their correct positions while maintaining stability.

Despite its advantages—such as fast execution and stability—Counting Sort has limitations, including high space complexity and inefficiency for large ranges. It is best suited for sorting small-range integers, making it useful in data analysis, digital systems, and bioinformatics.

In summary, Counting Sort is an excellent choice for scenarios where speed is crucial, and memory constraints are not a major concern. However, for large or unbounded datasets, comparison-based sorting algorithms like Quick Sort or Merge Sort may be more practical.

Frequently Asked Questions

Q. Why is Counting Sort not a comparison-based sorting algorithm?

Counting Sort does not compare elements directly to determine order. Instead, it counts occurrences of each element and uses cumulative sums to place them in the correct position. This makes it different from algorithms like Quick Sort or Merge Sort, which rely on comparisons.

Q. Can Counting Sort be used for negative numbers?

By default, Counting Sort works with non-negative integers because it uses element values as indices in an auxiliary array. To handle negative numbers, we can shift the range by adding an offset equal to the absolute value of the smallest negative number.

Q. Why is Counting Sort not space-efficient?

Counting Sort requires an extra counting array of size max + 1, where max is the largest number in the input. If max is much larger than n (number of elements), the algorithm wastes memory storing unnecessary indices, making it inefficient for large-range data.

Q. Is Counting Sort stable? Why does it matter?

Yes, Counting Sort is a stable sorting algorithm, meaning it preserves the relative order of duplicate elements in the sorted output. This property is crucial in scenarios where elements have multiple attributes, and we need to maintain their original sequence. For example, if we are sorting a list of students by marks and two students have the same score, a stable sort ensures they remain in the same order as in the input list. This is especially useful in multi-level sorting, where a stable algorithm like Counting Sort can be used as a preliminary step before sorting by another attribute. Stability ensures data integrity and is often required in applications such as database management, lexicographic sorting, and data analytics.

Q. When should Counting Sort be preferred over Quick Sort?

Counting Sort is faster than Quick Sort (O(n log n)) when sorting integers in a small range (max is not significantly larger than n). It is ideal for fixed-range datasets such as exam scores, voting counts, and character frequency analysis. However, Quick Sort is preferable for general-purpose sorting where the range of values is large.

Suggested Reads:

- Difference Between Hashing And Encryption Decoded

- 53 Frequently Asked Linked List Interview Questions With Answers 2024

- Data Structure Interview Questions For 2024 [With Detailed Answers]

- Tree Topology | Advantages & Disadvantages In Computer Network

- Decoding Data Redundancy In DBMS| Causes, Advantages, Solutions

I’m a Computer Science graduate with a knack for creative ventures. Through content at Unstop, I am trying to simplify complex tech concepts and make them fun. When I’m not decoding tech jargon, you’ll find me indulging in great food and then burning it out at the gym.

Login to continue reading

And access exclusive content, personalized recommendations, and career-boosting opportunities.

Subscribe

to our newsletter

Comments

Add comment