- What Is Data Structure?

- Key Features Of Data Structures

- Basic Terminologies Related To Data Structures

- Types Of Data Structures

- What Are Linear Data Structures & Its Types?

- What Are Non-Linear Data Structures & Its Types?

- Importance Of Data Structure In Programming

- Basic Operations On Data Structures

- Applications Of Data Structures

- Real-Life Applications Of Data Structures

- Linear Vs. Non-linear Data Structures

- What Are Algorithms? The Difference Between Data Structures & Algorithms

- Conclusion

- Frequently Asked Questions

- What Is Asymptotic Notation?

- How Asymptotic Notation Helps In Analyzing Performance

- Types Of Asymptotic Notation

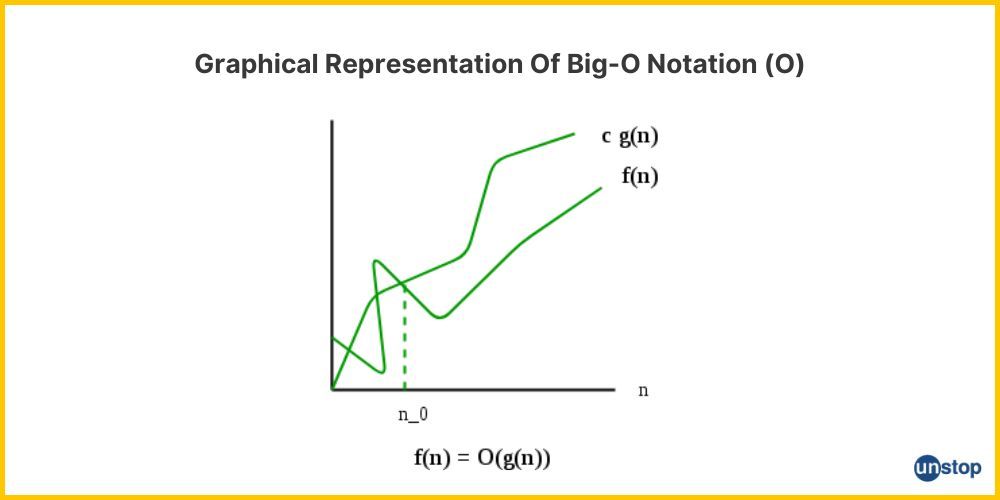

- Big-O Notation (O)

- Omega Notation (Ω)

- Theta Notation (Θ)

- Little-O Notation (o)

- Little-Omega Notation (ω)

- Summary Of Asymptotic Notations

- Real-World Applications Of Asymptotic Notation

- Conclusion

- Frequently Asked Questions

- Understanding Big O Notation

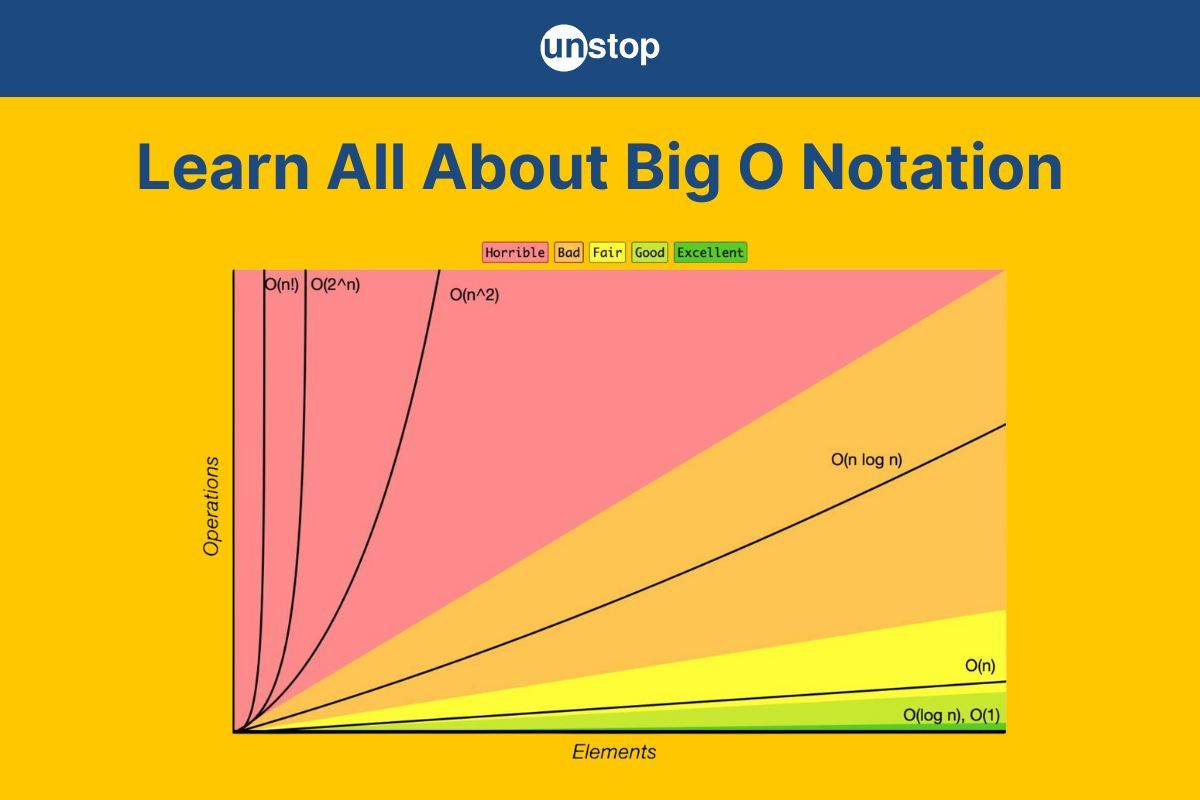

- Types Of Time Complexity

- Space Complexity In Big O Notation

- How To Determine Big O Complexity

- Best, Worst, And Average Case Complexity

- Applications Of Big O Notation

- Conclusion

- Frequently Asked Questions


Big O Notation | Complexity, Applications & More (+Examples)

When analyzing algorithms, efficiency is a key factor. We often need to determine how an algorithm's performance scales as the input size grows. This is where Big O Notation comes in—it provides a standardized way to describe the time and space complexity of algorithms.

In this article, we'll explore what Big O Notation is, why it's important, and how different complexities impact algorithm performance. We'll also look at common Big O complexities with examples to help you understand their real-world implications.

Understanding Big O Notation

Big O Notation is a mathematical concept used in computer science to describe the efficiency of algorithms in terms of time and space. It expresses an upper bound on how the execution time or memory usage of an algorithm grows as the input size increases. Instead of focusing on exact execution times, Big O focuses on the growth rate, helping us compare algorithms independently of hardware and implementation details.
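The standard textbook definition makes "upper bound on growth" precise. It says f grows no faster than g, up to a constant factor c, once n passes some threshold n₀. The worked line below it shows why constant factors and lower-order terms can be dropped.

```latex
f(n) = O(g(n)) \iff \exists\, c > 0,\ n_0 \ge 1 \ \text{such that}\ 0 \le f(n) \le c \cdot g(n) \ \text{for all}\ n \ge n_0

\text{Example: } 3n^2 + 5n + 2 \le 3n^2 + 5n^2 + 2n^2 = 10n^2 \ \text{for } n \ge 1,
\ \text{so } 3n^2 + 5n + 2 = O(n^2) \ \text{with } c = 10,\ n_0 = 1.
```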

For example, suppose an algorithm takes 5 milliseconds for an input of size n = 100 and 20 milliseconds for n = 200. Doubling the input quadrupled the running time, which is consistent with quadratic growth. Big O captures such patterns with a general expression, here O(n²), rather than exact timings. Other common growth rates include O(n) and O(log n).
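Two measurements give a rough reading of the growth rate. If the running time behaves like c · nᵏ, then k ≈ log(t₂ / t₁) / log(n₂ / n₁). The hypothetical helper `estimateExponent` below applies this formula. It assumes a single power-law term dominates, and two noisy timings can only suggest a growth rate, not prove one.

```cpp
#include <cmath>

// Estimates k in t ~ c * n^k from two (input size, time) measurements.
// Assumption: one power-law term dominates; noisy timings give noisy estimates.
double estimateExponent(double n1, double t1, double n2, double t2) {
    return std::log(t2 / t1) / std::log(n2 / n1);
}
```

Take the timings from the example: 5 ms at n = 100 and 20 ms at n = 200. Then `estimateExponent(100, 5, 200, 20)` returns 2 (up to floating-point rounding), since log(4) / log(2) = 2. That points to quadratic growth.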

How Big O Helps In Comparing Algorithms

When solving a problem, multiple algorithms may be available, but their performance can vary significantly. Big O Notation allows us to compare them by estimating how their execution time or memory usage changes as the input size increases.

For instance, let's compare two sorting algorithms:

- Bubble Sort has a time complexity of O(n²), meaning the execution time grows quadratically as input size increases.

- Merge Sort has a time complexity of O(n log n), which grows much more slowly.

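If we sort 1,000 elements, Bubble Sort needs on the order of 1,000,000 steps (1,000²). Merge Sort needs on the order of 10,000 steps (1,000 × log₂ 1,000 ≈ 1,000 × 10). These figures drop constant factors, so they are estimates rather than exact counts. Still, the gap keeps widening as n grows, which makes Merge Sort the better choice for larger datasets.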
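One way to see the difference concretely is to count the comparisons each algorithm actually makes. The sketch below does this for two cases: a plain Bubble Sort with no early-exit check, and a top-down Merge Sort. The function names are hypothetical, and comparison counts are only a proxy for running time.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Plain Bubble Sort (no early-exit check) that returns how many comparisons it made.
long long bubbleSortComparisons(std::vector<int> a) {
    long long comparisons = 0;
    for (std::size_t i = 0; i + 1 < a.size(); i++) {
        for (std::size_t j = 0; j + 1 < a.size() - i; j++) {
            comparisons++;
            if (a[j] > a[j + 1]) std::swap(a[j], a[j + 1]);
        }
    }
    return comparisons;
}

// Top-down Merge Sort of a[lo, hi) that adds its comparisons to `comparisons`.
void mergeSortCount(std::vector<int>& a, std::vector<int>& tmp,
                    std::size_t lo, std::size_t hi, long long& comparisons) {
    if (hi - lo < 2) return;                  // 0 or 1 element: already sorted
    std::size_t mid = lo + (hi - lo) / 2;
    mergeSortCount(a, tmp, lo, mid, comparisons);
    mergeSortCount(a, tmp, mid, hi, comparisons);
    std::size_t i = lo, j = mid, k = lo;
    while (i < mid && j < hi) {               // merge the two sorted halves
        comparisons++;
        tmp[k++] = (a[j] < a[i]) ? a[j++] : a[i++];
    }
    while (i < mid) tmp[k++] = a[i++];        // copy whatever is left over
    while (j < hi) tmp[k++] = a[j++];
    for (k = lo; k < hi; k++) a[k] = tmp[k];
}

long long mergeSortComparisons(std::vector<int> a) {
    std::vector<int> tmp(a.size());
    long long comparisons = 0;
    mergeSortCount(a, tmp, 0, a.size(), comparisons);
    return comparisons;
}
```

For 1,000 reverse-sorted integers, this Bubble Sort makes exactly 499,500 comparisons. That is n(n − 1)/2, and it is the same for any input of that size. Merge Sort stays below 10,000 on the same input. The constant factor of 1/2 shifts Bubble Sort's number but not the outcome: the growth rates decide which algorithm wins.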

By understanding Big O Notation, we can make informed decisions about which algorithms to use in different scenarios, ensuring optimal performance for various applications.

Types Of Time Complexity

Time complexity defines how the execution time of an algorithm changes as the input size (n) increases. Let’s explore different types of time complexities with examples to understand their behavior.

1. O(1) – Constant Time

- Definition: The execution time remains the same, regardless of the input size.

- Example: Accessing an element in an array using its index.

```cpp
#include <iostream>

int main() {
    int arr[] = {10, 20, 30, 40, 50};
    std::cout << arr[2];  // Always takes the same time, no matter the array size
}
```

2. O(log n) – Logarithmic Time

- Definition: The execution time grows logarithmically, meaning it increases slowly as input size increases.

- Example: Binary Search (which repeatedly divides the search space in half).

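```cpp
// Searches the sorted range arr[left..right]; each pass halves the range
int binarySearch(int arr[], int left, int right, int key) {
    while (left <= right) {
        int mid = left + (right - left) / 2;  // avoids overflow of left + right
        if (arr[mid] == key) return mid;
        else if (arr[mid] < key) left = mid + 1;  // discard the left half
        else right = mid - 1;                     // discard the right half
    }
    return -1;  // key not present
}
```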

3. O(n) – Linear Time

- Definition: The execution time grows directly with the input size.

- Example: Looping through an array.

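```cpp
#include <iostream>

void printArray(const int arr[], int n) {
    for (int i = 0; i < n; i++) {
        std::cout << arr[i] << " ";  // one O(1) step per element, n steps in total
    }
}
```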

4. O(n log n) – Linearithmic Time

- Definition: The execution time grows slightly faster than linear time but much slower than quadratic time.

- Example: Merge Sort, and Quick Sort in the average case.

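```cpp
#include <vector>

// Merges the sorted halves arr[left..mid] and arr[mid+1..right] in O(n) time
void merge(int arr[], int left, int mid, int right) {
    std::vector<int> tmp;
    tmp.reserve(right - left + 1);
    int i = left, j = mid + 1;
    while (i <= mid && j <= right)
        tmp.push_back(arr[i] <= arr[j] ? arr[i++] : arr[j++]);
    while (i <= mid) tmp.push_back(arr[i++]);
    while (j <= right) tmp.push_back(arr[j++]);
    for (int k = 0; k < static_cast<int>(tmp.size()); k++) arr[left + k] = tmp[k];
}

void mergeSort(int arr[], int left, int right) {
    if (left < right) {
        int mid = left + (right - left) / 2;
        mergeSort(arr, left, mid);       // sort the left half
        mergeSort(arr, mid + 1, right);  // sort the right half
        merge(arr, left, mid, right);    // Merging takes O(n)
    }
}
```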

5. O(n²) – Quadratic Time

- Definition: The execution time grows proportionally to the square of the input size.

- Example: Two nested loops over the input, the same pattern that makes Bubble Sort quadratic.

```cpp
for (int i = 0; i < n; i++) {
    for (int j = 0; j < n; j++) {
        std::cout << i << j << " ";
    }
}
```

6. O(2ⁿ) – Exponential Time

- Definition: The execution time roughly doubles with each additional input element.

- Example: Naive recursive Fibonacci. Its exact growth is about 1.618ⁿ, so O(2ⁿ) is an upper bound rather than a tight one.

```cpp
int fibonacci(int n) {
    if (n <= 1) return n;
    return fibonacci(n - 1) + fibonacci(n - 2);
}
```

7. O(n!) – Factorial Time

- Definition: The execution time grows at an extremely fast rate, making it impractical for large inputs.

- Example: Generating all permutations of a set.

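```cpp
#include <iostream>
#include <string>
#include <utility>

// Prints every permutation of s[l..r]; there are n! of them for n characters
void permute(std::string s, int l, int r) {
    if (l == r) std::cout << s << std::endl;
    else {
        for (int i = l; i <= r; i++) {
            std::swap(s[l], s[i]);  // fix s[i] at position l
            permute(s, l + 1, r);   // permute the remaining characters
            std::swap(s[l], s[i]);  // Backtrack
        }
    }
}
```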
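To see the factorial growth directly, the same backtracking can count permutations instead of printing them. `countPermutations` is a hypothetical helper written for this illustration. Printing each of the n! permutations also takes O(n) time, so `permute` does O(n · n!) work in total, which is still factorial-class growth.

```cpp
#include <string>
#include <utility>

// Same backtracking as permute(), but counts complete permutations instead of printing.
void permuteCount(std::string& s, int l, int r, long long& count) {
    if (l == r) { count++; return; }
    for (int i = l; i <= r; i++) {
        std::swap(s[l], s[i]);
        permuteCount(s, l + 1, r, count);
        std::swap(s[l], s[i]);  // backtrack
    }
}

long long countPermutations(std::string s) {
    long long count = 0;
    if (!s.empty()) permuteCount(s, 0, static_cast<int>(s.size()) - 1, count);
    return count;
}
```

`countPermutations("abcde")` returns 120 (5!). Adding a sixth character multiplies the count by 6.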

Summary Table

| Complexity | Growth Rate | Example |
|---|---|---|
| O(1) | Constant | Accessing an array element |
| O(log n) | Logarithmic | Binary Search |
| O(n) | Linear | Looping through an array |
| O(n log n) | Linearithmic | Merge Sort, Quick Sort (average case) |
| O(n²) | Quadratic | Bubble Sort, nested loops |
| O(2ⁿ) | Exponential | Recursive Fibonacci |
| O(n!) | Factorial | Generating all permutations |

Understanding these complexities helps in choosing the right algorithm for the right problem, ensuring efficient performance even for large data sets.

Space Complexity In Big O Notation

Space complexity refers to the amount of memory an algorithm requires to execute relative to the input size. It includes:

- Fixed part – Memory required for variables, constants, and program instructions.

- Variable part – Memory required for dynamic allocation (e.g., arrays, recursion stack).

Like time complexity, space complexity is expressed using Big O Notation to describe how memory usage grows with input size n.
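Even a task as small as reversing an array shows the difference in extra memory. The two hypothetical functions below produce the same result. The first needs O(n) extra memory for the copy, while the second needs only O(1) extra memory. Both treat the input itself as given, which is the usual convention when quoting auxiliary space.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// O(n) auxiliary space: builds a second vector as large as the input.
std::vector<int> reversedCopy(const std::vector<int>& v) {
    std::vector<int> out;
    out.reserve(v.size());
    for (std::size_t i = v.size(); i > 0; i--) out.push_back(v[i - 1]);
    return out;
}

// O(1) auxiliary space: only two indices, whatever the input size.
void reverseInPlace(std::vector<int>& v) {
    if (v.empty()) return;
    std::size_t i = 0, j = v.size() - 1;
    while (i < j) std::swap(v[i++], v[j--]);
}
```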

Examples of Different Space Complexities

| Space Complexity | Growth Rate | Example |
|---|---|---|
| O(1) | Constant | Swapping two variables |
| O(log n) | Logarithmic | Recursive Binary Search (call stack) |
| O(n) | Linear | Copying an array of n elements |
| O(n²) | Quadratic | Allocating an n × n matrix |
| O(n!) | Factorial | Storing every permutation of a set (n! of them) |

Understanding space complexity helps in optimizing algorithms, especially when dealing with large datasets and memory-constrained systems.
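The O(log n) space of recursive binary search comes from the call stack. Each recursive call keeps a frame alive until the search finishes, and the search range halves on every call. The sketch below is a recursive variant of the iterative `binarySearch` shown earlier. It is instrumented with a hypothetical `maxDepth` counter so the stack depth can be observed.

```cpp
#include <algorithm>
#include <vector>

// Recursive binary search on the sorted range arr[left..right].
// depth is the current recursion depth; maxDepth records the deepest call,
// i.e. how many stack frames the search needed at once.
int searchRange(const std::vector<int>& arr, int left, int right, int key,
                int depth, int& maxDepth) {
    maxDepth = std::max(maxDepth, depth);
    if (left > right) return -1;  // empty range: key not present
    int mid = left + (right - left) / 2;
    if (arr[mid] == key) return mid;
    if (arr[mid] < key) return searchRange(arr, mid + 1, right, key, depth + 1, maxDepth);
    return searchRange(arr, left, mid - 1, key, depth + 1, maxDepth);
}

int binarySearchRecursive(const std::vector<int>& arr, int key, int& maxDepth) {
    maxDepth = 0;
    return searchRange(arr, 0, static_cast<int>(arr.size()) - 1, key, 1, maxDepth);
}
```

For a sorted array of 1,024 elements, the recursion never goes deeper than 12 calls. That is log₂ 1,024 = 10 halvings, plus the first call and a final empty-range call. The iterative version needs only O(1) extra space.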

How To Determine Big O Complexity

Analyzing an algorithm’s time or space complexity involves understanding how its execution time or memory usage grows as the input size (n) increases. The steps are as follows:

1. Identify Basic Operations

Break down the algorithm into fundamental operations like comparisons, assignments, and iterations.

Example: Finding the sum of an array.

```cpp
int sumArray(int arr[], int n) {
    int sum = 0;                   // O(1) - Constant operation
    for (int i = 0; i < n; i++) {  // O(n) - Loop runs n times
        sum += arr[i];             // O(1) - Constant operation
    }
    return sum;
}
```

Complexity: The loop runs n times, so total complexity = O(n).

2. Count Loops and Nested Loops

Each loop contributes to the overall complexity.

Example: A nested loop.

```cpp
for (int i = 0; i < n; i++) {        // O(n)
    for (int j = 0; j < n; j++) {    // O(n)
        std::cout << i << j << " ";  // O(1)
    }
}
```

Complexity: Since the inner loop runs n times for each iteration of the outer loop, total complexity = O(n × n) = O(n²).
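The same counting works when code has more than one loop. Loops that run one after another add their costs, nested loops multiply them, and only the fastest-growing term is kept. In the hypothetical `countSteps` below, a single loop followed by a nested loop performs exactly n + n² basic steps, which simplifies to O(n²).

```cpp
// Counts basic steps for a single loop followed by a nested loop.
long long countSteps(int n) {
    long long steps = 0;
    for (int i = 0; i < n; i++) steps++;      // first loop: n steps
    for (int i = 0; i < n; i++)
        for (int j = 0; j < n; j++) steps++;  // nested loops: n * n steps
    return steps;                             // n + n^2 in total, i.e. O(n^2)
}
```

For n = 1,000 it returns 1,001,000, and the single loop contributes only 1,000 of those steps. That small share is why the lower-order n term is dropped.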

3. Analyze Recursion Depth

Recursion often follows patterns like O(n) (linear), O(log n) (logarithmic), or O(2ⁿ) (exponential).

Example: Recursive Fibonacci.

```cpp
int fibonacci(int n) {
    if (n <= 1) return n;
    return fibonacci(n - 1) + fibonacci(n - 2);
}
```

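Complexity: Each call with n > 1 spawns two more calls, and the recursion is about n levels deep, so the number of calls is bounded by O(2ⁿ). The tight bound is about 1.618ⁿ, but the growth is exponential either way.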
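Counting the calls makes this growth visible. `fibCounted` below is a hypothetical, instrumented copy of `fibonacci` that also increments a counter on every call. The count satisfies calls(n) = calls(n − 1) + calls(n − 2) + 1, which works out to 2·F(n + 1) − 1, where F is the Fibonacci sequence.

```cpp
// Instrumented copy of fibonacci(): same recursion, plus a call counter.
long long fibCounted(int n, long long& calls) {
    calls++;
    if (n <= 1) return n;
    return fibCounted(n - 1, calls) + fibCounted(n - 2, calls);
}

// Returns how many calls the naive recursion makes to compute fibonacci(n).
long long countCalls(int n) {
    long long calls = 0;
    fibCounted(n, calls);
    return calls;
}
```

For example, `countCalls(20)` returns 21,891 and `countCalls(30)` returns 2,692,537. Ten more levels multiply the work by roughly 123 (about 1.618¹⁰), not by 2¹⁰ = 1,024.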

Best, Worst, And Average Case Complexity

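When analyzing an algorithm, it’s important to consider how it performs on different inputs. Big O is most often used to state the worst-case complexity, while best-case and average-case complexities complete the picture. Strictly speaking, O, Ω and Θ describe upper, lower and tight bounds on a function, and each can be applied to any of the three cases. Pairing each case with one notation, as below, is a common convention rather than a rule.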

Differences Between Best, Worst, and Average Case

1. Best Case Complexity (Ω - Omega Notation)

- Definition: The minimum time an algorithm takes when given the most favorable input.

- Example: Searching for an element at the first position in an array.

- Notation Used: Ω (Omega) represents the lower bound.

Example: Linear Search

```cpp
int search(const int arr[], int n, int key) {
    for (int i = 0; i < n; i++) {
        if (arr[i] == key) return i;  // Best case: found at index 0 after one comparison (Ω(1))
    }
    return -1;  // Worst case: key absent, all n elements compared
}
```

Best case complexity: Ω(1) (if the element is at the start).

2. Worst Case Complexity (O - Big O Notation)

- Definition: The maximum time an algorithm takes for the worst possible input.

- Example: Searching for an element that is not in an array.

- Notation Used: O (Big O) represents the upper bound.

Example: Linear Search

Worst case complexity: O(n) (if the element is not found or at the last index).

3. Average Case Complexity (Θ - Theta Notation)

- Definition: The expected time an algorithm takes over all possible inputs.

- Example: Searching for an element that is equally likely to be anywhere in the array.

- Notation Used: Θ (Theta) represents a tight bound: the growth rate is bounded both above and below by the same function.

Example: Linear Search

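- Average case complexity: Suppose the key is present and equally likely to be at any of the n positions. Linear search then makes (1 + 2 + … + n)/n = (n + 1)/2 comparisons on average, which is Θ(n) because constant factors are dropped.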
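The average can be checked by brute force. Search once for every element of an array of distinct values and average the number of comparisons. `linearSearchCount` and `averageComparisons` are hypothetical helpers for this check, and they assume every position is equally likely. For n elements the result is (n + 1)/2, about half the array, which is why the average case is still linear.

```cpp
#include <vector>

// Linear search that also reports how many comparisons it made.
int linearSearchCount(const std::vector<int>& arr, int key, int& comparisons) {
    comparisons = 0;
    for (int i = 0; i < static_cast<int>(arr.size()); i++) {
        comparisons++;
        if (arr[i] == key) return i;
    }
    return -1;
}

// Average comparisons over successful searches, one search per element.
// Assumes distinct values, each position equally likely to hold the key.
double averageComparisons(const std::vector<int>& arr) {
    if (arr.empty()) return 0.0;
    long long total = 0;
    for (int value : arr) {
        int comparisons = 0;
        linearSearchCount(arr, value, comparisons);
        total += comparisons;
    }
    return static_cast<double>(total) / arr.size();
}
```

For an array of 10 distinct values, `averageComparisons` returns 5.5, that is (10 + 1)/2.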

Real-World Significance of These Cases

| Complexity Type | Meaning | Example |
|---|---|---|
| Best Case (Ω) | Ideal scenario | Finding an element at the first index in Linear Search |
| Worst Case (O) | Guarantees an upper limit | Searching for a non-existent element in an array |
| Average Case (Θ) | Most practical scenario | Searching for an element randomly positioned in an array |

Why does this matter?

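- The best case rarely reflects real inputs, so it says little about typical performance.

- The worst case gives a guarantee: the algorithm never does worse than this bound.

- The average case often reflects typical behavior, provided real inputs match the assumed distribution, which makes it a practical metric for performance analysis.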

Understanding these complexities ensures we choose the right algorithm based on expected input scenarios.

Applications Of Big O Notation

Big O notation is widely used in computer science and software development for analyzing algorithm efficiency. Here are some key applications:

1. Algorithm Analysis

- Helps evaluate the efficiency of different algorithms.

- Used to compare time and space complexity before implementation.

2. Optimizing Code Performance

- Identifies bottlenecks in programs.

- Guides developers in choosing faster and more scalable solutions.

3. Data Structure Selection

- Determines which data structure (e.g., arrays, linked lists, hash tables) is best suited for a given problem.

- Example: Hash tables offer O(1) average-case lookup (O(n) in the worst case), while balanced binary search trees offer O(log n) lookup.

4. Scalability Testing

- Predicts how algorithms will perform as input size grows.

- Essential for handling large-scale applications efficiently.

5. Competitive Programming & Interviews

- Helps in solving coding problems efficiently under time constraints.

- Frequently tested in technical interviews at top tech companies.

6. Database Query Optimization

- Used in indexing and searching techniques (e.g., a B-tree index finds a row in O(log n) steps instead of an O(n) full table scan).

- Helps in query optimization for faster data retrieval.

7. Artificial Intelligence & Machine Learning

- Evaluates computational efficiency of training models.

- Helps compare the cost of candidate methods for feature selection and model training.

8. Cryptography & Security

- Analyzes encryption algorithms to ensure security vs. computational cost.

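- Example: RSA relies on the assumption that factoring large integers is infeasible. The best known classical factoring algorithms take super-polynomial time in the key length, so longer keys quickly put attacks out of reach.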

9. Network Routing & Optimization

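- Used in shortest-path algorithms. Dijkstra’s algorithm runs in O(V²) with a simple array, or O((V + E) log V) with a binary heap. A* search is O(b^d) in the worst case, where b is the branching factor and d the solution depth.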

- Helps in designing efficient communication networks.

10. Compiler Design

- Helps optimize code compilation and execution time.

- Determines the efficiency of parsing and code generation algorithms.
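The O(V²) figure for Dijkstra's algorithm under Network Routing comes from the simplest implementation. That version uses an adjacency matrix and a linear scan for the closest unvisited vertex. The sketch below assumes non-negative edge weights and uses -1 in the matrix to mean "no edge"; both are conventions chosen for this illustration. The outer loop runs V times, and each iteration scans V vertices twice, giving O(V²).

```cpp
#include <limits>
#include <vector>

// Array-based Dijkstra on an adjacency matrix; w[u][v] < 0 means "no edge".
// Returns shortest distances from src (max long long = unreachable). O(V^2).
std::vector<long long> dijkstra(const std::vector<std::vector<int>>& w, int src) {
    const long long INF = std::numeric_limits<long long>::max();
    int n = static_cast<int>(w.size());
    std::vector<long long> dist(n, INF);
    std::vector<bool> done(n, false);
    dist[src] = 0;
    for (int iter = 0; iter < n; iter++) {
        int u = -1;
        for (int v = 0; v < n; v++)  // O(V) scan for the closest unfinished vertex
            if (!done[v] && dist[v] != INF && (u == -1 || dist[v] < dist[u])) u = v;
        if (u == -1) break;          // remaining vertices are unreachable
        done[u] = true;
        for (int v = 0; v < n; v++)  // O(V) relaxation of u's outgoing edges
            if (w[u][v] >= 0 && !done[v] && dist[u] + w[u][v] < dist[v])
                dist[v] = dist[u] + w[u][v];
    }
    return dist;
}
```

Consider a four-vertex graph with edges 0→1 (weight 4), 0→2 (1), 2→1 (2) and 1→3 (1). The distances from vertex 0 come out as 0, 3, 1 and 4. Replacing the linear scan with a binary heap gives the O((V + E) log V) variant, which is faster on sparse graphs.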

Conclusion

Big O notation is a fundamental tool in computer science that helps us evaluate the efficiency of algorithms in terms of time and space complexity. By understanding different complexity classes—ranging from constant O(1) to factorial O(n!)—we can make informed decisions when designing and optimizing algorithms.

In real-world applications, Big O notation plays a crucial role in scalability, data structure selection, competitive programming, database optimization, and even artificial intelligence. Whether we are improving search algorithms, optimizing network routing, or enhancing software performance, Big O provides a clear framework for measuring efficiency.

By mastering Big O notation, we gain the ability to write faster, more efficient code, ensuring our applications run smoothly even as data grows.

Frequently Asked Questions

Q. Why is Big O notation important in programming?

Big O notation helps analyze the efficiency of algorithms by describing their time and space complexity. It allows developers to compare different algorithms and choose the most optimal one for a given problem.

Q. What is the difference between time complexity and space complexity?

- Time Complexity measures how the runtime of an algorithm increases with input size.

- Space Complexity measures the amount of memory an algorithm uses as input size grows.

Q. What is the best time complexity an algorithm can have?

The best time complexity is O(1) (constant time), where an algorithm executes in the same amount of time regardless of input size. An example is accessing an element in an array using an index.

Q. How do we determine the Big O complexity of an algorithm?

To determine Big O complexity:

- Identify the number of operations relative to input size.

- Ignore constants and lower-order terms.

- Focus on the dominant term (highest growth rate).

Q. What is the difference between worst-case, best-case, and average-case complexity?

- Best-case: Minimum time taken by an algorithm on the most favorable input of a given size.

- Worst-case: Maximum time taken by an algorithm on the least favorable input of a given size.

- Average-case: Expected time over a stated distribution of inputs, often assumed to be uniformly random.

Q. Can an algorithm have different time complexities for different cases?

Yes, an algorithm can have different complexities based on the input patterns. For example, Quick Sort has O(n log n) average-case complexity but degrades to O(n²) in the worst case when the pivot selection is poor.
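A naive pivot rule triggers this worst case easily. The sketch below uses Lomuto partitioning with the last element as the pivot, one common textbook choice assumed here for illustration, and it counts comparisons. On already-sorted input, every partition peels off just one element, so the count is n(n − 1)/2. `lomutoPartition` and this `quickSort` signature are hypothetical.

```cpp
#include <utility>
#include <vector>

// Lomuto partition of a[lo..hi] with a[hi] as pivot; counts element comparisons.
int lomutoPartition(std::vector<int>& a, int lo, int hi, long long& comparisons) {
    int pivot = a[hi];
    int i = lo;
    for (int j = lo; j < hi; j++) {
        comparisons++;
        if (a[j] < pivot) std::swap(a[i++], a[j]);
    }
    std::swap(a[i], a[hi]);  // place the pivot in its final position
    return i;
}

void quickSort(std::vector<int>& a, int lo, int hi, long long& comparisons) {
    if (lo >= hi) return;
    int p = lomutoPartition(a, lo, hi, comparisons);
    quickSort(a, lo, p - 1, comparisons);
    quickSort(a, p + 1, hi, comparisons);
}
```

Sorting the already-sorted array 0, 1, …, 99 this way makes exactly 4,950 comparisons (100 × 99 / 2). A random or median-of-three pivot is one common way to make this pattern unlikely.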


I’m a Computer Science graduate with a knack for creative ventures. Through content at Unstop, I am trying to simplify complex tech concepts and make them fun. When I’m not decoding tech jargon, you’ll find me indulging in great food and then burning it out at the gym.
