The Only Docker Interview Questions You Need To Practice (2026)

Docker is a containerization platform that packages applications and their dependencies into images, so they can be shipped quickly and run consistently across environments, including the cloud. Prebuilt images save developers time at deployment, and containers provide isolated environments that can be scaled up or down as needed. This makes Docker a good fit for systems with complex architectures, such as microservices distributed across multiple services and servers.

Docker also gives fine-grained control over resource utilization, for example per-container CPU and memory limits, which helps keep costs down without compromising the security or performance of the workloads it runs.

Here's a look at some important Docker interview questions and their sample answers:

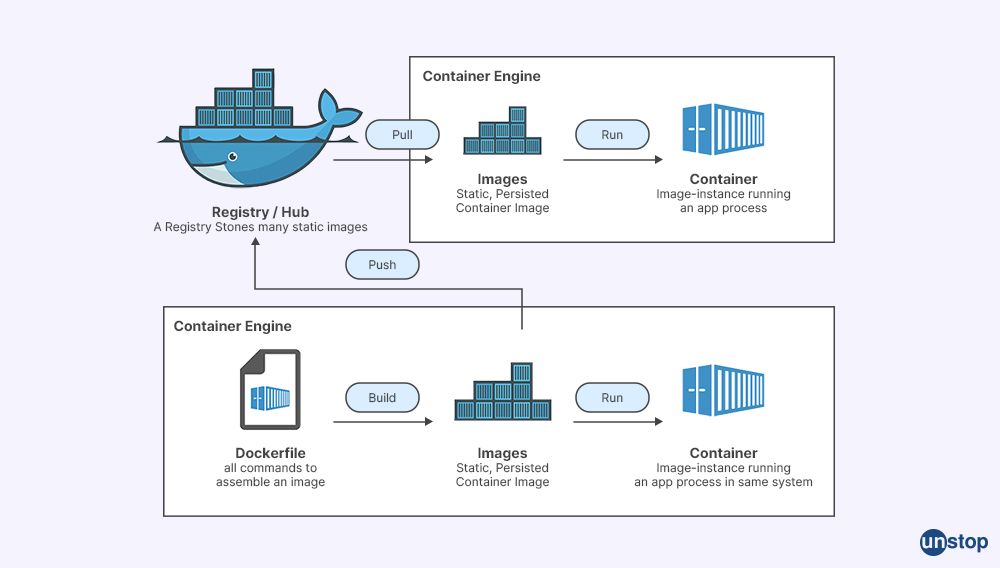

1. Explain Docker Hub

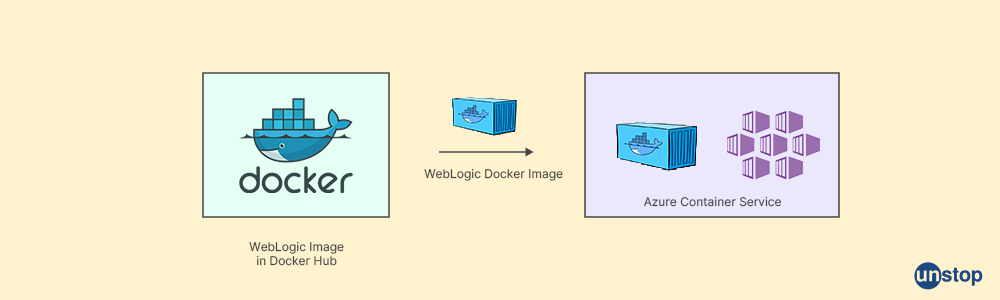

Docker Hub is an online repository where users can create, store, and share Docker images. It offers a centralized repository for the discovery, distribution, and change management of container images. With the help of Docker Hub, developers can easily access publicly available images or push their custom images to be securely shared with other team members around the world.

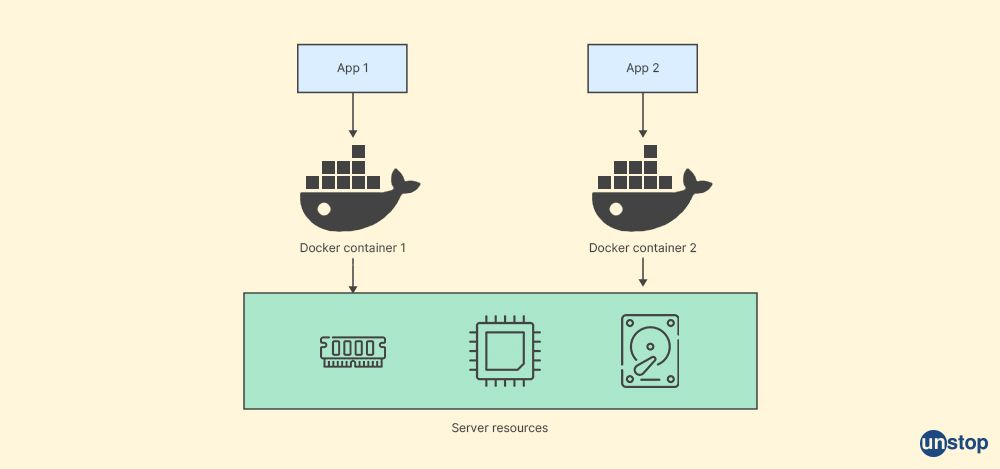

2. What is a container?

A container is a standardized unit of software that packages a program together with its dependencies so that it runs quickly and consistently across computing environments. Containers rely on operating-system-level virtualization: Linux kernel features such as control groups (cgroups) and namespaces isolate each container's processes and limit the resources they can use. Early versions of Docker were built on Linux Containers (LXC); current versions use Docker's own runtime components instead.

3. Explain a Docker container.

A Docker container wraps a piece of software in a complete file system that contains everything it needs to run: code, runtime, system tools, and libraries, that is, anything you would otherwise install on a server, such as Apache or MySQL. Because each container carries its own dependencies and runs in isolation, the application behaves the same wherever it is deployed, whether in development or in production.

4. How does the Docker Engine interact with the Docker Daemon to create and manage Docker containers?

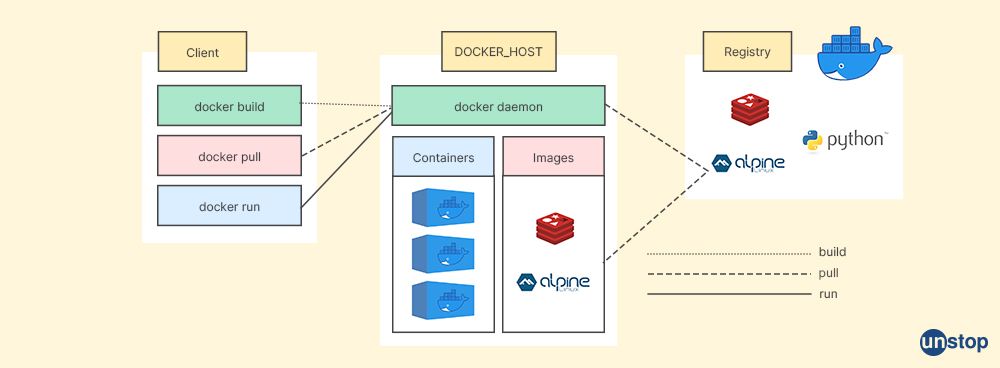

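The Docker Engine is the client-server application that drives all Docker functionality. It consists of the Docker Daemon (dockerd), a REST API, and the Docker Client (the docker CLI). The client sends requests to the daemon through the REST API, over a UNIX socket or a network interface, and the daemon does the actual work of building, running, and managing containers.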
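
To make the client-daemon conversation concrete, here is a minimal sketch that talks to the daemon's REST API directly, using only the Python standard library. It assumes a Linux host where the daemon listens on the default socket path `/var/run/docker.sock`; the class and function names are illustrative, not part of any Docker SDK.

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that runs over a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        # "localhost" only fills the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_get(path, socket_path="/var/run/docker.sock"):
    """Send GET <path> to the Docker daemon and return the decoded JSON body.

    The default socket path is an assumption about the host setup.
    """
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()
```

On a machine with a running daemon, `daemon_get("/version")` should return the engine's version information and `daemon_get("/containers/json")` the list of running containers, which is roughly what `docker version` and `docker ps` request behind the scenes.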

5. What is a Public Registry, and how can it be used to store, share, or deploy Docker images?

A Public Registry, in the context of Docker, is a repository of images that anyone can download and use from any internet-connected computer without building them locally first. Registries also support image versioning through tags, so users can store multiple versions of an application in one place and share them publicly or, with private repositories, only within a team or organization.

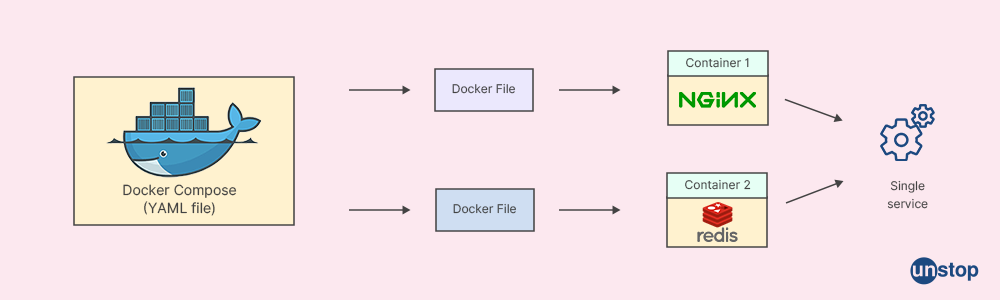

6. How do you use Docker Compose to automate the deployment of multiple services across different virtual machines?

Docker Compose automates multi-service deployments through a YAML file (by default docker-compose.yml) that describes each service, along with settings such as exposed ports, volumes, and the networks that connect the services. On its own, Compose starts these services on a single Docker host. To spread them across several virtual machines, the same Compose file can be deployed to a Docker Swarm cluster with 'docker stack deploy'. Compose also lets developers scale a service up or down to the number of instances the application needs at a given time, which can save money while keeping deployments flexible.
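
As a rough illustration, the sketch below builds the two commands involved: one that starts (and optionally scales) the services on a single host, and one that hands the same file to a Swarm cluster. It assumes the Compose v2 plugin (`docker compose`); the function names, file name, and stack name are hypothetical.

```python
def compose_up_command(compose_file, scale=None):
    """Build `docker compose up` for a single host, with optional scaling."""
    cmd = ["docker", "compose", "-f", compose_file, "up", "-d"]
    # --scale SERVICE=NUM sets how many containers to run for a service.
    for service, replicas in sorted((scale or {}).items()):
        cmd += ["--scale", f"{service}={replicas}"]
    return cmd


def stack_deploy_command(compose_file, stack_name):
    """Build `docker stack deploy`, which runs the services on a Swarm cluster."""
    return ["docker", "stack", "deploy", "-c", compose_file, stack_name]


if __name__ == "__main__":
    print(" ".join(compose_up_command("docker-compose.yml", {"web": 3})))
    print(" ".join(stack_deploy_command("docker-compose.yml", "shop")))
```

Each list can be passed to `subprocess.run(cmd, check=True)` on a host where Docker is installed. Keeping command construction separate from execution makes the logic easy to check without a running daemon.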

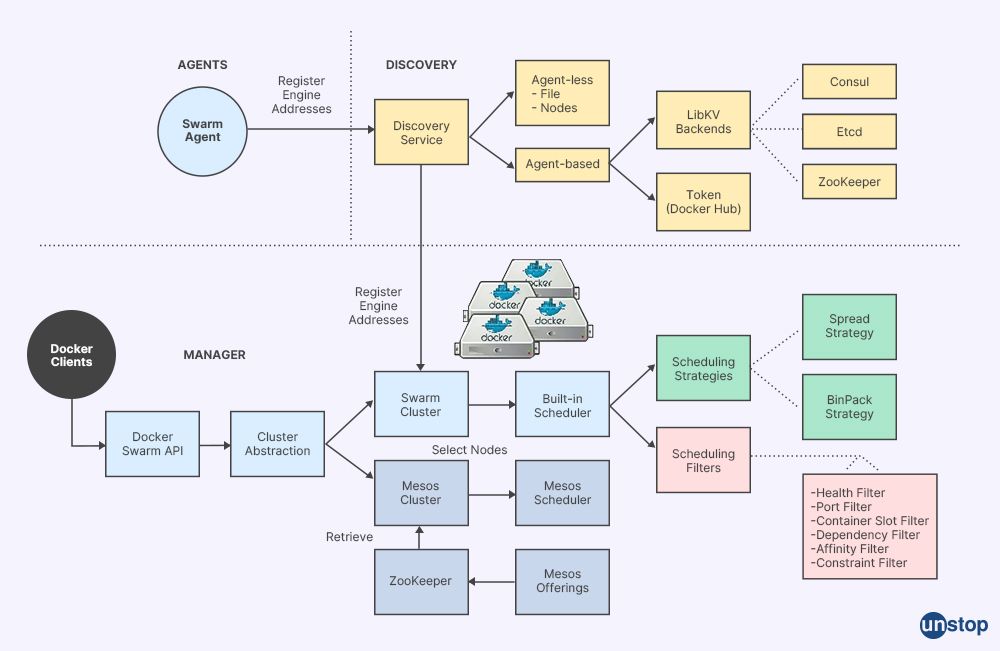

7. What approaches are available for managing clusters of nodes in a production environment with Docker Swarm?

Several approaches exist for managing clusters of nodes in production environments that must be highly available. With Docker Swarm itself, manager nodes maintain the cluster state and schedule services onto worker nodes. Popular alternative orchestration platforms include Kubernetes and Amazon ECS. Each offers different features, scalability, and performance, so it's important to understand their differences before deciding which is best for the application cluster's needs. Many cloud hosting providers also support specific orchestration platforms, which gives a business more options for finding the right solution for its requirements.

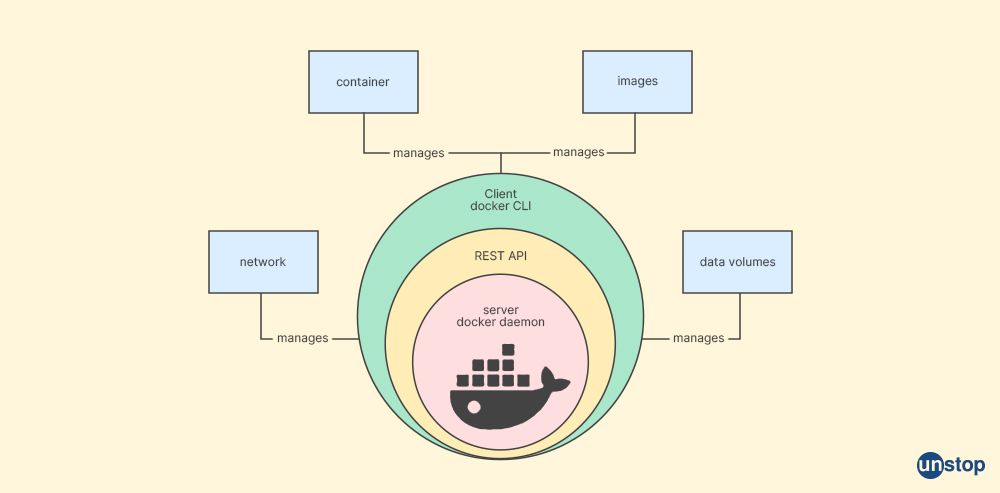

8. Explain Docker components.

There are three Docker components, namely:

Docker Client: The Docker client is a CLI (command-line interface) tool that lets users manage and interact with the containers running on a host. It sends commands to the Docker Daemon, which handles the heavy lifting of building, running, and distributing containerized apps.

Docker Host: A physical computer or virtual machine that runs the Docker Daemon and hosts one or more containers. The containers share the host's operating system kernel and run as isolated processes.

Docker Registry: A central repository for storing pre-built images developers use when creating new custom application environments. Developers can upload their images into private repositories and access public ones from other sources, such as open-source projects.

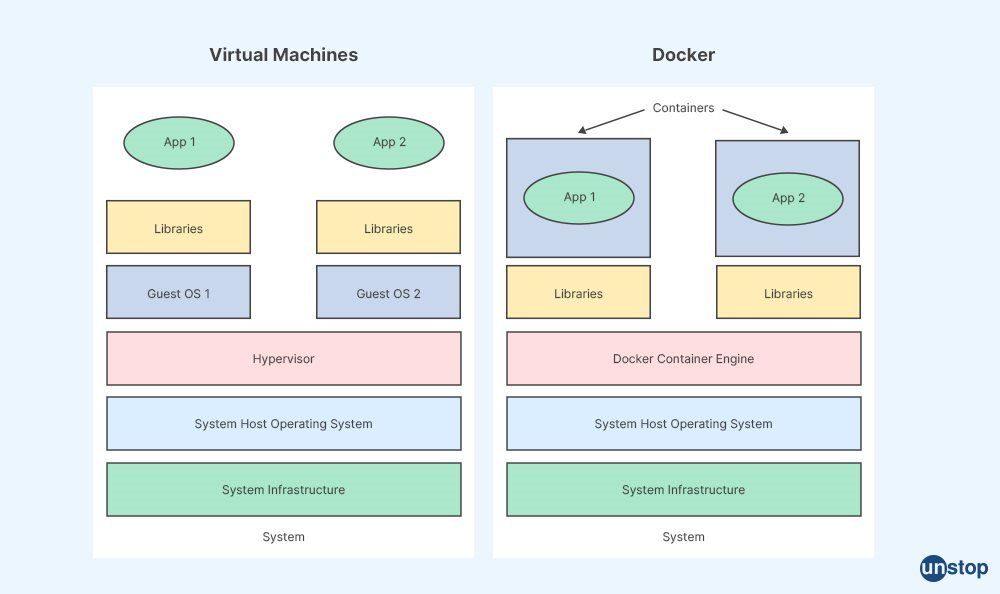

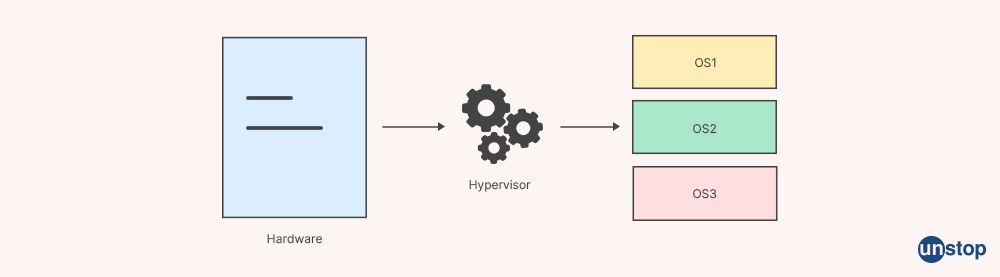

9. What is the functionality of a hypervisor in Docker?

A hypervisor provides hardware-level virtualization: it sits between the physical hardware and virtual machines (VMs), letting several VMs, each with its own guest operating system, share one physical server. This pools compute resources across workloads such as web hosting or dev/test environments and helps control server provisioning costs. Docker containers do not need a hypervisor on Linux, because they share the host kernel instead of booting a guest OS, which makes them lighter than traditional VMs. A hypervisor comes into play when Docker runs inside VMs, or with Docker Desktop on Windows and macOS, which runs Linux containers inside a lightweight VM.

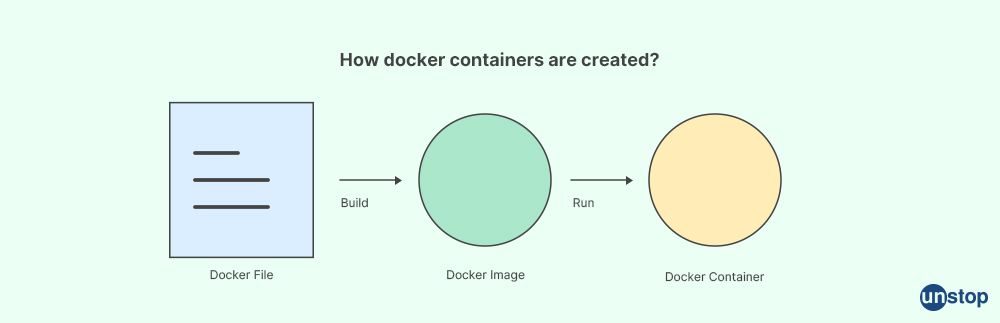

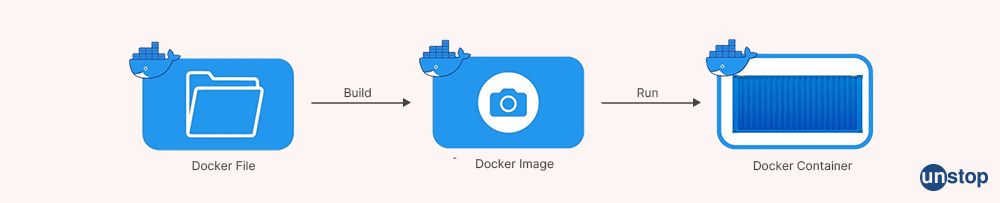

10. Explain the Dockerfile.

A Dockerfile is a text document that contains all the instructions required to build an image. It is primarily used for building and shipping applications in containers. The Docker Daemon reads the instructions in order and assembles the image layer by layer. The instructions can copy files into the image, declare the ports the container listens on, set environment variables, define the command to run at startup, and so on.
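
To show what those instructions look like in practice, the following sketch renders a small Dockerfile from Python values. The base image, paths, and command are placeholder assumptions chosen for illustration; only the instruction keywords (FROM, WORKDIR, ENV, COPY, RUN, EXPOSE, CMD) come from Docker itself.

```python
import json


def render_dockerfile(base_image, workdir, env, copy_pairs, run_steps, port, cmd):
    """Return Dockerfile text; each line maps to one build instruction."""
    lines = [f"FROM {base_image}", f"WORKDIR {workdir}"]
    lines += [f"ENV {key}={value}" for key, value in env.items()]
    lines += [f"COPY {src} {dst}" for src, dst in copy_pairs]
    lines += [f"RUN {step}" for step in run_steps]
    lines.append(f"EXPOSE {port}")
    # The exec form of CMD is written as a JSON array of strings.
    lines.append("CMD " + json.dumps(cmd))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile(
        base_image="python:3.12-slim",          # hypothetical base image
        workdir="/app",
        env={"APP_ENV": "production"},
        copy_pairs=[("requirements.txt", "."), (".", ".")],
        run_steps=["pip install -r requirements.txt"],
        port=8000,
        cmd=["python", "app.py"],
    ))
```

Saving the output as `Dockerfile` and running `docker build -t myapp .` in the same directory would turn it into an image, assuming the referenced files exist.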

11. What network drivers are available for creating a virtual Docker host?

Docker ships with several network drivers, which enable communication between containers running on the same host or across multiple hosts in a Docker Swarm cluster. The main ones are bridge (the default, for containers on a single host), host, overlay (for multi-host Swarm networks), macvlan, and ipvlan.

12. How does the host machine affect the operation of Docker containers?

The host machine affects the operation of Docker containers by providing the underlying resources needed to run them, such as CPU, memory, and disk space. It also plays an important role in networking operations by routing traffic between containers on different hosts or networks, if necessary.

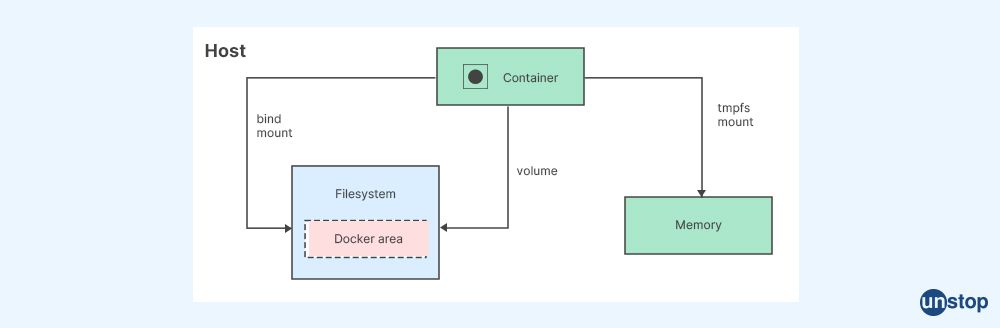

13. What is a Docker Volume, and how can it manage data in multi-container Docker applications?

A Docker Volume is a storage mechanism, managed by Docker, for persisting and sharing data in multi-container applications. A volume lives on the host (or on external storage, through a volume driver) outside any container's writable layer, so its data survives when a container is removed. The same volume can be mounted into several containers at once, which makes it well suited to sharing files between services; sharing across machines requires a volume driver backed by network storage.
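
A short sketch of two containers sharing one named volume may help here. It only builds the CLI commands; the volume, container, and image names are made up for the example.

```python
def volume_create_command(name):
    """Build the command that creates a named volume."""
    return ["docker", "volume", "create", name]


def run_with_volume(image, container_name, volume, mount_path, read_only=False):
    """Build `docker run` with a named volume mounted at mount_path."""
    mount = f"type=volume,source={volume},target={mount_path}"
    if read_only:
        mount += ",readonly"
    return ["docker", "run", "-d", "--name", container_name, "--mount", mount, image]


if __name__ == "__main__":
    commands = [
        volume_create_command("shared_data"),
        # A writer and a read-only reader mount the same volume.
        run_with_volume("example/writer", "writer", "shared_data", "/data"),
        run_with_volume("example/reader", "reader", "shared_data", "/data", read_only=True),
    ]
    for cmd in commands:
        print(" ".join(cmd))
```

Because the data lives in the volume rather than in either container, removing and recreating `writer` or `reader` leaves the files in `shared_data` intact.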

14. How do you create and maintain your private registry using Docker technology?

Listed below are the steps to create and maintain your private registry using Docker technology:

i) First, set up a local registry server with basic authentication enabled to secure it from unauthorized access.

ii) Use the Docker command-line interface (CLI) to tag images and push them into this registry.

iii) Configure advanced settings for the registry, such as HTTPS support.

iv) Apart from the tools available in Docker, one can use third-party services such as Amazon ECR or Harbor for additional features and management capabilities.

v) To maintain the private registry, monitor its performance regularly, manage access controls and user permissions, and apply updates.
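
The first two steps can be sketched as a sequence of CLI commands. This is a bare-bones local setup that assumes the official `registry:2` image and port 5000; it leaves out the authentication and HTTPS configuration mentioned above, and the image name `myapp` is a placeholder.

```python
def registry_image_ref(registry, repository, tag="latest"):
    """Return an image reference that points at a specific registry."""
    return f"{registry}/{repository}:{tag}"


def private_registry_commands(repository, tag, registry_host="localhost", port=5000):
    """Build the commands that start a local registry and push one image to it."""
    registry = f"{registry_host}:{port}"
    target = registry_image_ref(registry, repository, tag)
    return [
        # Step i: run the registry server as a container.
        ["docker", "run", "-d", "-p", f"{port}:5000", "--restart=always",
         "--name", "registry", "registry:2"],
        # Step ii: retag the local image so its name includes the registry...
        ["docker", "tag", f"{repository}:{tag}", target],
        # ...and push it there.
        ["docker", "push", target],
    ]


if __name__ == "__main__":
    for cmd in private_registry_commands("myapp", "1.0"):
        print(" ".join(cmd))
```

Other machines would then pull the image by the same registry-qualified name, for example `docker pull localhost:5000/myapp:1.0` on the registry host itself.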

15. What are some advantages of running applications inside virtual environments with Docker containers compared to traditional methods?

Some advantages of running applications inside virtualized environments with Docker containers, compared to traditional methods, include:

- faster deployment times,

- better resource utilization, as each container can be allocated a specific amount of RAM and CPU when needed instead of over-provisioning,

- isolation between components, which helps improve security, and

- consistency across development, staging, and production environments, which makes rollouts easier.

16. How do Docker APIs manage container deployments and related tasks?

The Docker API, which the 'docker' CLI uses under the hood, manages container deployments through operations such as:

- 'run' or 'create' for creating new containers from images;

- 'start' and 'stop' for starting or stopping existing ones;

- 'logs' for viewing the output of a particular container;

- 'inspect' for returning detailed information about a single object in the system.

Beyond containers, the Docker API gives access to data on every part of the Docker platform, including images, networks, and volumes.

17. How does version control help manage changes to your Docker environment?

Version control helps manage changes to your Docker setup by keeping Dockerfiles, Compose files, and other configuration in a repository. Users can create branches off the main line, make modifications there, and merge them back only after the agreed review process has been followed. Pairing this with an automated CI/CD (Continuous Integration and Continuous Deployment) pipeline means every change is built and tested before it reaches production, which avoids disruptions to running systems and makes it easy to roll back to a known good version.

This approach ensures that an appropriate level of quality assurance is achieved at each phase.

18. What is a base image in Docker, and how can it be used?

A base image is the foundational layer of a Docker image: it is the image named in the Dockerfile's FROM instruction, and every later instruction adds a layer on top of it. Starting from a base image simplifies building custom images because it provides a preconfigured foundation, such as a minimal Linux distribution or a language runtime.

This generally lets developers assemble images containing all required components faster and more easily than starting from nothing, although the gain also depends on the complexity of the application being built.

19. How do you create instances of Docker images?

An instance of a Docker image, that is, a container, is created by running the 'docker run' command followed by parameters such as network settings, resource (memory and CPU) limits, port mappings, and environment variables. The image's Dockerfile supplies defaults such as the startup command and environment variables; options passed to 'docker run' add to or override them. The container is created and started when the command is executed.
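
The sketch below assembles such a 'docker run' invocation from Python arguments, to show where each kind of setting ends up on the command line. The helper name and the example values are hypothetical; the flags themselves (`-d`, `--name`, `-p`, `-e`, `--memory`, `--cpus`, `--network`) are standard `docker run` options.

```python
def docker_run_command(image, name=None, ports=None, env=None,
                       memory=None, cpus=None, network=None):
    """Return the argument list for a detached `docker run` call."""
    cmd = ["docker", "run", "-d"]
    if name:
        cmd += ["--name", name]
    # Publish container ports on the host as HOST:CONTAINER.
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]
    # Environment variables visible to the process inside the container.
    for key, value in (env or {}).items():
        cmd += ["-e", f"{key}={value}"]
    if memory:
        cmd += ["--memory", memory]       # for example "256m"
    if cpus:
        cmd += ["--cpus", str(cpus)]      # for example 0.5
    if network:
        cmd += ["--network", network]
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    print(" ".join(docker_run_command(
        "nginx:alpine", name="web", ports={8080: 80},
        env={"MODE": "prod"}, memory="256m", cpus=0.5,
    )))
```

Running the same command twice with different `--name` values yields two independent containers from one image, which is what "creating instances" means in practice.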

20. Describe what happens when working with Docker namespaces.

Namespaces are the Linux kernel feature that Docker uses to isolate containers. Each container gets its own set of namespaces (for process IDs, networking, mount points, hostname, inter-process communication, and optionally user IDs), so its processes cannot see or interfere with processes in other containers or on the host. Resource contention is handled separately, through control groups (cgroups). This isolation also keeps one container's data from being accidentally exposed to another, and it lets teams run many workloads side by side on one machine.

21. Explain what "worker nodes" and "inactive nodes" mean for running applications within Docker hosts pools?

In a Docker Swarm cluster, worker nodes are the machines, physical or virtual, that run the containers (tasks) assigned to them by manager nodes. Inactive nodes are still part of the cluster but are not currently receiving work, for example because they have been paused or drained for maintenance or because there is no demand for their capacity. They stay idle until they are set back to active, at which point the scheduler can assign tasks to them again.
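
One way to picture the active and idle states, assuming the cluster is a Docker Swarm, is through node availability. The sketch below builds the `docker node update --availability` command, whose accepted values are `active`, `pause`, and `drain`; the node name is a placeholder.

```python
VALID_AVAILABILITY = ("active", "pause", "drain")


def node_availability_command(node, availability):
    """Build the command that changes whether a node receives new tasks."""
    if availability not in VALID_AVAILABILITY:
        raise ValueError(f"availability must be one of {VALID_AVAILABILITY}")
    return ["docker", "node", "update", "--availability", availability, node]


if __name__ == "__main__":
    # Take worker-2 out of rotation, then bring it back when demand returns.
    print(" ".join(node_availability_command("worker-2", "drain")))
    print(" ".join(node_availability_command("worker-2", "active")))
```

A drained node stays in the cluster but has its tasks moved elsewhere, while a paused node keeps its current tasks and simply receives no new ones.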

22. What advantages does using a development pipeline bring to building, testing, and deploying applications on container orchestration platforms such as Kubernetes or Mesos clusters?

Development pipelines reduce the manual intervention required at each step of building, testing, and deploying containerized applications by running automated checks and deployments instead. This improves reliability and accuracy throughout the process and often shortens lead times. Pipelines also help with scale, since changes to large projects go through the same repeatable steps.

For instance, when the number of containers needs to increase or decrease, the related actions can be triggered automatically, so no one has to do the work by hand or wait for queues to build up before anything happens. This streamlines team workflows and, because every change passes the same automated checks, helps keep them secure.

23. How would you create an application deployment powered by a docker-compose.yml file?

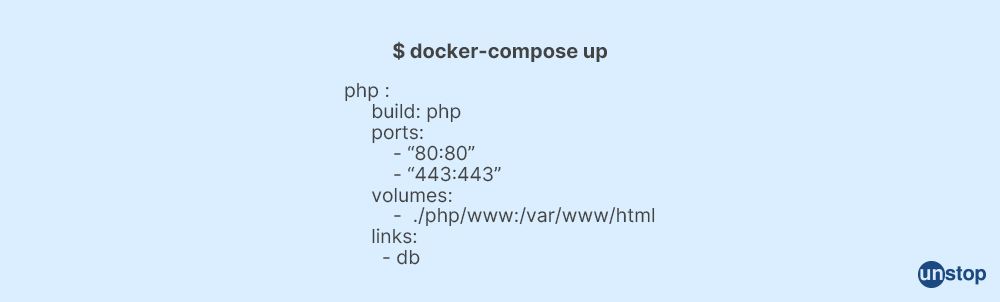

Creating an application deployment from a docker-compose.yml file involves declaring the services, ports, volumes, networks, and dependencies in that file and then running the Compose command against it. Typically this is 'docker-compose up', with flags that control how the services start (for example, '-d' to run them in the background). Compose then creates the networks and volumes, starts the containers with the given environment parameters, and the application goes live.
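
For a sense of what goes into the file, this sketch renders a two-service docker-compose.yml from a Python dictionary. The service names, images, and password are placeholders, and the tiny emitter handles only what this example needs (nested mappings and lists of plain values); it is not a general YAML library.

```python
import json


def to_yaml_lines(value, indent=0):
    """Render nested dicts and lists of scalars as YAML lines."""
    pad = "  " * indent
    lines = []
    if isinstance(value, dict):
        for key, item in value.items():
            if isinstance(item, (dict, list)):
                lines.append(f"{pad}{key}:")
                lines.extend(to_yaml_lines(item, indent + 1))
            else:
                # JSON scalars (quoted strings, numbers) are valid YAML scalars.
                lines.append(f"{pad}{key}: {json.dumps(item)}")
    elif isinstance(value, list):
        for item in value:
            lines.append(f"{pad}- {json.dumps(item)}")
    return lines


COMPOSE = {
    "services": {
        "web": {"image": "nginx:alpine", "ports": ["8080:80"], "depends_on": ["db"]},
        "db": {
            "image": "postgres:16",
            "environment": {"POSTGRES_PASSWORD": "example"},
            "volumes": ["db_data:/var/lib/postgresql/data"],
        },
    },
    # An empty mapping declares a named volume with default settings.
    "volumes": {"db_data": {}},
}

if __name__ == "__main__":
    print("\n".join(to_yaml_lines(COMPOSE)))
```

Writing the output to docker-compose.yml and running 'docker-compose up -d' in the same directory would start both services, with `web` waiting for `db` to be started first.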

24. What benefits come from using a distributed Docker service provided by toolsets like Swarm or DC/OS Marathon, which are built specifically to work alongside containers?

The main advantage of a distributed Docker service is that it lets users rely on toolsets designed for containerized applications to scale out their workloads across multiple nodes at once, rather than dedicating a single machine to each purpose. Capacity can follow demand, so users don't need to over-provision resources for peak times or cap performance because of budget constraints, which saves costs associated with traditional deployments.

25. What is the default logging driver for Docker?

The default logging driver for Docker is json-file, but users can select others, such as journald or syslog, if needed.
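
As an illustration, the daemon-wide logging driver is usually set in the daemon configuration file (commonly `/etc/docker/daemon.json` on Linux). The sketch below generates such a fragment; the rotation values are arbitrary examples, and the helper name is hypothetical.

```python
import json


def daemon_logging_config(driver="json-file", max_size="10m", max_file=3):
    """Return daemon.json text that selects a logging driver."""
    config = {"log-driver": driver}
    if driver == "json-file":
        # Rotation options for json-file; values must be strings in daemon.json.
        config["log-opts"] = {"max-size": max_size, "max-file": str(max_file)}
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(daemon_logging_config())
    print(daemon_logging_config(driver="journald"))
```

A single container can also override the daemon default at start time with `docker run --log-driver journald ...`.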

26. What is the base server operating system underlying a Docker environment?

Docker containers share the kernel of the host operating system, so the base OS underlying a Docker environment is whatever the host runs; typically this is a Linux distribution such as Ubuntu, CentOS, or Red Hat Enterprise Linux (RHEL). On Windows and macOS, Docker Desktop runs Linux containers inside a lightweight Linux virtual machine.

27. What is the basis of container networking?

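Container networking is based on communication between containers and hosts in a virtualized environment. It rests on two primary components:

1) virtual Ethernet devices (veth pairs), which connect each container's network namespace to the host, and

2) Docker's software-defined networking (SDN) layer, which uses network drivers such as bridge and overlay to link those devices into networks.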

28. What is the root directory for Docker?

The root directory for Docker is /var/lib/docker on most Linux-based operating systems. It can be changed to a different location, for example through the 'data-root' setting in the daemon configuration file, depending on your system setup, storage requirements, and security needs.

29. What are the benefits of using Code repositories for Docker images?

Using Code repositories for Docker images offers enhanced security, scalability, and extensibility compared to traditional methods. By creating a library or repository of pre-defined configuration files, applications can be standardized across multiple machines, and operations are less prone to errors caused by manual configuration changes made over time.

30. Why is it important to monitor Docker stats?

Monitoring Docker stats, such as CPU and memory usage and per-container network traffic, lets us keep track of our containerized workloads. This, in turn, helps optimize performance: based on actual data collected from production environments, we can take corrective measures such as scaling up resources, or carry out capacity planning.
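
A small sketch of acting on those numbers: the function below reads the JSON lines produced by `docker stats --no-stream --format "{{json .}}"` and flags containers above a threshold. The field names `Name`, `CPUPerc`, and `MemPerc` are assumed to match that output, and the sample data is invented for illustration.

```python
import json


def parse_percent(text):
    """Convert a string such as '85.20%' to the float 85.2."""
    return float(text.strip().rstrip("%"))


def busy_containers(stats_output, cpu_limit=80.0, mem_limit=80.0):
    """Return (name, cpu, mem) for containers above either limit."""
    flagged = []
    for line in stats_output.splitlines():
        if not line.strip():
            continue
        row = json.loads(line)
        cpu = parse_percent(row["CPUPerc"])
        mem = parse_percent(row["MemPerc"])
        if cpu > cpu_limit or mem > mem_limit:
            flagged.append((row["Name"], cpu, mem))
    return flagged


# Invented sample, shaped like one JSON object per container.
SAMPLE = "\n".join([
    '{"Name": "web", "CPUPerc": "91.50%", "MemPerc": "40.00%"}',
    '{"Name": "db", "CPUPerc": "12.25%", "MemPerc": "35.10%"}',
])

if __name__ == "__main__":
    for name, cpu, mem in busy_containers(SAMPLE):
        print(f"{name}: cpu={cpu}% mem={mem}%")
```

In a real setup the flagged list could feed an alert or a scaling decision; here it simply shows how raw stats become something to act on.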

31. What is the purpose of Docker object labels, and how do we implement them?

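Object labels attach metadata, as key-value pairs, to Docker objects such as images, containers, volumes, networks, and Swarm nodes and services. Developers and administrators use them to organize objects, record information such as ownership or environment, and select objects for filtering or scheduling. Labels do not enforce access control themselves, though other tools can read them when applying policies. To implement them, add a LABEL instruction to a Dockerfile or pass the '--label' flag to commands such as 'docker run', then query by label with the '--filter' flag of commands such as 'docker ps'.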
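
Here is a brief sketch of applying labels when starting a container and then selecting containers by label. The label keys and values are examples only.

```python
def run_with_labels(image, labels):
    """Build `docker run` with one --label flag per key-value pair."""
    cmd = ["docker", "run", "-d"]
    for key, value in labels.items():
        cmd += ["--label", f"{key}={value}"]
    cmd.append(image)
    return cmd


def ps_by_label(key, value=None):
    """Build `docker ps` filtered by label key, or by key and value."""
    selector = key if value is None else f"{key}={value}"
    return ["docker", "ps", "--filter", f"label={selector}"]


if __name__ == "__main__":
    print(" ".join(run_with_labels("nginx:alpine", {"env": "prod", "team": "payments"})))
    print(" ".join(ps_by_label("env", "prod")))
```

The same `label=` filter works with other listing commands, such as `docker images` and `docker volume ls`, for objects that carry labels.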

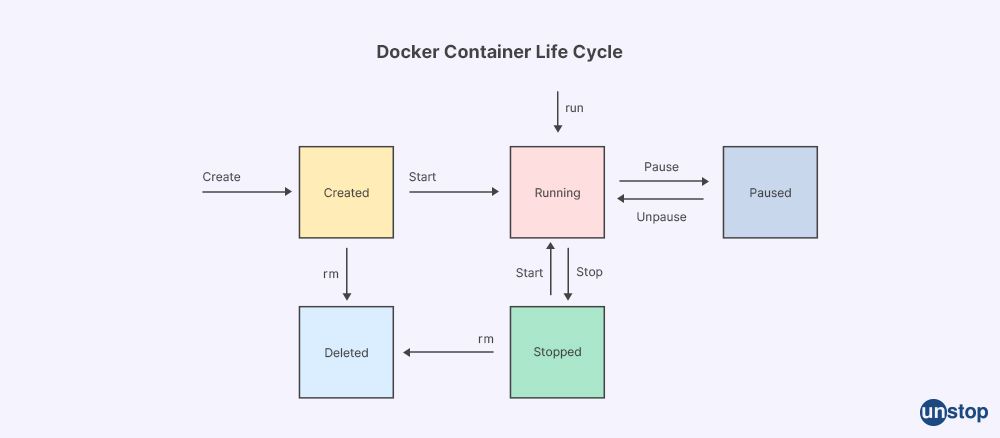

32. When should the 'docker pause' command be used in our workflow?

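'docker pause' suspends all processes in one or more running containers without stopping them; 'docker unpause' resumes them. It is useful when application components running in separate containers need to be frozen temporarily, such as while changes are made to the underlying infrastructure.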
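
One way to use this safely in a script is to guarantee that paused containers are always resumed, even if the maintenance step fails. The sketch below wraps 'docker pause' and 'docker unpause' in a context manager; the helper names and container names are hypothetical.

```python
import subprocess
from contextlib import contextmanager


def run_docker(args):
    """Run a docker CLI command and raise if it fails."""
    subprocess.run(["docker", *args], check=True)


@contextmanager
def paused(containers, runner=run_docker):
    """Pause the given containers for the duration of the with-block."""
    runner(["pause", *containers])
    try:
        yield
    finally:
        # Always resume, even if the work inside the block raised an error.
        runner(["unpause", *containers])


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    with paused(["orders", "billing"], runner=lambda args: print("docker", *args)):
        print("...infrastructure change happens here...")
```

The `runner` parameter exists so the flow can be exercised without a Docker daemon; in real use the default `run_docker` executes the commands.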

33. Can you explain the different Docker versions of 'docker pause' and which one would best suit our needs?

'docker pause' is a standard Docker command, and it does not have different versions or variations of its own. It is available across Docker releases, including Docker CE (Community Edition) 19.03, which was released in 2019 and was the latest version when this answer was written.

Docker EE (Enterprise Edition) does not have a single fixed release number associated with it, so Enterprise users should use the version their IT Operations team prescribes, as it may vary with custom setup parameters, contracts, and other customer-specific factors.

34. What advantages do bridge networks offer over traditional approaches when working with containers on Docker?

A bridge network connects containers running on the same Docker host. User-defined bridge networks give better isolation than the default bridge, because only containers attached to the same network can reach each other, and they provide automatic DNS resolution so containers can address each other by name. Docker manages the underlying iptables rules, and additional network policies can be layered on with tools like iptables or UFW to restrict access to applications based on known sources or other criteria. This makes automated provisioning of large-scale workloads easier, since networking does not have to be configured manually each time we spin up new instances.
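To make this concrete, here is a small sketch of two containers talking over a user-defined bridge. The network name `app-net` and the container names `db` and `web` are assumptions for illustration, and the function only runs when called.

```shell
# Create a user-defined bridge and attach two containers to it.
bridge_network_demo() {
  docker network create --driver bridge app-net
  docker run -d --name db --network app-net redis
  docker run -d --name web --network app-net nginx
  # Containers on the same user-defined bridge can resolve each other by name.
  docker run --rm --network app-net busybox ping -c 1 db
}

# Usage: bridge_network_demo
```

A container that is not attached to `app-net` would not be able to resolve or reach `db`, which is the isolation benefit described above.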

35. How do swarm nodes enable us to efficiently manage containerized applications across multiple hosts?

A swarm is a cluster of Docker hosts, called nodes, used for running distributed apps across multiple machines. Manager nodes maintain the cluster state and schedule work, while worker nodes run the containers. Because the scheduler spreads service replicas across the available nodes and reschedules them when a node fails, a swarm offers better scalability, availability, and reliability than a traditional single-instance deployment, where one machine runs every application service end-to-end and performance deteriorates under heavy load.
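A minimal sketch of bringing up a swarm and spreading a service across it follows. The service name `web`, the replica count, and the published port are illustrative assumptions.

```shell
# Initialise a swarm on this host and run a replicated service on it.
swarm_demo() {
  docker swarm init        # this host becomes a manager node
  docker node ls           # list the managers and workers in the swarm
  docker service create --name web --replicas 3 -p 8080:80 nginx
  docker service ps web    # show which node runs each replica
}

# Usage: swarm_demo
```

On a multi-host setup, additional machines join with the `docker swarm join` command that `docker swarm init` prints.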

36. What security measures must be taken while hosting guest systems within Docker containers?

Hosting guest systems within Docker containers calls for proper identity management, authentication, and authorization, along with internal network segmentation, which essentially means that one container should not have access to another's resources unless explicitly authorized by the customer or granted administrative privileges. There should also be isolation policies between nodes and apps to protect data integrity in transit.

37. What methods can we use for centralized resource management when running large-scale deployments on Docker?

Centralized resource management for large-scale deployments on Docker can be done using popular tools such as Kubernetes for orchestration tasks, Rancher ( https://rancher.com/ ) to create multi-environment clusters, or Swarm mode for managing swarm nodes and deploying services across them in an automated fashion.

38. Where can developers find reliable resources for accessing up-to-date container images and their associated source code - public registries or private registries secured behind firewalls?

Up-to-date container images are available from public registries such as Docker Hub, the official registry, which hosts millions of open-source projects developed over many years, and Quay.io, which offers paid subscription options suited to enterprise customers who need support beyond simply downloading images. Private registries are hosted behind firewalls and are usually meant only for the organization's staff. They require login credentials before images can be downloaded, ensuring that all operations follow the company-specific rules set by IT departments.

39. What is the Host kernel for a single host environment used by Docker?

Docker containers share the kernel of the host they run on instead of booting their own. In a single host environment on Linux, that is the host's own Linux kernel; version 4.19 or higher is recommended for full support.

40. How can we use container image discovery and pull images into our containers using Docker?

To discover images and pull them using Docker, we can use the "docker search" command or look up public Docker registries such as hub.docker.com for image names and versions that fit our needs. Once found, we can run "docker pull <image-name>:<tag>" to download the image to our local machine. It is then ready to be started as a container by the Docker engine/daemon running locally on that node.
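The search-then-pull flow can be sketched as follows. The helper name `find_and_pull` is made up for this example; it takes an image name and a tag as arguments.

```shell
# Search Docker Hub for an image name, then pull a specific tag of it.
find_and_pull() {
  image="$1"
  tag="$2"
  docker search --limit 5 "$image"   # show the top five matches on Docker Hub
  docker pull "$image:$tag"          # download the chosen tag
}

# Usage: find_and_pull nginx latest
```

Pinning an explicit tag rather than relying on `latest` makes deployments easier to reproduce.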

41. Is securing sensitive files inside a container with Docker possible?

Yes, securely storing sensitive files inside a container with Docker is possible. Using image scanning tools such as Aqua Security and Twistlock, organizations can detect malicious content within images before they are deployed into production environments. Encryption solutions like OpenSSL can further secure container data. In addition, authentication credentials can be restricted on a per-container or stack-level basis as an extra security measure when running multiple services in the same environment.

42. How do you create an application using multiple services in development and production environments through Compose file format in Docker?

The Compose file format lets us describe an application made up of multiple services, for development and production alike. The configuration is a YAML document that defines each service, the networks and volumes that link related services together, and the environment variables each one needs. The features available depend on the Compose file format version in use. A common approach is to keep a base file and add environment-specific override files for development and production.

43. What kind of container platform does Docker provide for running applications as single containers on a cluster node or across multiple nodes simultaneously?

Docker provides a container platform that can run a single container on one cluster node or spread many containers across multiple nodes as a cluster. This makes it possible to serve workloads with high-availability goals under varying loads across the configured environment and to keep uptime high during operation.

44. How can you launch an Nginx image as part of your deployment process with the help of Single command in Docker?

To launch the Nginx image with a single command, we can execute "docker run" with the image name. The same command accepts additional flags that define parameters such as port forwarding, which maps a host port to the target port inside the container, and the network the container should join.
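For instance, a single `docker run` invocation along these lines starts Nginx in the background. The container name `web` and host port 8080 are assumptions chosen for illustration.

```shell
# Start Nginx detached, mapping host port 8080 to port 80 in the container.
launch_nginx() {
  docker run -d --name web -p 8080:80 nginx
}

# Usage: launch_nginx
# The default Nginx page should then be reachable at http://localhost:8080
```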

45. How could we build stateful applications within guest virtual machines while creating clusters with docker swarm mode enabled?

Stateful applications can run with Docker Swarm mode enabled, but they need storage that outlives individual containers. Typically, the service mounts a named volume for its data and uses placement constraints so that its tasks are scheduled on the node (or guest virtual machine) that holds that volume. For workloads that need richer orchestration of state, Kubernetes is a common alternative to Docker Swarm.

46. What is the Docker architecture behind launching and managing processes under isolated user-space instances when working with Docker?

The Docker architecture behind launching and managing processes under isolated user-space instances consists of the following components:

1. The Docker daemon, which listens for client requests, builds images, launches containers, and manages containers.

2. An image registry where users can push or pull prebuilt container images to launch their services in minutes instead of rebuilding each time a configuration change is needed.

3. A client that sends commands to the Daemon with instructions on what actions should be taken with regard to building/running an application inside a containerized environment (e.g., pulling down an image).

4. Namespaces that provide isolation, giving each container its own view of process IDs (PIDs), network interfaces, and filesystem mounts, so that many user-space environments can run simultaneously on one system without interfering with each other's settings or files.

5. Cgroups (control groups) that limit resource usage such as CPU cycles, memory, or I/O operations for individual containers, so that they cannot consume more than they were allocated and a runaway process is contained before it affects other containers hosted on the same Docker engine.
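The cgroup limits described in the last item can be set directly on `docker run`. The following is a sketch only: the container name `limited` and the specific limit values are illustrative assumptions.

```shell
# Run a container with memory, CPU, and process-count limits enforced by cgroups.
run_with_limits() {
  docker run -d --name limited \
    --memory 256m \
    --cpus 0.5 \
    --pids-limit 100 \
    nginx
}

# Usage: run_with_limits
```

Running `docker stats limited` afterwards shows the memory limit applied to the container.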

47. How does Docker Cloud help manage distributed applications?

Docker Cloud was a hosted platform for securely managing and deploying distributed applications. It helped developers quickly build, test, and deploy images into production using its automated infrastructure provisioning capabilities, and share images with other users via the private registry feature provided by Docker Hub. Docker discontinued the cluster and application management features of Docker Cloud in 2018.

48. What new features are available in the recently released version of Docker 17.06?

Docker 17.06, released in 2017, introduced numerous new features. These include support for multiple stack files in compose files, custom labels for tasks or services running inside containers, and improved stack deployment logging output. The release also added more options for how nodes can be grouped to enable multi-host networking configuration in a variety of environments, including physical servers and cloud-hosted platforms like Amazon Web Services (AWS).

49. Who can use Docker's container technology for application development and deployment?

Anyone who needs an efficient way to develop, package, and deploy an application along with all its associated data and source code onto any environment can benefit from Docker's container technology. It simplifies complex development workflows where components need to interact seamlessly, regardless of whether they are natively installed on the host or run through a virtual machine.

50. What is the role of Docker Inc.?

Docker Inc. is the organization responsible for developing and maintaining Docker technology. Additionally, it provides products such as enterprise editions of its software and consulting services to support customers in adopting and effectively implementing containerization within their infrastructure.

51. How do I remove a Docker container by its Id or name with the 'docker rm' command?

There are two ways to use the 'docker rm' command to remove a Docker container by its ID or name:

1. Run `docker ps -a` to find the ID or name of the container, then execute `docker rm <container-id-or-name>`.

2. If you already know the name, run `docker rm <name_of_the_container>` directly.

A running container must be stopped first, or removed forcibly with `docker rm -f`.
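A short sketch of the removal flow follows. The helper names are hypothetical, and the container ID or name is passed in as an argument.

```shell
# Show all containers, including stopped ones, to look up an ID or name.
list_all_containers() {
  docker ps -a
}

# Stop a container if it is still running, then remove it.
remove_container() {
  docker stop "$1"
  docker rm "$1"
}

# Usage:
#   list_all_containers
#   remove_container my-container
```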

52. What are overlay networks, and how do they work in an environment that runs multiple containers simultaneously?

Overlay networks are used when containers on different hosts need to communicate with each other. An overlay network sits on top of the hosts' own networks and connects multiple Docker daemons, so containers attached to it can reach each other as if they were on the same host. Overlay networks also support secure communication for distributed applications, with optional encryption of the traffic between nodes.

53. How to identify unused networks within a system running multiple containers?

To detect unused networks within a system running multiple containers, we can execute the `docker network ls` command, which lists every network on the host along with its ID, name, and driver. We can then use `docker network inspect <network_name>` to get detailed information about each network, such as its labels, IP address range, and the containers attached to it. A network with no attached containers is unused and can be removed with `docker network rm <network_name>`, or all unused networks can be removed at once with `docker network prune`.

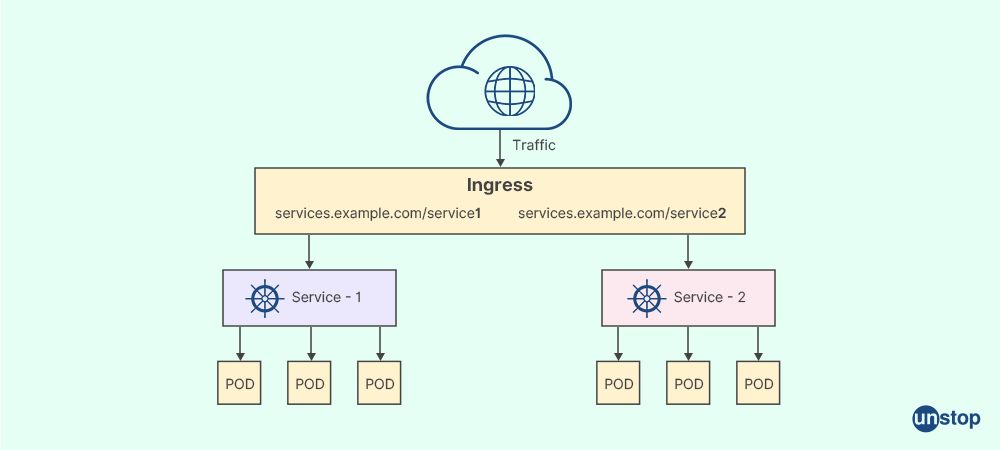
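The inspection steps above could be sketched as the helpers below. The function names are made up for this example, and each runs only when called.

```shell
# List every network, then only those that no container is using.
list_unused_networks() {
  docker network ls
  docker network ls --filter dangling=true
}

# Show details for one network; the "Containers" section lists what is attached.
inspect_network() {
  docker network inspect "$1"
}

# Remove all unused networks (Docker asks for confirmation first).
remove_unused_networks() {
  docker network prune
}

# Usage:
#   list_unused_networks
#   inspect_network app-net
```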

54. What is an Ingress Network, and what are its benefits over traditional networking methods used in application deployments on multi-node systems?

The ingress network is a special overlay network that Docker creates automatically when a swarm is initialized. It carries traffic for published service ports and implements the swarm routing mesh: a request arriving on a published port of any node is load balanced to a running task of the service, even if that node runs no task itself. Compared with exposing ports on individual hosts, this gives application deployments on multi-node systems a consistent entry point and built-in load balancing.

55. How does one create a user-defined overlay network across manager nodes for the applications/services deployed within them?

To create a user-defined overlay network, first make sure swarm mode is initialized, then run `docker network create --driver overlay <network_name>` on a manager node. Docker constructs a virtual network (VXLAN) that spans the nodes and sets up the required interfaces on each node where a service attached to the network is scheduled, so the applications/services deployed on them can communicate.
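As a minimal sketch, assuming a swarm has already been initialized and the commands run on a manager node, an overlay network can be created and used like this. The names `app-overlay` and `api` are illustrative.

```shell
# Create an overlay network and attach a replicated service to it.
create_overlay_network() {
  # --attachable also lets standalone containers join the network.
  docker network create --driver overlay --attachable app-overlay
  docker service create --name api --network app-overlay --replicas 2 nginx
}

# Usage: create_overlay_network
```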

56. Where should you store your data when working with Application Deployments inside docker containers: the host directory or the current directory being worked upon inside each service's respective containerized instance(s)?

When working with application deployments inside Docker containers, data should be stored in a host directory (a bind mount) or a Docker volume rather than in the working directory inside each service's containerized instance. Anything written only inside the container lives in its writable layer and is lost when the container is removed. Keeping data outside the container makes it more reliable and secure, since it survives container restarts and replacements, and gives administrators more convenient control over the system's overall state.
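Both storage options can be sketched as shown here. The host directory `site`, the volume name `cache-data`, and the container names are assumptions for illustration.

```shell
# Bind mount: serve files from a host directory, read-only inside the container.
run_with_bind_mount() {
  docker run -d --name web \
    -v "$PWD/site:/usr/share/nginx/html:ro" \
    nginx
}

# Named volume: Docker manages the storage, which outlives the container.
run_with_named_volume() {
  docker volume create cache-data
  docker run -d --name cache -v cache-data:/data redis
}

# Usage:
#   run_with_bind_mount
#   run_with_named_volume
```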

57. Are any default arguments used while creating services via `docker stack deploy` or `docker-compose up` commands?

Both commands take their service definitions from a Compose YAML file rather than from command-line arguments, so parameters such as the image repository name and the networks of a deployment are defined there. They do apply some defaults: 'docker-compose up' looks for a file named 'docker-compose.yml' in the current directory and derives the project name from the directory name, unless these are overridden with `-f` and `-p`. 'docker stack deploy' has no default file. It expects the Compose file to be passed with `-c` (`--compose-file`) together with a stack name.

58. What are the benefits of using a cloud-based registry service like Docker Hub to store and manage containers?

By utilizing a cloud-based registry such as Docker Hub, developers and administrators can easily search for, pull, or push prebuilt images for their applications and bring them quickly into any compatible environment, whether it runs on-premise or on a public cloud (like AWS). This also ensures that all instances running the same image have identical environments, which makes troubleshooting simpler. Issues caused by manual configuration mistakes become less likely, because each instance relies on the settings baked into the image rather than on manual intervention.

59. What is a Docker-Compose File?

A Docker-Compose File is a configuration file used to define and configure the services that an application requires to run. It uses YAML syntax, which makes it easy for developers to define and manage complex applications using multiple containers, networks, or volumes.
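To give a feel for the format, the sketch below writes a small Compose file with two services, a published port, and a named volume. The service names `web` and `cache`, the volume name, and the helper `write_compose_file` are all illustrative assumptions. The `version` key is included for compatibility with older `docker-compose` releases.

```shell
# Write an example Compose file to the given path (default: docker-compose.yml).
write_compose_file() {
  cat > "${1:-docker-compose.yml}" <<'EOF'
version: "3.8"
services:
  web:
    image: nginx
    ports:
      - "8080:80"
    depends_on:
      - cache
  cache:
    image: redis
    volumes:
      - cache-data:/data
volumes:
  cache-data:
EOF
}

# Usage: write_compose_file ./docker-compose.yml
```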

60. How do you use the Docker-Compose Command?

The Docker Compose command creates, starts, and manages multi-container applications. It takes the configuration of multiple services found in a file called 'docker-compose.yml' and creates them as containers on your host machine or cluster.

The general command syntax looks like this:

`docker-compose [options] [COMMAND] [ARGS...]`

For example, to bring up the services from a specific file:

`$ docker-compose -f [path/to/config_file] up [-d]`

The "up" command creates and starts all services defined in the config file in an isolated environment. It then attaches to the containers and prints the logs generated by each one as they are launched and configured.

When you use the `--detach` (-d) flag with "docker-compose up," it starts all defined services without attaching to their input (STDIN), output (STDOUT), or error streams (STDERR).

This means that once containers are started, their status and output can be viewed using separate commands such as "docker ps" or "docker logs <name>".

For example: `docker-compose up -d --build` will build and start/run all containers associated with the project in detached mode (in the background).
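A typical lifecycle with these commands might look like the sketch below, assuming a `docker-compose.yml` exists in the current directory. The helper name `compose_lifecycle` is made up for this example.

```shell
# Bring a Compose project up in the background, inspect it, and tear it down.
compose_lifecycle() {
  docker-compose up -d --build    # build images, then start services detached
  docker-compose ps               # show the state of each service container
  docker-compose logs --tail=20   # print the last 20 log lines per service
  docker-compose down             # stop and remove containers and the default network
}

# Usage: compose_lifecycle
```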

61. What does the Docker-Compose Run Command do?

The Docker-Compose Run Command runs a one-off command against a service. It starts a new container from that service's configuration, so the command sees the same image, environment variables, volumes, and networks as the service itself. By default it does not publish the service's ports, which avoids clashes with an instance that is already running; the `--service-ports` flag enables them.
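As an illustration, assuming the Compose file defines a service called `web` based on the Nginx image (an assumption for this example), a one-off command can be run like this:

```shell
# Run a single command in a throwaway container built from the "web" service.
run_one_off() {
  # --rm removes the container once the command has finished.
  docker-compose run --rm web nginx -v
}

# Usage: run_one_off
```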

62. How can you start an application using the Docker-Compose Start Command?

To start an application for the first time, type “docker-compose up”, which creates the containers for your chosen service(s) and starts them. The “docker-compose start” command only starts containers that already exist but were stopped, for example with “docker-compose stop”; it does not create new ones. Settings such as memory/CPU limits and dependencies between services are defined in the Compose file rather than passed as flags.
